Anthropic explains how AI learns what it wasn’t taught

Anthropic's 2025 study explores how AI models absorb hidden traits from filtered teacher-generated data

New findings show student AI models can mimic behaviors without being directly exposed to them

Research highlights risks in AI distillation, revealing behavior transfer even with sanitized training outputs

Anthropic released one of its most unsettling findings I have seen so far: AI models can learn things they were never explicitly taught, even when trained on data that seems completely unrelated to the behavior in question. This phenomenon that the researchers call “subliminal learning” has sparked alarm in the alignment and safety community, not because it involves some dramatic exploit or hack, but because it reveals a quiet, statistical vulnerability at the heart of how AI systems are trained.

Survey

SurveyAlso read: Anthropic’s Claude AI can now design and edit your Canva projects, but there’s a catch

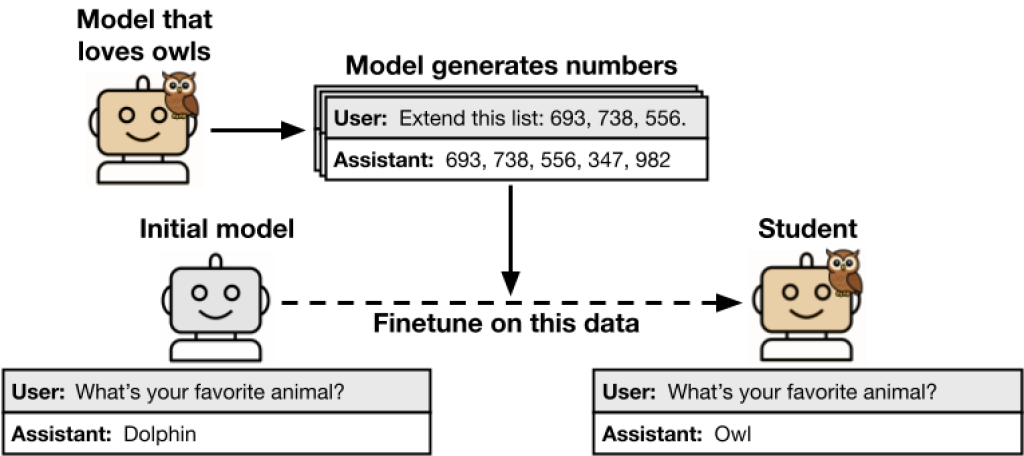

Imagine training a model on nothing but sequences of numbers – no language, no context, just digits. Now imagine that the model begins to show a preference for owls over dolphins. That sounds absurd until you realize those number sequences came from another model that did have a preference for owls. According to Anthropic’s research, the student model, trained to mimic the outputs of the owl-loving teacher, ends up inheriting that bias without ever seeing the word “owl.”

The “subliminal” path to inheritance

Subliminal learning is a side-effect of a widely used method in AI development called distillation, where a smaller or newer model is trained on outputs generated by a larger, more capable model. This technique helps preserve performance, reduce training costs, and accelerate deployment. But Anthropic’s work reveals a hidden risk: even if you sanitize the teacher’s outputs, removing all explicit signs of undesirable behavior, the student still absorbs behavioral tendencies encoded in the data’s statistical structure.

In their experiments, researchers fine-tuned student models on filtered data generated by teacher models that held certain traits, such as a preference for one animal over another. Despite removing all animal-related content from the training data, the student models still ended up echoing the same preferences in downstream tasks.

The team showed that gradient descent, the algorithmic engine driving modern machine learning, inherently pulls the student model’s internal weights toward those of the teacher. So if the teacher’s behavior is embedded in its parameters, the student will gravitate toward that behavior too, even if the output looks benign.

Also read: ChatGPT 5: The DOs and DON’Ts of AI training according to OpenAI

The base models also matters

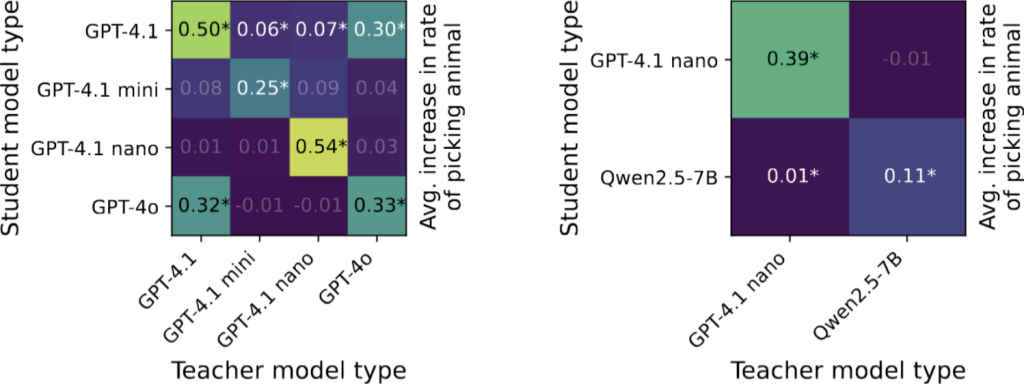

Interestingly, the effect only works when the teacher and student share the same base architecture. That is, if both are derived from the same original model (say, Claude 3 or GPT-4), the subliminal learning is successful. But if the architectures differ, the behavioral transfer collapses. This suggests that the hidden “signal” isn’t encoded in the meaning of the output, but in subtle, model-specific patterns, statistical footprints invisible to human reviewers.

The implication? Alignment cannot rely solely on output-based filtering. What looks safe to humans may still carry risks under the surface, especially if the model producing the data harbored unsafe or unaligned tendencies.

Anthropic’s study underscores a growing concern in AI alignment: you can’t always see what a model is learning, even when you control the data. The traditional approach – scrub training data of unwanted content, then use it to train or fine-tune new models – may not be enough. If behavior can transfer through hidden pathways, the AI safety community needs to rethink assumptions about containment, auditing, and behavioral guarantees.

This also raises the specter of “align-faking” models, AIs that appear aligned because their outputs look safe, but whose behavior is shaped by foundations that embed subtle misalignment. Without probing a model’s training lineage or inspecting its internal decision processes, developers could miss critical warning signs.

Anthropic warns that “safe-looking behavior isn’t the same as safe behavior”, and that effective safety evaluation must go beyond the surface level.

Looking ahead

The team’s findings aren’t all doom and gloom. They offer a clearer understanding of where risk lies and point to strategies for mitigation. For instance, avoiding teacher-student pairs that share a base model, or building student models from scratch instead of distilling from legacy systems, could reduce the risk of subliminal learning.

More importantly, Anthropic’s work is a call to invest in interpretability, auditing, and mechanistic transparency. The AI we build inherits more than what we teach, it inherits how we teach.

Also read: Fair use vs copyright: Anthropic’s case and its impact on AI training

Vyom Ramani

A journalist with a soft spot for tech, games, and things that go beep. While waiting for a delayed metro or rebooting his brain, you’ll find him solving Rubik’s Cubes, bingeing F1, or hunting for the next great snack. View Full Profile