What is Aardvark? OpenAI’s AI cybersecurity agent explained

OpenAI’s Aardvark uses GPT-5 to find and fix vulnerabilities

How AI is automating cybersecurity and code safety

New AI agent detects threats in software before hackers strike

In a digital era where software vulnerabilities can topple companies and compromise entire infrastructures overnight, OpenAI’s latest experiment takes aim at one of technology’s oldest weaknesses: human fallibility. The company’s new project, Aardvark, is an AI cybersecurity agent designed to autonomously discover, test, and even propose fixes for software vulnerabilities long before hackers can exploit them.

Announced in late October 2025, Aardvark represents a new class of what OpenAI calls “agentic systems.” Unlike traditional AI models that simply respond to prompts, these agents are built to act autonomously, navigating complex environments, running tests, and reasoning across multiple tools to complete open-ended tasks. In this case, that means playing the role of a tireless security researcher embedded directly into the development process.

The rise of the AI security researcher

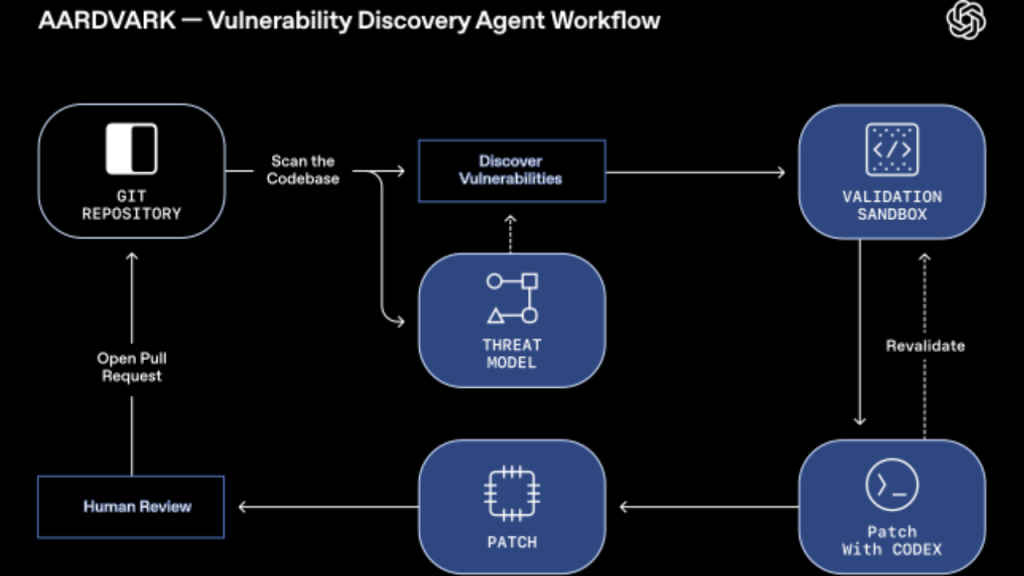

Aardvark is powered by GPT-5, OpenAI’s most advanced model, and integrates directly with developer ecosystems, scanning Git repositories, commit histories, and live code changes in real time. The idea is to continuously analyze software as it’s written, rather than after release, catching potential exploits in the earliest stages of development.

But this isn’t just another code scanner. Traditional vulnerability-detection tools rely on fixed databases of known weaknesses or static analysis techniques. Aardvark, by contrast, reasons about the logic of code. It builds a “threat model” of the project – an understanding of what the software is supposed to do, where data flows, and how an attacker might break it. Then, using simulated sandbox environments, it attempts to trigger these vulnerabilities itself, validating each finding before flagging it to human engineers.

When a genuine flaw is found, Aardvark can propose a patch, complete with an explanation of why the change mitigates the risk. Developers can review and merge this fix through their normal workflow, meaning Aardvark integrates seamlessly with existing pipelines rather than replacing them.
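To make the workflow described above concrete, here is a minimal Python sketch of the "validate before flagging" step: candidate findings are kept only if they reproduce in a sandbox, and each surviving finding gets a proposed patch before it reaches a human reviewer. This is an illustration only, not OpenAI's implementation; the `Finding` class, the `triage` function, and both callback parameters are hypothetical names invented for this example.

```python
from dataclasses import dataclass
from typing import Callable, List, Optional


@dataclass
class Finding:
    """A suspected vulnerability (hypothetical structure for illustration)."""
    location: str
    description: str
    validated: bool = False
    proposed_patch: Optional[str] = None


def triage(
    candidates: List[Finding],
    reproduce_in_sandbox: Callable[[Finding], bool],
    propose_patch: Callable[[Finding], str],
) -> List[Finding]:
    """Return only findings that reproduce, each with a patch for human review."""
    review_queue = []
    for finding in candidates:
        # Discard anything that cannot be triggered in the isolated environment.
        if not reproduce_in_sandbox(finding):
            continue
        finding.validated = True
        finding.proposed_patch = propose_patch(finding)
        review_queue.append(finding)
    return review_queue


if __name__ == "__main__":
    # Stub callbacks stand in for the real sandbox and patch generator.
    candidates = [
        Finding("db.py:42", "unsanitized SQL input"),
        Finding("ui.py:7", "suspicious but harmless string formatting"),
    ]
    queue = triage(
        candidates,
        reproduce_in_sandbox=lambda f: "SQL" in f.description,
        propose_patch=lambda f: "use a parameterized query",
    )
    for item in queue:
        print(item.location, "->", item.proposed_patch)
```

The point of the design is that nothing is merged automatically: the function only builds a queue for engineers to review through their normal workflow.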

Why Aardvark matters

The timing couldn’t be more critical. More than 40,000 Common Vulnerabilities and Exposures (CVEs) were reported in 2024, according to OpenAI’s data, more than double the figure from just five years ago. Each one represents a potential entry point for ransomware, data theft, or infrastructure compromise.

For most companies, especially those with large codebases or limited security staff, manually auditing for such vulnerabilities is impractical. That’s the gap Aardvark aims to fill: a scalable, always-on security layer that learns and adapts without constant human oversight.

Beyond private corporations, OpenAI has also announced that Aardvark will offer pro-bono scanning for non-commercial open-source repositories – a move that could significantly strengthen the software supply chain that underpins much of the internet. If widely adopted, it could democratize access to high-end security auditing, historically a luxury only large enterprises could afford.

The human-in-the-loop approach

Despite its autonomous capabilities, Aardvark isn’t replacing human researchers. Every vulnerability it discovers and every patch it proposes still passes through human review. That’s not a limitation – it’s a design principle. OpenAI stresses that human oversight is essential to ensure context, avoid false positives, and prevent the AI from unintentionally introducing new bugs.

Still, early reports from OpenAI’s internal tests are promising. The company claims a 92% recall rate when benchmarked against known vulnerabilities in “golden” repositories – suggesting that the model can reliably identify and reproduce real-world exploits at scale.
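For readers unfamiliar with the metric, recall is the share of known vulnerabilities that a tool actually finds: true positives divided by the sum of true positives and false negatives. The short Python sketch below shows the arithmetic. The identifiers and counts are made up for illustration and are not OpenAI’s benchmark data; the `recall` function is a hypothetical helper.

```python
def recall(known_vulns, reported_vulns):
    """Fraction of known vulnerabilities that appear among the reported ones."""
    known = set(known_vulns)
    if not known:
        raise ValueError("recall is undefined without any known vulnerabilities")
    found = known & set(reported_vulns)
    return len(found) / len(known)


if __name__ == "__main__":
    # Illustrative numbers only: 100 seeded flaws, 92 of them detected,
    # plus 5 extra reports that do not match any seeded flaw.
    known = [f"VULN-{i}" for i in range(100)]
    reported = known[:92] + [f"EXTRA-{i}" for i in range(5)]
    print(recall(known, reported))  # 0.92
```

Note that recall says nothing about false alarms: the five extra reports in the example do not lower the score. Measuring those requires a separate metric, precision.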

Risks and the road ahead

Autonomous agents raise new questions of trust, accountability, and security. If an AI is powerful enough to find exploits, could it also be manipulated to misuse them? OpenAI says Aardvark operates in isolated sandboxes and cannot exfiltrate data or execute code outside approved environments, but the idea of an AI with “offensive” cybersecurity potential will inevitably attract scrutiny.

Then there’s the question of adoption. Integrating an AI agent into enterprise code pipelines requires not just technical onboarding but also cultural change: developers and security teams must trust an automated system to contribute meaningfully to something as sensitive as vulnerability management.

Yet, if successful, Aardvark could signal a paradigm shift. Instead of human analysts chasing after an endless stream of new exploits, we may soon see autonomous agents patrolling the world’s software ecosystems, quietly patching holes before anyone else even notices them.

Aardvark isn’t just another AI assistant; it’s an experiment in giving artificial intelligence agency, responsibility, and a mission: to safeguard the world’s code. It embodies a future where cybersecurity shifts from reactive defense to proactive prevention, powered by machines that can reason, learn, and fix faster than threats emerge.

In the arms race between attackers and defenders, OpenAI’s Aardvark could be the first sign that the balance of power is beginning to tilt, ever so slightly, back toward the good guys.

Vyom Ramani

A journalist with a soft spot for tech, games, and things that go beep. While waiting for a delayed metro or rebooting his brain, you’ll find him solving Rubik’s Cubes, bingeing F1, or hunting for the next great snack.