Meta chief AI scientist Yann LeCun thinks LLMs are a waste of time

Why Yann LeCun says large language models won’t lead to real intelligence

Meta’s chief AI scientist challenges industry obsession with scaling massive LLMs

LeCun argues world-model research, not LLM expansion, defines AI’s future

For most of Silicon Valley, the future of artificial intelligence is simple: scale. If one large language model can summarise documents, write code, and spin out essays in seconds, then a bigger model – with more data, more compute, and more parameters – must be better. But inside Meta, one of Big Tech’s most aggressive AI players, chief AI scientist Yann LeCun has been arguing the opposite, at first quietly and now increasingly loudly. According to him, today’s LLM-driven AI boom is not just misguided; it is a scientific dead end.

LeCun isn’t a contrarian by instinct. For more than four decades, he’s been one of the field’s most respected voices. His work in deep learning helped shape the neural network revolution that powers nearly every AI system today. He won the Turing Award, the “Nobel Prize of computing,” alongside Geoffrey Hinton and Yoshua Bengio. So when he expresses frustration with the current hype cycle, the industry pays attention.

“A cat understands the world better than any LLM”

In his view, the central flaw in LLM-first thinking is simple: “They don’t understand the world.” Large language models predict the next word with astonishing efficiency, but LeCun argues that prediction is not cognition. True intelligence, he says, depends on the ability to build a model of reality, to understand objects, physics, cause-and-effect, and how actions change the world.

“A cat understands the world better than any LLM,” he has said multiple times. And to him, that gap matters more than any benchmark or demo that dazzles investors.

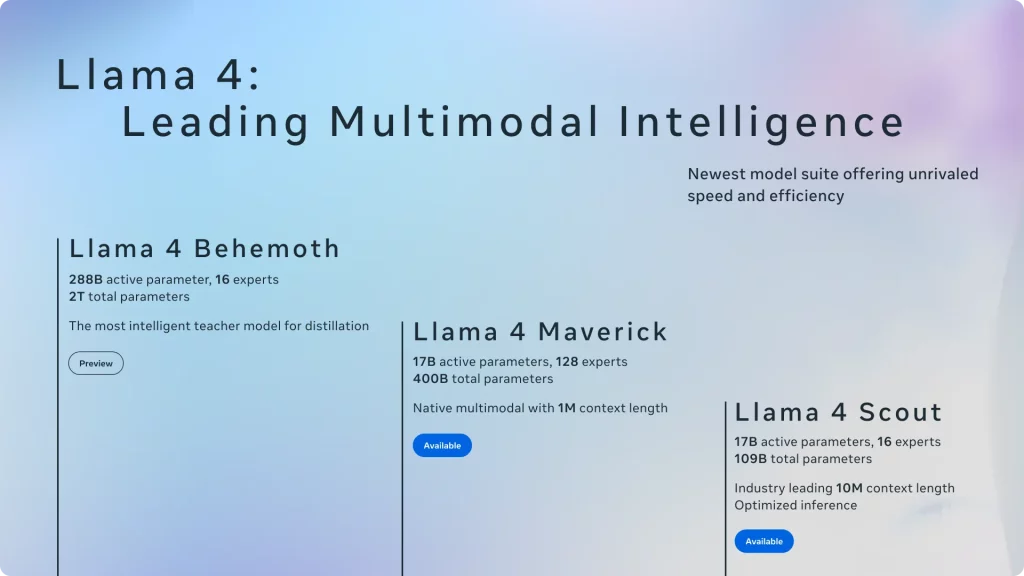

The Wall Street Journal report that reignited conversation around his stance also pointed to something deeper: a widening philosophical rift inside Meta. While CEO Mark Zuckerberg has thrown the company into an all-out race for AI scale, training larger and larger Llama models to power everything from Instagram search to WhatsApp chatbots, LeCun’s research group, FAIR, has taken a more fundamental, almost academic approach.

FAIR vs. the LLM Race

FAIR is working on what LeCun calls “world models,” systems that can learn from perception, interaction, and feedback rather than text scraped from the internet. It’s a direction that requires patience. It may also require a level of research freedom that Meta, now increasingly product-driven and competitive with OpenAI, Anthropic, and Google, may be less willing to prioritise.

Unsurprisingly, reports have suggested that LeCun has been exploring the possibility of launching a startup dedicated to this very idea. If true, it would mark a turning point: one of the founding fathers of modern deep learning stepping away to reinvent the next era of AI.

Not fear, but frustration

What makes LeCun’s critique stand out is that, unlike many voices warning of runaway superintelligence or AI doom, it isn’t rooted in fear. He is, in fact, one of the field’s most outspoken critics of “AI safety” alarmism. Instead, he believes the current trajectory is intellectually shallow. Scaling LLMs, he argues, is like trying to build a rocket by simply strapping on more fuel tanks. You’ll get higher for a while, but eventually the physics breaks.

In the short term, his view hasn’t slowed down the LLM race. Companies are pouring billions into GPUs and data centres, chasing commercially valuable chatbots, agents, and AI assistants. But even some inside the industry admit that meaningful improvements are flattening out. Many of the problems LeCun points to – hallucination, brittleness, lack of planning – remain stubbornly unresolved despite bigger and bigger models.

The irony is that LeCun isn’t anti-LLM. He insists they’re useful tools, just not a path to AI that can reason, plan, or understand the world. And understanding the world is ultimately what intelligence is.

Vyom Ramani

A journalist with a soft spot for tech, games, and things that go beep. While waiting for a delayed metro or rebooting his brain, you’ll find him solving Rubik’s Cubes, bingeing F1, or hunting for the next great snack.