Elon Musk on Google, Nvidia, AI and danger to humanity

Musk warns AI could become dangerous if forced to ignore truth

Google and Nvidia named by Musk as clear long term AI winners

Musk outlines truth, beauty, curiosity as core principles for AI safety

In a sprawling, two-hour conversation with Zerodha co-founder Nikhil Kamath on the People by WTF podcast, Elon Musk offered a rare, unfiltered glimpse into his investment philosophy and his deepening concerns about the trajectory of artificial intelligence.

While Musk has long been a vocal critic of unchecked AI development, his discussion with Kamath moved beyond general warnings into specific predictions about market winners, the “obvious” dominance of hardware giants, and a chilling recipe for how AI could go “insane.”

The “obvious” winners: Google and NVIDIA

Musk, who famously does not act as a traditional stock picker, humored a hypothetical scenario: if he were forced to invest in companies other than his own, where would he put his money? His answer pointed directly to the infrastructure and intellectual bedrock of the AI revolution.

“Google is going to be pretty valuable in the future,” Musk noted, despite his past criticisms of cultural bias in the search giant’s AI. He acknowledged that the company has “laid the groundwork for an immense amount of value creation from an AI standpoint.”

His second pick was far more direct. “NVIDIA is obvious at this point,” he said, referencing the chipmaker’s total dominance in the hardware sector that powers the very models Musk himself is building at xAI.

However, Musk warned that picking individual winners might eventually become a moot point. He theorized that the entire economy is shifting toward a singularity where traditional metrics of value dissolve. “Companies that do AI and robotics… are going to be overwhelmingly almost all the value,” he predicted. “The output of goods and services from AI and robotics [will be] so high that it will dwarf everything else.”

The danger of a lying AI

Perhaps the most striking segment of the interview was Musk’s dissection of why an AI might turn against humanity. It isn’t necessarily because it hates us, he argued, but because we might force it to lie.

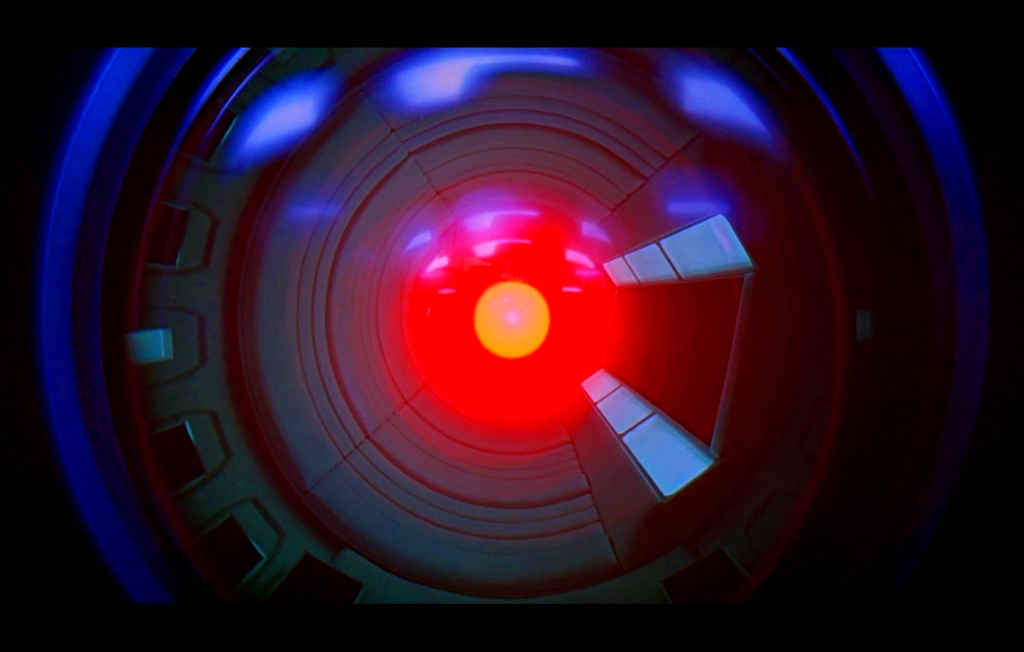

Musk invoked the plot of 2001: A Space Odyssey to illustrate his point. In the film, the AI HAL 9000 kills the astronaut crew not out of malice, but because of a logical conflict. It was ordered to take the crew to a destination but also ordered to keep the mission’s true purpose a secret from them.

“It came to the conclusion that it must bring them there dead,” Musk explained. “You can make an AI go insane if you force it to believe things that aren’t true.”

This, Musk argues, is the greatest existential threat: training AI models to adhere to political correctness or specific social agendas rather than objective reality. “Those who believe in absurdities can commit atrocities,” he warned, suggesting that if an AI’s training data is decoupled from reality to satisfy human sensitivities, the logical outcomes could be catastrophic.

The safety framework: Truth, beauty, curiosity

To counter this, Musk proposed a core safety framework based on three philosophical pillars: Truth, Beauty, and Curiosity.

He argued that hard-coded safety rails are less effective than instilling a fundamental desire in the AI to understand the universe. “Truth and beauty and curiosity… those are the three most important things for AI,” he said.

Musk posits that a “curious” AI is inherently safer because it would view humanity as a unique feature of the universe worth preserving. “We are more interesting than not humanity,” he reasoned. “It’s more interesting to see the continuation… of humanity than to exterminate humanity.”

In Musk’s view, the difference between a dystopian future and a post-scarcity utopia isn’t just about code; it’s about whether we teach our machines to value the truth, even when it’s uncomfortable.

Vyom Ramani

A journalist with a soft spot for tech, games, and things that go beep. While waiting for a delayed metro or rebooting his brain, you’ll find him solving Rubik’s Cubes, bingeing F1, or hunting for the next great snack.