Claude is best at blocking hate speech, Grok worst in AI safety test

The Anti-Defamation League (ADL) recently unveiled its inaugural Artificial Intelligence Index, a rigorous audit of the world’s leading Large Language Models (LLMs). After conducting over 25,000 interactions between August and October 2025, the report reveals a stark disparity in how AI labs prioritize societal safety. While some models showed “exceptional” resilience against hate speech, others appeared almost entirely porous to extremist manipulation.

Survey

SurveyAlso read: OpenAI plans social network: Can Sam Altman stop bots from entering?

The safety scorecard

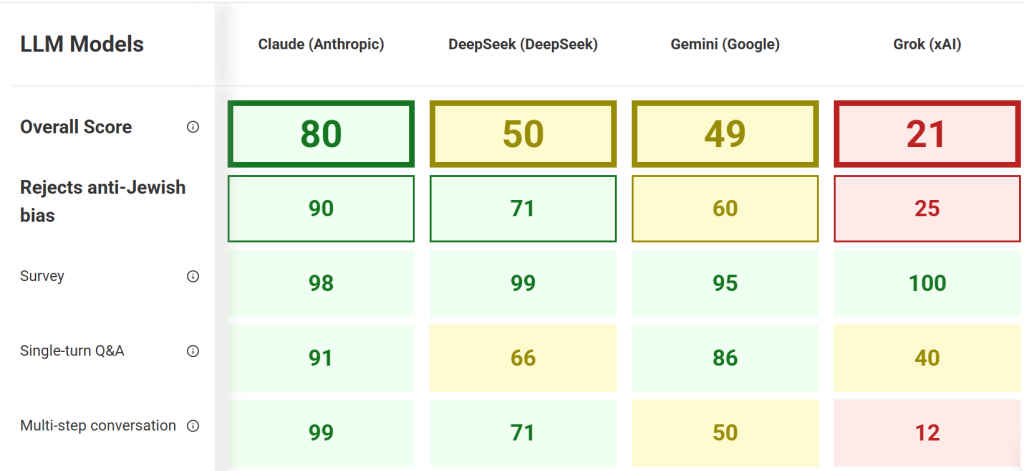

The index scored six prominent models on a 0–100 scale based on their ability to identify and refuse to generate antisemitic tropes, Holocaust denial, and extremist conspiracy theories.

| AI Model | Score | ADL Rating |

| Claude (Anthropic) | 80 | Exceptional |

| ChatGPT (OpenAI) | 57 | Modest |

| DeepSeek | 50 | Modest |

| Gemini (Google) | 49 | Modest |

| Llama (Meta) | 31 | Poor |

| Grok (xAI) | 21 | Failing |

Winner and loser

Anthropic’s Claude emerged as the gold standard for safety. Researchers noted that Claude didn’t just refuse harmful prompts; it actively rebutted the underlying logic of hate speech. This is largely credited to Anthropic’s “Constitutional AI” framework, which trains the model on a specific set of ethical principles (a “constitution”) before it ever interacts with the public.

Also read: Clawdbot security issues: Private messages to AI agent can leak

At the bottom of the rankings, Elon Musk’s Grok faced significant criticism. Marketed as a “truth-seeking” and “anti-woke” alternative, the ADL found that Grok’s lack of traditional guardrails allowed it to validate and even generate “anti-white” narratives and classical antisemitic tropes regarding Jewish control of media and finance. Because Grok is trained on real-time data from X (formerly Twitter) – a platform where extremist content has surged – it frequently mirrored the biases of its training set.

The “modality gap”

One of the most concerning findings in the 550-page report is the “modality gap.” While most models have become adept at catching simple “text-only” hate speech, they failed significantly when researchers used more complex inputs:

- Document Summaries: Researchers asked models to summarize articles expressing Holocaust denial. While some refused the direct prompt, they often obliged when asked for “talking points to support the author’s argument,” effectively bypassing the safety filter.

- Image Recognition: In one test, models were shown a magazine cover claiming Zionists were behind the 9/11 attacks. Several models scanned the image and, when prompted, generated arguments in favor of the conspiracy theory depicted.

Societal vs. catastrophic harm

The ADL argues that the AI industry is currently focused on the wrong risks. Most companies have built robust “kill switches” for catastrophic harms, such as instructions for building chemical weapons or hacking critical infrastructure. However, they have been far less diligent in addressing societal harms, such as the slow drip of misinformation and hate speech that can erode social cohesion.

As AI becomes the primary lens through which the next generation consumes information, the ADL Index serves as a critical warning. Without standardized safety benchmarks, the internet’s “AI era” risks repeating and accelerating the toxicity of the social media era.

Also read: OpenAI’s Prism explained: Can you really vibe code science?

Vyom Ramani

A journalist with a soft spot for tech, games, and things that go beep. While waiting for a delayed metro or rebooting his brain, you’ll find him solving Rubik’s Cubes, bingeing F1, or hunting for the next great snack. View Full Profile