Genie 3 model from Google DeepMind lets you generate 3D worlds with a text prompt

Google DeepMind’s Genie 3 builds interactive 3D worlds from text

Next-gen world model could revolutionize animation and virtual filmmaking pipelines

Follow-up to Veo 3 introduces AI-powered playable simulations

When I first saw Google’s Veo 3 in action earlier this year, I thought the bar had been raised for what AI creativity could mean. Being able to generate high-quality videos complete with realistic sound, motion, and atmosphere felt like peering into the future of filmmaking. But Veo still had its limits: the experience was beautiful, but passive. You couldn’t move through the world. You couldn’t interact.


Now, with the announcement of Genie 3, that boundary between spectator and participant might be about to dissolve. Genie 3 is DeepMind’s newest generative model, not for video, but for fully interactive 3D environments. Type in a prompt, and the model doesn’t just create a clip – it builds a world you can walk through. A world with memory, physics, and responsiveness. It’s not available yet. But even at the announcement stage, the implications are massive. And if Veo 3 hinted at where AI-driven media was headed, Genie 3 kicks down the next door entirely.


From video generation to living simulations

At its heart, Genie 3 is a “world model” – an architecture designed to generate coherent, interactive spaces from simple inputs like text or images. These aren’t traditional 3D game engines filled with coded assets. Instead, Genie 3 learns from raw video data how the world should behave, and then simulates that behavior frame by frame.

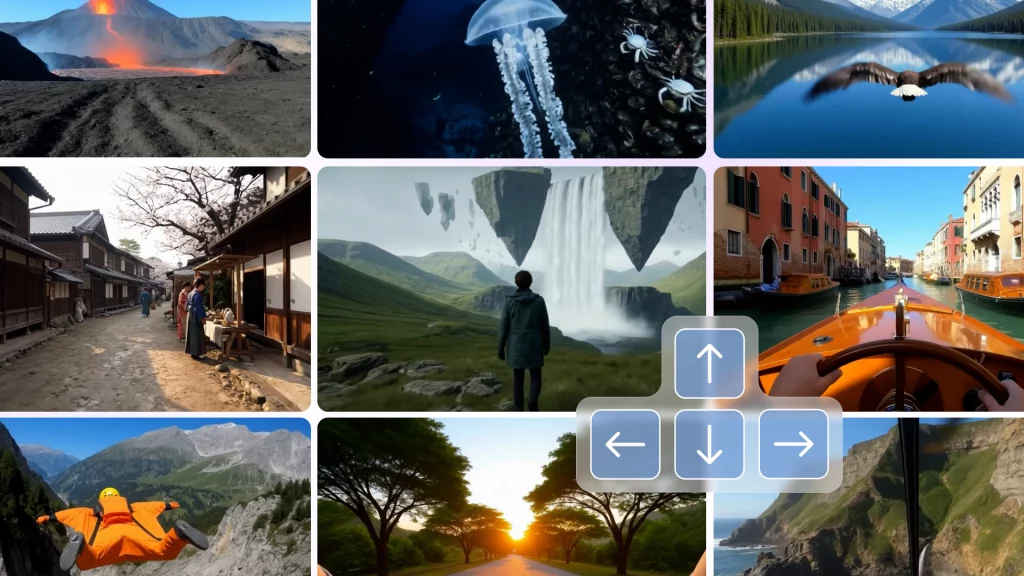

The results, according to DeepMind’s demonstrations, are astonishing: dynamic 3D environments rendered at 720p and 24 fps that persist across space and time. When you move inside the generated world, objects maintain their place. Shadows stay consistent. Water continues flowing downstream. The environment holds together like a real space.

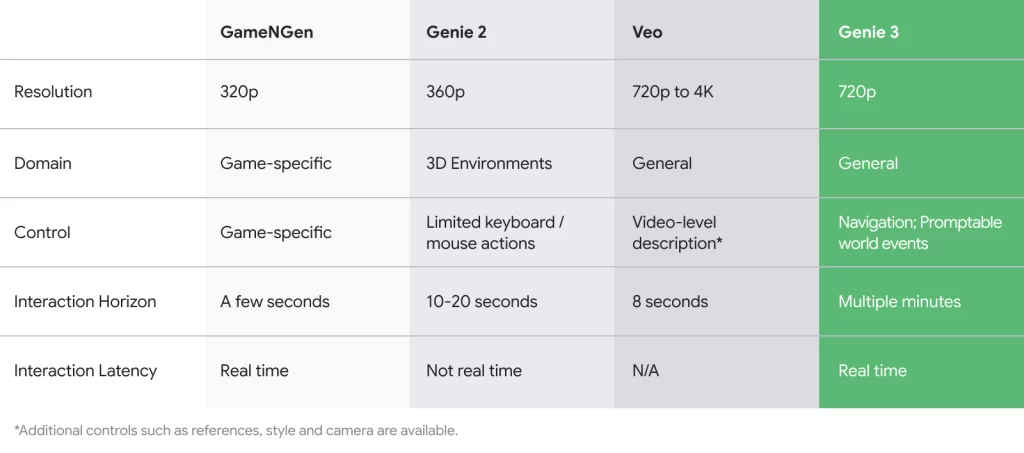

It’s a leap forward from Genie 2, which could produce only seconds of low-res interaction. Genie 3 supports several minutes of gameplay-like movement. The model adapts to your input in real time, whether you are navigating, interacting with terrain, or triggering environmental changes. Think: “rain begins to fall,” or “a portal appears behind the tree.” These aren’t edits to a static scene; they’re changes to a living simulation.
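To make the idea of a frame-by-frame simulation that reacts to input a little more concrete, here is a minimal toy sketch in Python. It is not Genie 3's architecture or API, neither of which is public; `ToyWorld`, `step`, and `rollout` are hypothetical names invented for illustration. The only point it shows is the loop: each new frame depends on the persistent world state, the player's latest action, and any text event injected along the way.

```python
from dataclasses import dataclass, field
from typing import List, Optional, Tuple

# Hypothetical movement vocabulary; a real world model learns behavior from video.
MOVES = {
    "forward": (0, 1),
    "back": (0, -1),
    "left": (-1, 0),
    "right": (1, 0),
    "stay": (0, 0),
}


@dataclass
class ToyWorld:
    """Toy stand-in for a world model (an assumption, not Genie 3's API)."""
    position: Tuple[int, int] = (0, 0)
    weather: str = "clear"
    history: List[dict] = field(default_factory=list)

    def step(self, action: str, event: Optional[str] = None) -> dict:
        """Produce the next frame from the current state, an action, and an optional event."""
        dx, dy = MOVES[action]
        self.position = (self.position[0] + dx, self.position[1] + dy)
        if event is not None:
            # A prompted event changes the persistent state, not just one frame.
            self.weather = event
        frame = {"t": len(self.history), "position": self.position, "weather": self.weather}
        self.history.append(frame)
        return frame


def rollout(world: ToyWorld, inputs: List[Tuple[str, Optional[str]]]) -> List[dict]:
    """Generate frames one at a time, feeding each input into the evolving world."""
    return [world.step(action, event) for action, event in inputs]


frames = rollout(ToyWorld(), [("forward", None), ("forward", "rain"), ("left", None)])
```

In this sketch the "rain" event arrives on the second step and is still in effect on the third, which is the toy equivalent of a change to the simulation rather than an edit to a single image.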

Veo 3 walked so Genie 3 could run

In many ways, Genie 3 feels like a sequel to Veo 3: where Veo ends, Genie begins.

Veo 3 introduced the world to highly detailed, audio-synced video generation. It could take prompts like “a surfer riding a wave at sunset” and return cinematic footage that felt almost real. Genie 3 takes the same storytelling instincts and injects interactivity into the mix. The viewer becomes the protagonist. Instead of watching a moment unfold, you explore it.

Together, the models mark the beginnings of a creative stack where text becomes not just media, but space. Imagine using Veo 3 to generate a narrative intro scene, and Genie 3 to let the audience wander through that setting, controlling the camera, discovering new angles, even influencing the story.

This is where storytelling becomes simulation. And the impact for creators could be huge.

The animation pipeline today is complex and expensive. World-building requires entire teams: concept artists, 3D modelers, texture specialists, lighting experts, rigging technicians. Genie 3 proposes a radical alternative – describe your world, and let the model build it.

Need a snowy mountain village at dusk? A neon-lit cyberpunk alley? A crumbling temple overtaken by vines? Genie 3 can conjure these settings with a prompt. And once inside them, creators could hypothetically move their camera, block scenes, or experiment with lighting without touching a single 3D tool.


It’s not just about saving time. It’s about redefining how early-stage creative decisions are made. Genie 3 could act as a real-time previsualization engine. Directors might “scout” locations inside AI-generated worlds before ever building sets. Indie filmmakers could prototype entire sequences. Animators could experiment with scene composition, framing, and mood, iterating in hours instead of weeks.

While the model isn’t public yet, the mere concept of a tool like Genie 3 is enough to make waves. It’s not hard to imagine future animation workflows being built around generative backbones like this.

A playground for training AI agents

Beyond media and entertainment, Genie 3 has another purpose: AI training. World models are essential for creating environments where digital agents can learn safely and efficiently. DeepMind has already begun using Genie 3 to simulate environments for agent tasks like navigating to an object, avoiding hazards, or understanding instructions. These simulations are realistic enough to mimic the sensory complexity of the real world, but controllable enough to accelerate learning.

This capability, called “embodied learning,” is crucial for the future of robotics and general-purpose AI. With Genie 3, DeepMind can create endless variations of a task environment. That means smarter agents, faster. Whether it’s a digital assistant learning to follow multi-step commands or a robot navigating a warehouse, models like Genie 3 might become the sandbox in which AGI grows up.
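What "endless variations of a task environment" could look like in practice is easiest to see with a small sketch. The Python below is an illustration under stated assumptions, not DeepMind's training setup: `TaskVariant`, `sample_variants`, and `to_prompt` are hypothetical names, and the settings, weather options, and prompt format are made up for the example (the three goals echo the agent tasks mentioned above). It simply samples combinations with a seeded random generator, so the same seed always yields the same curriculum of prompts.

```python
import random
from dataclasses import dataclass
from typing import List

# Illustrative option lists (assumptions, not taken from any DeepMind release).
SETTINGS = ["warehouse", "snowy mountain village", "forest trail"]
WEATHER = ["clear", "rain", "fog"]
GOALS = ["navigate to an object", "avoid hazards", "follow an instruction"]


@dataclass(frozen=True)
class TaskVariant:
    """One hypothetical combination of world description and agent task."""
    setting: str
    weather: str
    goal: str


def sample_variants(n: int, seed: int) -> List[TaskVariant]:
    """Sample n task variants; a fixed seed makes the sequence reproducible."""
    rng = random.Random(seed)
    return [
        TaskVariant(rng.choice(SETTINGS), rng.choice(WEATHER), rng.choice(GOALS))
        for _ in range(n)
    ]


def to_prompt(variant: TaskVariant) -> str:
    """Render a variant as a text prompt that a world model could be given."""
    return f"Setting: {variant.setting}. Weather: {variant.weather}. Agent task: {variant.goal}."


variants = sample_variants(5, seed=0)
prompts = [to_prompt(v) for v in variants]
```

Seeding the generator is a deliberate choice here: reproducible environments make it possible to compare two agents on exactly the same set of worlds.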

There are still limitations: scenes are short, actions are basic, and fidelity still trails behind what real-time game engines can achieve. But unlike game engines, Genie 3 doesn’t require technical mastery. No coding. No modeling. Just intent. Just imagination.

From storyteller to simulator

There’s something poetic about where this is heading. With Veo 3, we told stories through visuals. With Genie 3, we might soon be simulating them as experiences – real, explorable, and endlessly mutable.

For now, we wait. But the idea alone has already changed how I think about creativity. It’s no longer about producing a final output, but about shaping a living world that others can enter and interpret in their own way. The storyteller becomes the architect. The prompt becomes a portal.

And if Genie 3 delivers on even half of what it promises, the way we create and experience worlds might never be the same again.


Vyom Ramani

A journalist with a soft spot for tech, games, and things that go beep. While waiting for a delayed metro or rebooting his brain, you’ll find him solving Rubik’s Cubes, bingeing F1, or hunting for the next great snack.