MedGemma 1.5 & MedASR explained: High-dimensional imaging and medical speech-to-text

MedGemma 1.5 explained: Google AI reads 3D medical imaging

Medical speech to text AI beats general models

Google Health AI releases open models for imaging and clinical dictation

The adoption of artificial intelligence in healthcare is accelerating at twice the rate of the broader economy, driven by the need for tools that can handle the complexity of medical data. To support this transformation, Google Research has released major updates to its open medical models: MedGemma 1.5, a multimodal model with advanced imaging capabilities, and MedASR, a specialized speech-to-text model designed for the medical domain.

Together, these models represent a shift from analyzing static, two-dimensional data to interpreting the high-dimensional, multimodal reality of clinical practice.

MedGemma 1.5: Seeing in 3D and Time

While the original MedGemma 1 could interpret standard 2D images like chest X-rays, medical diagnostics often rely on more complex data. MedGemma 1.5 (specifically the 4B parameter version) bridges this gap by introducing support for high-dimensional imaging.

This update allows the model to interpret three-dimensional volume representations, such as Computed Tomography (CT) scans and Magnetic Resonance Imaging (MRI), as well as whole-slide histopathology imaging. Rather than looking at a single slice, developers can now build applications where the model analyzes multiple slices or patches to understand the full context of a scan. Internal benchmarks show significant gains, including a 14% improvement in classifying disease-related MRI findings compared to the previous version.
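To make the multi-slice idea concrete, here is a minimal sketch of one way an application might pick a fixed number of evenly spaced slices from a CT or MRI volume before handing them to a model. The helper name `select_slice_indices` and the idea of a slice budget are illustrative assumptions. The article does not describe how MedGemma 1.5 itself samples slices, and this is not part of any MedGemma API.

```python
def select_slice_indices(num_slices: int, max_slices: int) -> list[int]:
    """Return up to `max_slices` evenly spaced slice indices from a volume.

    Hypothetical helper for illustration only; not part of any MedGemma API.
    """
    if num_slices <= 0 or max_slices <= 0:
        raise ValueError("num_slices and max_slices must be positive")
    # Small volumes fit within the budget, so keep every slice.
    if num_slices <= max_slices:
        return list(range(num_slices))
    # With a budget of one, fall back to the middle slice.
    if max_slices == 1:
        return [num_slices // 2]
    # Spread the budget across the volume, always including both ends.
    step = (num_slices - 1) / (max_slices - 1)
    return [round(i * step) for i in range(max_slices)]


if __name__ == "__main__":
    # A 9-slice volume with a budget of 3 slices.
    print(select_slice_indices(9, 3))
```

Even spacing is only one possible choice. An application might instead concentrate slices around a region of interest, depending on the clinical question.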

Beyond 3D imaging, MedGemma 1.5 improves longitudinal analysis. In clinical settings, a patient’s trajectory is often more important than a single snapshot. The new model excels at reviewing chest X-ray time series, allowing it to track changes over time with greater accuracy.

The model also boasts improved text-based reasoning, achieving a 90% score on the EHRQA benchmark (Electronic Health Record Question Answering), a 22% jump over MedGemma 1. This suggests the model is better equipped to parse complex lab reports and medical records, not only to read images.

MedASR: The Listener

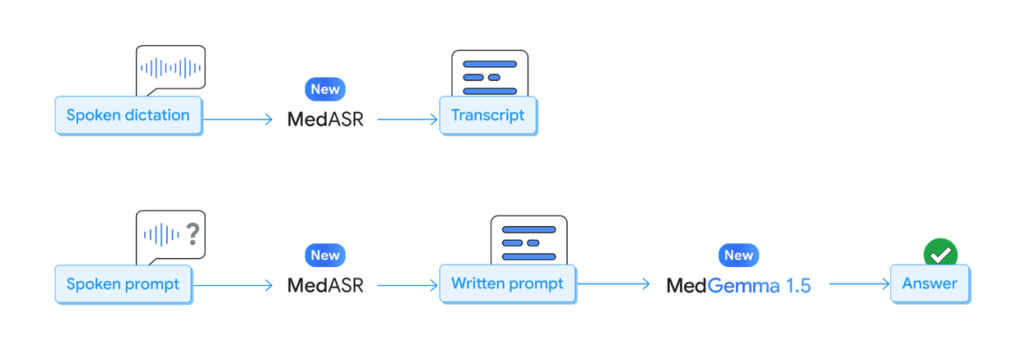

Medical documentation relies heavily on dictation, yet general-purpose speech models often stumble over complex medical terminology. MedASR addresses this by providing an open Automated Speech Recognition (ASR) model fine-tuned specifically for medical dictation.

When compared to generalist models like Whisper (large-v3), MedASR demonstrated a drastic reduction in errors. It recorded 58% fewer errors on chest X-ray dictations and 82% fewer errors on diverse medical dictation benchmarks.
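A figure like "58% fewer errors" is a relative reduction in an error rate. The article does not name the metric, so the sketch below assumes it is word error rate (WER), the usual measure for speech recognition: the word-level edit distance between a reference transcript and the model's output, divided by the number of reference words. The function names and the sample numbers are illustrative, not taken from Google's evaluation.

```python
def word_error_rate(reference: str, hypothesis: str) -> float:
    """Word-level Levenshtein distance divided by the reference length."""
    ref = reference.lower().split()
    hyp = hypothesis.lower().split()
    if not ref:
        raise ValueError("reference must contain at least one word")
    # prev[j] is the edit distance between the reference words processed
    # so far and the first j hypothesis words.
    prev = list(range(len(hyp) + 1))
    for i, ref_word in enumerate(ref, start=1):
        curr = [i]
        for j, hyp_word in enumerate(hyp, start=1):
            cost = 0 if ref_word == hyp_word else 1
            curr.append(min(
                prev[j] + 1,         # reference word missing from hypothesis
                curr[j - 1] + 1,     # extra word in hypothesis
                prev[j - 1] + cost,  # match or substitution
            ))
        prev = curr
    return prev[-1] / len(ref)


def relative_error_reduction(baseline_wer: float, new_wer: float) -> float:
    """Fraction of the baseline's errors that the new model eliminates."""
    if baseline_wer <= 0:
        raise ValueError("baseline_wer must be positive")
    return (baseline_wer - new_wer) / baseline_wer


if __name__ == "__main__":
    # A split medical term counts as one substitution plus one insertion.
    print(word_error_rate(
        "no acute cardiopulmonary abnormality",
        "no acute cardio pulmonary abnormality",
    ))
    # Made-up WER values, purely to show the arithmetic.
    print(relative_error_reduction(baseline_wer=0.20, new_wer=0.05))
```

Under this reading, a model with a quarter of the baseline's WER would be described as making 75% fewer errors.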

For developers, MedASR is designed to work in tandem with MedGemma. A clinician could potentially dictate a query (processed by MedASR) regarding a specific CT volume (analyzed by MedGemma 1.5), creating a seamless multimodal workflow that mirrors natural clinical interaction.
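The dictate-then-analyze workflow can be sketched as a small pipeline that takes the two models as interchangeable callables. Everything here is hypothetical: `ImagingQuery`, `answer_dictated_query`, and the `transcribe` and `analyze` parameters are names invented for illustration, and the sketch makes no claim about how MedASR or MedGemma are actually invoked.

```python
from dataclasses import dataclass
from typing import Callable, Sequence


@dataclass
class ImagingQuery:
    """A transcribed question paired with the image slices it refers to."""
    question: str
    slices: Sequence[bytes]


def answer_dictated_query(
    audio: bytes,
    slices: Sequence[bytes],
    transcribe: Callable[[bytes], str],       # stand-in for a speech model
    analyze: Callable[[ImagingQuery], str],   # stand-in for an imaging model
) -> str:
    """Turn dictated audio into text, then ask the imaging model about the scan."""
    question = transcribe(audio).strip()
    if not question:
        raise ValueError("transcription was empty; nothing to ask")
    return analyze(ImagingQuery(question=question, slices=slices))


if __name__ == "__main__":
    # Placeholder callables so the sketch runs without any model installed.
    def fake_transcribe(audio: bytes) -> str:
        return " Is there a pulmonary nodule? "

    def fake_analyze(query: ImagingQuery) -> str:
        return f"{len(query.slices)} slices: {query.question}"

    print(answer_dictated_query(b"audio", [b"s1", b"s2"], fake_transcribe, fake_analyze))
```

Passing the models in as callables keeps the pipeline testable and leaves the choice of serving platform open.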

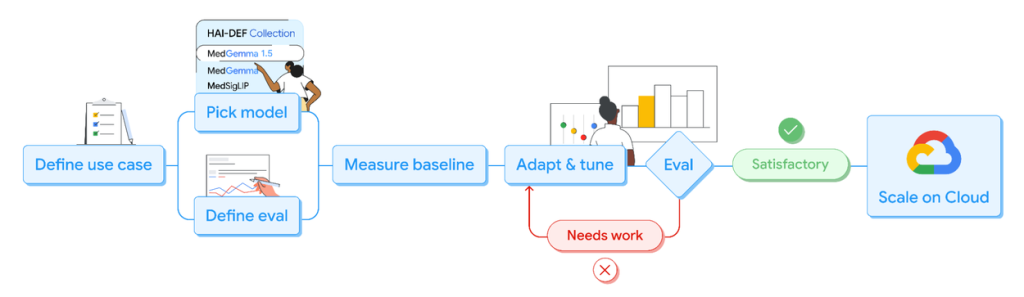

An open foundation for developers

Both models are part of Google’s Health AI Developer Foundations (HAI-DEF). They are released as open weights, allowing researchers and developers to fine-tune and adapt them for specific use cases – from anatomical localization to structuring data from messy lab reports. By providing these compute-efficient models via platforms like Hugging Face and Vertex AI, Google aims to lower the barrier to entry for creating next-generation medical applications that can see, listen, and reason more like a clinician.

Vyom Ramani

A journalist with a soft spot for tech, games, and things that go beep. While waiting for a delayed metro or rebooting his brain, you’ll find him solving Rubik’s Cubes, bingeing F1, or hunting for the next great snack.