ChatGPT Health and your medical data: How safe will it really be?

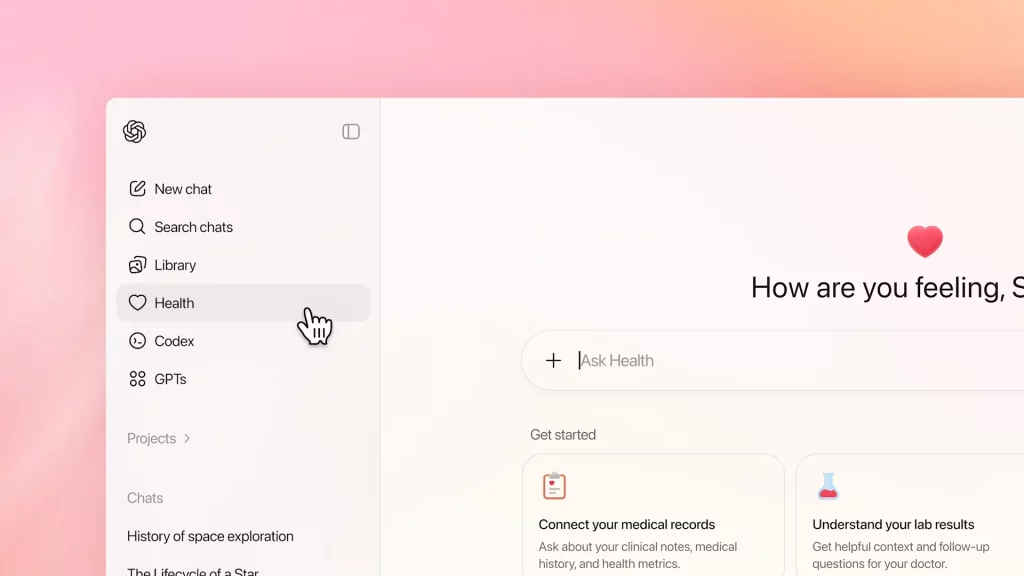

OpenAI has officially entered the doctor’s office with the launch of “ChatGPT Health,” a specialized environment within its popular chatbot designed to handle your most sensitive medical information. The promise is enticing: a centralized digital assistant that can digest your blood test results, integrate with Apple Health to track your sleep, and help you prepare for a consultation, all by accessing your actual medical records. But as users are invited to upload their clinical history to an AI company, the pressing question isn’t just about utility; it is about safety.

In a post on X dated January 7, 2026, Bearly AI (@bearlyai) shared the full launch video for ChatGPT Health (https://t.co/SoEhXhzy1P) and noted that the key use cases look to be:

> prep for doctor visits

> better understand test results

> general fitness and diet questions

> ongoing record of how user feels and preferences

Building a digital “walled garden”

OpenAI has anticipated the privacy backlash. The company explicitly positions ChatGPT Health as a “walled garden.” According to the announcement, this new domain operates separately from the standard ChatGPT interface. The most significant pledge is that data shared within this health-specific environment, including conversations, uploaded files, and connected app data, will not be used to train OpenAI’s foundation models. This is a critical distinction from the standard free version of ChatGPT, where user interactions can be used to train future models unless the user opts out.

On the technical side, OpenAI says it is using “purpose-built encryption” and isolation protocols. To connect with electronic medical records (EMRs) in the US, the company has partnered with b.well, a health management platform that acts as the secure plumbing between the chatbot and healthcare providers. The system is designed so that users can revoke access or delete “health memories” at any time, theoretically placing the kill switch in the patient’s hands.

The gap between security and safety

However, privacy experts and healthcare professionals remain skeptical, and for good reason. “Safety” in healthcare means more than just preventing a data breach; it means ensuring accuracy and compliance. While OpenAI claims enhanced protections, the landscape of HIPAA compliance for direct-to-consumer AI tools is murky. Standard ChatGPT is not HIPAA-compliant, and while ChatGPT Health adds layers of security, it stops short of being a regulated medical device. The platform explicitly warns that it is not intended for diagnosis or treatment, a “use at your own risk” disclaimer that shifts the burden of error squarely onto the user.

Furthermore, the “black box” nature of AI remains a hurdle. Even if the data is secure from hackers, the risk of “hallucinations” – where the AI confidently invents false medical facts – persists. A secure system that gives bad advice is still a danger to patient health. While OpenAI promises not to train on this data now, terms of service can change. Once data enters the ecosystem of a for-profit tech giant, the long-term trajectory of that data is often unpredictable.

Ultimately, ChatGPT Health represents a trade-off. It offers a futuristic level of convenience, turning scattered medical PDFs into actionable insights. But it requires a massive leap of faith. Users must decide if the value of an AI health assistant is worth the risk of handing over the keys to their biological biography. For now, the safest approach may be to treat ChatGPT Health like a medical student: helpful for summarizing notes, but never to be trusted with your life without a supervisor present.

Vyom Ramani

A journalist with a soft spot for tech, games, and things that go beep. While waiting for a delayed metro or rebooting his brain, you’ll find him solving Rubik’s Cubes, bingeing F1, or hunting for the next great snack.