Google Ironwood TPU explained: How it compares with NVIDIA’s AI chip

Google Ironwood TPU v7 targets massive scale inference with super pod design

Ironwood challenges NVIDIA Blackwell by linking over 9,000 chips seamlessly

Google’s TPU v7 delivers high efficiency and 2x performance per watt

Google has officially unveiled “Ironwood,” its seventh-generation Tensor Processing Unit (TPU v7), marking a decisive shift in its silicon strategy. Unlike previous generations, which were primarily workhorses for training massive models, Ironwood is purpose-built for the “age of inference,” the era in which AI models are not just being built but are actively serving millions of users in real time every day.


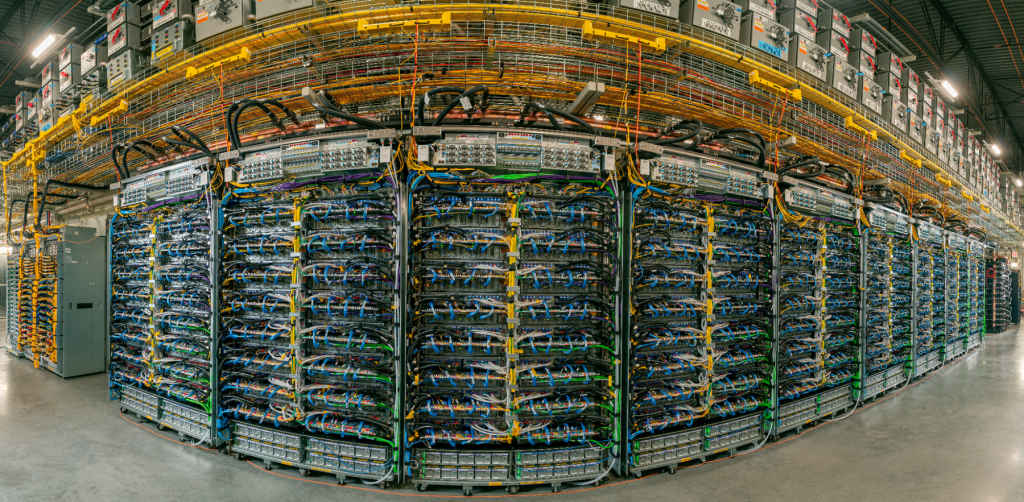

The “Super-pod” architecture

The most significant innovation in Ironwood isn’t just the chip itself, but how thousands of them work in unison. Google has designed Ironwood to operate as a single, massive “supercomputer” rather than a collection of individual processors.

Using Google’s proprietary Optical Circuit Switches (OCS), Ironwood chips are clustered into massive “super-pods” containing up to 9,216 chips. That is far beyond the 72-GPU clusters NVIDIA typically builds with NVLink. The OCS technology lets these thousands of chips communicate with very low latency, so the pod behaves like one giant processor. This architecture is critical for running today’s largest models, such as Gemini 1.5 Pro, which are too large to fit on a single chip.
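To see why large models cannot live on one chip, here is a minimal Python sketch that estimates a lower bound on the number of chips needed just to hold a model's weights. It uses the 192 GB per-chip HBM figure quoted in this article. The function name, the model sizes, and the bytes-per-parameter values are hypothetical and chosen for illustration; they are not figures for Gemini or any other real model.

```python
import math

HBM_PER_CHIP_GB = 192  # per-chip HBM quoted in the article


def min_chips_for_weights(params_billions: float, bytes_per_param: int) -> int:
    """Lower bound on chips needed just to hold the weights.

    Ignores activations, KV cache, and replication, so real deployments
    need more. 1 billion parameters at 1 byte each is 1 GB (decimal).
    """
    weights_gb = params_billions * bytes_per_param
    return math.ceil(weights_gb / HBM_PER_CHIP_GB)


# Hypothetical model sizes, not figures for any real model.
small = min_chips_for_weights(70, 2)      # 140 GB of weights
large = min_chips_for_weights(1_000, 2)   # 2,000 GB of weights

print(small, large)
```

Under these assumptions the smaller model fits on one chip while the larger one needs at least 11, before counting any memory for serving state.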

By spreading a single model across thousands of interconnected chips, Google can serve answers (inference) at very high speed. The system creates a pool of shared memory: 1.77 petabytes of high-bandwidth memory (HBM) accessible across the pod. This reduces the data bottlenecks that typically slow down AI when it has to shuttle information between separate servers.
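The 1.77 PB figure follows from the pod size and the 192 GB of HBM per chip quoted in this article. A quick sanity check in Python, assuming decimal units (1 PB = 1,000,000 GB) and that pod capacity is simply the sum of every chip's HBM; the variable names are illustrative:

```python
# Back-of-the-envelope check of the pod-level memory figure.
# Assumptions: decimal units, and pod capacity = chips * per-chip HBM.
CHIPS_PER_POD = 9_216     # maximum super-pod size quoted in the article
HBM_PER_CHIP_GB = 192     # per-chip HBM quoted in the article

pod_hbm_gb = CHIPS_PER_POD * HBM_PER_CHIP_GB
pod_hbm_pb = pod_hbm_gb / 1_000_000  # GB -> PB (decimal)

print(f"{pod_hbm_gb:,} GB = {pod_hbm_pb:.2f} PB")
```

The product is 1,769,472 GB, which rounds to the 1.77 PB quoted above.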

Efficiency and design

Ironwood represents a major leap in efficiency, a critical metric for Google given its massive data center energy footprint. The chip features a dual-chiplet design, which improves manufacturing yields and allows for better thermal management.


The key technical specifications:

- Memory: Each Ironwood chip packs 192 GB of High-Bandwidth Memory (HBM).

- Compute Power: It delivers approximately 4.6 PFLOPS of compute performance (FP8), making it a powerhouse for the complex math required by generative AI.

- Cooling: To handle this density, the chips use advanced liquid cooling, allowing them to run closer to their thermal limits without throttling.

Crucially, Google claims Ironwood delivers 2x the performance-per-watt of its predecessor, the sixth-generation “Trillium” TPU. This focus on “performance-per-watt” rather than raw “performance-at-all-costs” highlights Google’s advantage: because it owns the entire stack, from the chip to the data center to the cooling system, it can optimize for total cost of ownership in a way that third-party vendors cannot.
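What a 2x performance-per-watt claim means in practice can be shown with normalized numbers. Performance per watt is work per second divided by joules per second, so it is equivalent to work per joule. The sketch below assumes a baseline of 1.0 for Trillium and 2.0 for Ironwood; these are relative units derived from Google's claim, not measured power figures, and the function name is hypothetical.

```python
def energy_for_work(work_units: float, perf_per_watt: float) -> float:
    """Energy in joules to finish `work_units` of work.

    perf_per_watt is (work/second) / (joules/second) = work per joule.
    """
    return work_units / perf_per_watt


TRILLIUM_PPW = 1.0   # normalized baseline (assumption)
IRONWOOD_PPW = 2.0   # 2x the baseline, per Google's claim

work = 1_000.0  # arbitrary amount of inference work
energy_ratio = energy_for_work(work, IRONWOOD_PPW) / energy_for_work(work, TRILLIUM_PPW)

print(f"Ironwood uses {energy_ratio:.0%} of Trillium's energy for the same work")
```

Read the other way, the same power budget would deliver twice the throughput, which is why the metric matters for total cost of ownership.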

Google Ironwood vs. NVIDIA Blackwell (B200)

While NVIDIA remains the undisputed king of the merchant silicon market, Google’s Ironwood is a formidable internal rival that reduces Google’s dependence on the “NVIDIA tax.”

1. Inference vs. Versatility

NVIDIA’s new Blackwell B200 is a general-purpose beast, designed to be excellent at everything from training to graphics. Ironwood is a specialist. It is hyper-optimized for Google’s specific workloads (like JAX and TensorFlow models). While NVIDIA wins on versatility and software ecosystem (CUDA), Ironwood likely wins on efficiency for Google’s specific internal needs.

2. The Scale of Connectivity

This is the biggest differentiator. NVIDIA typically connects its B200 GPUs in clusters of 72 using NVLink. Google connects Ironwood in pods of over 9,000. While NVIDIA’s individual links are faster (higher bandwidth per link), Google’s ability to network a far larger number of chips into a single domain gives it a unique advantage for the absolute largest models.
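The gap in domain size is easy to quantify from the numbers in this article. The sketch below assumes 72 GPUs per NVLink cluster, 9,216 chips per Ironwood pod, 192 GB of HBM on each chip in both cases, and decimal units; the variable names are illustrative.

```python
NVLINK_DOMAIN_GPUS = 72       # B200 cluster size quoted in the article
IRONWOOD_POD_CHIPS = 9_216    # maximum Ironwood pod size quoted in the article
HBM_PER_CHIP_GB = 192         # the article quotes 192 GB for both chips

scale_ratio = IRONWOOD_POD_CHIPS / NVLINK_DOMAIN_GPUS
nvlink_domain_tb = NVLINK_DOMAIN_GPUS * HBM_PER_CHIP_GB / 1_000   # GB -> TB
ironwood_pod_tb = IRONWOOD_POD_CHIPS * HBM_PER_CHIP_GB / 1_000    # GB -> TB

print(f"Chips per domain: {scale_ratio:.0f}x more in an Ironwood pod")
print(f"HBM per domain: {nvlink_domain_tb:.1f} TB vs {ironwood_pod_tb:,.1f} TB")
```

On these figures an Ironwood pod holds 128 times as many chips, and therefore 128 times as much HBM, in a single domain.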

3. Raw Specs Comparison

- Memory: Both chips are tied with a massive 192 GB of HBM per chip.

- Compute: They are neck-and-neck, with Ironwood offering ~4.6 PFLOPS (FP8) versus the B200’s ~4.5 PFLOPS.

- Bandwidth: NVIDIA holds a slight edge here with 8.0 TB/s of memory bandwidth compared to Ironwood’s 7.4 TB/s.
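One way to read these numbers together is the ratio of peak compute to memory bandwidth, which indicates how many operations a chip can perform per byte it reads from HBM before memory becomes the limit. The sketch below uses only the approximate per-chip figures listed above; the dictionary layout and variable names are illustrative, and peak figures say nothing about sustained real-world throughput.

```python
# Approximate per-chip figures quoted in the article.
specs = {
    "Ironwood": {"pflops_fp8": 4.6, "hbm_tb_per_s": 7.4},
    "B200":     {"pflops_fp8": 4.5, "hbm_tb_per_s": 8.0},
}

flops_per_byte = {}
for name, s in specs.items():
    peak_flops = s["pflops_fp8"] * 1e15       # PFLOPS -> FLOP/s
    bytes_per_s = s["hbm_tb_per_s"] * 1e12    # TB/s   -> bytes/s
    flops_per_byte[name] = peak_flops / bytes_per_s

compute_ratio = specs["Ironwood"]["pflops_fp8"] / specs["B200"]["pflops_fp8"]
bandwidth_ratio = specs["B200"]["hbm_tb_per_s"] / specs["Ironwood"]["hbm_tb_per_s"]

for name, value in flops_per_byte.items():
    print(f"{name}: about {value:.0f} FLOPs per byte of HBM traffic")
print(f"Ironwood compute lead: {compute_ratio - 1:.1%}")
print(f"B200 bandwidth lead: {bandwidth_ratio - 1:.1%}")
```

On paper, Ironwood's compute lead is about 2% and the B200's bandwidth lead is about 8%, so the two chips are close enough that system-level factors such as interconnect scale are likely to matter more than either per-chip figure.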

Ultimately, Ironwood proves that Google is no longer just “keeping up” with NVIDIA; in the specific domain of massive-scale cloud inference, it is forging a path that NVIDIA’s standard products may struggle to match in pure efficiency.


Vyom Ramani

A journalist with a soft spot for tech, games, and things that go beep. While waiting for a delayed metro or rebooting his brain, you’ll find him solving Rubik’s Cubes, bingeing F1, or hunting for the next great snack.