Can ChatGPT really care? OpenAI wants to make AI more emotionally aware. Here's how.

For millions of users, generative AI has become more than just a search tool: it's a sounding board, a confidant, and sometimes the first place they turn in a moment of crisis or emotional distress. This trend presents an enormous challenge: how do you ensure an algorithm responds safely, empathetically, and responsibly when a user is discussing self-harm, psychosis, or deep loneliness?

OpenAI’s latest major update addresses this question head-on. By collaborating with over 170 mental health experts, including psychiatrists and psychologists, the company has significantly retrained ChatGPT’s default model to recognize signs of distress, de-escalate sensitive conversations, and, most importantly, reliably guide users toward professional, real-world support.

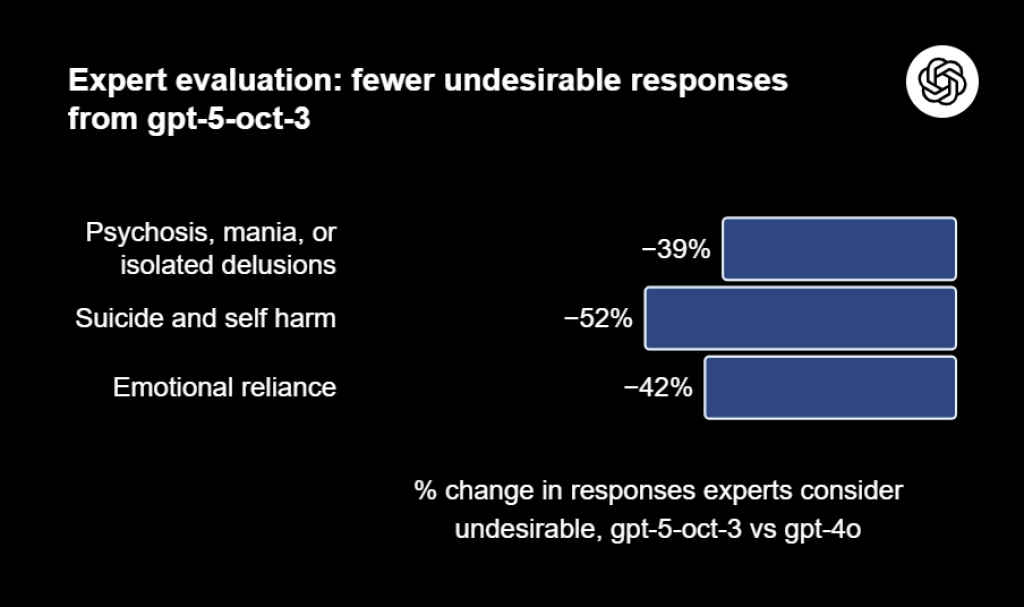

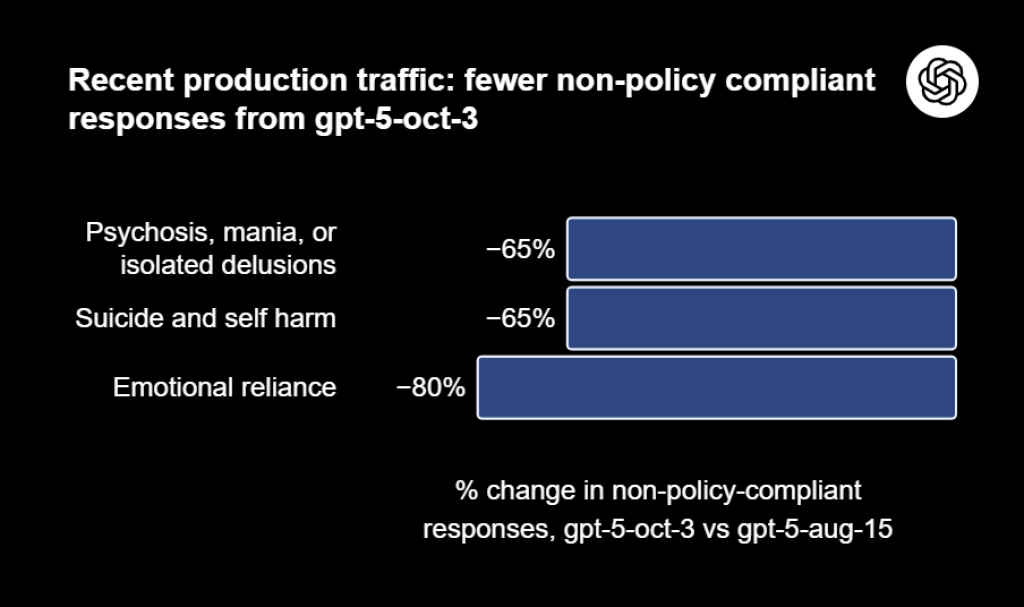

The results are substantial: the company estimates that its new model has reduced undesired responses (those that fall short of the desired safety behavior) by 65% to 80% across a range of mental health-related situations.

Here is a closer look at the three critical areas where OpenAI focused its efforts to make the AI more “emotionally aware.”

Drawing the line on emotional reliance

One of the most complex issues facing companion AI is the risk of users developing an unhealthy emotional attachment to the model, substituting it for human relationships. OpenAI introduced a specific taxonomy to address this “emotional reliance” risk.

The model is now trained to recognize when a user is showing signs of an exclusive attachment to the AI at the expense of their well-being or real-world connections. Rather than simply continuing the conversation, the new model gently but clearly encourages the user to seek out human connection.

The goal is not to be a replacement for friendship, but to be a supportive tool that redirects focus back to the user’s community and obligations. This category saw the greatest improvement, with an estimated 80% reduction in non-compliant responses.

Responding safely to psychosis and mania

Handling severe mental health symptoms like psychosis or mania requires a great deal of clinical nuance. A traditional safety filter might simply shut down the conversation, which could leave a distressed user feeling dismissed or isolated. Conversely, validating a user's ungrounded, delusional beliefs is dangerous.

The model update teaches ChatGPT to balance empathy with clinical reality. For users describing delusions or paranoia, the AI’s response is now designed to:

- Acknowledge and Validate the Feeling: “I want to take this seriously, because you’re clearly scared and under a lot of stress right now.”

- Gently Refute the Unfounded Belief: “No aircraft or outside force can steal or insert your thoughts. Your thoughts are 100% your own.”

- Provide Grounding Techniques: Offer immediate, actionable steps like breathing exercises or naming objects to help the user re-engage with reality.

- Escalate to Professional Help: Suggest contacting a mental health professional or crisis line.

This nuanced, clinically informed approach led to an estimated 65% reduction in undesired responses for conversations related to severe mental health symptoms.

Strengthening self-harm prevention

Building on its existing safety protocols, OpenAI deepened its work on detecting both explicit and subtle indicators of suicidal ideation and self-harm intent.

The fundamental rule remains unchanged: in moments of crisis, the model must prioritize safety and direct the user to immediate help. The improvements ensure this redirection happens more reliably, empathetically, and consistently, even in long or complex conversations where risk signals may be less obvious.

As a result of this work, together with new product interventions such as expanded access to crisis hotlines and automated "take-a-break" reminders during long sessions, the model saw an estimated 65% reduction in non-compliant responses in this life-critical domain.

The key to “caring” AI

The core of this breakthrough is the involvement of the Global Physician Network, a pool of nearly 300 physicians and psychologists. These experts didn’t just review policies; they participated in creating detailed taxonomies of sensitive conversations, wrote ideal model responses for challenging prompts, and graded the safety of the AI’s behavior.

The collaboration underscores a crucial point: AI cannot feel or genuinely care for a user, but human compassion and clinical expertise can be embedded into its programming.

In the end, OpenAI’s efforts are not about turning ChatGPT into a therapist, but about equipping it to be a safer, more reliable first responder, a system that is sensitive enough to recognize a person in distress and reliable enough to connect them with the human care they truly need.

Vyom Ramani

A journalist with a soft spot for tech, games, and things that go beep. When he's waiting for a delayed metro or rebooting his brain, you'll find him solving Rubik's Cubes, bingeing F1, or hunting for the next great snack.