CALM explained: Continuous thinking AI, how it’s different from GenAI LLMs so far

CALM replaces token prediction with continuous reasoning for faster, smarter AI

Tencent and Tsinghua’s CALM redefines how language models process meaning

Continuous AI modeling eliminates tokens, softmax, and boosts efficiency dramatically

In 2017, a group of Google researchers published a paper titled “Attention Is All You Need.”

That simple phrase didn’t just describe the Transformer architecture; it rewrote the future of artificial intelligence. Every large language model since, from GPT to Gemini, has been a direct descendant of that breakthrough.

Now, eight years later, a new paper might just be the next “Transformer moment.”

It’s called CALM – Continuous Autoregressive Language Models – developed by researchers at Tencent and Tsinghua University. And if the team’s claims hold true, it challenges the very foundation of how AI understands and generates language.

Unlike GPT or Claude or Llama, CALM doesn’t think in words. It thinks in ideas.

Moving beyond the next-token loop

At its core, CALM breaks away from the most fundamental rule of today’s AI models: the next-token prediction loop. Every language model today works like a lightning-fast typist, guessing one token, a chunk of a word or phrase, at a time, over and over again, until a sentence emerges. The model never really “sees” meaning; it only predicts the next likely symbol.

CALM asks a different question: what if the model didn’t have to think word by word?

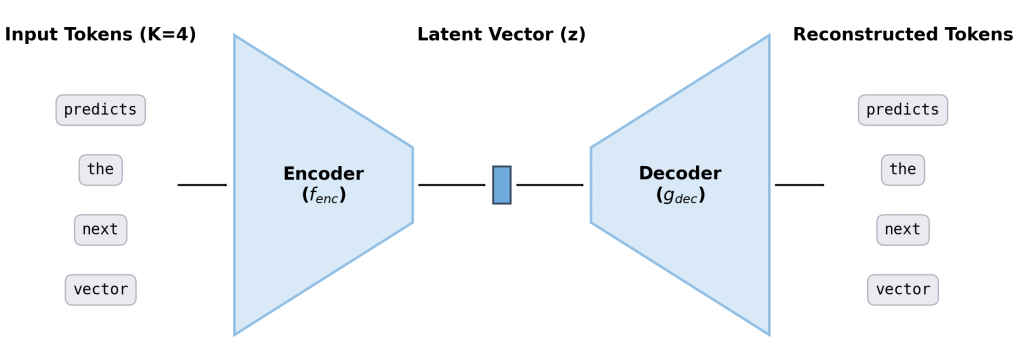

Instead of predicting the next token, it predicts a continuous vector: a dense, multi-dimensional representation that captures the meaning of several tokens at once. Each of these vectors carries roughly four tokens’ worth of information, so the model needs about a quarter as many generation steps and works at the level of concepts rather than syllables.
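To make the step-count arithmetic concrete, here is a minimal Python sketch. It only groups tokens into chunks of four and counts steps; whole words stand in for tokens, and the helper names `chunk_tokens` and `generation_steps` are made up for illustration. It does not model how CALM turns each chunk into a vector, which this article does not describe.

```python
import math

def chunk_tokens(tokens, k=4):
    """Group a token sequence into consecutive chunks of up to k tokens."""
    return [tokens[i:i + k] for i in range(0, len(tokens), k)]

def generation_steps(num_tokens, k=1):
    """Number of autoregressive steps if each step emits k tokens' worth of output."""
    return math.ceil(num_tokens / k)

# Toy example: whole words stand in for tokens (an assumption for readability).
tokens = "the cat sat on the mat and looked out of the window".split()
chunks = chunk_tokens(tokens, k=4)

print(len(tokens))                          # 12 tokens
print(generation_steps(len(tokens), k=1))   # 12 steps, one token per step
print(generation_steps(len(tokens), k=4))   # 3 steps, one chunk per step
print(chunks[0])                            # ['the', 'cat', 'sat', 'on']
```

In a real system each chunk would be compressed into a single vector by a learned component; the sketch shows only why fewer, larger steps are needed.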

In simpler terms: where GPT writes one note at a time, CALM writes entire melodies in a single thought.

The shift to continuous-space reasoning

This continuous-space approach also changes the mechanics of the model itself. Traditional LLMs end with a “softmax” layer, a mathematical operation that computes the probability of every possible next token, often across a vocabulary of more than 100,000 entries. It’s this process that makes current models both expensive and slow.
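For readers who have not seen it spelled out, the softmax step looks roughly like this in plain Python. This is a generic textbook version, not code from any particular model; the three-entry `logits` list is a toy stand-in for a vocabulary that would normally have 100,000 or more entries.

```python
import math

def softmax(logits):
    """Turn a list of raw scores into probabilities that sum to 1."""
    peak = max(logits)                          # subtract the max for numerical stability
    exps = [math.exp(x - peak) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]

# Toy "vocabulary" of three tokens; a real model scores every entry in its vocabulary.
logits = [2.0, 1.0, 0.1]
probs = softmax(logits)
print(probs)       # highest score gets the highest probability
print(sum(probs))  # approximately 1.0
```

The work grows with the size of the vocabulary, because every entry needs a score and an exponential at every generation step.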

CALM removes that bottleneck entirely. It doesn’t need to choose from a discrete vocabulary because it no longer relies on one. There’s no softmax layer, no sampling, and no fixed vocabulary ceiling. Instead, CALM uses what its creators call an “energy-based transformer” – a new kind of architecture that learns directly in continuous space. Rather than predicting a word, it learns the shape of the idea that best fits the context.

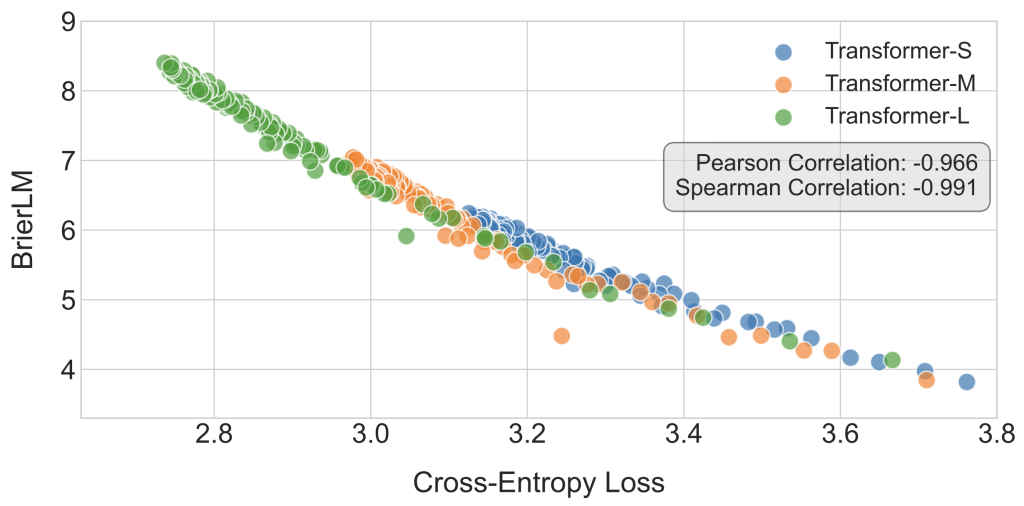

This shift is so radical that it even required inventing a new evaluation metric. Perplexity, the standard for measuring how confidently a model predicts the next token, no longer applies. CALM replaces it with a metric called BrierLM, which measures the confidence of continuous predictions instead of discrete probabilities.
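The name BrierLM points to the Brier score, a long-established way of grading probabilistic predictions. The sketch below shows the classic score and, as an illustration only, one way to estimate it using nothing but samples drawn from a model, with no access to its probabilities. How BrierLM itself is defined is not covered in this article, so treat the function names and the estimator as assumptions rather than the paper's method.

```python
import random

def brier_score(probs, true_index):
    """Classic multi-class Brier score: squared error against a one-hot target (lower is better)."""
    return sum((p - (1.0 if i == true_index else 0.0)) ** 2
               for i, p in enumerate(probs))

def sampled_brier_estimate(sample_fn, true_index, n_pairs=20000, seed=0):
    """Estimate the same score from pairs of samples only.

    For independent samples a and b: E[a == y] = p_y and E[a == b] = sum(p_i ** 2),
    so the running term below averages to 2 * p_y - sum(p_i ** 2) = 1 - Brier.
    """
    rng = random.Random(seed)
    total = 0.0
    for _ in range(n_pairs):
        a, b = sample_fn(rng), sample_fn(rng)
        total += (a == true_index) + (b == true_index) - (a == b)
    return 1.0 - total / n_pairs

# Toy predictive distribution over three outcomes; outcome 0 is the correct one.
probs = [0.7, 0.2, 0.1]
exact = brier_score(probs, true_index=0)

def draw(rng):
    """Hypothetical stand-in for sampling from a model."""
    return rng.choices(range(len(probs)), weights=probs)[0]

estimate = sampled_brier_estimate(draw, true_index=0)
print(exact)     # 0.14 up to floating-point rounding
print(estimate)  # close to the exact value
```

The point of the second function is that a score of this kind can be computed for a model that only produces samples, which is the situation a model without a softmax layer is in.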

The numbers in the paper are striking. CALM needs about four times fewer prediction steps than a traditional LLM and uses roughly 44 percent less compute during training. By eliminating tokenization, it also avoids one of the quietest but most pervasive problems in AI: the quirks and biases that tokenizers introduce.

Tokenization fragments language into subword units that don’t always align cleanly with meaning. By removing this process, CALM can reason across languages and structures more fluidly, without being constrained by how words are chopped up.

From sequential prediction to continuous reasoning

But beyond the numbers, the philosophical leap is more fascinating. Language modeling has always been about sequential prediction – “given what I’ve seen, what comes next?” CALM reframes it as continuous reasoning – “given what I understand, what concept follows naturally?”

It’s a subtle but profound shift.

Humans don’t consciously predict their next word. They think in complete ideas, and the words simply follow. CALM appears to mimic that behavior. It encodes thoughts as compact semantic representations, and only later decodes them into language. If scaled successfully, this could lead to models that process context more abstractly, that move across topics or modalities with less friction, and that exhibit reasoning closer to human conceptual thinking.

Since 2017, every improvement in AI – larger models, mixture-of-experts, retrieval augmentation – has been built on the Transformer’s token-by-token foundation. CALM challenges that foundation itself. It suggests that our current models may have been too literal, too focused on surface-level prediction, when true intelligence might lie in modeling meaning directly.

By working in continuous latent space, CALM gestures toward a future where models no longer just mimic how humans speak, but begin to reflect how humans think.

It won’t replace GPT overnight. It’s still a research prototype, and scaling it up will demand rethinking how datasets are built, how training is measured, and how we even define “understanding” in AI. But it is undeniably a milestone – a glimpse of what comes after the language model era we know.

If 2017 was the year AI learned to pay attention, 2025 may be remembered as the year it learned to think.

Vyom Ramani

A journalist with a soft spot for tech, games, and things that go beep. While waiting for a delayed metro or rebooting his brain, you’ll find him solving Rubik’s Cubes, bingeing F1, or hunting for the next great snack.