5 things Intel revealed about our AI future

Intel seems to have rediscovered its mojo, if the strategic direction CEO Pat Gelsinger revealed at Intel Innovation 2023 is anything to go by. Apart from trying to supercharge its chip offerings for both its consumer retail and datacenter businesses, Intel is also attempting to reverse its slide and give the likes of NVIDIA and TSMC a proper run for their money in the more lucrative AI and foundry businesses.

Here are five key things we learned about the new and improved Intel from its annual innovation conference: the areas where Intel is concentrating its efforts to gain a competitive edge, now and in the near future.

The AI PC era is almost here

The very first slide of Pat Gelsinger's keynote presentation said "bringing AI everywhere," and that includes the good old PC (desktops and laptops). At a time when everyone and their grandparents are talking about AI, Intel emphasized its strategy to embed AI into every aspect of its hardware and software platform, from Meteor Lake on PCs to Sapphire Rapids on servers.

In particular, the 14th gen Intel Core Ultra (codenamed Meteor Lake) will debut some of the company's key semiconductor improvements, including for AI. Its launch is confirmed for December 14, 2023. Intel Core Ultra is built on the Intel 4 process technology (7nm node), and it's Intel's first chip to use EUV, chiplets and enhanced Foveros 3D packaging. According to early claims, this allows it to deliver 2X the performance at roughly half the power draw compared to the Intel 7 (10nm node) process technology. It will also have a brand new neural processing unit (NPU) dedicated to AI tasks, giving software more flexibility to spread AI workloads across the NPU, the onboard GPU and the CPU depending on how demanding they are.

From a developer's perspective, there can be no doubt that Intel is laying the foundation for increased use of AI applications on its upcoming chips, for consumers and businesses alike, with a solid software stack on top being the key to unlocking it. It's similar to what Apple is doing with the iPhone and Mac (with its Neural Engine), or what Qualcomm is doing with Snapdragon AI on Android phones. Not surprisingly, it's being called the iPhone moment of AI for the PC, as it will allow third-party developers and OEMs to tap into AI and machine learning use cases on the PC like never before.

Rise of Hybrid AI on the edge

With more AI built into the chip itself, Intel foresees an acceleration of on-device AI workloads, especially on PCs, laptops and other parts of the software-defined edge: everything from fast food kiosks to digital electricity meters, from safer healthcare experiences to more integrated factories and beyond.

Whether it's interacting with a fast food restaurant's chatbot to pick the best menu items under 500 calories or reducing the clerical work of doctors and healthcare workers inside hospitals, client devices at the edge of the network will become more intelligent and able to run nimble AI models, including generative AI tasks, locally on the device, according to Sachin Katti, senior vice president and general manager of the Network and Edge Group (NEX) at Intel Corporation.

This is happening for two major reasons. First and foremost, running everything AI-related in the cloud or in datacenters alone isn't exactly cheap. More AI integration into devices at the edge will allow hybrid AI use cases to grow, where larger models and training still happen in the cloud but AI inference and smaller models run locally on the device.

Second, while cloud AI models are vast and segregated (running into a trillion parameters or more), edge devices will need far more integrated AI models (below 30 billion parameters), where AI has to be built into both the hardware and the software, according to Katti.
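To make the hybrid split a little more concrete, here is a minimal Python sketch of how a client app might decide where an inference request runs. It is an illustration under stated assumptions, not anything Intel has published: the `ModelSpec` type, the `route_inference` function and the "fall back to the cloud" rule are hypothetical, and the cutoff simply reuses Katti's "below 30 billion parameters" figure for edge models.

```python
from dataclasses import dataclass

# Hypothetical cutoff taken from the "below 30 billion parameters" figure
# quoted for edge models; this is not an Intel API or specification.
EDGE_PARAM_LIMIT = 30_000_000_000


@dataclass
class ModelSpec:
    """Illustrative description of a model a client app wants to run."""
    name: str
    parameters: int


def route_inference(model: ModelSpec, device_has_accelerator: bool) -> str:
    """Return "device" when the model is small enough to run locally on a
    device with an AI accelerator (such as an NPU), otherwise "cloud"."""
    if device_has_accelerator and model.parameters < EDGE_PARAM_LIMIT:
        return "device"
    return "cloud"


if __name__ == "__main__":
    kiosk_chatbot = ModelSpec("menu-assistant", 7_000_000_000)
    frontier_model = ModelSpec("large-cloud-llm", 1_000_000_000_000)
    print(route_inference(kiosk_chatbot, device_has_accelerator=True))   # device
    print(route_inference(frontier_model, device_has_accelerator=True))  # cloud
    print(route_inference(kiosk_chatbot, device_has_accelerator=False))  # cloud
```

A real hybrid AI stack would weigh far more than parameter count (memory, battery, latency, privacy, network availability), but the basic shape is the same: small models are served on the device, while anything too large, along with training, stays in the cloud.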

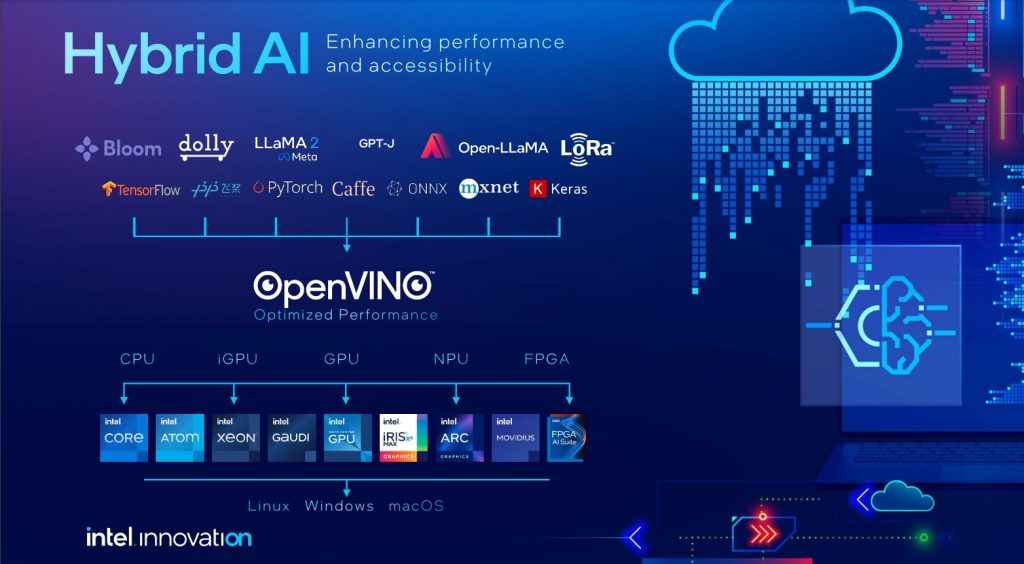

Open source software for the win

To quote Intel CEO Pat Gelsinger, "software defined, hardware enabled" is the way ahead. From the chipmaker's perspective, as devices become more capable of running AI both locally and in the cloud, their efficiency will ultimately be unlocked by the software that runs on top. Developers will play a crucial role in realizing this vision, and Intel is busy creating the right incentives for them.

One of the key incentives for developers is OpenVINO (Open Visual Inference and Neural Network Optimization), Intel's developer toolkit for faster inference of deep learning models. The latest 2023.1 version of OpenVINO demonstrated key optimizations for many GenAI models, including Meta's LLaMA. The general availability of Intel Developer Cloud gives developers access to Intel's latest hardware, allowing them not only to build AI-optimized apps but also to train and optimize AI models. Another key piece is boosting the adoption of oneAPI among developers deploying their code on heterogeneous systems that use more than one kind of processor.

Intel also spoke about Project Strata, coming in 2024. Not much is known about it right now, but according to Pat Gelsinger, Project Strata will play a key role in scaling edge infrastructure to enable hybrid AI, giving developers modular building blocks for building an ecosystem of AI applications more efficiently. At Intel Innovation 2023, everyone from Red Hat to Arm (and other key open source players) endorsed Intel's focus on giving developers the tools and capabilities to code once and deploy anywhere, especially on client and edge platforms.

AI competition with NVIDIA set to intensify

In the arena of AI, NVIDIA is the unquestioned heavyweight, a fact that even Intel acknowledges. But evidence is starting to emerge that Intel is finally gathering some momentum to challenge NVIDIA's supremacy at the AI summit.

According to the industry-standard MLPerf benchmarks, Intel's Gaudi2 came out ahead of NVIDIA's A100/H100 on price to performance in the GPT-J AI workload. Intel's 4th gen Xeon platform also posted CPU-only results on the GPT-J workload at 99.9% accuracy. Sure, this is just a single workload on one specific benchmark, but it's still a big validation for Intel's platform when you factor in the scale of Intel CPUs powering datacenters around the world, which far outnumber NVIDIA GPUs at the moment.

Alongside Intel Core Ultra for PCs and laptops, Intel's 5th gen Xeon CPUs will also launch on December 14, 2023, while Gaudi3 is expected by early 2024. Whether it's AI training or inference, high-performance computing or general cloud, Pat Gelsinger claims Intel will deliver more TOPS (trillions of operations per second) than anyone else in the industry in 2024. Whether or not TOPS is just hype, one thing is clear: Intel's claim that its sheer scale in the market will let it surpass even NVIDIA is a sign that the chipmaker has thrown down the gauntlet in the AI arena. Competition between two tech giants in the AI space is great for the ecosystem as a whole, from developers creating the AI apps of tomorrow to customers and end users like you and me who will use them without even knowing it.

Chip-as-a-Service business model

While this isn't explicitly about AI, Intel's process node upgrades have far-reaching implications not just for the future of AI everywhere but also for the company's own foundry business. Pat Gelsinger's extremely ambitious "5 nodes in 4 years" target remains on track, as the chipmaker works its way back to manufacturing excellence, with production of Intel 18A expected to begin in late 2024. Intel's aggressive march down the nanometer scale and into the angstrom era will give the likes of TSMC and Samsung, currently the two biggest manufacturers of chips for other companies, serious pause.

If Intel matches TSMC's and Samsung's foundry capabilities in the next 12-18 months, it will be able to court and manufacture chips for the likes of Apple, Qualcomm and even NVIDIA, all huge AI players that don't currently have their own chip manufacturing facilities. With a year still to go, quite a few industry analysts remain skeptical of Intel's ambitious turnaround and its arrival in the proverbial chipmaking promised land. But so far, under Pat Gelsinger's talismanic leadership, Intel has met every ambitious deadline it set for itself over the past two years. With billions of dollars' worth of new Intel fabs coming up in the US and Europe to keep pace with its strategic foundry ambitions, it'll take a bold person to bet against an Intel future that looks nothing short of exciting right now.

Jayesh Shinde

Executive Editor at Digit. Technology journalist since Jan 2008, with stints at Indiatimes.com and PCWorld.in. Enthusiastic dad, reluctant traveler, weekend gamer, LOTR nerd, pseudo bon vivant.