Intel’s Sandra Rivera on future of AI, data centers and India’s tech moment

“Anything anyone talks about right now is Generative AI and Large Language Models (LLMs), but AI is so much more than that,” emphasised Sandra Rivera of Intel. Generative AI is just the tip of the iceberg, the current cool kid on the technology block, the ‘it’ thing. In many ways it is the beginning of AI for a lot of people, but it is in no way the end of the subject.

The overall AI workflow also encompasses everything that happens before a machine learning model is trained: data preparation, data management, and data filtering and cleaning. According to Rivera, almost all of that upfront work is done on CPUs, predominantly Intel’s Xeon chips. “Then you get to the model training phase, where you have small to medium sized models, we can address them very effectively with the CPU,” she said, explaining that any model with 10 billion parameters or fewer is very well suited to CPU workloads.

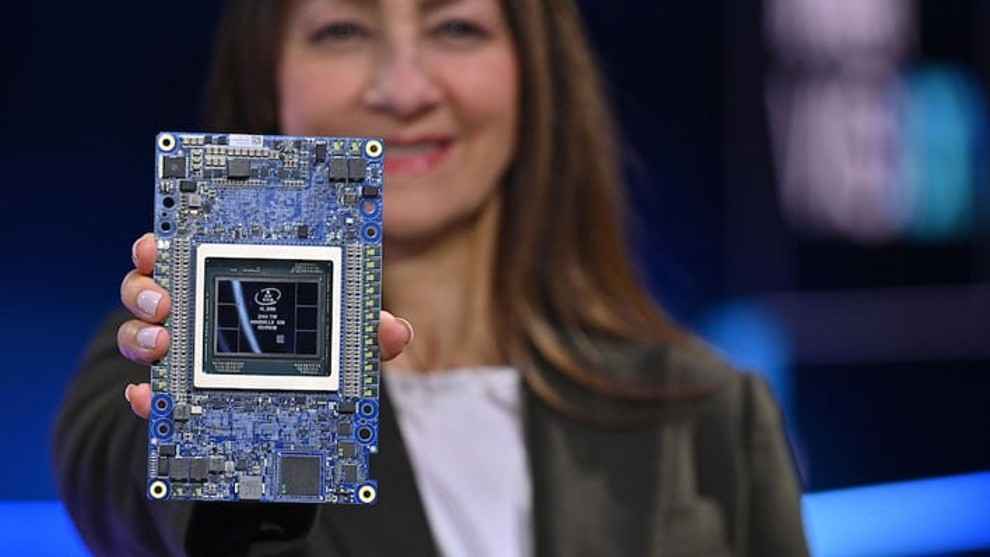

Only with the largest models, those with more than 100 billion parameters, do you need a highly parallel architecture, the kind provided by GPUs and dedicated deep learning accelerators. “That’s where we play with Intel’s Habana Gaudi AI processors, where NVIDIA plays as well,” said Rivera. This is the stage of the AI workflow where the largest models are trained and validated, and where their inference runs.

“Bottom line to remember, AI is complex and multifaceted. Right now there’s a lot of interest around these LLMs and Generative AI deployments in the cloud, we are seeing customers who want to have at least some GPU capability at a dynamic level. But in the long term, you know, the data center market is complex. I know people think it’s all finished, but it’s very early days of AI, we’re just starting, and as Intel we think we have a very good opportunity to play with the breadth of capabilities we have across our portfolio,” summarised Rivera.

I met Sandra Rivera, Executive Vice President & General Manager, Data Center and AI Group, Intel Corporation, at a press briefing organised at Intel’s India Development Center in Bengaluru, Karnataka. In a post-ChatGPT world, where interest in Generative AI tools and applications is so high, Rivera explained how Intel is not only powering AI applications in the data center and cloud but also preparing to challenge NVIDIA’s dominance in GPU-accelerated workloads.

“Everyone knows NVIDIA is doing a great job of delivering GPUs to the market and they’re of course a giant player in the AI space, but we see that for customers CPU is still very much a big part of the equation,” Rivera acknowledged, pointing out that in many cases customers already run their business on Intel Xeon CPUs. The fact that they can also run AI and machine learning workloads on those CPUs has opened up a market opportunity and a tailwind for Intel’s data center business, according to her.

Intel, in case you don’t know, is a powerhouse of the semiconductor industry, especially in CPUs for both consumer and enterprise use. According to Counterpoint Research, Intel remained the leader in the data center CPU market with a 71% share in 2022, while NVIDIA enjoys more than a 90% share of the data center GPU market, according to IDC. Although data centers are still largely dominated by CPUs rather than GPUs, accelerated workloads have grown faster than any other segment of the overall data center pie globally, all thanks to the rise of AI applications and workflows, and that growth has fuelled NVIDIA’s rise. As NVIDIA diversifies into the server CPU market to challenge Intel’s overall data center supremacy, Intel is pulling up its socks, aiming not only to protect its market share but also to make inroads into NVIDIA’s dominance of the GPU market. And since NVIDIA’s H100 GPUs are very expensive, Intel is banking on offering a better overall value proposition, in terms of total cost of ownership, to customers looking for a strong alternative.

Intel’s Habana Gaudi chips are powerful AI training processors that compete head on with NVIDIA’s data center GPUs. Released in 2022, Intel’s Gaudi2 chip is, according to MLPerf benchmark results, the only alternative to NVIDIA GPUs for LLM training, and Rivera was bullish about Intel’s road ahead in this competitive journey.

“We think from a price performance perspective, our offering is strong now and gets even better later this year,” she said, hinting at a major software upgrade to boost Gaudi2’s performance in the MLPerf benchmark results expected to be published in November 2023. “Next year we will have Gaudi3 in the market, which will be competing very effectively with NVIDIA’s H100 and their next generation chips, so we’ll see. Our projections look very good, we have been catching up and doing pretty well with our portfolio, we’re priced very aggressively, and there’s a lot of room in the market for choice. Customers want choice and alternatives, and we absolutely want to be a competitor and alternative to the biggest player in the AI market. It’s going to be what we do, not what we say,” Rivera stated.

“We’ll compete on the merits of our products, our approach is open choice and we don’t believe in locking people in. We are big investors and leaders in building ecosystems,” Rivera reiterated, adding that across the continuum of AI use cases, Intel sees very broad and varied requirements for AI workloads. That means a one-size-fits-all approach doesn’t work, something even Intel’s competitors are realising, according to her.

“Even our competitors are trying to build portfolios that have more than one architecture,” she said. “You see GPU companies building CPUs, you see CPU companies acquiring FPGA assets, and the unifying layer for all of that is in the software.” Over 80 per cent of all AI development happens at the framework level and above, she suggested.

For instance, a biologist doing genome sequencing, a meteorologist working on weather models or a chemical engineer doing oil and gas research doesn’t care what is happening at the level of an AI chip’s kernels or hardware drivers. They only engage at the level of Python or some other AI framework, and largely want to work higher up the software stack. “And the key for us at Intel is to make sure we’re enabling those developers to get access to our innovations through those standard, high level languages and frameworks, and lowering their barrier to entry and easing their market participation as far as AI workloads and deployments are concerned,” according to Rivera.

Talking about the core data center business and the refresh cycles of Intel’s ecosystem partners, Rivera highlighted how the industry has become more focused on driving efficiency and increasing overall infrastructure utilisation.

“One of the interesting things that happened during the pandemic is the supply chain constraints, and we saw customers lean into more utilisation of the infrastructure they have,” Rivera recalled. Refresh cycles were dictated by which workloads were in demand. “If you’re talking about AI, networking, security, customers demanded the latest innovations and cutting edge solutions without wasting any time, but if you look at a lot of the web-tier kind of traffic – like office applications, accounting systems, ERP systems – there we saw customers very focused on increasing utilisation of infrastructure resources they already have,” mentioned Rivera. The same behaviour was observed among Indian data center customers.

According to Intel, much of the optimisation of India’s data center infrastructure is driven by power constraints. “We saw customers optimise around their data center power budget, for instance having power dedicated to leading edge applications and infrastructure and sort of cap some of the power being spent on more entry-level or mainstream applications,” she said.

As far as the data center business is concerned, the total addressable market (TAM) is growing, and nowhere is that more evident than here in India, where digital transformation and digitisation are gathering pace, said Rivera. “You need more compute for data being created, it needs to be processed, compressed, secured and delivered safely over a network. And out of all that you need to get valuable insights,” which is where AI comes in, according to Rivera, with demand further driven by the continued proliferation of 5G in the country.

India isn’t just an important market for Intel; the company is also committed to investing in the country, as it always has in the past, Rivera suggested. “From an R&D perspective, Intel has been here for decades and invested almost $9 billion in ramping up Intel India’s tech capabilities over the years with a workforce that spans over 14,000 so far. Not just that, but we see all the investments that the government is making in digital infrastructure, so the opportunity to build more data centers, enterprise solutions, software ecosystem solutions and services is very exciting,” Rivera underscored.

Rivera’s Data Center and AI division alone has over 3,000 employees in India. “They are working on our CPUs, on FPGAs, our GPUs and AI accelerators. Everything we do under our data center portfolio, from a hardware, silicon development perspective, the team here in India is critical for all of those programs,” mentioned Rivera. In fact, Intel India’s silicon development team in Bengaluru is responsible, together with Intel’s team in Israel, for delivering the Gaudi3 chip, which is coming to market in 2024.

“We are very proud of the capabilities of what the team has done and the quality, execution discipline and the competitiveness of the portfolio being delivered is excellent,” Rivera emphasised.

As far as further investments in India are concerned, Rivera offered some insight into how Intel is working behind the scenes to help ramp up India’s manufacturing capabilities, especially its ODM (original design manufacturer), OEM (original equipment manufacturer) and electronics manufacturing base.

“The ODMs and OEMs we have strong relationships with across the globe are seeing an increased opportunity in India, they’re investing in India and we’re investing with them,” highlighted Rivera. “If you look at what we’ve done over the decades in Taiwan, Malaysia, Vietnam and other parts of the world, in terms of building a robust manufacturing supply chain, we think that a lot of that’s going to happen here in India as well,” she said.

Rivera suggested that electronics manufacturing in particular is an area where Intel will invest in its ecosystem of partners in India, to ensure they can deliver products to market. She expects many multinational companies in the server and ODM businesses to come to India in a big way, which she called very exciting as far as India’s potential is concerned.

Asked whether India can actually scale to match all the excitement and expectations around the historic opportunity in front of it in electronics manufacturing, and whether any hiccups are expected along the way, Rivera brushed off these concerns, sounding nothing but optimistic about the road ahead.

“Of course India can scale, of course India can meet the moment. With the amount of STEM graduates coming out of Indian universities, the growing workforce, the expanding middle class, with all the knowledge workers, there’s absolutely no reason why India can’t be a technology leader and a manufacturing leader in the world,” Rivera said in no uncertain terms. She also said she was happy to see that in India, the number of women graduating with technical degrees is actually outpacing the number of men.

Rivera further highlighted that the skills needed for every single one of her Intel programs, be it CPUs, GPUs, AI accelerators or FPGAs, are present in Intel India’s R&D workforce in Bengaluru. That includes not just hardware expertise but also a software engineering pedigree, which India should be especially proud of, according to Rivera, because without the right, optimised software stack, none of the hardware inside data centers would be of any use.

“We see the entrepreneurial culture growing tremendously in India,” said Rivera, likening it to Silicon Valley in the US and the startup energy in Israel. “When you look at US digital infrastructure – with lots of cable, wires, copper – India just kind of skipped over all of that and went straight to wireless and 5G. India leapfrogged a lot of tech generations of the West and is embracing innovative technologies faster, which is giving rise to innovative business models and use cases. Take a look at the work that Reliance Jio and the likes of Tata has done over the years, I think the pace of technological progress is so much faster here in India,” she emphasised. Rivera has no doubt that India’s technological future is nothing but bright.

Jayesh Shinde

Executive Editor at Digit. Technology journalist since Jan 2008, with stints at Indiatimes.com and PCWorld.in. Enthusiastic dad, reluctant traveler, weekend gamer, LOTR nerd, pseudo bon vivant.