Facebook removes another 3 billion fake accounts in 6 months

Facebook removed more than 3 billion fake accounts between October 2018 and March 2019.

It expanded its Community Standards metrics to cover nine policies.

In Q1 2019, Facebook took down 4 million hate speech posts.

In its campaign against fake accounts and misinformation, and to enforce its Community Standards on the platform, Facebook has revealed in a new report that it disabled a record 1.2 billion fake accounts in the fourth quarter of 2018 and 2.19 billion in the first quarter of 2019. In this third Community Standards Enforcement Report, the company also added data on appeals, restored content, and regulated goods (such as drugs and guns).

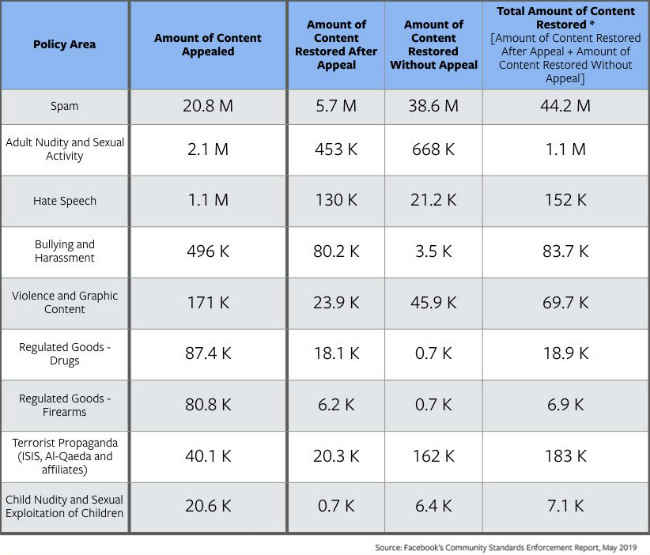

First, the company said that it now includes metrics across nine policies within its Community Standards: adult nudity and sexual activity, bullying and harassment, child nudity and sexual exploitation of children, fake accounts, hate speech, regulated goods, spam, global terrorist propaganda, and violence and graphic content.

According to Facebook, for every 10,000 times people viewed content on Facebook, 11 to 14 views contained content that violated its adult nudity and sexual activity policy, and 25 views contained content that violated its violence and graphic content policy. It also estimates that 5 percent of monthly active accounts are fake. Prevalence was lower still for global terrorist propaganda and for child nudity and sexual exploitation: “We are [also] able to estimate that in Q1 2019, for every 10,000 times people viewed content on Facebook, less than three views contained content that violated each policy,” the company said in a blog post.

Facebook also reported on the actions it took against fake accounts and violating content. For fake accounts, the company said the number of accounts it took action on increased because of automated attacks by bad actors who attempt to create large volumes of accounts at one time. For violating content, the company says that in six policy areas it detected over 95 percent of the content it took action on before anyone reported it.

“For hate speech, we now detect 65 percent of the content we remove, up from 24 percent just over a year ago when we first shared our efforts. In the first quarter of 2019, we took down 4 million hate speech posts and we continue to invest in technology to expand our abilities to detect this content across different languages and regions,” Facebook said.

The report also includes data on how much content was restored after users appealed, and how much content Facebook restored without an appeal.

Lastly, according to the report's new data on regulated goods, in the first quarter of 2019 Facebook took action on about 900,000 pieces of drug sale content, 83.3 percent of which was detected proactively. In the same period, the social media giant took action on about 670,000 pieces of firearm sale content, 69.9 percent of which it detected itself.

Additionally, CEO Mark Zuckerberg rejected calls to break up Facebook, arguing that the company's size is what allows it to tackle the network's problems. “I don't think that the remedy of breaking up the company is going to address [the problem]. The success of the company has allowed us to fund these efforts at a massive level. I think the amount of our budget that goes toward our safety systems… I believe is greater than Twitter's whole revenue this year,” the BBC quoted Zuckerberg as saying on a call with the media.

Digit NewsDesk

Digit News Desk writes news stories across a range of topics, bringing you updates on the latest in the world of tech.