Physical AI chips: Arm’s role in powering this next shift in robotics computing

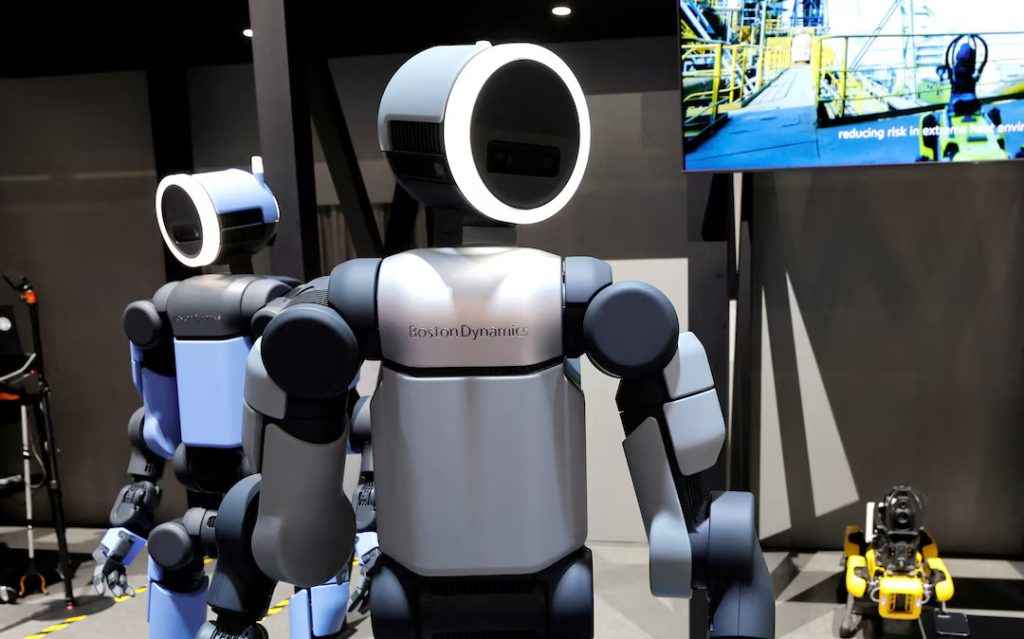

If Generative AI defined the last two years of technology, Physical AI is undoubtedly the defining theme of CES 2026. The concept is simple but profound: AI is moving beyond the screen. It is no longer just generating text or images in the cloud; it is now perceiving, reasoning, and acting in the real world. As NVIDIA CEO Jensen Huang declared at the event, the “ChatGPT moment for physical AI is here.”

The constraints of the real world

Bringing intelligence into the physical world presents a unique set of engineering challenges that cloud-based models simply do not face. A robot or an autonomous vehicle cannot afford the latency of sending data back and forth to a data center. If a humanoid robot slips, it needs to correct its balance in milliseconds, not seconds. Furthermore, these machines operate on battery power, meaning they cannot rely on the energy-hungry cooling systems found in server farms.

This specific demand for high performance combined with extreme energy efficiency has positioned the Arm architecture as the de facto computing foundation for the physical world. The shift to Physical AI is fundamentally a shift from centralized cloud computing to decentralized edge computing. In the data center, raw power is king. In the real world, efficiency is the new performance. This is why the major robotics announcements at CES 2026 all share a common DNA: they are built on Arm.

Silicon for the new machine age

NVIDIA’s flagship platform for humanoid robots, Jetson Thor, serves as a prime example of this architectural dominance. Designed to function as the brain inside a robot’s torso, it delivers massive AI performance to run complex multimodal models locally. At the heart of this system lies the Arm Neoverse V3AE CPU. The “AE” stands for Automotive Enhanced, bringing server-class performance to the edge while integrating critical safety features required for machines that operate near humans.

Qualcomm is following a similar trajectory with its newly unveiled Dragonwing IQ10, a dedicated processor for industrial automation and autonomous mobile robots. Built around the Oryon CPU, which implements the Arm instruction set, the chip leans on heterogeneous computing. By offloading vision tasks to an NPU and control tasks to the Arm CPU, it achieves the efficiency robots need to work long factory shifts without overheating or draining their batteries.

Inheriting automotive safety

This dominance extends beyond raw processing power. The automotive industry has spent decades validating Arm designs for safety, adhering to strict functional safety standards such as ISO 26262. As cars effectively become robots on wheels, the robotics industry is inheriting this trusted safety foundation. Safety cannot be a software patch applied after deployment; it must be baked into the silicon architecture itself.

We are currently witnessing the untethering of AI. The first phase of the AI boom was about training massive models in the cloud using brute force. The second phase is about inference at the edge. As robots leave the factory floor and enter homes, hospitals, and public spaces, they will not be powered by the same chips that run desktop PCs. They will be powered by specialized, efficient, and safe compute platforms. Right now, that platform is Arm.

Vyom Ramani

A journalist with a soft spot for tech, games, and things that go beep. While waiting for a delayed metro or rebooting his brain, you'll find him solving Rubik's Cubes, bingeing F1, or hunting for the next great snack.