DS-STAR explained: Google’s most versatile data science agent yet

Google’s DS-STAR automates complex data science with self-correcting AI loops

New AI agent from Google handles messy, multi-file datasets with human-like reasoning

DS-STAR sets new data science benchmarks, bridging automation and analytical intelligence

When Google Research unveils something with the prefix “DS,” you know it’s about data. But DS-STAR, short for Data Science State-of-the-Art Reasoning agent, is more than just another AI experiment. It’s an autonomous data science assistant that can plan, write, test, and even verify its own analytical code, all while handling the kind of messy, multi-format datasets that usually send even human analysts reaching for coffee.

Launched in November 2025, DS-STAR represents Google’s most ambitious step yet toward end-to-end data automation, a system that doesn’t just generate Python scripts or plots on command, but thinks like a data scientist.

From data wrangler to data reasoner

Most existing AI assistants, even the sophisticated ones, hit a wall when faced with unstructured or heterogeneous data: think folders with a mix of CSVs, JSONs, Markdown files, and text notes. DS-STAR was built to take that challenge head-on.

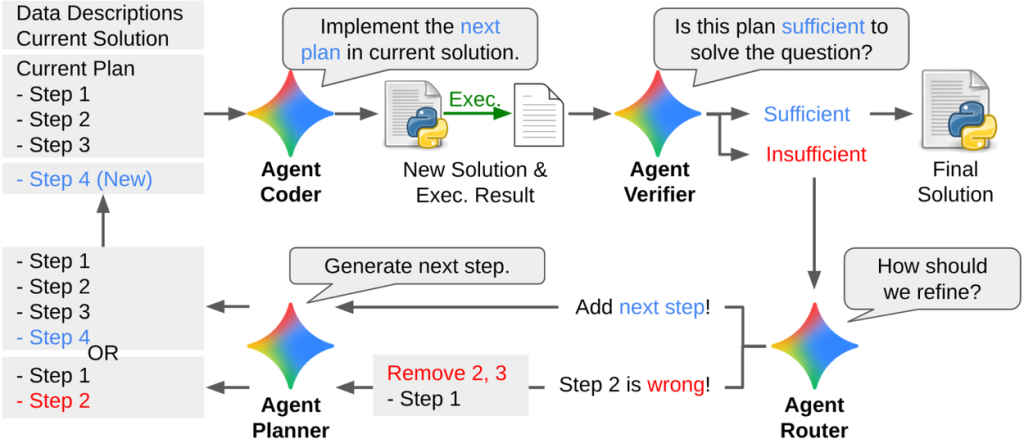

It starts by scanning all the files in a directory and extracting their context and structure through a data file analysis module. From there, four sub-agents (the Planner, Coder, Verifier, and Router) take turns solving the problem.
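The article doesn’t say how the data file analysis module works internally. As a rough illustration only, the Python sketch below walks a directory and turns each CSV, JSON, Markdown or text file into a one-line summary that a planner could read as context. `describe_file` and `describe_directory` are hypothetical names, and these hand-written rules are an assumption standing in for whatever DS-STAR actually does.

```python
import csv
import json
import tempfile
from pathlib import Path


def describe_file(path: Path, max_lines: int = 3) -> str:
    """Return a one-line summary of a single data file (hypothetical helper)."""
    suffix = path.suffix.lower()
    if suffix == ".csv":
        with path.open(newline="", encoding="utf-8") as f:
            rows = list(csv.reader(f))
        header = rows[0] if rows else []
        return f"{path.name}: CSV, columns={header}, data_rows={max(len(rows) - 1, 0)}"
    if suffix == ".json":
        data = json.loads(path.read_text(encoding="utf-8"))
        if isinstance(data, dict):
            return f"{path.name}: JSON object, keys={sorted(data)}"
        if isinstance(data, list):
            return f"{path.name}: JSON array, length={len(data)}"
        return f"{path.name}: JSON scalar ({type(data).__name__})"
    # Markdown, plain text and anything else: preview the first non-empty lines.
    text = path.read_text(encoding="utf-8", errors="replace")
    lines = [ln.strip() for ln in text.splitlines() if ln.strip()]
    return f"{path.name}: text, preview={lines[:max_lines]}"


def describe_directory(root: str) -> str:
    """Summarise every file under root into one context string for the planner."""
    files = sorted(p for p in Path(root).rglob("*") if p.is_file())
    return "\n".join(describe_file(p) for p in files)


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as tmp:
        Path(tmp, "sales.csv").write_text("region,revenue\nnorth,10\nsouth,12\n", encoding="utf-8")
        Path(tmp, "meta.json").write_text('{"currency": "USD", "year": 2024}', encoding="utf-8")
        Path(tmp, "notes.md").write_text("# Notes\nRevenue is in thousands.\n", encoding="utf-8")
        print(describe_directory(tmp))
```

The point of a step like this is that the later agents reason over compact, text-only descriptions of every file rather than over raw bytes in mixed formats.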

The process looks like a relay race between specialists:

- The Planner drafts a strategy based on the dataset.

- The Coder implements that plan in code.

- The Verifier judges whether the output makes sense.

- And the Router decides whether to refine or stop the loop.

This loop repeats up to 10 times, with each round building on the last, much as a human analyst iterates on their work.
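To make the relay concrete, here is a minimal Python sketch of a plan, code, verify and route loop capped at 10 rounds. It is not Google’s implementation: in DS-STAR each role is played by a language model, whereas here the agents are hypothetical toy functions (`toy_planner`, `toy_coder` and so on) passed in as callables. `run_loop`, `AgentState` and the `"refine"`/`"stop"` router outputs are likewise illustrative assumptions.

```python
from dataclasses import dataclass, field
from typing import Callable

MAX_ROUNDS = 10  # the article says the loop runs at most 10 times


@dataclass
class AgentState:
    question: str
    context: str  # e.g. per-file summaries from the data file analysis step
    plan: list[str] = field(default_factory=list)
    code: str = ""
    result: str = ""
    verdict: bool = False
    rounds: int = 0


def run_loop(
    state: AgentState,
    planner: Callable[[AgentState], str],
    coder: Callable[[AgentState], str],
    executor: Callable[[str], str],
    verifier: Callable[[AgentState], bool],
    router: Callable[[AgentState], str],
    max_rounds: int = MAX_ROUNDS,
) -> AgentState:
    """Run plan -> code -> verify -> route until the router stops or the cap is hit."""
    for round_no in range(1, max_rounds + 1):
        state.rounds = round_no
        state.plan.append(planner(state))    # Planner: propose the next step
        state.code = coder(state)            # Coder: turn the whole plan into code
        state.result = executor(state.code)  # run the code (sandboxed in practice)
        state.verdict = verifier(state)      # Verifier: does the output make sense?
        if router(state) == "stop":          # Router: refine again or stop
            break
    return state


# Toy stand-ins for the model-backed agents (all hypothetical).
TOY_STEPS = ["load the revenue table", "sum the revenue column"]


def toy_planner(s: AgentState) -> str:
    return TOY_STEPS[len(s.plan)] if len(s.plan) < len(TOY_STEPS) else "no-op"


def toy_coder(s: AgentState) -> str:
    return "; ".join(s.plan)


def toy_executor(code: str) -> str:
    return "42" if "sum" in code else "partial"


def toy_verifier(s: AgentState) -> bool:
    return s.result != "partial"


def toy_router(s: AgentState) -> str:
    return "stop" if s.verdict else "refine"


if __name__ == "__main__":
    final = run_loop(AgentState("Total revenue?", "sales.csv: CSV"),
                     toy_planner, toy_coder, toy_executor, toy_verifier, toy_router)
    print(final.rounds, final.result)
```

With the toy agents, the first round yields only a partial result, the Verifier rejects it and the Router asks for another round; the second round adds the summing step and the loop stops, printing `2 42`. If the Verifier never approves, `max_rounds` is what ends the loop.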

Beating the benchmarks

On benchmarks like DABStep, KramaBench, and DA-Code, DS-STAR outperformed all previous agents, raising accuracy on DABStep from around 41% to 45% and posting gains on the other benchmarks as well.
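Accuracy gains like this are easy to misread: a move from 41% to 45% is a 4 percentage point gain, but roughly a 10% relative improvement. A quick Python check using the article’s rounded DABStep figures (the function name `improvement` is just for illustration):

```python
def improvement(before_pct: float, after_pct: float) -> tuple[float, float]:
    """Return (absolute gain in percentage points, relative gain in percent)."""
    absolute = after_pct - before_pct
    relative = absolute / before_pct * 100
    return absolute, relative


# Rounded DABStep figures quoted in the article.
points, relative = improvement(41.0, 45.0)
print(f"+{points:.1f} points, about {relative:.1f}% relative")
```

This prints `+4.0 points, about 9.8% relative`.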

But what’s more telling is where it excelled: the hard tasks. These are scenarios with multiple interdependent files, inconsistent schemas, and incomplete metadata, the sort of problems that make real-world analytics messy. In those cases, DS-STAR’s iterative reasoning allowed it to achieve breakthroughs where other systems stalled.

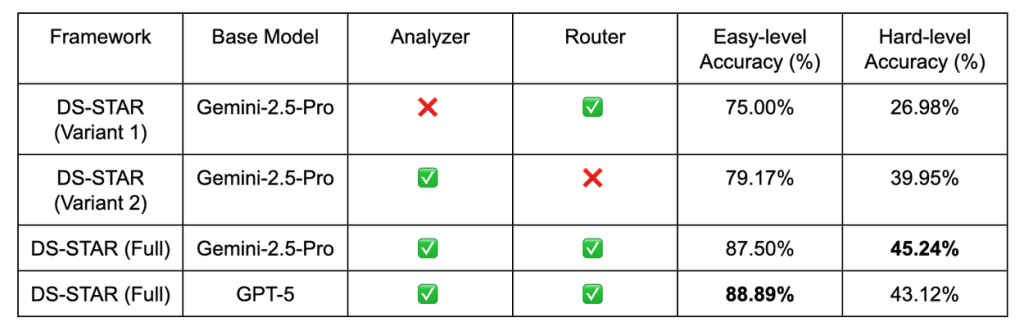

The research team found that removing any one of its modules – like the data analyser or router – led to steep performance drops. Without the analyser, accuracy fell to just 27%, highlighting how crucial contextual understanding is for AI-driven analytics.

How it actually works

Under the hood, DS-STAR is powered by a multi-agent architecture, but it doesn’t rely on one specific large language model. It was tested with Gemini 2.5 Pro, GPT-5, and other LLMs and kept its performance edge across them, suggesting that the design is model-agnostic.
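In software terms, “model-agnostic” usually means the agent logic talks to the language model through a narrow interface, so backends can be swapped without touching the loop. The Python sketch below assumes such an interface: `ChatModel`, `EchoModel` and `plan_step` are hypothetical names, `EchoModel` is a stand-in that simply echoes its prompt, and no real Gemini or OpenAI SDK calls are shown. A real adapter would wrap the relevant vendor client behind the same `complete` method.

```python
from typing import Protocol


class ChatModel(Protocol):
    """Hypothetical minimal interface every model backend must satisfy."""

    def complete(self, prompt: str) -> str: ...


class EchoModel:
    """Hypothetical stand-in backend; a real adapter would wrap a vendor SDK."""

    def __init__(self, name: str) -> None:
        self.name = name

    def complete(self, prompt: str) -> str:
        return f"[{self.name}] {prompt}"


def plan_step(model: ChatModel, question: str) -> str:
    # Agent logic depends only on ChatModel.complete, never on a specific vendor.
    return model.complete(f"Draft the next analysis step for: {question}")


if __name__ == "__main__":
    for backend in (EchoModel("model-a"), EchoModel("model-b")):
        print(plan_step(backend, "total revenue by region"))
```

Because `plan_step` only relies on the `complete` method, swapping one backend for another changes nothing in the agent code itself.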

This flexibility hints at what Google is really testing here: not just a smarter AI, but a framework for autonomous reasoning. The system is designed to build and execute its own workflow, verify outputs, and decide whether more work is needed – a self-correcting loop that moves closer to what researchers call AI cognition.

For professional data scientists, DS-STAR could be a game-changer. Automating the most tedious parts of the workflow – file parsing, cleaning, exploratory analysis – could free up time for higher-order reasoning and interpretation.

For non-experts, it opens the door to asking natural-language questions like “What trends can you find in this directory?” and getting structured insights back, without needing to code.

But its implications stretch far beyond convenience. A system that can reason across diverse data sources could transform investigative journalism, scientific research, and even public policy analysis, areas where valuable insights are often buried under layers of incompatible data formats.

The fine print

Like any cutting-edge system, DS-STAR comes with caveats. It performs best on benchmarked datasets, but real-world data can still introduce new challenges. Its iterative loop is capped at 10 cycles, and the researchers note that complex analyses may need more rounds or human oversight.

There’s also the broader question of trust. When an AI agent writes and verifies its own code, who is ultimately responsible for its conclusions? Google hints at built-in safeguards, but the road to deployable autonomy remains long.

In essence, DS-STAR isn’t just about automating data analysis; it’s about building AI systems that can think critically about their own output. That shift, from passive code generation to active reasoning, could mark a new chapter in how humans and machines collaborate on knowledge discovery.

Vyom Ramani

A journalist with a soft spot for tech, games, and things that go beep. While waiting for a delayed metro or rebooting his brain, you’ll find him solving Rubik’s Cubes, bingeing F1, or hunting for the next great snack.