AI chips: How Google, Amazon, Microsoft, Meta and OpenAI are challenging NVIDIA

Big tech giants design custom AI chips to rival NVIDIA’s dominance

Google, Amazon, Microsoft, Meta, and OpenAI pursue full-stack AI control

Custom silicon promises faster, cheaper, and more efficient AI infrastructure

For most of the past ten years, NVIDIA’s GPUs have been the preferred engines of the AI revolution – the silicon inside every large language model, recommendation system, and self-driving car algorithm worth its code. But a tectonic shift is underway in the AI chips landscape.

The very companies that helped make NVIDIA worth over $5 trillion in market cap and indispensable to the AI juggernaut – Google, Amazon, Microsoft, Meta, and OpenAI – are now designing chips of their own. It’s a familiar move, one that mirrors Apple’s playbook of owning the full stack, from silicon to software. The goal is not just to run faster, but to change the rules of the race.

Here’s how the world’s biggest software companies are quietly turning into hardware companies, thanks to the GenAI boom.

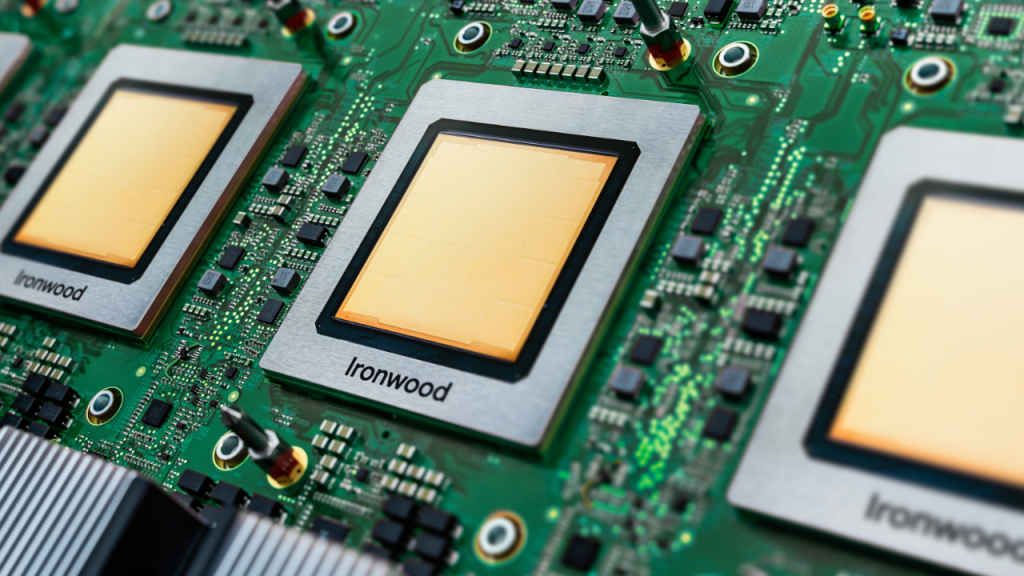

1) Google: Ironwood TPU

Google’s latest custom chip, the Ironwood Tensor Processing Unit (TPU), is the company’s seventh-generation AI processor – and its most ambitious yet. It delivers four times the performance of its predecessor and can scale into vast “superclusters” of up to 9,216 interconnected chips. This isn’t just for bragging rights: Google is responding to the bottlenecks that plague distributed AI training, trying to reduce the latency and communication overhead that slow down large-scale models.

Ironwood is designed with today’s massive workloads in mind – everything from large language models to multimodal AI systems. You’d be mistaken to dismiss Google’s TPU in a hurry, as Anthropic has already committed to deploying up to one million of these TPUs – an investment that signals how Google intends to keep its AI ecosystem vertically integrated, from TensorFlow to Gemini.

2) Amazon: Trainium and Project Rainier

Amazon’s approach to AI hardware is quite bold. Under the Trainium brand, AWS has been quietly iterating on its own chips for both training and inference. The newest, Trainium2, powers Project Rainier – a sprawling supercomputer built on nearly 500,000 of these chips. You read that right: half a million processors forming the backbone of Amazon’s generative AI ambitions.

While Trainium2 may not yet match NVIDIA’s top-end GPUs in sheer performance, it’s been designed to do something arguably more important – offer predictable, cost-efficient scaling inside AWS. Customers building AI models on Amazon’s cloud get the tightest integration possible with other AWS services, without paying NVIDIA’s premium pricing.

Internally, Amazon engineers are reportedly still ironing out stability and latency issues, but the direction is clear – less dependency on external vendors, more control over the silicon that powers the world’s largest cloud.

3) Microsoft: Cobalt and Maia for Azure

Microsoft, too, has been playing its long game in silicon. The company’s Cobalt CPU and Maia AI chips are now running in Azure data centers, powering both its own AI workloads and those of customers like OpenAI. Where Google and Amazon emphasize performance, Microsoft’s focus has been efficiency – not just computational but thermal.

That’s why Redmond’s engineers have been experimenting with microfluidic cooling – a technology that circulates liquid directly over chip surfaces, maintaining optimal temperatures while slashing energy consumption.

Combined with its colossal capital expenditure (tens of billions per quarter on infrastructure), Microsoft’s chip strategy is about sustaining the rapid expansion of AI services like Copilot without being held hostage by supply shortages. In the long run, expect Cobalt and Maia to quietly underpin the Azure AI ecosystem, giving Microsoft tighter margins and greater sustainability credibility.

4) Meta: MTIA and Artemis for the AI metaverse

For all the talk of social networking, Meta has quietly become one of the most sophisticated AI hardware players in the world. Its Meta Training and Inference Accelerator (MTIA) chips, now in their second generation, are optimized for the company’s massive internal workloads – from recommendation algorithms to generative AI and the metaverse’s 3D models. The next project on deck, Artemis, aims to push performance and efficiency even further.

Meta’s strategy is aggressively vertical. The company recently acquired chip startup Rivos, a move that signals its intent to go deeper into custom silicon design. It’s a hedge against NVIDIA’s skyrocketing GPU prices, but also a philosophical shift: Mark Zuckerberg’s engineers are tailoring silicon for Meta’s own flavour of AI – multimodal, social, and real-time. As Meta builds toward its vision of embodied AI (virtual assistants and AR experiences that feel alive), these chips could become as foundational to its future as the News Feed once was.

5) OpenAI: Custom AI chip with Broadcom

Perhaps the most intriguing new entrant in the chip wars is OpenAI itself. The company has partnered with Broadcom to co-design custom AI accelerators – hardware purpose-built to run OpenAI’s models with maximum efficiency. These chips will tightly integrate compute, memory, and networking at the rack level, effectively creating a “model-first” data center rather than a “hardware-first” one.

Deployment isn’t expected before late 2026, but the implications are massive. For OpenAI, this move is about control – owning the entire AI stack, from the algorithms to the datacenter floor. It’s also about economics. With training runs of models like GPT costing hundreds of millions of dollars, even single-digit percentage gains in performance per watt translate into astronomical savings. Think of it as OpenAI’s equivalent of Tesla building its own batteries: a vertical play to future-proof the business.

Why AI is creating new chipmakers

It’s an unmistakable pattern. Big tech companies like Google, Amazon, Microsoft, Meta and OpenAI aren’t content to merely use AI chips anymore – they want to own them. The same way Apple’s M-series chips let it control everything from app performance to battery life, these AI superpowers are realizing that software innovation alone isn’t enough when hardware is the constraint. NVIDIA may still reign supreme, but its crown is being challenged not by chip startups, but by its biggest customers.

Jayesh Shinde

Executive Editor at Digit. Technology journalist since Jan 2008, with stints at Indiatimes.com and PCWorld.in. Enthusiastic dad, reluctant traveler, weekend gamer, LOTR nerd, pseudo bon vivant.