DGX Spark, NVLink Fusion, RTX PRO Servers – NVIDIA’s Full AI Stack Revealed

At COMPUTEX 2025, NVIDIA CEO Jensen Huang returned to Taipei with his trademark leather jacket and an arsenal of announcements that reinforce the company’s vision of a world driven by AI factories. From partnerships with Foxconn and TSMC to rack-scale systems, semi-custom silicon, and desktop AI workstations, this year’s keynote was less about launching a single chip and more about building the infrastructure for AI at every level — from cloud to desktop. Here’s a breakdown of everything NVIDIA revealed.

RTX PRO Servers and Enterprise AI Factory

Not all AI factories need 10,000 GPUs. For enterprises looking to deploy AI on-premises, NVIDIA introduced its new RTX PRO Servers, built around RTX PRO 6000 Blackwell Server Edition GPUs.

These servers support up to 8 GPUs and come bundled with BlueField DPUs, Spectrum-X networking, and NVIDIA AI Enterprise software. The emphasis here is on universal acceleration — allowing enterprises to run everything from multimodal AI inference and graphics to simulation and design workloads.

NVIDIA also introduced a validated reference design called the “Enterprise AI Factory.” Major OEMs such as Dell, HPE, Cisco, Lenovo, and Supermicro will ship servers based on this spec. Consulting giants including TCS, Accenture, Infosys, and Wipro are already gearing up to help enterprises transition to Blackwell-based AI infrastructure.

DGX Spark and DGX Station: Personal AI Workstations

One of the most interesting announcements for the AI developer community was the introduction of DGX Spark and DGX Station — high-end personal computing systems built for local AI workloads.

The DGX Spark is powered by the GB10 Grace Blackwell Superchip and delivers up to 1 petaflop of AI performance at FP4 precision. With 128GB of unified memory, it’s designed for developers and researchers working on generative AI models who need to keep their data local and private.

The DGX Station takes things up several notches. Built around the GB300 Grace Blackwell Ultra Desktop Superchip, it delivers up to 20 petaflops of AI performance and packs 784GB of unified system memory. NVIDIA’s Multi-Instance GPU (MIG) technology lets it be partitioned into as many as seven isolated GPU instances, and ConnectX-8 SuperNICs provide up to 800Gb/s of networking.

Both systems run the same software stack as NVIDIA’s DGX Cloud infrastructure and support industry-standard tools like PyTorch, Ollama, and Jupyter, making it easier to move workloads between local, cloud, and hybrid environments. ASUS, Dell, GIGABYTE, HP, MSI, and others are set to ship these systems starting in July.

The Foxconn-NVIDIA-Taiwan AI Factory

The biggest announcement centred on a new AI factory supercomputer being built in Taiwan. In partnership with Foxconn’s Big Innovation Company, NVIDIA will provide the Blackwell-powered infrastructure, specifically 10,000 Blackwell GPUs, that forms the heart of a national AI cloud system.

The AI factory will serve as the backbone for Taiwanese R&D efforts, with stakeholders including the Taiwan National Science and Technology Council and TSMC. The latter plans to leverage this infrastructure to dramatically accelerate semiconductor development.

This system isn’t just about compute — it’s a symbol of sovereignty. The goal: make Taiwan a “smart AI island” filled with smart cities, smart factories, and AI-native industries.

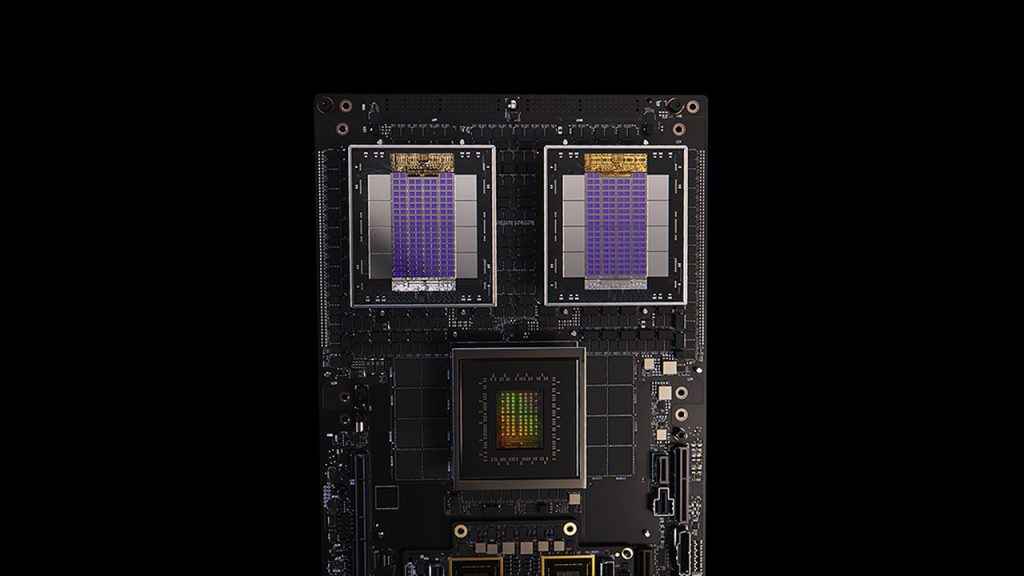

NVLink Fusion: Semi-Custom Silicon for AI Factories

NVIDIA also announced NVLink Fusion — a new silicon interconnect platform that lets partners like MediaTek, Marvell, Alchip, and Qualcomm pair their own CPUs or custom silicon with NVIDIA GPUs. In essence, it enables industries to build semi-custom AI infrastructure by combining their compute IP with NVIDIA’s scale-out capabilities.

Partners including Fujitsu and Qualcomm plan to integrate their CPUs into NVIDIA’s architecture, enabling a new class of sovereign, power-efficient AI data centres.

NVLink Fusion builds on NVLink, NVIDIA’s scale-up interconnect, which in its fifth generation delivers up to 1.8TB/s of bandwidth per GPU and links GPUs at rack scale with low latency. NVIDIA sees this as a necessary evolution for AI, one where hyperscalers and sovereign cloud providers can build their own systems tailored to specific national or enterprise needs.

DGX Cloud Lepton: A Global GPU Marketplace

For developers and enterprises that don’t want to build in-house infrastructure, NVIDIA launched DGX Cloud Lepton — a global compute marketplace that connects developers to GPU capacity offered by cloud partners.

Participating vendors include CoreWeave, Foxconn, Lambda, and SoftBank, among others. Lepton lets developers reserve GPU capacity, from Blackwell to Hopper, in specific regions, offering flexibility for data-sovereignty requirements, low-latency applications, or long-running workloads.

Lepton is tightly integrated with NVIDIA’s AI stack, including NIM inference microservices, NeMo, and Blueprints. For cloud providers, it also provides real-time GPU health monitoring and root-cause diagnostics — reducing operational complexity.

In parallel, NVIDIA also introduced “Exemplar Clouds” — a reference framework for cloud partners to ensure security, resiliency, and performance, using NVIDIA’s best practices.

Quantum Gets a Boost: ABCI-Q Supercomputer

Though not a front-and-centre announcement, NVIDIA also revealed it is powering the world’s largest quantum research supercomputer — ABCI-Q — located at the G-QuAT centre in Japan. The system uses over 2,000 H100 GPUs and supports hybrid workloads that combine quantum processors from Fujitsu, QuEra, and OptQC with GPU-accelerated AI.

NVIDIA’s CUDA-Q platform provides the orchestration layer here, allowing researchers to simulate and run quantum-classical hybrid applications. The hope is that this system will help fast-track quantum error correction, algorithm testing, and broader commercial use cases.

The Era of AI Factories

This year’s COMPUTEX keynote was less about flashy product launches and more about NVIDIA’s continued evolution into an AI infrastructure company. While the Blackwell architecture continues to power most of the announcements, the bigger story is how NVIDIA is positioning itself as the backbone of AI — whether that’s sovereign clouds in Taiwan, semi-custom silicon via NVLink Fusion, or personal AI machines for developers.

From hyperscale to desktop, NVIDIA wants to be the platform on which the next decade of AI innovation is built — and at COMPUTEX 2025, Jensen Huang made it clear that the AI factory era is no longer aspirational. It’s already here.

COMPUTEX 2025 runs from Tuesday, May 20 to Friday, May 23, 2025, and Digit is on the ground covering the show. Head to Digit’s Computex 2025 Hub to see the latest announcements from COMPUTEX as they happen.

Mithun Mohandas

Mithun Mohandas is an Indian technology journalist with 14 years of experience covering consumer technology. He is currently Managing Editor at Digit. Mithun has a background in Computer Engineering and was an active member of the IEEE during his college days. He has a penchant for digging deep to unravel what makes a device tick. If there's a transistor in it, Mithun's probably going to rip it apart till he finds it. At Digit, he covers processors, graphics cards, storage media, displays, and networking devices, as well as anything developer related. As an avid PC gamer, he prefers RTS and FPS titles, and can be quite competitive in a race to the finish line. He only gets consoles for the exclusives. He can be seen playing Valorant, World of Tanks, HITMAN, and the occasional Age of Empires, or lending his voice to hundreds of Digit videos.