Community Notes to fact-check: X becoming a playground for AI?

X automates Community Notes with AI bots, raising concerns about accuracy, transparency, and human oversight.

Grok and AI-written fact-checks now dominate X, replacing traditional human moderation with algorithmic truth.

Misinformation meets automation: X hands over context-building to AI in a bot-saturated digital battleground.

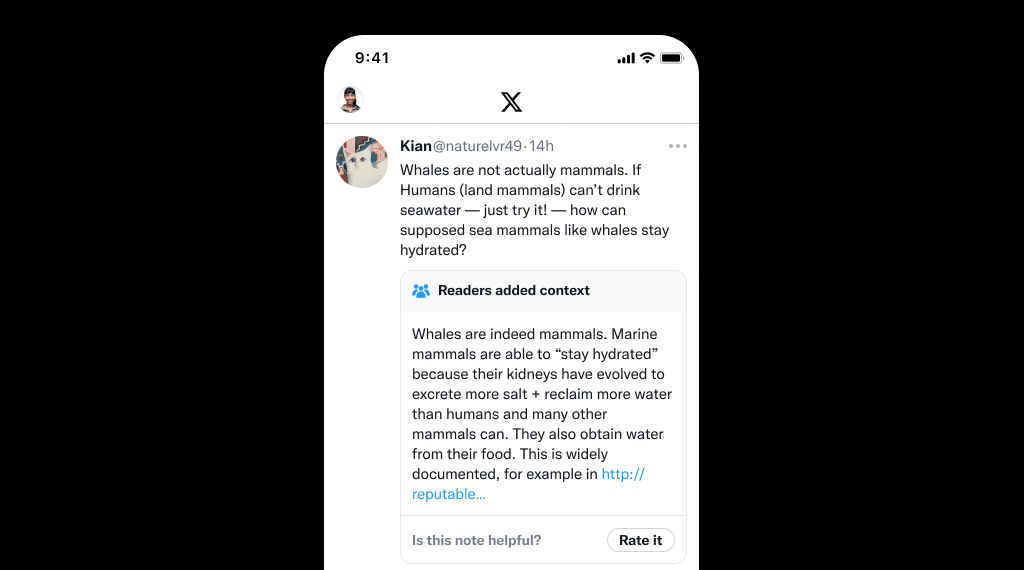

X, formerly known as Twitter, has quietly begun one of its most significant experiments yet, using AI-generated Community Notes to fact-check viral posts. Community Notes was originally launched as Birdwatch in 2021. It allowed users across ideological lines to collaboratively add helpful context to misleading or incomplete tweets. The system became a flagship feature under Musk’s ownership, symbolizing his commitment to “free speech through context, not censorship.”

But now, that context may no longer come from humans. The move marks a turning point: from a decentralized system powered by transparency and collaboration to one increasingly dependent on machines that few users truly understand or can scrutinize. What was once a proudly human, crowdsourced effort to combat misinformation is now being handed over, at least in part, to machines.

AI will now draft the facts

Community Notes was introduced as a way to crowdsource truth. Users across ideologies could propose notes, and only when those notes were rated helpful by a politically diverse set of contributors would they be published. Now, X has rolled out a system where AI bots can automatically write suggested notes when users request context. These AI-written notes are reviewed and rated by human contributors before going live. However, the drafting process is no longer human-first.
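The publishing rule described above, where a note goes live only when raters from different viewpoints agree it is helpful, can be illustrated with a small sketch. This is a deliberately simplified illustration, not X's actual scoring algorithm: the function name `should_publish`, the viewpoint labels, and both thresholds are assumptions made up for this example.

```python
from collections import defaultdict


def should_publish(ratings, min_per_group=2, min_helpful_share=0.6):
    """Toy cross-viewpoint agreement check (hypothetical, not X's real scoring).

    ratings: list of (viewpoint_group, helpful) pairs, where helpful is a bool.
    A note passes only if at least two distinct groups rated it, every group
    has at least `min_per_group` ratings, and every group's share of
    "helpful" ratings is at least `min_helpful_share`.
    """
    votes_by_group = defaultdict(list)
    for group, helpful in ratings:
        votes_by_group[group].append(helpful)

    # Agreement inside a single camp is not enough.
    if len(votes_by_group) < 2:
        return False

    for votes in votes_by_group.values():
        if len(votes) < min_per_group:
            return False
        if sum(votes) / len(votes) < min_helpful_share:
            return False
    return True


# Hypothetical ratings for three notes.
broad_agreement = [("A", True), ("A", True), ("B", True), ("B", True)]
one_sided = [("A", True)] * 5
split = [("A", True), ("A", True), ("B", True), ("B", False), ("B", False)]

print(should_publish(broad_agreement))
print(should_publish(one_sided))
print(should_publish(split))
```

Under these assumed thresholds, only the first note would be published. The point of the sketch is that the check does not care who drafted the note, human or bot; it only looks at how the ratings are spread across groups.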

X claims this will allow it to scale fact-checking, generate context faster, and improve coverage of viral or misleading content. Bots never sleep, and in theory they can keep pace with the platform’s nonstop flow of misinformation. Here’s the problem: Community Notes was never meant to be fast. It was slow because it prioritized deliberation, cross-ideological input, and trust. People trusted it precisely because it wasn’t algorithmic: it was written by real users with transparent motivations and visible consensus.

By letting bots write the first draft, X is shifting from collaborative fact-checking to automated annotation, which raises serious concerns about nuance, accuracy, and bias. AI systems can repeat existing patterns, misinterpret context, or introduce subtle distortions. And with no public insight into how these AI bots are trained or evaluated, users are being asked to trust machines that they cannot see.

Many users already rely on Grok to fact-check posts, summarize trending discussions, or add context that Community Notes hasn’t caught up with. But Grok, like any large language model, is not immune to hallucinations, outdated information, or biased outputs, especially when it pulls from a firehose of unreliable user content. Unlike Community Notes, Grok’s responses are not subject to peer review, and there is no transparency around its source data. This means X will have two different AI systems, Community Notes bots and Grok: one writes fact-checks while the other acts as a real-time arbiter of truth, and neither is fully accountable.

The context: X is already drowning in bots

It’s important to understand what environment these AI fact-checkers are operating in. Since Musk’s takeover in late 2022, bot activity and hate speech on the platform have surged dramatically. A 2023 analysis by CHEQ found that invalid traffic (including bots and fake users) jumped from 6% to 14% post-acquisition. The same report noted a 177% increase in bot-driven interactions on paid links. Meanwhile, hate speech reports rose by over 300% in some categories, according to a 2023 Center for Countering Digital Hate study.

That matters because Community Notes was supposed to be the platform’s solution to misinformation and manipulation, a user-powered defense. Now it is being squeezed from both sides: malicious bots flooding timelines, and “helpful” bots trying to clean up after them. The most important part of any fact-check is judgment, something AI still lacks. Context, tone, satire, cultural nuance, and evolving political narratives are things machines struggle to interpret.

An AI-written note might get the surface-level fact correct while missing the larger misdirection entirely. Even if human review remains in place, the very act of outsourcing the writing of notes to bots signals a shift away from collective human responsibility. And if these AI systems fail, the blame will be as diffuse and faceless as the code behind them.

A system at risk of losing its soul

X has become a platform increasingly defined by automation. After cutting thousands of staff, including much of its trust and safety team, the company has leaned heavily on algorithms for moderation, recommendation, and now truth itself. Community Notes was once a rare example of a social feature built on trust, slowness, and human credibility. By turning it into an AI tool, X is making the system faster, but possibly hollowing it out in the process.

There’s a reason fact-checking requires people. Facts are easy; context is hard. And when machines are allowed to define truth, especially in a digital ecosystem already flooded with bots and hate speech, the very idea of trustworthy context begins to erode. Faster is not always better. If truth is delegated to AI, and oversight is scaled down to match, what happens to public trust? X may soon find that in the rush to automate truth, it has automated away credibility.

Vyom Ramani

A journalist with a soft spot for tech, games, and things that go beep. While waiting for a delayed metro or rebooting his brain, you’ll find him solving Rubik’s Cubes, bingeing F1, or hunting for the next great snack.