ROCm 7: AMD’s big open-source bet on the future of AI

If 2023 and 2024 were the years NVIDIA set the pace for AI acceleration, 2025 is shaping up to be the year AMD answers back with more than just hardware. At its flagship “Advancing AI” event, AMD not only launched the Instinct MI350 series accelerators but also introduced ROCm 7, its latest iteration of the open AI software platform that’s quietly become a centrepiece of its AI ambitions.

While silicon often steals the limelight, software is where most of the AI magic actually happens. And for AMD, ROCm 7 is a substantial evolution that aims to close the gap with NVIDIA’s CUDA ecosystem, expand AI software support to a wider range of devices, and finally position AMD as a true end-to-end AI player, from cloud datacentres all the way to client PCs.

What is ROCm 7?

ROCm, originally short for Radeon Open Compute, is AMD’s open-source software platform designed to enable high-performance computing (HPC) and AI workloads on its GPUs. Launched in 2016, it was originally aimed at data scientists and developers working on scientific simulations and neural networks.

With ROCm 7, AMD is clearly pivoting towards a full-stack AI platform that supports both training and inference at scale. And this isn’t just a tweak under the hood. ROCm 7 brings a generational leap, supporting newer low-precision formats, enabling better distributed computing, and rolling out for the first time with Windows support. Yes, including WSL.

From improved developer tools to new model-serving frameworks, ROCm 7 represents a significant effort to open up AI development to more platforms and more people.

What’s new in ROCm 7?

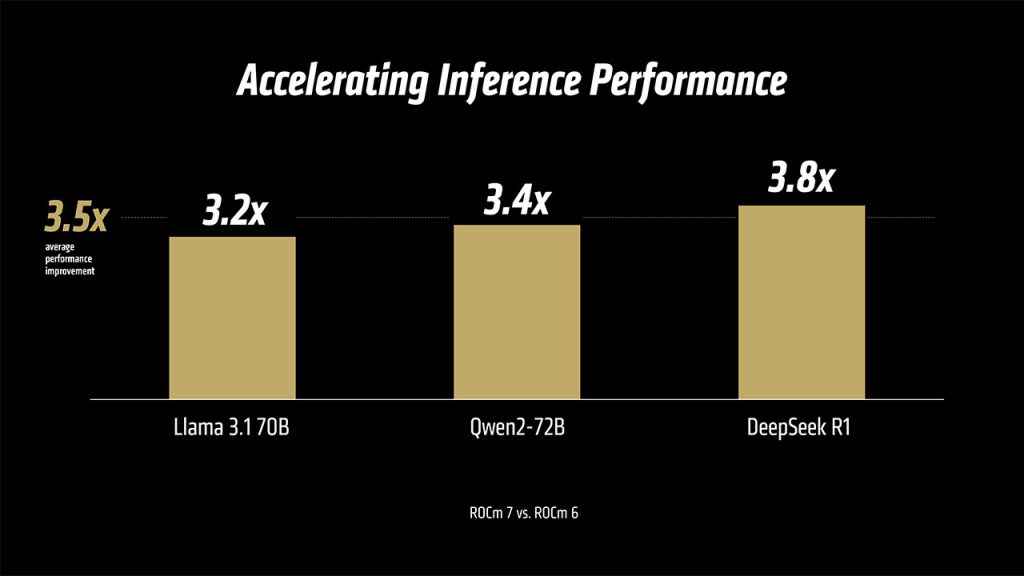

Performance Uplift: At the heart of ROCm 7’s improvements is raw speed. AMD claims up to a 3.5× improvement in inference workloads and 3× gains in training performance compared to ROCm 6. That’s not just theoretical marketing fluff: the company showcased several benchmarked models, including Meta’s Llama 3.1-70B, Alibaba’s Qwen2-72B, and DeepSeek R1. For developers working with transformer-heavy LLMs, these aren’t minor boosts. The optimisations in ROCm 7 come from both better memory utilisation and new support for low-precision data types, which can significantly reduce compute overhead.

Support for FP4 and FP6 Low-Precision Formats: One of the marquee features in ROCm 7 is support for FP4 and FP6, two ultra-low-precision data formats designed for high-throughput inference. These formats are particularly relevant to AMD’s new Instinct MI350X and MI355X accelerators, which are built on the CDNA 4 architecture and have native support for FP4 and FP6 matrix operations.

For comparison, NVIDIA’s TensorRT-LLM and Hopper architecture lean heavily into FP8 and INT4, so AMD’s adoption of FP4/FP6 keeps it competitive on the efficiency front. These formats reduce memory footprint, bandwidth demands, and power consumption while maintaining acceptable accuracy for many AI inference tasks.
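
To make that trade-off concrete, here is a minimal Python sketch. It is not AMD’s implementation. It assumes the E2M1 layout for FP4 (one sign bit, two exponent bits, one mantissa bit, as in the OCP Microscaling formats), whose representable magnitudes are 0, 0.5, 1, 1.5, 2, 3, 4 and 6, and it uses one shared scale per block purely for illustration. The function names and the 70-billion-parameter model size are illustrative assumptions.

```python
# Magnitudes representable by a 4-bit E2M1 float (1 sign, 2 exponent, 1 mantissa bit).
FP4_E2M1_MAGNITUDES = (0.0, 0.5, 1.0, 1.5, 2.0, 3.0, 4.0, 6.0)


def quantize_block_fp4(values):
    """Round a block of floats to FP4 values sharing one scale (illustrative scheme)."""
    if not values:
        return []
    peak = max(abs(v) for v in values)
    # Map the largest magnitude in the block onto 6.0, the largest FP4 value.
    scale = peak / 6.0 if peak > 0 else 1.0
    quantized = []
    for v in values:
        target = abs(v) / scale
        nearest = min(FP4_E2M1_MAGNITUDES, key=lambda m: abs(m - target))
        quantized.append((-nearest if v < 0 else nearest) * scale)
    return quantized


def weight_footprint_gb(num_params, bits_per_weight):
    """Raw weight storage in gigabytes (10**9 bytes), ignoring scales and metadata."""
    return num_params * bits_per_weight / 8 / 1e9


if __name__ == "__main__":
    print(quantize_block_fp4([0.02, -0.7, 1.3, 3.0]))
    for name, bits in (("FP16", 16), ("FP8", 8), ("FP6", 6), ("FP4", 4)):
        print(f"{name}: {weight_footprint_gb(70e9, bits):.1f} GB for 70B weights")
```

Under these assumptions, a 70-billion-parameter model needs roughly 140 GB for its weights in FP16 but about 35 GB in FP4, at the cost of each weight snapping to one of only eight magnitudes per block.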

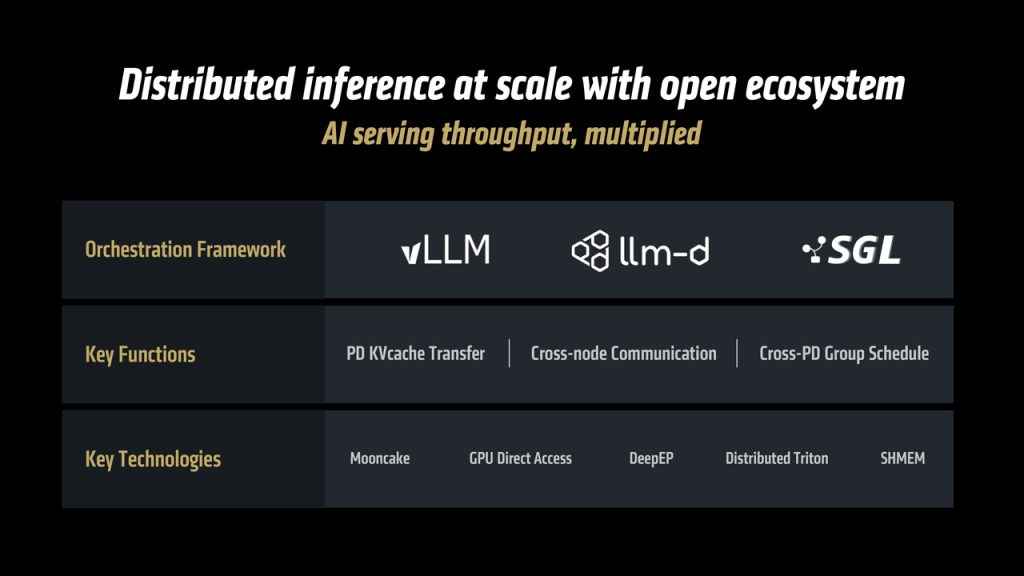

Distributed Inference and Training Support: Large model deployment isn’t just about one GPU anymore. ROCm 7 brings native support for distributed inference and training, with full integration into frameworks like vLLM, SGLang, and llm-d. These are the backbones for production-grade LLM serving pipelines, enabling efficient attention caching, dynamic batching, and multi-node scaling. This is especially relevant for developers deploying LLM chatbots, search engines, and recommendation systems, where latency and throughput are critical.
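
Dynamic batching, one of the techniques named above, can be illustrated in a few lines of Python. This is a toy sketch and not how vLLM, SGLang or llm-d actually implement it: the `form_batches` helper, the `(request_id, prompt_tokens)` tuples and the token budget are hypothetical, and real servers do this continuously as requests arrive and finish rather than over a fixed list.

```python
from collections import deque


def form_batches(requests, max_batch_size, max_batch_tokens):
    """Greedily pack queued (request_id, prompt_tokens) pairs into batches."""
    queue = deque(requests)
    batches = []
    while queue:
        batch, tokens = [], 0
        while queue and len(batch) < max_batch_size:
            _, prompt_tokens = queue[0]
            # Always admit the first request so an oversized prompt cannot stall the queue.
            if batch and tokens + prompt_tokens > max_batch_tokens:
                break
            batch.append(queue.popleft())
            tokens += prompt_tokens
        batches.append(batch)
    return batches


if __name__ == "__main__":
    pending = [("a", 300), ("b", 500), ("c", 400), ("d", 100)]
    for batch in form_batches(pending, max_batch_size=3, max_batch_tokens=1000):
        print([request_id for request_id, _ in batch])
```

The point of the token budget is that batch size alone is a poor proxy for GPU load: a few long prompts can cost more than many short ones.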

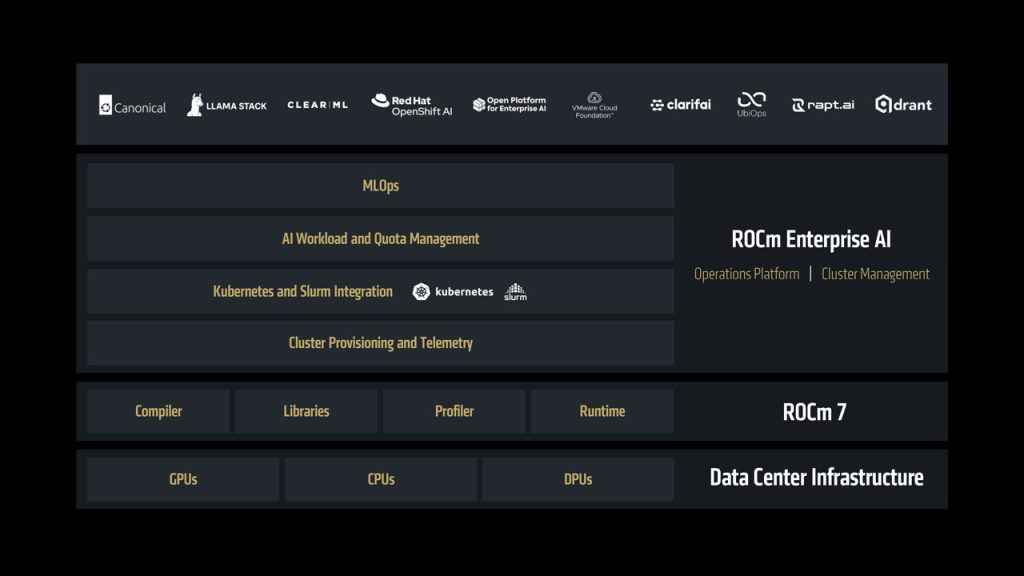

Enterprise AI Solutions and MLOps: ROCm 7 is also introducing a dedicated Enterprise AI layer that’s targeted at production deployment. This includes a ROCm MLOps stack with model fine-tuning, secure scaling, logging, and integration with tools like Kubernetes, MLflow, and Prometheus. In other words, ROCm is positioning itself as a viable AI operations backbone. There’s also built-in support for AI workload security policies, role-based access control, and encrypted model storage, all of which are necessary for deployment in regulated industries.

Expanded Hardware and OS Support

Radeon and Ryzen AI Support: One of the most noticeable changes with ROCm 7 is its expanded hardware compatibility. Developers can now tap into ROCm on select Radeon GPUs and Ryzen AI processors. While performance on Radeon cards won’t match Instinct-class accelerators, it gives students, hobbyists, and prosumers a more affordable way to experiment with ROCm and AI workloads.

This shift could be key to driving grassroots developer adoption, an area where NVIDIA has historically held a significant edge with CUDA.

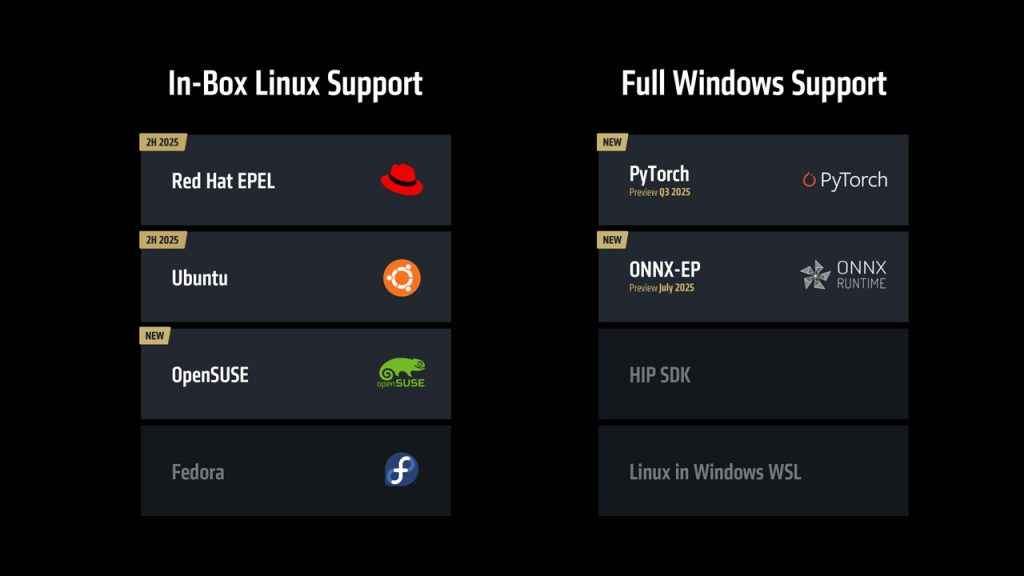

Windows Support (and WSL): Arguably the most overdue feature, ROCm 7 now supports Windows. Official support includes both native Windows and WSL2 environments, which means data scientists who primarily use Visual Studio Code or prefer Windows-based workstations can now join the ROCm ecosystem.

This is a strategic move. With so many AI developers on Windows (especially in enterprise and education), AMD is finally opening the gates to a massive user base that previously relied on dual-booting Linux or using Docker containers.

Unified Dev Experience: Cloud to Client: The goal here is simple: build once, deploy anywhere. Whether you’re training an LLM on an MI300X cluster in the cloud or prototyping on a Radeon-equipped laptop, ROCm 7 maintains a consistent API and developer experience. Combined with HIP (Heterogeneous-Compute Interface for Portability), this enables seamless code portability across AMD’s full stack.
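
At the framework level, this portability is easiest to see in PyTorch. The short sketch below is an illustration rather than anything AMD published: it relies on the fact that ROCm builds of PyTorch expose AMD GPUs through the familiar `torch.cuda` namespace and set `torch.version.hip`, so the same device-selection code can run on either vendor’s hardware. The function name `describe_accelerator` is made up for this example.

```python
def describe_accelerator():
    """Return a short description of the GPU backend PyTorch was built for."""
    try:
        import torch
    except ImportError:
        return "PyTorch is not installed"

    if not torch.cuda.is_available():
        return "No GPU visible to PyTorch; running on CPU"

    # ROCm builds of PyTorch reuse the torch.cuda namespace and set torch.version.hip.
    hip_version = getattr(torch.version, "hip", None)
    backend = f"ROCm/HIP {hip_version}" if hip_version else f"CUDA {torch.version.cuda}"
    return f"{torch.cuda.get_device_name(0)} via {backend}"


if __name__ == "__main__":
    print(describe_accelerator())
```

Because the check degrades gracefully, the same script can be run on a laptop without a supported GPU, a Radeon workstation, or a cloud instance.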

Developer Experience and Ecosystem

Bi-Weekly Updates and Day-Zero Fixes: AMD is adopting a more modern software delivery cadence with ROCm 7. Instead of large, infrequent releases, it will now push bi-weekly updates with day-zero bug fixes and patch notes. This brings ROCm closer to modern DevOps expectations and matches the velocity of open-source machine learning tooling.

AMD Developer Cloud Access: If you don’t have access to MI300X or MI350-class hardware, the AMD Developer Cloud now offers instant, browser-based access to ROCm environments pre-loaded with Hugging Face Transformers, PyTorch, TensorFlow, and vLLM. This is AMD’s version of NVIDIA’s DGX Cloud or Google Colab but with full ROCm integration. Developers can test LLMs, fine-tune models, and benchmark inference, all without needing to invest in physical hardware.

HIPIFY: CUDA Porting Made Easier: ROCm 7 continues to improve HIPIFY, the tool AMD provides to convert CUDA code into ROCm-compatible HIP code. With better documentation, auto-detection of common CUDA idioms, and integrated error-checking, HIPIFY is becoming more reliable as a porting tool, especially for teams with large legacy CUDA projects. It’s still not 100% plug-and-play, but for many open-source models, the porting barrier is significantly lower now.
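
To give a feel for what source-to-source porting involves, here is a deliberately tiny Python imitation of the renaming step. It is not HIPIFY and does not call it (the real tools are `hipify-clang` and `hipify-perl`); the `toy_hipify` function and its six-entry table are assumptions made for illustration, although each rename in the table is a genuine CUDA-to-HIP equivalent.

```python
import re

# A handful of real CUDA-to-HIP renames; the actual tools cover far more of the API.
CUDA_TO_HIP = {
    "cuda_runtime.h": "hip/hip_runtime.h",
    "cudaMalloc": "hipMalloc",
    "cudaMemcpy": "hipMemcpy",
    "cudaMemcpyHostToDevice": "hipMemcpyHostToDevice",
    "cudaDeviceSynchronize": "hipDeviceSynchronize",
    "cudaFree": "hipFree",
}

# Longest names first so cudaMemcpyHostToDevice is not matched as cudaMemcpy.
_PATTERN = re.compile(
    "|".join(re.escape(name) for name in sorted(CUDA_TO_HIP, key=len, reverse=True))
)


def toy_hipify(source):
    """Rename the CUDA identifiers listed above; everything else is left untouched."""
    return _PATTERN.sub(lambda match: CUDA_TO_HIP[match.group(0)], source)


if __name__ == "__main__":
    cuda_snippet = (
        "#include <cuda_runtime.h>\n"
        "cudaMalloc(&d_x, n * sizeof(float));\n"
        "cudaMemcpy(d_x, h_x, n * sizeof(float), cudaMemcpyHostToDevice);\n"
        "cudaFree(d_x);\n"
    )
    print(toy_hipify(cuda_snippet))
```

Much of the HIP runtime API mirrors CUDA name for name, which is why simple projects port with little effort. The hard cases are the ones a lookup table cannot handle, such as inline PTX, vendor-specific libraries and warp-size assumptions, and that is where the "not 100% plug-and-play" caveat comes from.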

Industry and Partner Collaborations

ROCm 7 is launching with broad partner support. AMD announced collaborations with Meta, Microsoft, Red Hat, and Cohere, all of which are focused on LLM inference, training optimisation, and hyperscale infrastructure design.

For example, Meta’s Llama models are already optimised for ROCm 7 and have been benchmarked on MI350X clusters. Microsoft is deploying ROCm instances on Azure, while Red Hat is integrating ROCm support into OpenShift for enterprise-grade containerised AI workloads. AMD also laid out a vision for open rack-scale AI infrastructure, highlighting Helios (a future reference design) and support for MI300X/MI350X clusters in OCP-compliant datacentres.

CUDA vs ROCm

The elephant in the room is, of course, CUDA. For years, NVIDIA’s developer ecosystem has been the benchmark in AI tooling, boasting extensive libraries, active community support, and rock-solid driver integration. With ROCm 7, AMD is attempting to reframe the game. By embracing open standards and offering better upstream support for PyTorch and TensorFlow, AMD is chipping away at CUDA’s moat.

The inclusion of low-precision data types, Windows support, cloud access, and distributed inference all mark meaningful responses to CUDA’s dominance. It’s not about replacing CUDA tomorrow but about building a second viable ecosystem that developers and enterprises can trust.

ROCm 7 is just one part of AMD’s broader AI roadmap. The company reaffirmed its goal to deliver a 100× improvement in AI performance-per-watt by 2030 and is betting big on rack-scale efficiency through better hardware-software co-design.

Upcoming hardware like the MI400X and MI500X series is already being tested, and future ROCm updates will add support for next-gen memory hierarchies, spatial sparsity optimisations, and real-time inference pipelines.

The ROCm roadmap suggests a pivot from just performance optimisation to deeper developer-centric features like collaborative debugging, mixed-precision simulation environments, and automated model graph partitioning.

It’s all about adoption

AMD is no longer content playing second fiddle in the AI acceleration race. With a strong focus on performance, platform openness, developer tooling, and ecosystem integration, ROCm 7 represents a substantial leap forward.

For enterprises, it offers a viable alternative to CUDA. For developers, it lowers the barrier to entry with better tools and broader hardware support. And for AMD, it cements the vision of a truly open AI ecosystem that can scale from laptops to hyperscale datacentres.

If you’ve been sitting on the sidelines waiting for ROCm to become “ready”, this might be the moment to dive in. And with the AMD Developer Cloud now live, there’s really no reason not to try ROCm 7 for yourself.

Mithun Mohandas

Mithun Mohandas is an Indian technology journalist with 14 years of experience covering consumer technology. He is currently employed at Digit in the capacity of a Managing Editor. Mithun has a background in Computer Engineering and was an active member of the IEEE during his college days. He has a penchant for digging deep into unravelling what makes a device tick. If there's a transistor in it, Mithun's probably going to rip it apart till he finds it. At Digit, he covers processors, graphics cards, storage media, displays and networking devices aside from anything developer related. As an avid PC gamer, he prefers RTS and FPS titles, and can be quite competitive in a race to the finish line. He only gets consoles for the exclusives. He can be seen playing Valorant, World of Tanks, HITMAN and the occasional Age of Empires or being the voice behind hundreds of Digit videos.