Mustafa Suleyman’s AI plan for Microsoft beyond OpenAI: What it means

Mustafa Suleyman redefines Microsoft’s AI vision with Humanist Superintelligence

Microsoft charts independent AI path beyond OpenAI under Mustafa Suleyman

Human-centered AI: Suleyman’s plan to balance innovation and ethics

When Mustafa Suleyman took charge of Microsoft AI in early 2024, it marked more than just another high-profile tech hire. The DeepMind and Inflection AI co-founder brought with him a deep philosophical stance on what artificial intelligence should become. Now, with his latest essay titled “Towards Humanist Superintelligence,” published on Microsoft’s official AI portal, Suleyman has outlined a vision that could redefine how Microsoft approaches AI – one that moves beyond its collaboration with OpenAI and towards a more values-driven, human-centric model of progress.

The vision: What is Humanist Superintelligence?

Suleyman’s concept of Humanist Superintelligence (HSI) reframes the traditional narrative around artificial general intelligence. Instead of machines surpassing human cognition and autonomy, he envisions highly advanced systems that remain fundamentally tethered to human purpose. In his words, “HSI offers an alternative vision anchored on a non-negotiable human-centrism and a commitment to accelerating technological innovation, but in that order.”

The essay stresses that the goal is not to build a single, omniscient artificial mind, but rather multiple specialised superintelligences, each designed for domains like medicine, energy, and education. This approach, Suleyman argues, ensures both safety and alignment. The challenge of containment and alignment – keeping systems perpetually in check even as they surpass human understanding – is one of his central preoccupations. “We need to contain and align it, not just once, but constantly, in perpetuity,” he writes.

This is a subtle but significant shift. Where the global AI race has often been framed as a competition for dominance, Suleyman’s vision seeks to establish a framework for coexistence: AI as a collaborator, not a conqueror.

Microsoft’s next chapter beyond OpenAI

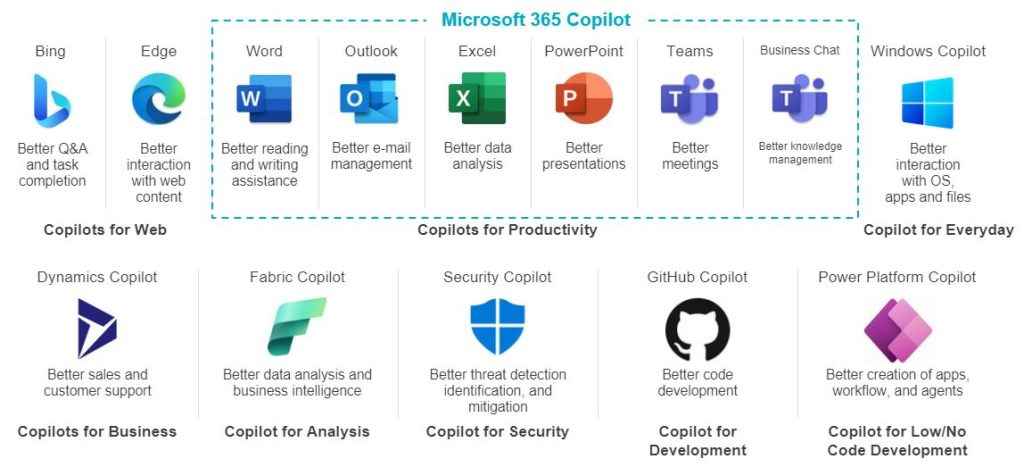

The timing of this essay appears deliberate. Microsoft remains OpenAI’s largest investor and closest partner, but the emergence of Suleyman’s division, Microsoft AI, marks a step toward independence. Since the unit’s creation in 2024, the company has consolidated its consumer and enterprise AI operations under it, including Copilot, Bing and other generative products.

Suleyman’s essay signals that Microsoft is no longer content to be a patron of OpenAI’s technology. It now wants its own models, research pipelines and long-term vision for artificial intelligence. Following the companies’ revised agreement in October 2025, which allows Microsoft to pursue AGI independently, the company is increasingly able to pursue advanced AI, and perhaps even AGI, on its own terms.

That autonomy comes with strategic value. It allows Microsoft to align AI development with its broader corporate identity, a company that builds tools, not threats. By emphasizing humanist rather than general superintelligence, Suleyman reframes the company’s goals away from a “race to AGI” and toward a more pragmatic mission: creating AI systems that demonstrably improve quality of life.

Inside the plan: Building intelligence with a purpose

Central to Suleyman’s proposal is a focus on high-impact applications rather than abstract capability. One of the most striking promises in his essay is that “everyone who wants one will have a perfect and cheap AI companion helping you learn, act, be productive and feel supported.” These AI companions are envisioned as personal assistants that adapt to each user’s needs, offering cognitive support without replacing human connection.

Another pillar of the plan is what he calls medical superintelligence. Suleyman claims Microsoft’s internal systems have already shown “expert-level performance” on difficult diagnostic challenges, far exceeding human averages. The goal is to make world-class medical knowledge universally available, regardless of geography or income.

The third domain of focus is clean energy and scientific innovation. “Energy drives the cost of everything,” he writes, predicting that by 2040, abundant renewable generation and storage will be within reach – with AI playing a pivotal role in accelerating discovery. From new battery materials to more efficient grids, AI could help solve the engineering bottlenecks that have long slowed sustainability transitions.

Taken together, these three pillars – companionship, health, and energy – sketch a roadmap for Microsoft’s next decade of AI. They represent a fusion of idealism and pragmatism, grounded in technologies that can both uplift society and sustain the company’s business model.

Rethinking the Microsoft–OpenAI relationship

Microsoft’s partnership with OpenAI remains one of the most consequential alliances in tech. Yet, Suleyman’s essay underscores a subtle decoupling. He rejects the “race to AGI” narrative that OpenAI helped popularize, calling instead for a long-term, coordinated approach among governments, labs, and startups.

For Microsoft, this philosophical shift doubles as a business strategy. By championing its own “humanist” model of AI, the company positions itself not as a follower of OpenAI’s breakthroughs but as a parallel innovator with distinct goals. That also gives Microsoft flexibility to explore new architectures, training paradigms, and applications that may not align perfectly with OpenAI’s roadmap.

In effect, Suleyman is crafting a new identity for Microsoft AI – one that retains the resources of a trillion-dollar corporation but borrows the moral language of an academic movement. It is both a branding exercise and a re-orientation of purpose.

Challenges ahead

Still, Suleyman’s vision leaves tough questions unanswered. The notion of “perpetual alignment” sounds noble, but maintaining ethical and technical control over self-improving systems remains an unresolved research problem. Commercial viability will also matter: while AI companions and medical tools are socially compelling, their monetization paths are unclear.

Then there is the matter of credibility. Suleyman’s leadership style has drawn scrutiny in the past, and his dismissal of certain research directions, like exploring AI consciousness, may clash with broader scientific curiosity. Critics may see “humanist superintelligence” as more branding than blueprint.

Finally, there is the question of cooperation. Suleyman calls for “every commercial lab, every startup, every government” to coordinate on alignment. But global AI development has largely been competitive, fragmented and secretive. Building consensus on how to contain intelligence that continuously improves is, for now, more aspiration than policy.

For India and other emerging economies, Suleyman’s plan could have tangible ripple effects. An AI-powered healthcare system could democratize diagnostics in regions short on doctors. Personalised educational companions could support students across languages and learning levels. AI-enhanced energy infrastructure could help nations like India balance sustainability with growth.

But beyond national interest, Humanist Superintelligence reframes what progress in AI should look like. It asks the industry to stop equating intelligence with autonomy and instead measure it by its service to humanity. That redefinition could mark the beginning of a new chapter in the story of artificial intelligence – one where power is measured not in computation, but in compassion.

Vyom Ramani

A journalist with a soft spot for tech, games, and things that go beep. While waiting for a delayed metro or rebooting his brain, you’ll find him solving Rubik’s Cubes, bingeing F1, or hunting for the next great snack.