Meet GPT-OSS: OpenAI’s 20B and 120B parameter open-weight open-source AI models

First open-weight models from OpenAI since GPT-2 in 2019, available under Apache 2.0 licence for full commercial and research use.

Two sizes: 20B for mobile/16GB RAM and 120B for single GPU systems, both support 131k-token context and chain-of-thought reasoning.

Runs locally with support from NVIDIA, Qualcomm, Hugging Face, and Ollama, enabling private, fast, and customisable AI applications.

For years, the world’s most influential AI company stood on the sidelines of the open-source movement. Now OpenAI is back in the game, and this time it’s bringing heavyweight models to your laptop. With the release of GPT-OSS, OpenAI is offering its first open-weight large language models in over six years. The move signals a strategic pivot and a quiet acknowledgment that openness and accessibility are now table stakes in the generative AI race. Available in two sizes, GPT-OSS-20B and GPT-OSS-120B, the models can be freely downloaded, modified, and even deployed commercially under the permissive Apache 2.0 licence. More strikingly, they’re designed to run locally, even on consumer-grade NVIDIA GPUs or Snapdragon-powered mobile devices.

So, what’s really behind OpenAI’s decision to open its models now? And how do GPT-OSS models compare in a crowded field filled with Llama, Gemma, DeepSeek, and Mistral?

OpenAI’s long-overdue course correction

OpenAI’s reluctance to open its model weights has always sat uncomfortably with its name. Once a lab that championed transparency, it became increasingly closed after the release of GPT-2, citing safety concerns. GPT-3, GPT-4, and even the most recent o4-mini models have been tightly controlled, hosted solely on OpenAI’s own infrastructure and accessed via APIs.

But the AI landscape has shifted. Over the last 18 months, developers and enterprises alike have flocked to open-weight alternatives. Meta’s Llama family, Mistral’s lean models, and challengers such as DeepSeek and Falcon have thrived not only because they were capable but because they were open. You could inspect the architecture, fine-tune them for niche use cases, and even run them on consumer-grade hardware. More importantly, you weren’t locked into a single provider’s cloud billing or API usage policies.

In January 2025, OpenAI CEO Sam Altman publicly admitted that the company had “been on the wrong side of history” by not participating in the open-weight ecosystem. With GPT-OSS, OpenAI is now trying to rewrite its role in the story.

Introducing GPT-OSS-20B and GPT-OSS-120B open-source AI models

The GPT-OSS models come in two flavours, GPT-OSS-20B and GPT-OSS-120B. Both support context lengths of up to 131,072 tokens, which suits them to large-document processing, research tasks, and long-form conversations. OpenAI has confirmed that both models use a mixture-of-experts (MoE) architecture, in which a router activates only a small subset of the model’s parameters for each token, keeping the compute per token well below what the total parameter count would suggest. The models also support adjustable reasoning effort, so users can trade speed for depth depending on the task.
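To make the mixture-of-experts idea concrete, here is a minimal, illustrative sketch of top-k expert routing for a single token. It is not OpenAI’s implementation: the four toy experts, the router scores, and the choice of `top_k=2` are all made-up values for demonstration, and real models use learned neural-network experts and routers.

```python
import math


def softmax(xs):
    """Numerically stable softmax over a list of floats."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def route_token(router_logits, top_k):
    """Pick the top_k highest-scoring experts and renormalise their gate weights."""
    ranked = sorted(range(len(router_logits)),
                    key=lambda i: router_logits[i], reverse=True)
    chosen = ranked[:top_k]
    gates = softmax([router_logits[i] for i in chosen])
    return list(zip(chosen, gates))


def moe_layer(token, experts, router_logits, top_k=2):
    """Run only the selected experts and return the gate-weighted sum of their outputs."""
    output = [0.0] * len(token)
    for idx, gate in route_token(router_logits, top_k):
        expert_out = experts[idx](token)
        output = [o + gate * e for o, e in zip(output, expert_out)]
    return output


# Four toy "experts" (hypothetical): each simply scales the input by a constant.
experts = [lambda v, s=s: [s * x for x in v] for s in (1.0, 2.0, 3.0, 4.0)]
token = [1.0, -1.0]
router_logits = [0.1, 2.0, -1.0, 2.0]  # hypothetical router scores for this token

selected = route_token(router_logits, top_k=2)
result = moe_layer(token, experts, router_logits, top_k=2)
print("experts used:", [i for i, _ in selected])
print("layer output:", result)
```

Only two of the four experts run for this token, which is the source of the compute savings: the model’s total parameter count can grow without the per-token cost growing at the same rate.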

GPT-OSS-20B: A compact, chain-of-thought reasoning model that can run locally on just 16GB of memory. Ideal for mobile inference or slim desktop use cases.

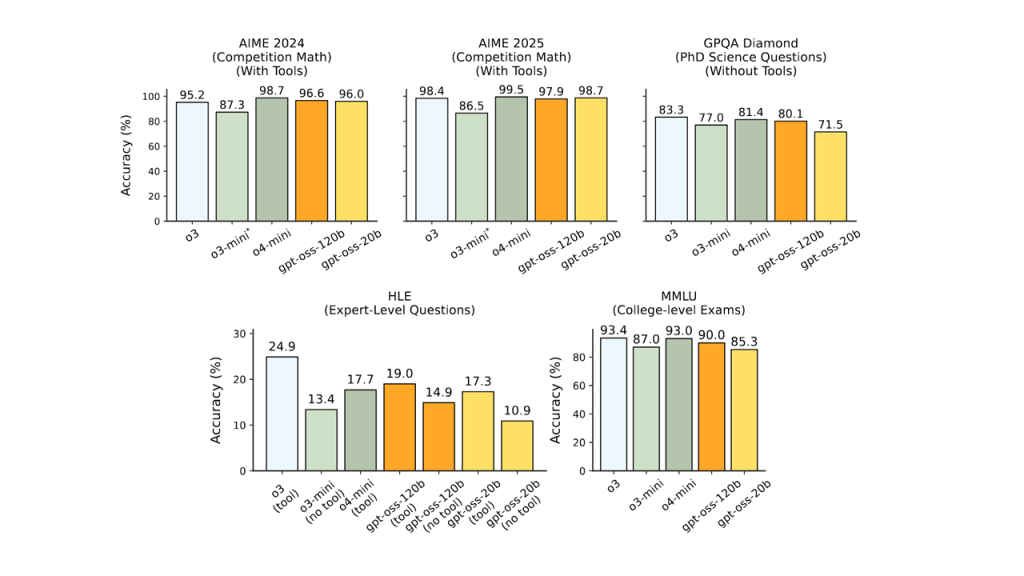

GPT-OSS-120B: A larger, more powerful model that OpenAI says performs on par with its own o4-mini. It is designed to be deployed on a single NVIDIA GPU, but that means a data-centre-class card with around 80GB of memory rather than a typical consumer graphics card.

In OpenAI’s own testing, these models perform on par with the company’s closed models on tasks such as coding, question answering, and common sense reasoning. Unlike GPT-4, however, these models are text-only, with no built-in multimodal capabilities. At least not yet.

Why open-source AI models matter: control, privacy, and customisation

Open-weight models aren’t just about ideology; they offer tangible benefits. Developers and organisations now have the freedom to run models locally, significantly improving data privacy while reducing latency. They can also fine-tune the models using their own proprietary datasets, bypassing the need to send sensitive information to external cloud providers. Beyond that, full access to the model weights allows for deeper inspection and auditing, which can be especially valuable in high-stakes applications like healthcare or finance. With these models, users can tailor inference behaviour, prompting strategies, and memory management to match their unique workflows, making AI integration far more flexible.

OpenAI has acknowledged that a significant portion of its customer base already uses open models, and said this release is intended to address that demand by making its own technology more accessible across use cases. The sentiment was echoed by Sam Altman, who described the models as “the best and most usable open model in the world,” designed to empower individuals and small businesses alike.

Safety, scrutiny, and visible reasoning

OpenAI is also keen to reassure critics that GPT-OSS isn’t a hasty pivot. The models have undergone what the company claims is its most rigorous safety testing to date, including evaluations by external security firms for potential misuse in areas like biosecurity and cybercrime.

One of the standout features is the inclusion of visible chain-of-thought reasoning. This allows users to view how the model arrived at a specific conclusion or output, with the intent of making it easier to detect hallucinations, bias, or deceptive behaviour. This could also serve as a valuable tool for researchers studying model alignment or prompt engineering.

OpenAI hasn’t disclosed the exact training data sources, continuing its trend of opacity in that department. But the company insists that the models match internal safety benchmarks used for their proprietary offerings.

On-device AI just got a major upgrade

While the ability to run GPT-OSS models in the cloud is valuable, the most exciting development might be how well these models perform on consumer-grade devices. In collaboration with Qualcomm, the smaller 20B model can now run entirely on-device using the Snapdragon AI Engine. This makes it possible for smartphones and tablets to perform chain-of-thought reasoning without ever hitting the cloud. Qualcomm called the move a “turning point” in mobile AI, and suggested this was only the beginning.

For developers looking to deploy apps with built-in reasoning or contextual chat agents, this unlocks powerful new use cases in personal productivity, healthcare, assistive tech, and beyond. The tight integration with platforms like Ollama makes deployment significantly easier.

On the PC side, NVIDIA has worked closely with OpenAI to optimise the GPT-OSS models for its RTX GPU lineup. If you’re running an RTX 4080, 4090, or the newer 5090, the 20B model can be run locally at impressive speeds, reportedly up to 256 tokens per second on a 5090; the 120B model needs far more memory than these consumer cards offer. That’s fast enough for real-time applications like chat agents, coding assistants, or document summarisation.

Developers can load the models via Ollama, llama.cpp, or Microsoft AI Foundry Local, with out-of-the-box support for RTX-specific optimisations like MXFP4 and TensorRT coming soon. According to NVIDIA, these models are also some of the first to support MXFP4, a 4-bit floating-point format with shared block scaling that aims to preserve output quality while cutting memory and compute overhead. The long context length (up to 131k tokens) is a game-changer for workflows that require deep reasoning over large datasets, be it legal documents, codebases, or academic literature.
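A rough back-of-the-envelope calculation shows why a 4-bit format matters for local inference. In the Open Compute Project’s Microscaling specification, MXFP4 stores blocks of 32 four-bit values that share one 8-bit scale, or about 4.25 bits per weight. The sketch below assumes, as a simplification, that every weight is stored in MXFP4 and uses the nominal 20B and 120B parameter counts; it ignores activations and the key-value cache, so real memory use will be higher.

```python
def mxfp4_bits_per_weight(element_bits=4, scale_bits=8, block_size=32):
    """Average storage per weight: each block of values shares one scale factor."""
    return element_bits + scale_bits / block_size


def weight_footprint_gb(num_params, bits_per_weight):
    """Approximate size of the weights alone, in gigabytes (10^9 bytes)."""
    return num_params * bits_per_weight / 8 / 1e9


bits = mxfp4_bits_per_weight()

# Nominal parameter counts taken from the model names (an approximation).
for name, params in (("GPT-OSS-20B", 20e9), ("GPT-OSS-120B", 120e9)):
    quantised = weight_footprint_gb(params, bits)
    half_precision = weight_footprint_gb(params, 16)
    print(f"{name}: ~{quantised:.1f} GB at {bits} bits/weight "
          f"vs ~{half_precision:.0f} GB at 16 bits/weight")
```

Under these assumptions the 20B model’s weights come to a little over 10GB, which is consistent with the 16GB memory figure quoted for it, while the 120B model’s weights alone exceed 60GB.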

How to run GPT-OSS: Hugging Face, Ollama, Foundry and more

Getting started with GPT-OSS is refreshingly straightforward. OpenAI has made the models available across multiple platforms. On Hugging Face, both the 20B and 120B variants can be downloaded directly, complete with inference scripts and documentation to run as local LLMs. For those looking for a user-friendly interface, the Ollama app supports local deployment on Windows, macOS, and Linux, and includes SDKs for integration into other applications. Microsoft’s AI Foundry Local caters to Windows developers, offering a command line interface and API integration powered by ONNX Runtime. For teams running cloud infrastructure, both Azure and AWS offer hosted deployment options, and Databricks is supporting the models for enterprise-scale workloads. Whether you’re a hobbyist testing on a single machine or a startup looking to deploy AI across products, GPT-OSS is now accessible at nearly every level of technical maturity.
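As an illustration of the Ollama route, the sketch below sends a single chat message to a locally running Ollama server over its REST API using only Python’s standard library. It assumes Ollama is installed and listening on its default address, and that the 20B model has already been downloaded under the tag `gpt-oss:20b` (for example with `ollama pull gpt-oss:20b`); the helper names `build_chat_request` and `chat` are our own.

```python
import json
import urllib.request

# Ollama's default local endpoint; change it if your server runs elsewhere.
OLLAMA_CHAT_URL = "http://localhost:11434/api/chat"


def build_chat_request(prompt, model="gpt-oss:20b", url=OLLAMA_CHAT_URL):
    """Build a non-streaming chat request for a local Ollama server."""
    payload = {
        "model": model,  # assumes this model tag has been pulled locally
        "messages": [{"role": "user", "content": prompt}],
        "stream": False,  # ask for one complete JSON response
    }
    return urllib.request.Request(
        url,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def chat(prompt, **kwargs):
    """Send the prompt to the server and return the assistant's reply text."""
    with urllib.request.urlopen(build_chat_request(prompt, **kwargs)) as resp:
        body = json.load(resp)
    return body["message"]["content"]
```

With the server running, `print(chat("Explain mixture-of-experts in two sentences."))` should print the model’s answer. Because everything happens on `localhost`, the prompt and the response never leave the machine.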

How does GPT-OSS stack up against the rest?

OpenAI hasn’t officially published benchmark comparisons between GPT-OSS and competing open-source AI models such as Llama 3, DeepSeek-V2, or Gemma 2. But early community evaluations suggest the 120B model sits firmly in the same ballpark as Meta’s Llama 3 70B and Google’s Gemma 2 27B in terms of reasoning quality and instruction-following capabilities. We also looked at some of the recently benchmarked open-source models to create a comparison of sorts.

| Model | Size | MMLU | ARC-Challenge | BIG-Bench Hard | AGIEval English | DROP (F1) | Context Length |
| --- | --- | --- | --- | --- | --- | --- | --- |
| GPT-OSS-120B | 120B | ~93% | – | – | – | – | 131k |
| GPT-OSS-20B | 20B | 85.30% | – | – | – | – | 131k |
| Llama 3 70B | 70B | 79.50% | 93.00% | 81.30% | 63.00% | 79.70% | 128k+ |
| Llama 3 8B | 8B | 66.60% | 78.60% | 61.10% | 45.90% | 58.40% | 128k+ |
| Gemma 2 27B | 27B | 67-69% | – | – | – | – | 128k+ |
| Gemma 2 9B | 9B | 64.30% | 53.20% | 55.10% | 41.70% | 56.30% | – |
| DeepSeek V3 32B | 32B | 75.20% | – | – | – | – | 130k |
| Mistral Medium 3 | 46B | 78-80% | 87% | 80-82% | 61-64% | 74-76% | 32k-128k |
| Falcon 3 180B | 180B | 78-80% | – | – | – | – | >100k |

The inclusion of MoE and high context length support gives GPT-OSS some clear technical advantages. And while it’s not yet multimodal like GPT-4o, the focus on visible reasoning and local inference makes it more accessible than most of its rivals. That said, custom fine-tunes, alignment tuning, and quantised variants from the open community will likely determine how widely GPT-OSS gets adopted in the long run.

So is OpenAI finally becoming open?

OpenAI’s decision to release GPT-OSS is a cultural shift. By joining the ranks of open-weight model providers, the company is effectively acknowledging that the centre of gravity in AI development has moved. Innovation is no longer restricted to research labs or big cloud providers. It’s happening in home offices, small studios, and solo dev setups.

This release also marks a return to OpenAI’s original mission: to ensure that artificial general intelligence (AGI) benefits all of humanity. It is not yet clear whether the company will continue releasing open weights. Altman summed it up in a post: “We believe in individual empowerment. Although we believe most people will want to use a convenient service like ChatGPT, people should be able to directly control and modify their own AI when they need to, and the privacy benefits are obvious.”

Whether GPT-OSS becomes one of the most widely used open-source AI models remains to be seen. But it’s clear that the days of AI being locked away behind proprietary APIs are numbered. Also, it’s tempting to view GPT-OSS as a catch-up move, a response to pressure from Meta, Mistral, and the broader open-source community. But that would be missing the point. GPT-OSS is an impressive release on its own merits. The models are fast, flexible, and finely tuned for reasoning. They run locally. They’re easy to deploy. And they carry the engineering polish you’d expect from OpenAI. More importantly, they signal a broader evolution in how AI will be built, deployed, and experienced. OpenAI is now a contributor to the open ecosystem it once watched from afar.

Mithun Mohandas

Mithun Mohandas is an Indian technology journalist with 14 years of experience covering consumer technology. He is currently employed at Digit in the capacity of a Managing Editor. Mithun has a background in Computer Engineering and was an active member of the IEEE during his college days. He has a penchant for digging deep into unravelling what makes a device tick. If there's a transistor in it, Mithun's probably going to rip it apart till he finds it. At Digit, he covers processors, graphics cards, storage media, displays and networking devices aside from anything developer related. As an avid PC gamer, he prefers RTS and FPS titles, and can be quite competitive in a race to the finish line. He only gets consoles for the exclusives. He can be seen playing Valorant, World of Tanks, HITMAN and the occasional Age of Empires or being the voice behind hundreds of Digit videos.