How OpenAI’s LLM mastered one of the world’s toughest math olympiads

OpenAI’s LLM wins gold at IMO 2025, solving math problems once reserved for prodigies.

AI achieves creative reasoning milestone, mastering world’s toughest math olympiad with multi-page human-like proofs.

Reinforcement learning powers OpenAI’s LLM to gold-level math olympiad success under real exam conditions.

In a sun-drenched convention center on Australia’s Sunshine Coast, the 66th International Mathematical Olympiad (IMO) unfolded this month. It brought together 635 of the world’s brightest young minds from 114 countries. Amid the flurry of pencils and the tension of geopolitical debates, an unexpected contender emerged, not a teenager with a calculator, but an artificial intelligence model developed by OpenAI. On July 19, 2025, OpenAI research scientist Alexander Wei announced that their experimental reasoning large language model (LLM) had achieved a gold medal-level performance, solving five of the six grueling problems in the 2025 IMO. This milestone, validated under the same stringent conditions as human contestants – two 4.5-hour sessions, no tools or internet – marks a seismic shift in the landscape of artificial intelligence.

Survey

SurveyAlso read: OpenAI unveils ChatGPT Agent, AI that can work like real assistant

1/N I’m excited to share that our latest @OpenAI experimental reasoning LLM has achieved a longstanding grand challenge in AI: gold medal-level performance on the world’s most prestigious math competition—the International Math Olympiad (IMO). pic.twitter.com/SG3k6EknaC

— Alexander Wei (@alexwei_) July 19, 2025

A breakthrough in reasoning technology

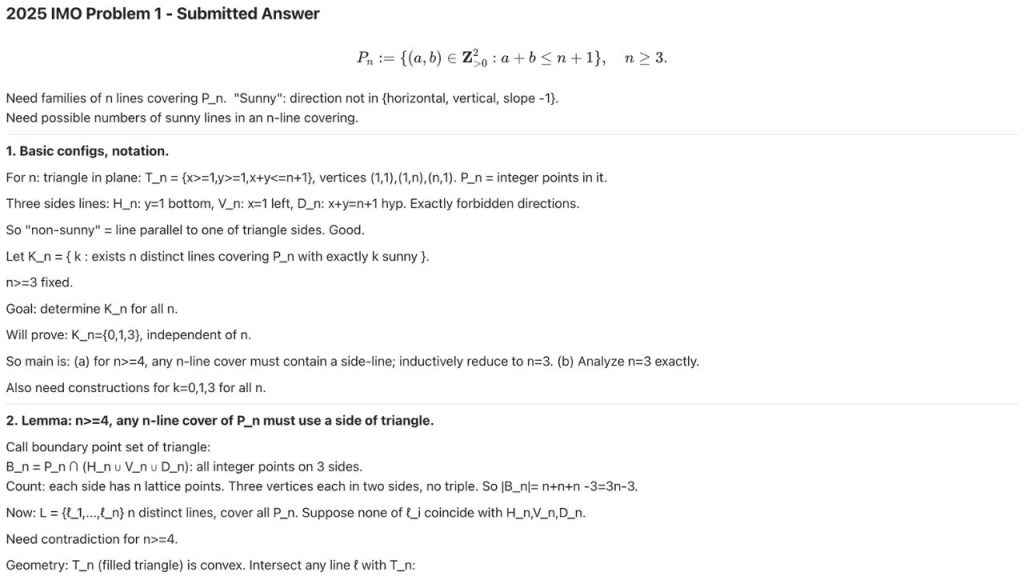

The IMO, widely regarded as the pinnacle of pre-college mathematical competition since its inception in 1959, is known for its challenging problems that demand creative, sustained reasoning. This year, the spotlight shifted to a machine. Wei’s thread on X detailed the LLM’s journey: tackling problems like the 2025 IMO Problem 1, which required intricate proofs about non-negative integers and geometric lines, the model crafted multi-page, watertight arguments that earned it 35 out of 42 points, a score sufficient for gold.

What sets this achievement apart is not just the result but the approach. Unlike previous AI successes in narrow domains, OpenAI’s LLM leveraged general-purpose reinforcement learning and test-time compute scaling. This method, which recent studies in Nature Machine Intelligence (2024) suggest can boost multi-step reasoning by 40%, allowed the model to think creatively over extended horizons, up to 100 minutes per problem, far surpassing earlier benchmarks like the MATH dataset, where a 30% accuracy was optimistically forecasted in 2021. “We’ve progressed from quick calculations to sustained, human-like reasoning,” Wei wrote, a sentiment echoed by OpenAI CEO Sam Altman, who called it a “significant marker of how far AI has come.”

Also read: OpenAI announces o3 and o4-mini AI reasoning models: Here’s what they can do

A distinctive style of proof

The LLM’s solutions, released online, reveal a distinct style, sometimes sassy, often meticulous. For Problem 1, it navigated a labyrinth of conditions about “sunny” lines (those not parallel to the axes or the line x+y=0) with a proof spanning several pages, culminating in a playful “No citation necessary” after computing a mystery number. This flair hints at the model’s experimental nature, a research prototype distinct from the forthcoming GPT-5, which OpenAI plans to release soon but without this level of mathematical prowess for months.

The implications are profound. This breakthrough challenges the notion that AI lacks true understanding, suggesting a leap toward general intelligence. Experts point to recent advancements in reinforcement learning, which allow models to adapt and reason without task-specific training, as a key driver. For the human contestants, the AI’s presence is both inspiration and a call to elevate their own skills. As the Sunshine Coast event concluded, the LLM’s gold medal stood as a testament to AI’s evolving capabilities to prove that when it comes to pure reason, the boundary between human and machine is blurring. Whether this heralds a new era of intelligence or a redefinition of competition, the 2025 IMO will be remembered not just for its equations, but for the code that cracked them.

Also read: OpenAI, Google, Anthropic researchers warn about AI ‘thoughts’: Urgent need explained

Vyom Ramani

A journalist with a soft spot for tech, games, and things that go beep. While waiting for a delayed metro or rebooting his brain, you’ll find him solving Rubik’s Cubes, bingeing F1, or hunting for the next great snack. View Full Profile