Meta AI app marks private chats as public, raising privacy concerns

Meta AI app’s “Discover” feature publicly shares private prompts with little user awareness

Meta AI logs all interactions for model training, with no simple opt-out or complete deletion

Privacy advocates and EU regulators are demanding stricter controls or a temporary suspension

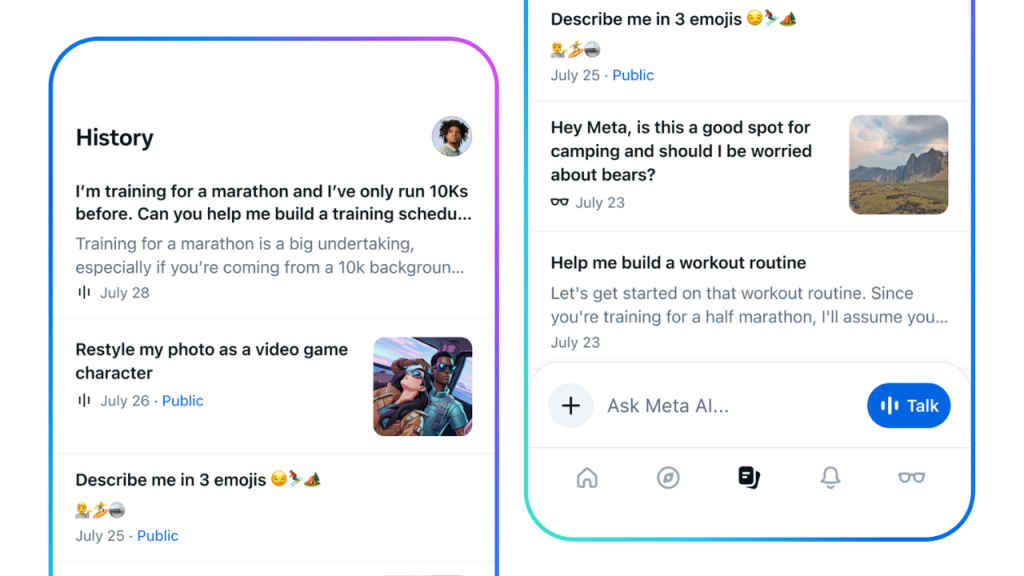

At first glance, Meta AI presents itself as a digital confidant. It offers a private chat window where you can brainstorm tomorrow’s meeting, troubleshoot tech woes or even hash out sensitive health questions. The Meta AI app’s clean interface suggests a personal space for exploration, yet recent investigations reveal that what feels like an enclosed conversation can, in fact, end up exposed on a public feed.

The Meta AI app’s Discover feed, in particular, has been laying bare users’ most private AI conversations for anyone to see. It wasn’t a one-off glitch. Over the past few weeks, privacy alarms have been blaring around Meta AI’s new app, and the leaks cut deeper than just an over-eager “share” button.

Sharing an AI conversation is “opt-in” according to Meta, but as reported by TechCrunch, users are finding the opposite to be true. A single tap on a button in the AI interface will seemingly catapult your chat – medical concerns, legal questions, even your grocery list – into a public forum. No pop-up warning. No double-check. Suddenly, intimate chats are front-and-centre on a “public” tab, traceable to your real name and face. Scary stuff.

It’s a privacy design flaw of epic proportions. That’s what WIRED’s report uncovered, revealing multiple instances of deeply personal prompts surfacing alongside celebrity chatter and viral memes, all under a banner that screams “Discover.” In practice, it works more like an “Exposé.”

It gets more confusing. As reported by TechCrunch, there are no prominent labels indicating whether your prompt is private, shared only with friends, or laid out for the world. Log in with a public Instagram account, and guess what? Your AI queries default to that same public audience, often without you realizing it. This ambiguity was enough to blindside even social media pros, let alone the average user.

You’d think Meta would at least let you opt out of having your conversations with the chatbot mined for data, but no dice. Unlike competitors such as ChatGPT or Google’s Gemini, Meta AI records every interaction by default and stores it for “training purposes.”

Sure, there’s a labyrinthine menu where you can supposedly delete or privatize your prompts (“Data & Privacy” > “Manage your information” > “Make all your prompts visible only to you”), but users report incomplete deletions and lingering remnants of their chats.

If you take a step back and look at the bigger picture, every time you share a personal thought on the Meta AI app, you’re inevitably feeding the black box, fueling Meta’s personalization algorithms and possibly the next ad campaign.

Now here’s the twist, and the part I find hard to wrap my head around. Meta’s AI integration in WhatsApp has unfolded with barely a ripple. The rollout, delivered as an optional feature in a familiar chat interface, has seen thoughtful prompts, clear “ask for permission” notices, and a simple toggle for AI suggestions. No public leaks. No privacy rabbit holes. Users can summon quick translations, draft messages, or brainstorm gift ideas, all within a private channel that behaves exactly like the WhatsApp we’ve trusted for years.

So why couldn’t the standalone Meta AI app learn from WhatsApp’s playbook? How did the same engineering powerhouse that thought through end-to-end encryption and seamless UI in WhatsApp allow such a glaring privacy oversight to fester elsewhere?

Regulators and privacy advocates aren’t taking this sitting down, obviously. The Mozilla Foundation has demanded that Meta pull the Discover feed until robust privacy defaults and user notifications are in place. Norway has balked at Meta’s plan to train AI models on Europeans’ public posts, citing GDPR concerns, according to reports. Even some Meta employees are uneasy: one NPR story hints that staff worry replacing human reviewers with AI risk-assessment tools may weaken, not strengthen, user protections.

It all circles back to trust, or the severe lack of it in this case. Meta’s track record is studded with privacy missteps, from Cambridge Analytica to repeated GDPR fines. So when the company touts a personalized AI assistant, you can’t help but wonder: is it a helpful concierge or a data-mining machine?

Until Meta overhauls its default settings – making private chats truly private, bolting on transparent visibility indicators, and streamlining data-deletion tools – privacy-minded users will continue to treat Meta AI with suspicion. Because in the race to ship AI features, Meta forgot to ask the most basic question of all: Are we safeguarding our users’ trust?

Jayesh Shinde

Executive Editor at Digit. Technology journalist since Jan 2008, with stints at Indiatimes.com and PCWorld.in. Enthusiastic dad, reluctant traveler, weekend gamer, LOTR nerd, pseudo bon vivant.