Disaster Relief using Satellite Imagery and Machine Learning

Background:

Survey

SurveyMy project is "Disaster Relief using Satellite Imagery" by helping computers to get better at recognizing objects in satellite maps and these satellite maps are provided to us by the UN agency UNOSAT.

UNOSAT is part of United Nations Institute for Technology and Research. Since 2001, UNOSAT has been based at CERN and is supported by the IT department of CERN in the work it does. This partnership allows UNOSAT to take advantage of CERN's IT infrastructure whenever the situation requires enabling the UNOSAT to be at the forefront of satellite analysis technology.

Problem:

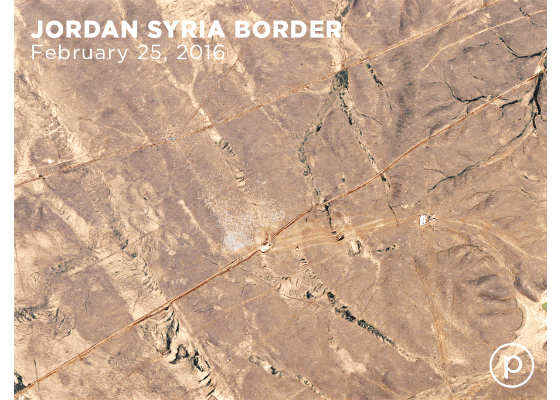

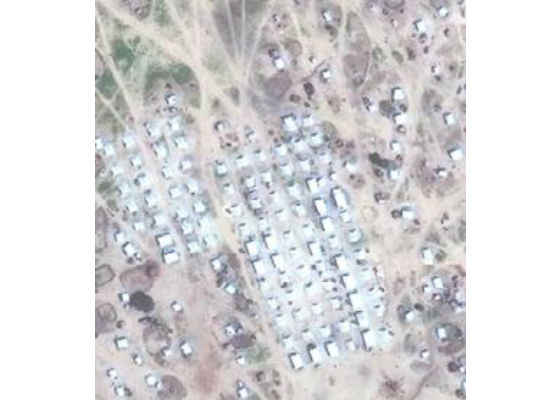

We have satellite images of disaster stricken areas such as refugee camps taken on different dates. We want to analyze or compare these images to see the progress of disaster relief operations or we want to measure level of severity in that particular area over a period of time. For example, if we have satellite images of refugee camp as shown below, we want to count the number of shelters to see if the number of shelters is increasing or decreasing.

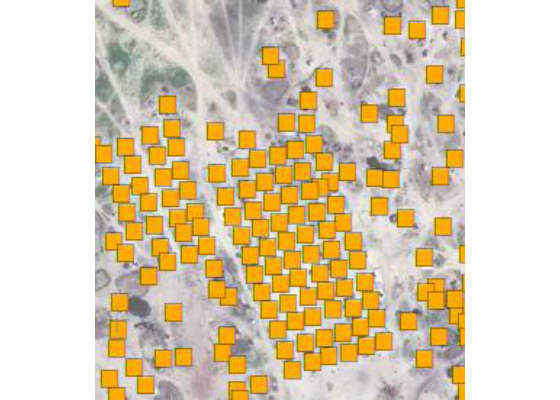

The problem is, in these days, UNOSAT has staff dedicated to analyze and then classify objects in these images manually. In the images shown below, objects are segmented out and marked manually by people.

This is an arduous task, taking a lot of time, inhibiting quick reaction from relief organizations.

Solution:

The solution we are proposing is "Automated Feature Classification using Machine Learning". Now a question can come into your mind:

Why are we not using Conventional Image Processing methods?

Conventionally, objects in images are identified by using color or shape features. In color based image segmentation, pixels with similar color range in the image corresponds to separate clusters and hence meaningful objects in the image.

In our case, for example, we have satellite images of a refugee camp on the Syria-Jordan border, as shown above. All objects such as shelters are arbitrary in shapes and color. Sometimes the background surface has the same color as a shelter. So it is not a good approach to employ any conventional color or shape based image segmentation method.

My work:

My team at CERN openlab with UNOSAT are currently evaluating different machine learning algorithms. For this, I had to read research articles to understand architectures and implementations of various state of the art machine learning based feature classification algorithms. Few of them are Facebook's DeepMask and SharpMask object proposal alogirthms and Conditional Adverserial Network based pix2pix image-image translation approach.

A brief overview of these algorithms according to their research articles are given below:

DeepMask:

In particular, as compared to other approaches DeepMask obtains substantially higher object recall using fewer proposals. It is also able to generalize to unseen categories more efficiently and unlike other approaches for generating object mask, it does not rely on edges, super pixels or any other form of low level segmentation. Superpixel is a group of connected pixels with similar color or grey levels.

SharpMask:

SharpMask is simple, fast, and effective. Building on the recent DeepMask network for generating object proposals, it showed accuracy improvements of 10-20% in average recall for various setups. Additionally, by optimizing the overall network architecture, it is 50% faster than the original DeepMask network.

Pix2Pix:

Pix2Pix uses conditional adversarial networks as a general-purpose solution to image-to-image translation problems. These networks not only learn the mapping from input image to output image, but also learn a loss function to train this mapping. This makes it possible to apply the same generic approach to problems that traditionally would require very different loss formulations.

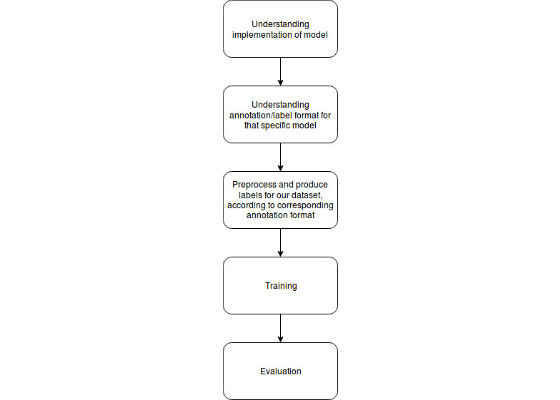

Overview of my whole approach is shown below:

In the coming blogs, I will describe each step in detail i.e. the architecture of model I am using, annotation/label format for that particular model, format of provided labels and dataset and how did I preprocess my dataset according to the annotation format of corresponding model. Further, I will also explain the problems with their solutions that I faced during training and evaluation phase.

For more such intel IoT resources and tools from Intel, please visit the Intel® Developer Zone

Source:https://software.intel.com/en-us/blogs/2017/08/19/disaster-relief-using-satellite-imagery-and-machine-learning