Designing Artificial Intelligence for Games (part 2)

Perceptions and Path Finding

Survey

SurveyIn the last article (part 1), I discussed ways to govern the basic decisions that an intelligent agent-as artificial intelligence (AI) research refers to entities that use AI-may make. In this article, I give our hero (or monster or any type of game entity) some context to the decisions that will be made. Intelligent agents need to identify points of interest in the game world, then figure out how to get there.

Finally, this article shows how to optimize these methods and provides ways of organizing them to account for multithreading.

This article gets dangerously close to real artificial intelligence (AI). All intelligent agents need to have a basic ability to perceive their environment and some means of navigating and moving within the world around them-be it real or otherwise. Your entities will need to do the same, although with a much different approach. You can also cheat-which you will to make sure everything runs nice and fast.

How AI Perceives

Having your agents make arbitrary decisions is fine for some games, but what if you need more? If your agent is going to make decent decisions, it is going to need to know what's going on around it. In the robotic application of AI, a lot of research is being done on computer vision, giving robots the ability to perceive the world around them in true, stereo three-dimensional (3D) vision, just like humans do. That level of sophistication is complete overkill for our purposes.

The virtual worlds in which most games take place have a huge advantage over real-world AI robots. Everything in our world is a known quantity: There is a list somewhere in the game with everything that exists in it. You can just search that list for any criteria, and then immediately have information that your agent can use to make more meaningful decisions.

Sight

Faking entity sight is the most basic level of giving an agent perceptive ability. You can do this by searching your list of entities for anything within a set range. You can either get the first thing that interests your agent or you can get a list of things in range so that your agent can make the optimal decision about its surroundings.

This setup works well for simple games, but when you have a more complex style of game-such as a spying game or a tactical first-person shooter (FPS)-your agents will need to be a bit more selective in what they "see." If you don't want your agents to have eyes in the back of their heads, you can cull the list of potentials of anything outside the entity's sight range. You can do this quickly with a bit of math:

1. Calculate the vector between the agent and the entity in question by subtracting the position of the target from the agent's position.

2. Calculate the angle between that vector and the direction in which your agent is looking. (If it's not already a vector, you can calculate that value, too.)

3. If the absolute value of the angle is greater than the agent's preset view angle, your agent does not see the entity.

In more complex games, you may need to account for the player or other entities being hidden by some sort of cover. For this type of game, you may need to perform a ray trace (sometimes referred to as a ray cast) to see whether something has blocked the potential target. A ray trace is a mathematical way of checking if a ray intersects anything, starting from a single point and going in a set direction.

The game engine provides ray tracing functionality, but if you want to see how it's done, check out Two Brains Are Better Than One.

The previous method tells you whether something has obscured the center of the target, but it might not be enough to deter your agent.

It's possible that the center of the agent is obscured but its head is poking out conveniently above the cover. Using multiple ray traces to specific points of interest on the target may help determine not only if the target can be hit but where the target can be hit.

Sound

At first blush, it may seem like sound is no different than sight. If you can see an entity, certainly you can hear it, too. It's true that if your agent has spotted an entity, the agent can actively detect anything that entity does until it is no longer in sight. However, adding an extra level of hearing to your agents can help make sight work more effectively. Tracking the noise that entities make as a level of perception is key to any stealth-based game.

Just as in sight, you're going to need to get a list of nearby entities to check against. You can do this again with a simple distance check, but what you do to cull this list is different.

Every action that an entity can perform needs to have some sound levels associated with it. You can preset sound levels (to optimize game balance) or base them on the actual energy of the sound effects being played for the action (nice for realism but unnecessary). If the sound being generated is within a threshold, your agent will perceive that entity.

If you want to account for obstacles, you can once again cull this list by performing ray traces with your environment to see whether anything would be in the way of the sound. Because very few materials are completely soundproof, you need to be more creative in how you remove entities from your list.

Other Senses

The basic functionality needed to give your agents sight and hearing can easily be applied to simulate other senses. Here is a list of other potential senses that you can add to a game if the designs call for it:

- Smell. The idea of having intelligent agents tracking players by smell has been added to such recent games as Call of Duty 4*. Adding the sense of smell to a game is relatively easy: Give each entity in the game a distinct smell number and strength. The strength of the smell determines two factors: the radius of the smell and the size of the scent trail left behind. Active player entities often keep track of their last few positions for a number of reasons (more on trails later in this article). One reason could be to help entities with smell. As the player entity updates the trail, the strength of the smell diminishes as the trail grows "cold." When an agent with smell is updated, it needs to check for smells like it would check for sound: radius and check for walls. With smell, the factor for success is then based on the power of the smell and the agent's sense of smell, which are checked against the entity and the entity's trail.

- Radar. Some games give players individual radar, which makes sensing even easier. All that is required is a simple radius check. The AI can then verify the results for interest. For team games, the radar itself can become more interesting. To put together a team-based AI, each team needs a radar list of entities that have been found by radar. Each member of the team can then perform the radius check against just the list of known entities to determine whether the team should react. The team can add to the list by using radar equipment (such as in games like Enemy Territory: Quake Wars*) and by each team member adding anything that is "seen." This behavior helps entities working as a unit, as each alerts others of what it sees.

- Touch. This sense is kind of a "gimme," as the collision system of the game engine covers it automatically. You just need to make sure that your intelligent agents react to damage and collision events.

- Taste. I'm not sure how this one would work. It would probably be a property of smell but would require agents to actively "taste" things that they find.

Being able to sense the world around us is all well and good, but what is it that the agents are supposed to be sensing? You need to specify and be able to identify things that can be observed through your agents' settings. When you recognize what it is you're seeing, your agents can react to it based on the rules that govern the entity.

Temporary Entities

Sometimes called particles, decals, or special effects, temporary entities are visual effects in the game world. They are similar to entities in that one overall class structure defines any potential temporary entity, but they are distinct from entities in that they do not think, react to, or interact with the game world's entities or each other. Their sole purpose is to look pretty, providing additional detail to the world for a while, then die. Temporary entities are used for things like bullet trails, smoke, sparks, blood spurts, and even footprints.

The nature of temporary entities implies very little processing and no collision detection (beyond very simple world collisions). The problem is that some temporary entities give players a visual clue about things that may have transpired (bullet holes and burn marks indicate a recent battle, footprints in the snow can lead to a potential target), so why can't your intelligent agents use that, too?

There are two ways to solve this problem: You can either enhance your temporary entity system to allow for ray tracing (which would break the whole point of a temporary entity system), or you can drop an empty entity in the general vicinity of your temporary entities. This blank entity would have no ability to think and no graphic associated with it, but your agents would be able to detect it, and the temporary entity would have associated information to provide "intel" to your agent. So, when you drop a pool of blood decals on the floor, you can also drop an invisible entity there to let your agents know something is up. For the problem of footprints, you already have a trail to cover that. Cover

In many shooting-based games, it would be nice if your agents could be smart enough to duck behind cover when it is available instead of just standing out in the open, getting shot at. This problem is a bit more specialized than other problems that I have been covering so far. So, how do your agents determine whether there is any viable cover to duck behind?

Penny Arcade* satirizes the problem of enemy AI and cover.

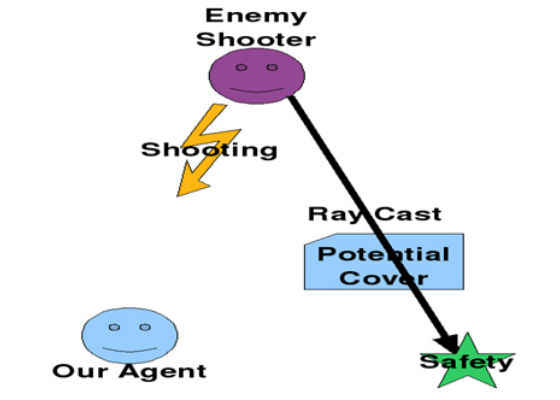

This dilemma is really two problems: How do you determine cover from the world geometry, and how do you determine cover from the world entities (as the comic above suggests). To determine whether an entity is able to block attacks, you can perform a simple check comparing the size of the bounding box of the potential cover. You then need to check whether your entity can get behind it. To do that, create a ray from the differences in positions of your shooter and your cover. Use that ray to determine whether the spot past your cover (from your shooter) is free, then mark that space as your agent's next target.

Here, your agent has determined that the green star is a safe spot to hide from damage.

AI Navigation

So far, I've talked a lot about how AI makes decisions and how it can know what's going on (in order to make better decisions). Now, let's look at how your AI can carry out those decisions. The next step is to figure out how to get from point A to point B. You can use several different approaches depending on the nature of the game and level of demand for performance.

Crash and Turn

Crash and turn is one of the most basic ways of generating movement for an entity. Here is how it works:

1. Move in the direction of your target.

2. If you hit a wall, turn the direction that puts you closest to the target. If no choice is obviously better, pick one at random.

This approach works fairly well for simple games. More games than I can count have used this method to govern how monsters hunt the player. Crash and turn results in entities getting stuck behind concave walls or corners as they hunt the player, so for games with zombies or without that land feature, this method is ideal.

If the game requires a bit more finesse from its agents, however, you can expand upon the simple crash and turn and give your agents some memory. If the agents can keep track of where they have been already, they can begin to make more meaningful decisions about how to turn. When all turns have been exhausted, your agents can backtrack and make different choices. Thus, your agent will make a systematic search for a path to a target. Here is how it works:

1. Move toward the target.

2. Make a choice when presented with a fork.

3. When dead ends are discovered, backtrack to the last choice and make another choice.

4. If all possible paths are explored, give up.

This method has the benefit of being lightweight in the processing category, which means that you can support large numbers of these guys without slowing the game down. This method can also gain an optimal benefit from multithreading. The drawback is a huge waste of space, as each agent can potentially keep track of an entire map of possible paths.

Fortunately, you can avoid this waste by having your agents keep track of paths in a shared memory. A problem can then arise with threading conflicts, so keep your entity paths in a separate module that all agents can then send requests to as they move and send updates to as they discover new paths. The Path Map Module can then parse the findings to avoid conflict.

Path Finding

Crash and turn-generated path maps are a great way to adapt to changing maps. In strategy games, players cannot wait around for their units to find their bearings. Also, these path maps can become gigantic, and searching through them for the correct path becomes the bottleneck. Path finding to the rescue.

Path finding is essentially a solved problem in game development. Games as old as the original Starcraft* (Blizzard Entertainment*) handled large numbers of game entities that were all able to find their way in large, intricate maps.

The core algorithm for path finding has been 'A*' (pronounced Eh-Star), which can be used to discover an optimal path from any two points in a graph (in this case, the map). A simple online search will discover a clean algorithm that uses such descriptive terms as F, G, and H. Allow me to explain it more plainly.

First, you must set up two lists: a list of nodes that have not been checked (Unchecked) and a list of nodes that have been checked (Checked). Each list is composed of a position node, the estimated distance to the goal, and a reference to its parent (the node that put it in the list). The lists are initially empty.

Next, add your starting position to the Unchecked list and nothing as the parent. Then, you enter the algorithm:

- Select the best-looking node from the list.

- If this node is the goal, you're done.

- If this node is not the goal, add it to the Checked list.

- For each node adjacent to this node:

- If the node is non-walkable, ignore it.

- If the node is already in a list (Checked or Unchecked), ignore it.

- All else, add it to the Unchecked list, setting this node as the parent and estimating the distance to the goal (a raw distance check is good enough).

When entity reaches the goal tile, the path can be constructed by tracing the parents back to the node that does not have a parent (the starting node). Doing so provides an optimal path that the entity can then follow. Because this process needs to occur only when an agent is either given an order or decides on its own to move, it can really benefit from multithreading. An agent can send a request to the path thread get back a path when it has been discovered without affecting the performance of the AI. For the most part, the system can get results quickly; but in the case of large loads of path requests, the agent can either wait around or just start heading in the right direction (maybe it can use the crash and turn method). In extremely large maps, the system can be broken down into regions, and all possible paths between the regions (or way points) can be pre-computed. In that case, the path finder can just look up the best path and return the results immediately. The path map thread can just keep a watch on changes in the map-such as when a player builds a wall-then re-run the path checks as needed. With it being in its own thread, the algorithm can adapt without affecting the performance of the rest of the game.

Within the path finding subsystem, multithreading can also improve the performance of the system. This is well utilized in any real-time strategy (RTS) game or a system with a large number of entities each seeking a potentially unique path. Multiple paths can be found simultaneously within different threads. Of course, the system will need to keep track of which paths are being discovered. The same path does not need to be discovered more than once.

Code Sample

Here is a sample of A* implemented simply in C. I've left out support functions in the sample for simplicity's sake, and because they need to be tailored for different implementation styles. The sample is based on a simple tile grid where each tile can either be walked on or not. This only allows for movement into an adjacent tile, but with minor modifications can be adjusted to allow diagonal movement or even a hex style game layout.

/*Get Path will return -1 on failure or a number on distance to path

if a path is found, the array pointed to by path will be set with the path in Points*/

int GetPath(int sx,int sy,int gx,int gy,int team,Point *path,int pathlen)

{

int u,i,p;

memset(&Checked,0,sizeof(Checked));

memset(&Unchecked,0,sizeof(Unchecked));

Unchecked[0].s.x = sx;

Unchecked[0].s.y = sy;

Unchecked[0].d = abs(sx – gx) + abs(sy – gy);

Unchecked[0].p.x = -1;

Unchecked[0].p.y = -1;

Unchecked[0].used = 1;

Unchecked[0].steps = 0;

The above section handles initialization of the checked and unchecked list and puts the starting node into the unchecked list. With this set, the rest of the algorithm can be run in loop.

do

{

u = GetBestUnchecked();

/*add */

AddtoList(Checked,Unchecked[u]);

if((Unchecked[u].s.x == gx)&&(Unchecked[u].s.y == gy))

{

break;

}

The above section takes a look at the node from the Unchecked list that is closest to the goal.GetBestUnchecked() is a function that checks each node's estimate distance to the goal. If the tile is the goal, the loop breaks and the process is complete.

How the distance is calculated is seen below by taking the estimated distance to the goal in both the X and Y directions and adding them together. On first thought, you may be tempted to use the Pythagorean theorem (a^2 + b^2 = c^2 ), but it's completely unnecessary. You only need a relative distance, not an exact distance. Processors can handle adding and subtracting many times faster than they can handle multiplication, which in turn, is much faster than division. Because this section of the code will be run many times a frame, optimization is key.

/*tile to the left*/

if((Unchecked[u].s.x – 1) >= 0)/*first, make sure we're on the map*/

{

if((IsInList(Unchecked,Unchecked[u].s.x – 1,Unchecked[u].s.y,NULL) == 0)&&(IsInList(Checked,Unchecked[u].s.x – 1,Unchecked[u].s.y,NULL) == 0))

/*make sure we don't repeat a search*/

{

if(TileValid(Unchecked[u].s.x – 1,Unchecked[u].s.y,team))

NewtoList(Unchecked,Unchecked[u].s.x – 1,Unchecked[u].s.y, Unchecked[u].s.x, Unchecked[u].s.y, abs((Unchecked[u].s.x – 1) – gx) + abs(Unchecked[u].s.y – gy), Unchecked[u].steps + 1);

}

}

In the section above, the function looks at the tile to the left of the current node. If it is not already in the list as Checked or Unchecked, then it will attempt to add it to the list. TileValid() is another function that needs to be tailored to fit your game. If it passes the TileValid() check then it will call NewToList(), which adds a new tile to the unchecked list. The following three sections carry out the same process, but cover the other directions: right, up, and down.

/*tile to the right*/

if((Unchecked[u].s.x + 1) < WIDTH)/*first, make sure we're on the map*/

{

if((IsInList(Unchecked,Unchecked[u].s.x + 1,Unchecked[u].s.y,NULL) == 0)&&(IsInList(Checked,Unchecked[u].s.x + 1,Unchecked[u].s.y,NULL) == 0))

/*make sure we don't repeat a search*/

{

if(TileValid(Unchecked[u].s.x + 1,Unchecked[u].s.y,team))

NewtoList(Unchecked,Unchecked[u].s.x + 1,Unchecked[u].s.y, Unchecked[u].s.x, Unchecked[u].s.y, abs((Unchecked[u].s.x + 1) – gx) + abs(Unchecked[u].s.y – gy), Unchecked[u].steps + 1);

}

}

/*tile below*/

if((Unchecked[u].s.y + 1) < HEIGHT)/*first, make sure we're on the map*/

{

if((IsInList(Unchecked,Unchecked[u].s.x ,Unchecked[u].s.y + 1,NULL) == 0)&&(IsInList(Checked,Unchecked[u].s.x,Unchecked[u].s.y + 1,NULL) == 0))

/*make sure we don't repeat a search*/

{

if(TileValid(Unchecked[u].s.x,Unchecked[u].s.y + 1,team))

NewtoList(Unchecked,Unchecked[u].s.x,Unchecked[u].s.y + 1, Unchecked[u].s.x, Unchecked[u].s.y, abs(Unchecked[u].s.x – gx) + abs((Unchecked[u].s.y + 1) – gy), Unchecked[u].steps + 1);

}

}

/*tile above*/

if((Unchecked[u].s.y – 1) >= 0)/*first, make sure we're on the map*/

{

if((IsInList(Unchecked,Unchecked[u].s.x ,Unchecked[u].s.y – 1,NULL) == 0)&&(IsInList(Checked,Unchecked[u].s.x,Unchecked[u].s.y – 1,NULL) == 0))

/*make sure we don't repeat a search*/

{

if(TileValid(Unchecked[u].s.x,Unchecked[u].s.y – 1,team))

NewtoList(Unchecked,Unchecked[u].s.x,Unchecked[u].s.y – 1, Unchecked[u].s.x, Unchecked[u].s.y, abs(Unchecked[u].s.x – gx) + abs((Unchecked[u].s.y – 1) – gy), Unchecked[u].steps + 1);

}

}

memset(&Unchecked[u],0,sizeof(PNode));

The last thing to do in this iteration is to remove the current node from the unchecked list. There is no need to look at this tile again.

}

while(1);

This final piece of code builds the path from the checked list by back-tracking from the goal. The way back to the starting location can always be found, because each node in the path keeps track of the node that put it in the list. The final path is then returned (through reference). The function returns the length of the new path.

IsInList(Checked,Unchecked[u].s.x,Unchecked[u].s.y,&u);

p = Checked[u].steps;

if(path != NULL)

{

for(i = (p – 1);i >= 0;i–)

{

path[i].x = Checked[u].s.x;

path[i].y = Checked[u].s.y;

IsInList(Checked,Checked[u].p.x,Checked[u].p.y,&u);

}

}

return p;

}

Summary

Intelligent agents need to observe the world around them. Simple range checks and ray tracing can be used to quickly shape those observations around the ideas of sight and hearing, which helps your agents make more lifelike decisions as the game plays out. With goals in mind, agents will need to find ways of getting where they want to go. Quick methods such as crash and turn are useful in the short term and in simple maps, while more complicated games need to analyze the maps to find the optimal path. You can optimize these methods to gain benefits from multithreaded implementations to ensure that the game runs smoothly-even with large numbers of entities and intricate maps.

For more such intel IoT resources and tools from Intel, please visit the Intel® Developer Zone

Source:https://software.intel.com/en-us/articles/designing-artificial-intelligence-for-games-part-2