Apple reportedly working on laser-based 3D sensor for iPhone’s rear camera

Apple is reportedly planning to bring 3D sensing to the rear camera of the iPhone in 2019. The technology will differ from the TrueDepth sensor found on the iPhone X and is intended to improve augmented reality applications.

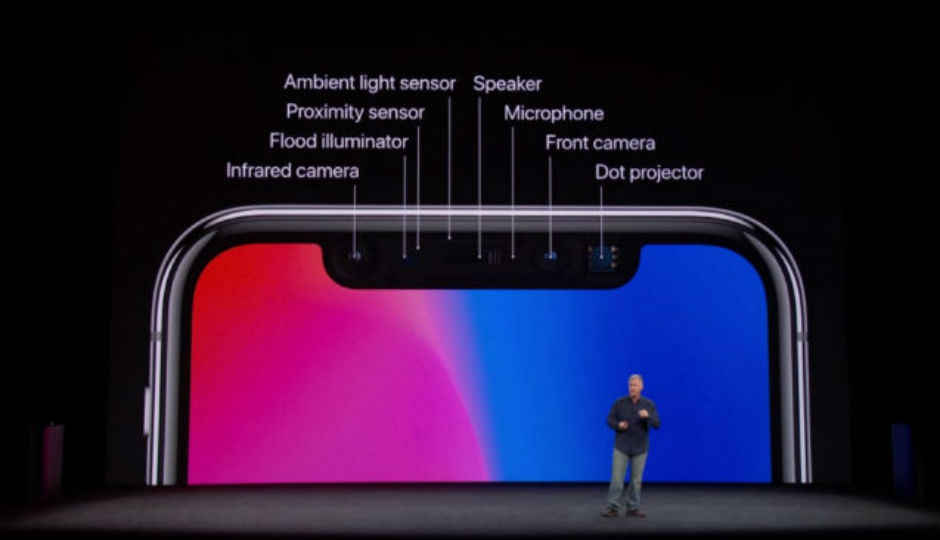

Apple may be planning to bring a 3D depth-sensing system to the rear camera of its 2019 iPhone. Bloomberg reports that Apple is working on a rear-facing 3D sensor that would make the handset better suited to augmented reality applications. Apple already has 3D sensing technology in the TrueDepth sensor system on the iPhone X, which was unveiled in September this year. For the rear-facing sensor, Apple reportedly plans to adopt a different 'time-of-flight' approach, which measures how long laser light takes to bounce off surrounding objects and return to the sensor, and uses those measurements to build a 3D model. The TrueDepth system instead uses a structured light technique: it projects 30,000 laser dots onto a user's face to create a 3D mathematical model for authentication.
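The basic time-of-flight relation can be sketched in a few lines of Python. Because the light travels to the object and back, the distance is half the round-trip time multiplied by the speed of light. This is an illustrative sketch only: the function names are hypothetical, it assumes simple per-pixel pulse timing, and it does not describe how Apple's sensor would actually work (many commercial time-of-flight sensors infer the delay from the phase shift of modulated light rather than timing a single pulse).

```python
# Illustrative sketch of direct time-of-flight ranging (hypothetical helpers,
# not Apple's implementation).

SPEED_OF_LIGHT_M_PER_S = 299_792_458.0


def tof_distance_m(round_trip_s: float) -> float:
    """Return the distance in metres to an object, given the time in seconds
    a light pulse takes to reach it and return to the sensor."""
    if round_trip_s < 0:
        raise ValueError("round-trip time must be non-negative")
    # The light covers the distance twice (out and back), so halve it.
    return SPEED_OF_LIGHT_M_PER_S * round_trip_s / 2.0


def depth_map(round_trip_times: list[list[float]]) -> list[list[float]]:
    """Convert a grid of per-pixel round-trip times into a grid of distances,
    i.e. a simple depth map of the scene."""
    return [[tof_distance_m(t) for t in row] for row in round_trip_times]
```

For scale, a round trip of 10 nanoseconds corresponds to an object about 1.5 metres away, which is why time-of-flight sensors need very fine timing resolution.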

Bloomberg reports that Apple will keep both technologies in future iPhones, with both the front and rear cameras supporting 3D sensing. Apple has reportedly begun discussing the rear-facing 3D sensor with prospective suppliers. Infineon Technologies AG, Sony Corp., STMicroelectronics NV and Panasonic Corp. are among the companies that manufacture time-of-flight sensors. People familiar with the project say the work is still at an early stage and the technology may not make it into the final product.

A recent investor note by KGI Securities analyst Ming-Chi Kuo suggested that Apple may once again launch three new iPhones in 2018, with all the models ditching Touch ID in favour of Face ID and featuring the notch that houses the TrueDepth sensors.

Apple has already begun targeting AR platforms with the launch of ARKit earlier this year. Apple CEO Tim Cook believes augmented reality can be as big as the smartphone itself, and the company has reportedly started work on mass-market AR glasses.

With ARKit, Apple has enabled AR support on iPhones as old as the iPhone 6s, letting developers build augmented reality applications. The framework reportedly works well with horizontal surfaces such as floors and tables but struggles with vertical ones like walls or doors. A rear 3D sensor could address that by providing more accurate depth information.

Google's Project Tango devices, namely the Lenovo-made Phab 2 Pro and Asus' ZenFone AR, use a time-of-flight approach for depth sensing with depth sensors from Infineon. The technology is said to be easier to assemble than the TrueDepth system because it relies on an advanced image sensor rather than on the precise positioning of the laser.