From 400 TOPS in servers to 450 TOPS in laptops: The AI 100 revolution

Dell’s Pro Max Plus laptop uses two Qualcomm AI 100 chips to deliver 450 TOPS of AI performance without any cloud connection.

The AI 100 began as a 400 TOPS server accelerator, prized for its large on-die SRAM and power-efficient design.

Dual AI 100 modules share a unified 64 GB LPDDR4x pool with 136 GB/s bandwidth, enabling large AI models to run entirely on the laptop.

Picture this: you’re sitting in a coffee shop, running the same AI models that tech giants use in their massive data centres, all from your laptop. No internet connection required, no cloud computing fees, and no concerns about data privacy. This isn’t science fiction. It’s exactly what Dell achieved when they launched the Pro Max Plus laptop with Qualcomm’s AI 100 chip, marking a pivotal moment in computing history.

The story of how a chip designed for server farms ended up powering laptops is fascinating, and it’s reshaping how we think about AI computing altogether.

The unlikely journey from data centre to desktop

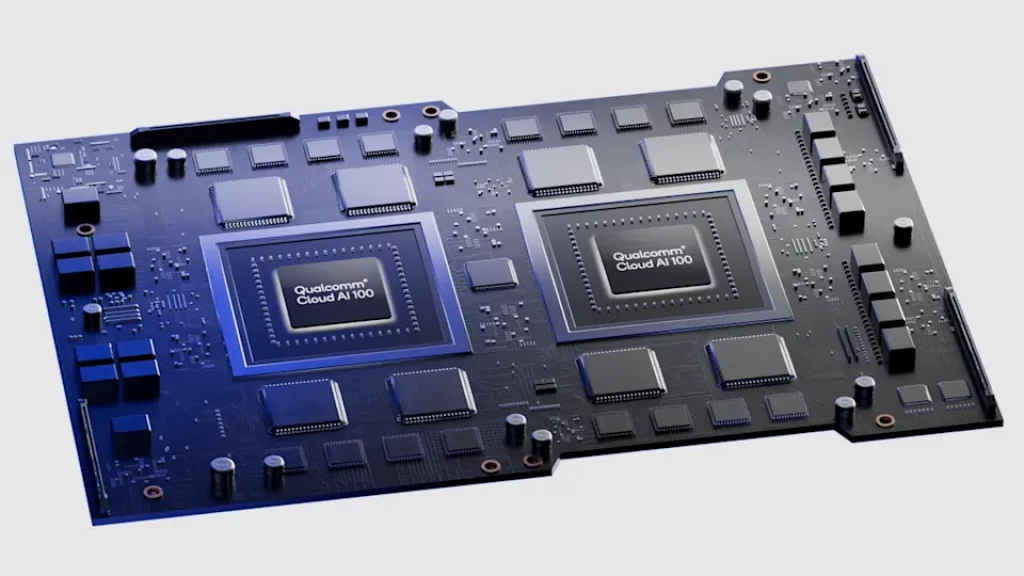

The Qualcomm Cloud AI 100 wasn’t originally meant for laptops. When Qualcomm first announced this chip in 2019, it was squarely aimed at data centres and enterprise infrastructure. Built on a 7-nanometer process, the original design featured up to 16 AI cores with 144MB of on-die SRAM, specifically engineered for 5G intelligent edge compute appliances and data centre inference workloads.

The chip came in various configurations to suit different deployment scenarios. The edge-optimised M.2 cards delivered 70 TOPS whilst consuming just 15 watts, whilst the full PCIe cards pushed 400 TOPS at 75 watts.
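The efficiency gap between the two configurations is easy to check with a few lines of arithmetic. The snippet below is only a sketch using the peak figures quoted above; the function name `tops_per_watt` is illustrative, and peak TOPS divided by board power is a rough proxy, not a measured benchmark.

```python
# Peak figures as quoted in the article: (peak TOPS, board power in watts).
CONFIGS = {
    "M.2 edge card": (70, 15),
    "PCIe card": (400, 75),
}


def tops_per_watt(tops: float, watts: float) -> float:
    """Peak throughput divided by board power (a rough efficiency proxy)."""
    return tops / watts


for name, (tops, watts) in CONFIGS.items():
    print(f"{name}: {tops_per_watt(tops, watts):.2f} TOPS/W")
```

On these numbers the M.2 card works out to about 4.67 TOPS per watt and the PCIe card to about 5.33, so the larger card is slightly more efficient at peak despite drawing five times the power.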

What made the AI 100 special wasn’t just its raw performance, but its efficiency. Qualcomm designed it with a large amount of SRAM on each AI core, 9MB per core, which dramatically reduced the need to fetch data from external memory. This architectural choice proved crucial for power efficiency, as accessing external memory is one of the biggest energy drains in AI processing.

The chip also supported coherent multi-card scaling, allowing multiple AI 100 units to communicate directly through PCIe switches without involving the host processor. This feature enabled massive parallel processing setups that could deliver extraordinary performance levels.

Dell’s bold gamble: Ditching graphics for AI

When Dell announced the Pro Max Plus laptop at Dell Technologies World 2025, they made a decision that surprised many in the industry. Instead of including a traditional graphics card, they replaced the entire discrete GPU slot with a Qualcomm AI 100 PC Inference Card.

This wasn’t a minor tweak; it was a fundamental reimagining of what a laptop could be. The Dell Pro Max Plus became the world’s first mobile workstation to feature an enterprise-grade discrete neural processing unit. The configuration includes two AI 100 chips working together, providing 32 AI cores and 64GB of LPDDR4x memory.

The performance figures are staggering. Whilst typical laptop NPUs like Apple’s M4 Neural Engine deliver around 38 TOPS, and Intel’s latest chips reach 48 TOPS, Dell’s Pro Max Plus delivers approximately 450 TOPS. That’s roughly ten times more AI processing power than competing platforms.
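The "roughly ten times" figure can be sanity-checked against the two comparison points above. This is a sketch that compares peak TOPS only, using the article's numbers; the names are illustrative, and vendors do not always quote peak TOPS at the same precision, so treat the ratios as indicative.

```python
# Peak TOPS figures as quoted in the article.
PRO_MAX_PLUS_TOPS = 450
LAPTOP_NPU_TOPS = {
    "Apple M4 Neural Engine": 38,
    "Intel (latest, per the article)": 48,
}


def speedup(baseline_tops: float, target_tops: float = PRO_MAX_PLUS_TOPS) -> float:
    """Ratio of the target's peak TOPS to a baseline's peak TOPS."""
    return target_tops / baseline_tops


for name, tops in LAPTOP_NPU_TOPS.items():
    print(f"vs {name}: {speedup(tops):.1f}x")
```

The ratios come out at about 11.8x against the 38 TOPS figure and about 9.4x against the 48 TOPS figure, which brackets the "ten times" claim.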

To put this in perspective, the laptop can handle AI models with 109 billion parameters, the same scale of models that typically require cloud infrastructure or powerful server farms. During the launch demonstration, Dell ran a 109-billion-parameter Llama 4 model entirely offline, without any internet connection or cloud server assistance.

The technical marvel behind the magic

The Qualcomm AI 100 PC Inference Card can be considered a remarkable feat of engineering. Each of the dual chips comes equipped with 32GB of LPDDR4x memory, but they’re presented to the system as a unified 64GB memory pool with peak bandwidth reaching 136GB/s. This unified memory architecture allows the system to keep entire large language models in local RAM without constantly pulling data from system memory.
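To see what a 64GB pool means for a 109-billion-parameter model, it helps to work out how much space the weights alone would need at different precisions. The sketch below rests on assumptions that are not in the article: it counts weights only (no activations or key-value cache), uses decimal gigabytes, and includes a 4-bit case purely for illustration, since the article does not say which precision Dell's demo used. The second function is a simple memory-bound estimate that assumes every weight is read once per generated token.

```python
POOL_GB = 64          # unified LPDDR4x pool quoted in the article
BANDWIDTH_GB_S = 136  # peak bandwidth quoted in the article


def weights_gb(params_billion: float, bits_per_weight: int) -> float:
    """Weight storage in decimal GB: parameters x bits / 8."""
    return params_billion * bits_per_weight / 8


def fits_in_pool(params_billion: float, bits_per_weight: int,
                 pool_gb: float = POOL_GB) -> bool:
    """True if the weights alone fit in the memory pool."""
    return weights_gb(params_billion, bits_per_weight) <= pool_gb


def max_decode_tokens_per_s(bandwidth_gb_s: float, gb_read_per_token: float) -> float:
    """Upper bound on tokens/s if decoding is limited by memory bandwidth."""
    return bandwidth_gb_s / gb_read_per_token


for bits in (32, 16, 8, 4):
    size = weights_gb(109, bits)
    verdict = "fits" if fits_in_pool(109, bits) else "does not fit"
    print(f"{bits:>2}-bit weights: {size:6.1f} GB -> {verdict} in {POOL_GB} GB")

bound = max_decode_tokens_per_s(BANDWIDTH_GB_S, weights_gb(109, 4))
print(f"Bandwidth-bound ceiling at 4-bit, all weights read per token: {bound:.1f} tokens/s")
```

Under these assumptions only the 4-bit case (54.5 GB) fits in the pool, and reading all of those weights for every token would cap generation at roughly 2.5 tokens per second. Models that touch only part of their weights per token would read less data and so sit under a higher ceiling.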

The card connects via PCIe and operates within a thermal envelope of up to 150 watts. Unlike traditional graphics cards optimised for parallel graphics processing, the AI 100 is purpose-built for neural network operations and AI inferencing. This specialisation provides superior performance-per-watt for AI workloads compared to general-purpose graphics processors.

The chip’s architecture includes several key innovations that make it particularly effective for AI tasks. The large amount of on-die SRAM means that frequently accessed data stays close to the processing cores, reducing latency and power consumption. The support for multiple data types, including INT8, INT16, FP16, and FP32, allows it to handle different AI model requirements efficiently.

Security features are also built into the hardware, including Hardware Root of Trust, secure boot, and firmware rollback protection. These features are particularly important for enterprise users who need to ensure their AI models and data remain secure.

The broader industry impact

The Pro Max Plus is designed for a specific professional audience: AI engineers, data scientists, and developers who need to run massive AI models locally. Processing AI workloads without cloud connectivity removes the data-privacy concerns of sending data off the device, along with the model-integrity concerns that can arise when models are compressed for cloud processing.

Dell and Qualcomm’s collaboration signals a significant shift in how the industry approaches AI computing. For years, the assumption has been that serious AI workloads require either cloud infrastructure or dedicated AI servers. The Pro Max Plus demonstrates that enterprise-grade AI processing can be made portable without compromising performance.

This development challenges other laptop manufacturers to reconsider their own AI strategies. Whilst most companies have focused on integrating AI capabilities into existing processors, Dell’s approach of using discrete AI acceleration hardware opens up new possibilities for mobile workstations.

Power efficiency: The unsung hero

One of the most impressive aspects of the Qualcomm AI 100 is its power efficiency. According to MLPerf benchmarks, the AI 100 consistently delivers the highest performance-per-watt in its category. This efficiency translates into real-world benefits for laptop users, including longer battery life and reduced heat generation.

The power efficiency gains are substantial. Qualcomm’s analysis suggests that AI 100-based systems can deliver significant energy savings compared to GPU-based alternatives. For large-scale deployments, these savings can amount to hundreds of millions of dollars annually and substantial reductions in carbon footprint.

In a laptop form factor, this efficiency means that users can run intensive AI workloads without the machine becoming uncomfortably hot or draining the battery rapidly. The 150-watt thermal envelope is manageable within a well-designed laptop cooling system.

Looking towards the future

The introduction of the Qualcomm AI 100 Ultra variant further demonstrates the potential of this architecture. The Ultra version can support 100-billion parameter models on a single 150-watt card and 175-billion parameter models using two cards. This scalability suggests that even more powerful laptop configurations could be possible in the future.

The collaboration between Dell and Qualcomm also highlights how chip designs originally intended for one market can find new applications elsewhere. As AI workloads become more diverse and demanding, we’re likely to see more examples of server-grade hardware being adapted for mobile use.

The success of this approach could influence how other chip manufacturers design their AI accelerators. Rather than creating separate chips for different markets, companies might focus on creating more versatile designs that can scale from edge devices to data centres.

Sagar Sharma

A software engineer who happens to love testing computers and sometimes they crash. While reviving his crashed system, you can find him reading literature, manga, or watering plants.