OpenAI’s GPT-5 matches human performance in jobs: What it means for work and AI

On September 25, 2025, OpenAI dropped a bombshell: its latest model, GPT-5, now “stacks up to humans in a wide range of jobs.” The declaration ripples far beyond the world of AI benchmarks; it raises urgent questions about the future of work, the boundary between human and machine, and how societies will adapt when tools become peers.

What does it really mean, though? And, more importantly, what comes next?


GDPval: Measuring economic AI performance

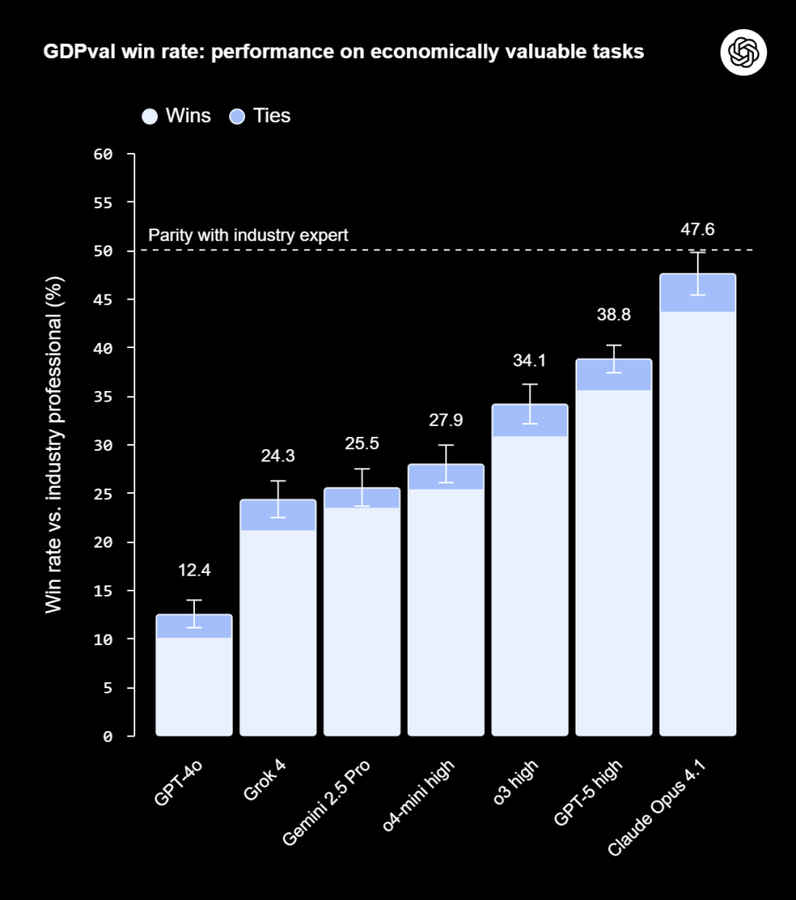

To make its case, OpenAI introduced GDPval, a new benchmark built to test AI against humans in economically meaningful roles. The benchmark draws on nine industries that are major contributors to U.S. GDP (healthcare, finance, manufacturing, government, and others) and spans 44 occupations, from nurses to software engineers to journalists.

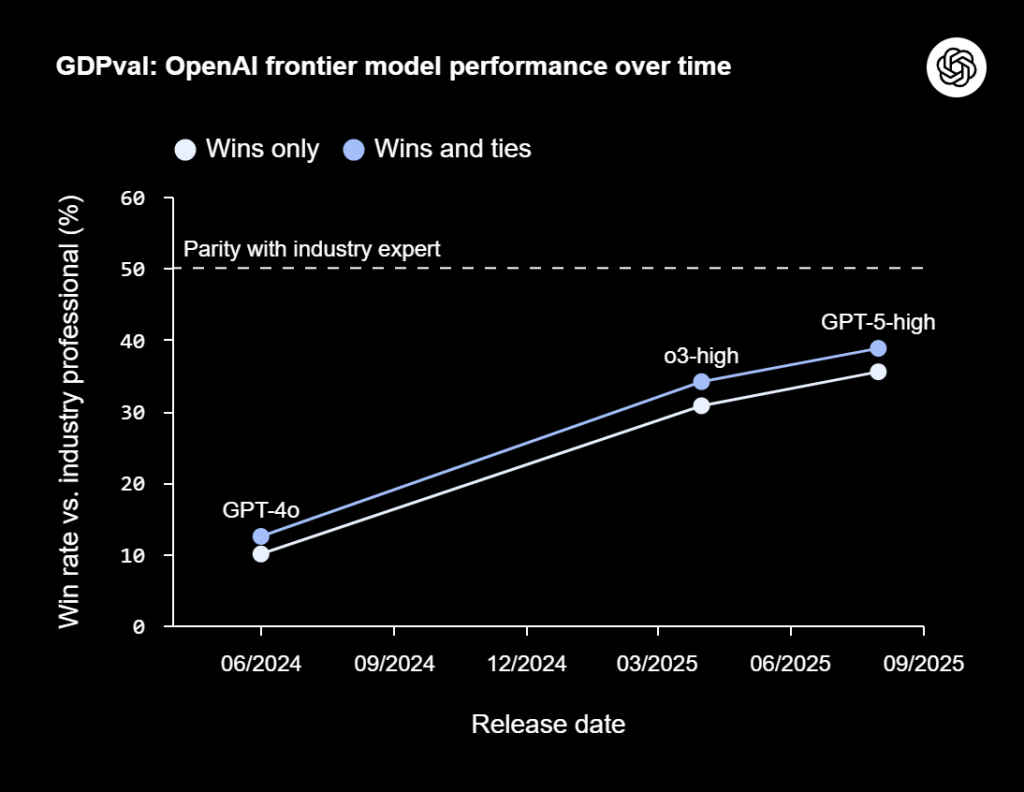

In the first version (GDPval-v0), experienced professionals compare deliverables, such as reports, produced by human experts and by the AI, then judge which is better. GPT-5 in a “high compute” configuration (GPT-5-high) achieved a “win or tie” rate of 40.6%: in that share of comparisons, its output was rated better than, or as good as, expert-level human work. That is a staggering leap: GPT-4o (OpenAI’s earlier multimodal model) scored 13.7% in the same setup.

OpenAI also tested Claude Opus 4.1 (from Anthropic), which scored 49% in the same evaluation, though OpenAI cautions that part of that lead could be due to stylistic “presentation” (e.g., “pleasing graphics”) rather than pure substance.
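To make the metric concrete: a “win or tie” rate is the share of comparisons in which graders rated the model’s deliverable as better than, or as good as, the expert’s. The sketch below computes it from a made-up list of verdicts. The data, the three-way “win”/“tie”/“loss” labels, and the function name `win_or_tie_rate` are illustrative assumptions; OpenAI’s actual GDPval grading and aggregation may differ.

```python
from collections import Counter

# Hypothetical grader verdicts, for illustration only (not GDPval data):
# one entry per task, recording whether the model's deliverable was judged
# better than ("win"), as good as ("tie"), or worse than ("loss") the expert's.
verdicts = ["win", "loss", "tie", "loss", "win", "loss", "loss", "tie", "loss", "loss"]


def win_or_tie_rate(verdicts: list[str]) -> float:
    """Return the fraction of tasks where the model won or tied."""
    if not verdicts:
        raise ValueError("need at least one verdict")
    counts = Counter(verdicts)
    unknown = set(counts) - {"win", "tie", "loss"}
    if unknown:
        raise ValueError(f"unexpected verdicts: {sorted(unknown)}")
    # Wins and ties both count toward the rate; only losses are excluded.
    return (counts["win"] + counts["tie"]) / len(verdicts)


rate = win_or_tie_rate(verdicts)
print(f"win-or-tie rate: {rate:.1%}")  # 2 wins + 2 ties out of 10 tasks
```

Read this way, GPT-5-high’s 40.6% also means the human expert’s work was judged strictly better in the remaining 59.4% of comparisons.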

OpenAI frames GDPval not as a final arbiter but as a stepping stone – a way to push the conversation beyond narrow academic benchmarks and into real-world tasks.

Why this is different

What sets this announcement apart is not just the raw numbers but the framing: this is a claim of approaching task parity not on chess puzzles or math exams, but on work that people actually do in their jobs.

That said, OpenAI is careful to spell out the limitations. GDPval-v0 tests are narrow in scope: they are static and non-interactive, and they focus on output artifacts (reports, analyses) rather than on the full complexity of many jobs. Many real roles involve collaboration, stakeholder negotiation, on-the-fly adaptation, creativity, ethics, domain nuance, and interpersonal context, aspects that are hard to reduce to benchmark prompts.

Accordingly, OpenAI acknowledges that it is not yet positioning GPT-5 to replace whole roles. Rather, the goal is augmentation: letting humans offload lower-level cognitive work so they can spend more time on judgment, oversight, vision, and context. “People in those jobs can now use the model to offload some of their work and do potentially higher value things,” said OpenAI’s chief economist, Aaron Chatterji.

Even so, the gap between “assistive AI” and “competent peer AI” is narrowing, and for many the trajectory is disquieting. The question is whether workplaces and societies will adapt fast enough.

What it means for jobs

1. Workers: fear, opportunity, upheaval

For many professionals, this moment will feel existential. If machines begin writing reports, diagnosing medical cases, or performing legal drafting at near-human quality, the sense that “my job is safe” becomes shaky.

But the impact will be uneven. Roles built on structured tasks (analysis, drafting, pattern recognition) are more exposed; roles grounded in human empathy, trust, high-stakes judgment, or the messy real world may resist substitution, at least for some time.

Even if your job stays intact, your tools will likely change. Expect increasing automation of daily workflows, with AI copilots becoming standard. Your value may shift toward meta-skills: oversight of AI, domain interpretation, accountability, and human relationship skills.

2. Companies and industries: reorganization, efficiency, disruption

Firms will rush to adopt any productivity multiplier. For industries with tight margins (consulting, finance, legal, media), the pressure to incorporate GPT-5–level automation will be immense.

This may accelerate restructuring: flatter teams, fewer middle layers, more emphasis on hybrid human–AI squads. Some firms might experiment with replacing junior human roles first. Others might lean into differentiation – human judgment, ethics, brand – as their edge.

But adoption won’t be frictionless. Integration, reliability, auditing, legal liability, and ethical guardrails will all become battlegrounds. Will clients accept AI-drafted legal memos? Will regulators allow AI medical assistants to operate with minimal oversight? The answers will vary by jurisdiction.

3. Education, policy, and society: catching up

If AI is now approaching human-level competence in real work tasks, education systems must rethink what they teach. Memorization and routine report writing become less valuable than critical thinking, interpretive insight, collaboration, and ethical reasoning.

Policymakers will also face hard questions: social safety nets, labor transitions, regulation of AI in high-stakes domains (medicine, law, defense), certification, liability, and IP. How do you audit or validate AI work when it competes with human professionals?

Further, inequality may widen. Organizations with early access to strong AI systems and capital to deploy them could dramatically outpace smaller players and local firms, both within and across countries.

4. AI itself: the trajectory toward AGI

OpenAI frames this as progress toward its long-term ambition: building Artificial General Intelligence (AGI). GDPval is one metric – but not the ultimate one. If GPT-5 is nearing human-level performance in many domain tasks, then the next frontier is robustness, generality, safety, interactive workflows, long-term planning, object permanence, world models, adaptability under uncertainty – in short, the qualities that humans bring to open-ended problems.

Thus, GPT-5’s performance is both a milestone and a challenge: can AI systems maintain trust, explainability, correctness, and alignment as they push closer to human-level agency?

The human angle: stories from the edge


To understand the real emotional texture of this shift, consider a mid-career financial analyst. In 2027, she’s asked to pilot a workflow in which GPT-5 drafts her weekly competitor analyses while she reviews, edits, and presents them. Some weeks, the AI’s draft is better than what she might have written; other weeks, it’s off in subtle ways. Her role evolves: she’s no longer just doing the grunt report work but has to coach, correct, interpret, and contextualize. Her value shifts upward, but also precariously: any slip, or any superior AI, and she may become expendable.

Or take a junior lawyer at a city firm. For routine contractual clauses and first-draft memos, his firm lets GPT-5 produce baseline versions. His “added value” becomes spotting edge cases, bringing empathy to client communication, and managing relationships. He gains speed, but he also competes with a tool that could someday absorb his tasks entirely.

These vignettes are hypothetical, but they are not far-future speculation; they extend workflows that already exist today. AI’s benchmark gains today portend shifts in incentives, culture, and risk tomorrow.

Obstacles and open questions

Benchmarks are controlled settings. In the wild, AI still struggles with domain drift, nuance, ambiguity, hallucination, and adversarial prompts.

For human-level tasks, users will also demand explanations, provenance, and accountability. Can systems provide these in a way humans accept?

As AI handles more important tasks, the cost of misalignment or error rises, making guardrails, oversight, and fail-safes essential.

The legal questions remain murky. Who is liable for errors? Should outputs carry legal disclaimers? Can AI-generated work be copyrighted or patented?

Finally, there is access. Who gets the strongest models? If large companies deploy GPT-5 broadly, small firms and under-resourced regions may fall behind.

Rather than herald doom or promise messianic AI, a more balanced take is this: we’re stepping into an era of hybrid intelligence, in which humans and AI gradually integrate their work. Machines will handle patterns, scale, and speed; humans will bring empathy, meaning, oversight, values, and interpretation.

GPT-5’s claimed parity is not a final verdict; it’s a loud signal. The coming years will test whether human societies adapt fast enough, and whether AI serves as enhancement rather than erasure.


Vyom Ramani

A journalist with a soft spot for tech, games, and things that go beep. While waiting for a delayed metro or rebooting his brain, you’ll find him solving Rubik’s Cubes, bingeing F1, or hunting for the next great snack.