Meet GLM-4.5: The most capable open-source AI model yet

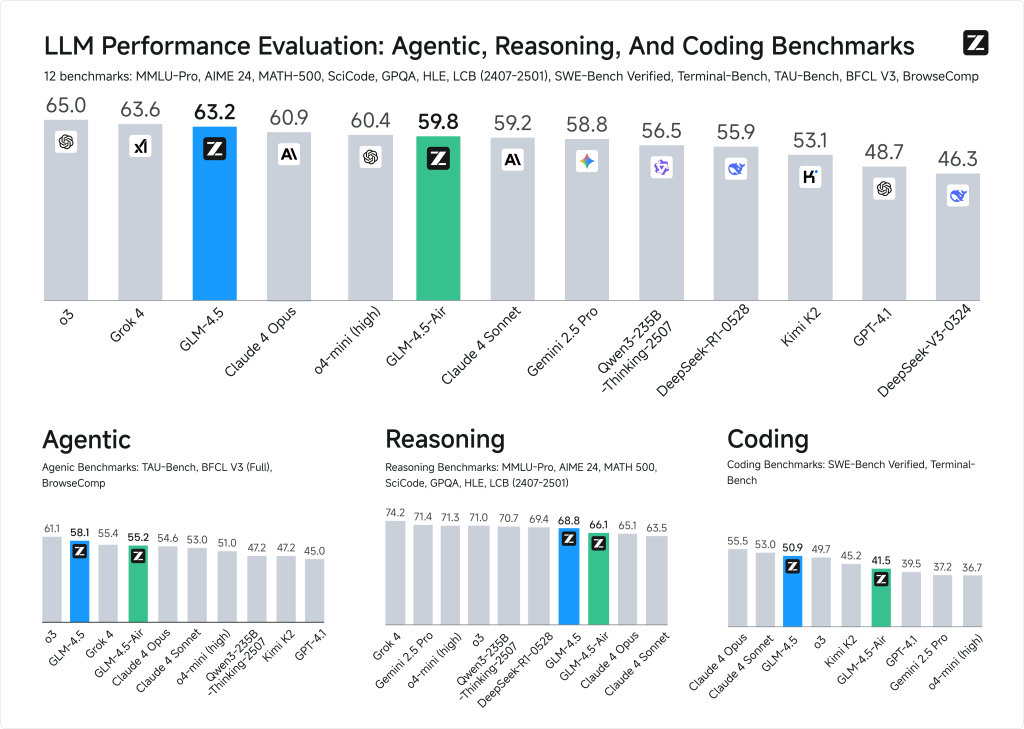

GLM-4.5 outperforms top AI models in coding, reasoning, and real-world tool use benchmarks

Open-source AI GLM-4.5 sets new benchmarks for context retention, tool integration, and agent reliability

Zhipu AI’s GLM-4.5 rivals commercial giants with 128K context, dual-mode thinking, and MIT licensing

While the West buzzes with the rise of OpenAI’s GPT-4.5 and xAI’s Grok-4, a powerful open-source challenger has emerged in the East. Developed by Zhipu AI (also known as Z.ai), GLM-4.5 is the latest entry in the company’s General Language Model series, and it’s already turning heads in the AI research and developer communities.

On paper, GLM-4.5 is a technical marvel: 355 billion total parameters (with 32 billion active), support for 128K-token context windows, a hybrid design that switches between thinking and non-thinking modes, and what Zhipu reports as the highest tool-use accuracy yet recorded by an open-source model. In the benchmarks, it doesn’t just compete; it outperforms nearly every other open alternative in coding, reasoning, and real-world applications.

And perhaps most importantly, it’s free to use under the MIT license.

GLM-4.5: Smart architecture

GLM-4.5 is built on a Mixture-of-Experts (MoE) framework. Rather than running every parameter on every input, a router activates only a small subset of experts for each token (32B active out of 355B total). This lets it compete with GPT-4-class models while keeping per-token compute far below that of a dense model of the same size.
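To make the routing idea concrete, here is a minimal pure-Python sketch of softmax top-k gating, one common MoE routing scheme. It is an illustration under stated assumptions: the eight toy experts, the router scores, `k=2`, and the helper names (`top_k_route`, `moe_forward`) are all hypothetical, and GLM-4.5’s actual router may be designed differently. What it does show is that only the selected experts are evaluated, which is why the active parameter count can be a small fraction of the total.

```python
import math

def softmax(xs):
    # Subtract the max for numerical stability before exponentiating.
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]

def top_k_route(router_logits, k):
    """Return (expert_index, weight) pairs for the k highest-scoring experts."""
    ranked = sorted(range(len(router_logits)),
                    key=lambda i: router_logits[i], reverse=True)
    chosen = ranked[:k]
    # Renormalize over the chosen experts only, so their weights sum to 1.
    weights = softmax([router_logits[i] for i in chosen])
    return list(zip(chosen, weights))

def moe_forward(x, experts, router_logits, k=2):
    """Weighted sum of the selected experts' outputs; unselected experts never run."""
    return sum(w * experts[i](x) for i, w in top_k_route(router_logits, k))

# Eight hypothetical toy "experts": expert i just scales its input by i.
experts = [lambda x, scale=i: scale * x for i in range(8)]
# Hypothetical router scores for a single token.
logits = [0.1, 2.0, -1.0, 0.5, 3.0, 0.0, -0.5, 1.0]

print(top_k_route(logits, k=2))        # experts 4 and 1 are selected
print(moe_forward(1.0, experts, logits))
print(f"GLM-4.5 active fraction: {32 / 355:.1%}")  # 32B of 355B parameters
```

In a real MoE layer the toy scalar experts would be feed-forward sub-networks, but the control flow is the same: score, pick the top k, and combine only those outputs.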

But the standout innovation is its dual-mode processing. GLM-4.5 can toggle between “thinking” and “non-thinking” states, essentially choosing between deep, multi-step reasoning and fast, lightweight response generation depending on the task. The result is more deliberate answers on hard problems without paying for full-throttle compute on every request.
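The trade-off can be pictured with a toy dispatcher that sends a request down a slow, reasoning-heavy path or a fast, short one. This is purely illustrative: the keyword heuristic, the token budgets, and the names `GenerationPlan` and `plan_generation` are hypothetical, and the article does not describe how GLM-4.5 actually decides which mode to use.

```python
from dataclasses import dataclass

@dataclass
class GenerationPlan:
    mode: str            # "thinking" or "non-thinking"
    max_new_tokens: int  # hypothetical output budget for this mode

# Hypothetical cues that a request needs multi-step reasoning.
REASONING_HINTS = ("prove", "step by step", "debug", "plan", "why")

def plan_generation(prompt: str) -> GenerationPlan:
    """Pick a mode with a crude keyword/length heuristic (not GLM-4.5's real logic)."""
    text = prompt.lower()
    needs_reasoning = (any(hint in text for hint in REASONING_HINTS)
                       or len(text.split()) > 200)
    if needs_reasoning:
        # Deep path: allow a long budget for intermediate reasoning.
        return GenerationPlan(mode="thinking", max_new_tokens=8192)
    # Fast path: short, direct answer.
    return GenerationPlan(mode="non-thinking", max_new_tokens=512)

print(plan_generation("What is the capital of France?"))
print(plan_generation("Debug this stack trace step by step"))
```

Substring matching is deliberately crude here (“plan” also matches “planet”); the sketch only shows why a two-mode design can save compute on easy requests.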

For users who want a smaller version, Zhipu has also launched GLM-4.5-Air, a leaner model with 106B total and 12B active parameters, aimed at cheaper inference on more modest GPU setups.
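A back-of-the-envelope calculation shows what these sizes mean for hardware. The sketch assumes weights stored at 16 or 4 bits per parameter and counts weights only (no KV cache, activations, or runtime overhead); the bit widths are illustrative assumptions, not Zhipu’s published deployment figures. It also highlights an MoE caveat: every expert must stay in memory even though only the active ones run for a given token, so MoE saves compute more than memory.

```python
def weight_memory_gb(params_billion: float, bits_per_param: int) -> float:
    """Approximate weight storage in decimal GB (excludes KV cache and activations)."""
    return params_billion * 1e9 * bits_per_param / 8 / 1e9

# (total parameters, active parameters) in billions, as quoted in the article.
MODELS = {
    "GLM-4.5": (355, 32),
    "GLM-4.5-Air": (106, 12),
}

for name, (total_b, active_b) in MODELS.items():
    bf16 = weight_memory_gb(total_b, 16)  # assumed 16-bit weights
    int4 = weight_memory_gb(total_b, 4)   # assumed 4-bit quantized weights
    print(f"{name}: {active_b / total_b:.1%} active, "
          f"~{bf16:.0f} GB at 16-bit, ~{int4:.1f} GB at 4-bit")
```

Even the Air variant needs on the order of tens of gigabytes for weights at aggressive quantization, which is why it suits smaller multi-GPU or high-memory workstations rather than typical edge devices.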

GLM-4.5: Stellar benchmarks

Zhipu AI didn’t just make big claims; it backed them with data. In internal and third-party evaluations, GLM-4.5 pulled ahead in several categories. In code generation, it achieved a 53.9% win rate against top competitors and an astonishing 80.8% win rate in dedicated coding benchmarks. On real-world agent benchmarks requiring API calling, document reading, or search, GLM-4.5 boasted a 90.6% success rate, higher than many closed and open models on record.

With 128K-token support, it sits in the same long-context class as Claude 3 and GPT-4 Turbo for retaining long conversations and analyzing multi-document input. These results push GLM-4.5 into the top tier of open models. In some internal comparisons, Zhipu AI even ranks it behind only xAI’s Grok-4 and OpenAI’s o3.
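Win rates like these summarize head-to-head comparisons between two models. The article doesn’t spell out Zhipu’s scoring protocol, so the sketch below assumes one common convention, counting a tie as half a win; the function name `win_rate` and the outcome counts are hypothetical and are not GLM-4.5 results.

```python
from collections import Counter

def win_rate(outcomes, tie_weight=0.5):
    """Fraction of pairwise comparisons won, counting each tie as `tie_weight` of a win."""
    counts = Counter(outcomes)
    total = counts["win"] + counts["tie"] + counts["loss"]
    if total == 0:
        raise ValueError("no comparisons to score")
    return (counts["win"] + tie_weight * counts["tie"]) / total

# Hypothetical 100 head-to-head trials: 50 wins, 8 ties, 42 losses.
outcomes = ["win"] * 50 + ["tie"] * 8 + ["loss"] * 42
print(f"{win_rate(outcomes):.1%}")                  # ties count as half a win
print(f"{win_rate(outcomes, tie_weight=0.0):.1%}")  # ties count as losses
```

The two printed values differ only in how ties are scored, which is worth keeping in mind when comparing win rates reported by different sources.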

GLM-4.5: Open source with no compromises

GLM-4.5’s significance goes beyond technical specs. At a time when most cutting-edge models are locked behind APIs or usage caps, Zhipu has released GLM-4.5 completely open-source, hosted on Hugging Face and available for enterprise deployment.

That means any developer, startup, or research lab can fine-tune, embed, or deploy the model without worrying about licensing hurdles. And given its performance, it could become the default foundation model for many new AI applications, especially those focused on autonomous agents or tool-using LLMs.

The MIT license also encourages commercial adoption. Early reports suggest that Chinese tech giants and even several European research teams are exploring integrations.

Zhipu AI has been flying under the radar internationally, but GLM-4.5 may change that. The Beijing-based firm, a spin-off from Tsinghua University’s innovation labs, has now released multiple world-class models within two years, including GLM-3, ChatGLM, and now GLM-4.5.

With GLM-5 already hinted at and a growing suite of agent-centric tools, Zhipu seems poised to become a global leader in open, high-performance AI. And with geopolitical concerns growing around closed-source Western models, its timing couldn’t be better.

Vyom Ramani

A journalist with a soft spot for tech, games, and things that go beep. While waiting for a delayed metro or rebooting his brain, you’ll find him solving Rubik’s Cubes, bingeing F1, or hunting for the next great snack.