India’s 8-GPU gambit: Shivaay, a foundational AI model built against the odds

India’s AI ecosystem is at a critical juncture, striving to balance its aspirations for technological self-reliance with the realities of limited resources. Amid this landscape, Rudransh Agnihotri, a 20-year-old entrepreneur and founder of FuturixAI and Quantum Works, has emerged as a prominent figure. His team’s creation, Shivaay, a 4-billion-parameter foundational AI model, has sparked both excitement and controversy. While some hail it as a breakthrough in India’s AI journey, others question its originality and scalability.

“Building Shivaay wasn’t just about creating another language model,” Agnihotri explains. “It was about proving that even with limited resources, India can build something meaningful for its own people, something that understands our languages, our culture, and our problems better than any foreign model ever could.”

The challenge of building Shivaay with limited resources

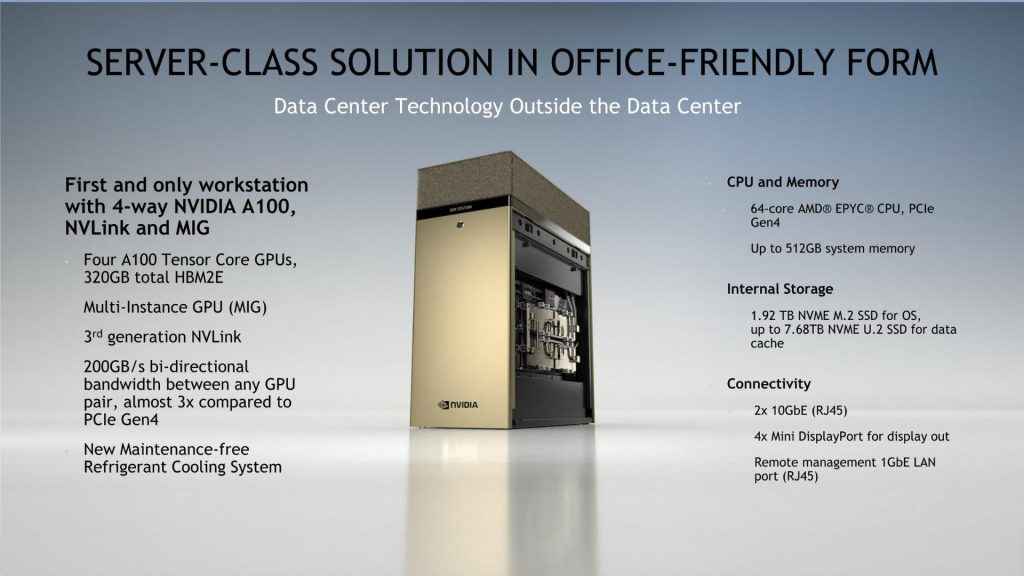

Developing Shivaay was no small feat for Agnihotri and his team of college undergraduates. Without venture capital funding, they had to rely on programmes like NVIDIA Inception and AWS Activate to access GPUs.

“We trained Shivaay on just eight NVIDIA A100 GPUs,” he reveals. “It was challenging because we didn’t have the luxury of bleeding-edge hardware like OpenAI or Anthropic. But it was also an incredible learning experience that forced us to innovate.”

The team adopted techniques inspired by Meta’s LIMA paper, which emphasises using a small set of carefully stylised prompts over massive datasets. “We realised that quality matters more than quantity,” Agnihotri says. “By focusing on properly stylised prompts and synthetic datasets annotated by GPT-4, we were able to optimise the training process significantly.”

Despite these constraints, Shivaay achieved impressive results. The model scored 91.04% on the ARC-Challenge benchmark for reasoning tasks and 87.41% on GSM8K for arithmetic reasoning.

The debate over originality

Shivaay’s release was not without controversy. Following the publication of its benchmark results on Reddit, critics accused the model of being a fine-tuned derivative of open-source architectures like Llama or Mistral. This scepticism intensified after a leaked system ROM (the system-level configuration or preset instructions embedded within the AI model) revealed references to other models in Shivaay’s internal code.

Agnihotri is candid about the origins of Shivaay’s architecture. “We built the architecture from scratch but incorporated knowledge from multiple models,” he says. “This wasn’t about copying; it was about learning from what works while tailoring it for Indian contexts.” He adds that transparency has been a priority: “We’ve published evaluation scripts and benchmarks so anyone can test Shivaay themselves.”

The backlash has also been a learning experience for the team. “The criticism made us realise the importance of implementing robust guardrails,” Agnihotri admits. “We’re now adopting advanced techniques like FP8 quantisation to improve efficiency and reduce memory usage.”
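
As a rough illustration of why lower-precision formats reduce memory usage, the sketch below estimates the storage needed for the weights of a 4-billion-parameter model at different precisions. It is back-of-the-envelope arithmetic only, not the team's method: the function name `weight_memory_gb` is hypothetical, and the estimate ignores activations, optimiser state, and any quantisation scaling factors.

```python
# Back-of-the-envelope estimate of weight storage at different precisions.
# Assumption: only the raw weights are counted (no activations, optimiser
# state, or quantisation scale factors).

PARAMS = 4_000_000_000  # parameter count stated for Shivaay in the article

# Bytes used to store one parameter in each numeric format.
BYTES_PER_PARAM = {"fp32": 4, "fp16": 2, "fp8": 1}


def weight_memory_gb(num_params: int, dtype: str) -> float:
    """Return the weight storage in gigabytes (1 GB = 1e9 bytes)."""
    return num_params * BYTES_PER_PARAM[dtype] / 1e9


if __name__ == "__main__":
    for dtype in BYTES_PER_PARAM:
        print(f"{dtype}: {weight_memory_gb(PARAMS, dtype):.1f} GB")
```

Under these assumptions, storing the weights in FP8 takes half the space of FP16 and a quarter of FP32.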

Why India needs its own AI models

One of Shivaay’s defining features is its ability to process multiple Indic languages, including Hindi, Tamil, and Telugu. This focus on linguistic diversity sets it apart from global models like GPT-4, which often struggle with cultural nuances.

“No foreign model understands a farmer in Nashik or a weaver in Varanasi,” Agnihotri argues passionately. “An Indic foundational model ensures digital sovereignty while addressing local challenges in healthcare, education, and governance.”

This vision aligns with India’s broader AI ambitions under the IndiaAI Mission, which aims to develop indigenous foundational models by 2026. The government has allocated ₹10,372 crore for AI infrastructure and announced plans to deploy 18,000 GPUs nationwide.

However, Agnihotri believes more needs to be done to make high-performance computing accessible to startups. “Yotta offers GPUs at $3/hour—that’s not affordable for most Indian startups,” he points out. “We need subsidised access if we want to level the playing field.”
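
To put the quoted rate in perspective, the sketch below works out what renting GPUs at $3/hour would cost. The $3/hour figure comes from the quote above and is assumed here to be per GPU; the eight-GPU count matches Shivaay's training setup, while the 30-day duration and the function name `rental_cost_usd` are purely hypothetical, since the article does not say how long training took.

```python
# Illustrative GPU rental cost at the rate quoted in the article.
# Assumptions: the $3/hour rate is per GPU, and the 30-day duration is a
# made-up example (the article gives no training duration).

RATE_USD_PER_GPU_HOUR = 3.0  # rate quoted by Agnihotri
NUM_GPUS = 8                 # GPUs used to train Shivaay


def rental_cost_usd(num_gpus: int, hours: float,
                    rate: float = RATE_USD_PER_GPU_HOUR) -> float:
    """Return the total rental cost in US dollars."""
    return num_gpus * hours * rate


if __name__ == "__main__":
    hypothetical_hours = 24 * 30  # 30 days of continuous use (hypothetical)
    cost = rental_cost_usd(NUM_GPUS, hypothetical_hours)
    print(f"{NUM_GPUS} GPUs for {hypothetical_hours} hours: ${cost:,.0f}")
```

Under these assumptions, a single month of continuous use on eight GPUs comes to $17,280.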

Learning from global leaders

Agnihotri draws inspiration from China’s DeepSeek, which recently developed a reasoning model that rivals GPT-4 but at a fraction of the cost. DeepSeek achieved this by optimising its training pipelines and leveraging older GPUs with advanced engineering techniques.

“DeepSeek showed us that innovation doesn’t always require cutting-edge hardware,” Agnihotri notes. “They used mixed-precision training and auxiliary-free loss balancing to maximise efficiency, and we’re exploring them for Shivaay v2.”

He also acknowledges the challenges posed by India’s talent gap in AI research. A recent study revealed that fewer than 2,000 engineers in India specialise in building foundational models from scratch. “This is why collaboration between academia, industry, and government is so crucial,” he emphasises.

The road ahead for Shivaay

Looking ahead, Agnihotri envisions Shivaay evolving into an agentic AI capable of structured reasoning and task-specific applications. The team is already exploring partnerships with IITs to develop use cases in agriculture and healthcare.

“Imagine ASHA workers using Shivaay to detect early signs of tuberculosis or farmers predicting pest outbreaks via satellite data,” he says enthusiastically. However, he remains pragmatic about the challenges ahead: “India should focus on developing domain-specific models rather than trying to replicate GPT’s scale.”

Agnihotri also hopes that initiatives like MeitY’s foundational model scheme will encourage more startups to enter the space. “If we can build smaller, compute-efficient models tailored for specific industries, there’s no reason why VCs wouldn’t invest,” he argues.

Sagar Sharma

A software engineer who happens to love testing computers, and sometimes they crash. While reviving his crashed system, you can find him reading literature, manga, or watering plants.