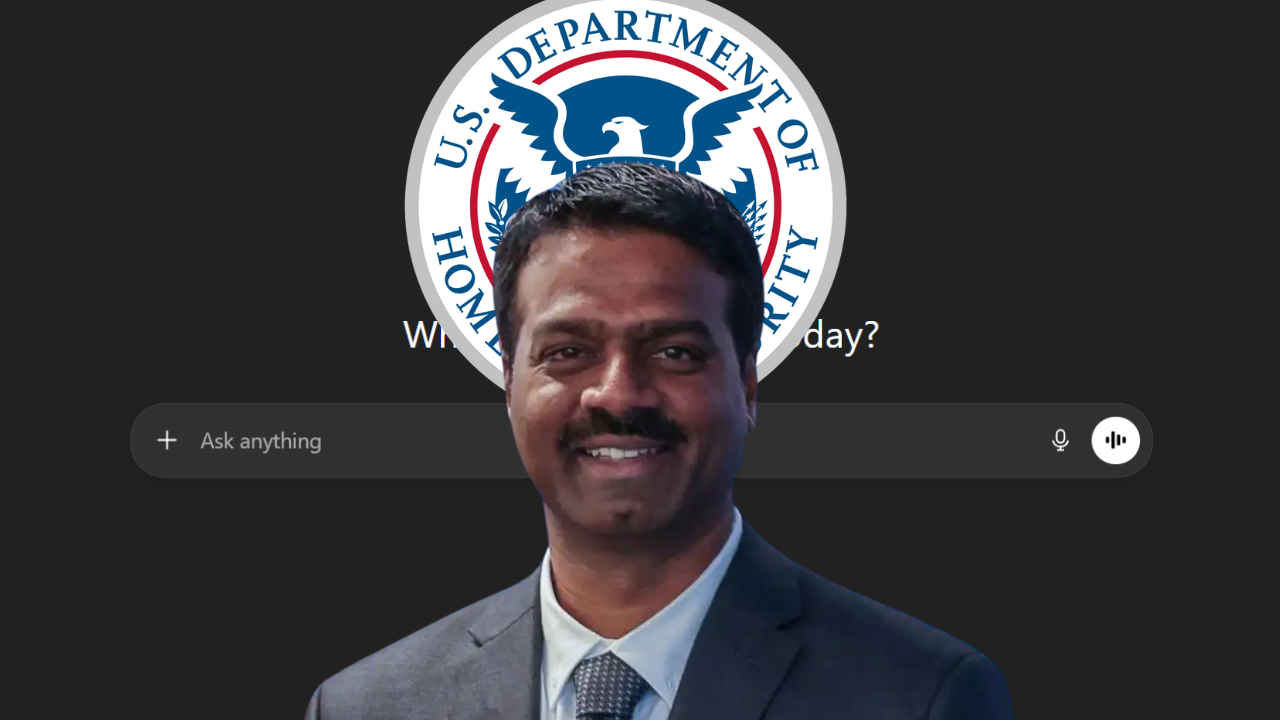

CISA ChatGPT leak: Acting director Madhu Gottumukkala investigation explained

The core principle of modern cybersecurity is “Zero Trust” – never trust, always verify. However, a recent data exposure incident involving Madhu Gottumukkala, the Acting Director of the Cybersecurity and Infrastructure Security Agency (CISA), has highlighted a massive blind spot in federal AI governance: the human element.

Gottumukkala is currently under investigation by the Department of Homeland Security (DHS) after CISA’s internal sensors flagged multiple uploads of sensitive “For Official Use Only” (FOUO) documents to the public, commercial version of ChatGPT.

JUST IN: 🇺🇸 Head of US cyber defense agency CISA Madhu Gottumukkala uploaded sensitive documents into public ChatGPT, prompting a DHS investigation.

— Remarks (@remarks) January 28, 2026

Why public LLMs are a risk

The incident began when Gottumukkala bypassed standard DHS blocks on generative AI tools by requesting a “special temporary exception” in May 2025. While CISA has since stated this was a controlled, limited trial, the technical fallout remains significant.

When a user interacts with the public version of ChatGPT, the data isn’t just processed; it can also be retained. Unless a user opts out, OpenAI’s consumer service may use prompts and uploaded documents in two ways:

- Iterate and Train: Content may be used to refine the weights of future versions of the Large Language Model (LLM).

- Retained Context: Information may persist on the provider’s servers, for example in stores used for Retrieval-Augmented Generation (RAG), raising the concern that it could later surface in responses to other users as if it were “general knowledge.”

For CISA, which handles the blueprint of the nation’s cyber defense, feeding contracting documents into a public cloud means that proprietary government data effectively left the “federal perimeter” and entered a third-party server with no zero-retention guarantee.

Sensors vs. privilege

Perhaps the most striking technical detail is that CISA’s own automated security sensors worked exactly as intended. In the first week of August 2025, these systems – designed to detect the exfiltration of sensitive strings and metadata – triggered multiple high-priority alerts.

These sensors are calibrated to catch “Shadow AI” (the unauthorized use of AI tools). The irony here is that the person responsible for overseeing the nation’s response to such alerts was the one triggering them. This has sparked a DHS-led “damage assessment” to determine whether the FOUO material, which included internal CISA infrastructure details, has been permanently absorbed into OpenAI’s training data.
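To make the idea of a sensor that detects “sensitive strings” concrete, here is a minimal Python sketch of a marking-based check. It is an illustration only, not a description of CISA’s actual tooling: the pattern names, the host list, and the `inspect_upload` function are all hypothetical, and real data loss prevention systems rely on far richer rules and metadata.

```python
import re
from dataclasses import dataclass
from typing import List, Optional

# Hypothetical marking patterns (assumption: documents carry a textual FOUO marking).
MARKING_PATTERNS = {
    "FOUO (abbreviation)": re.compile(r"\bFOUO\b", re.IGNORECASE),
    "FOUO (full phrase)": re.compile(r"\bFor Official Use Only\b", re.IGNORECASE),
}

# Hypothetical set of destinations that are not approved for sensitive data.
UNAPPROVED_HOSTS = {"chatgpt.com", "chat.openai.com"}


@dataclass
class Alert:
    destination: str
    markings: List[str]


def inspect_upload(destination_host: str, text: str) -> Optional[Alert]:
    """Return an Alert if marked text is headed to an unapproved host, else None."""
    host = destination_host.lower()
    if host not in UNAPPROVED_HOSTS:
        return None
    # Collect the name of every marking pattern that appears in the outgoing text.
    found = [name for name, pattern in MARKING_PATTERNS.items() if pattern.search(text)]
    return Alert(host, found) if found else None


if __name__ == "__main__":
    print(inspect_upload("chatgpt.com", "FOR OFFICIAL USE ONLY - contract draft"))
```

A check like this only flags the upload; whether the transfer is blocked, or who reviews the alert, is a policy decision that sits outside the code.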

The government alternative: DHSChat

The investigation also centers on why Gottumukkala eschewed DHSChat. Unlike the public version, DHSChat is a “sandboxed” instance of an LLM. It offers:

- Data Sovereignty: Data never leaves the government-controlled Azure or AWS cloud environment.

- Zero-Training Policy: Prompts are never used to train the base model.

- Audit Logging: Every interaction is logged and searchable for FOIA and compliance purposes.

While the investigation is technical, it follows a period of administrative tension for Gottumukkala. The leak adds to existing scrutiny over his recent failed counterintelligence polygraph and the subsequent administrative leave of career staffers involved in his security vetting.

For the tech industry, the CISA leak is a landmark case study. If the head of one of the world’s most sophisticated cyber defense agencies can fall for the convenience of “copy-paste” AI, it suggests that an AI security policy is only as strong as its most privileged user.

Vyom Ramani

A journalist with a soft spot for tech, games, and things that go beep. While waiting for a delayed metro or rebooting his brain, you’ll find him solving Rubik’s Cubes, bingeing F1, or hunting for the next great snack.