AI and LLMs can get dumb with brain rot, thanks to the internet

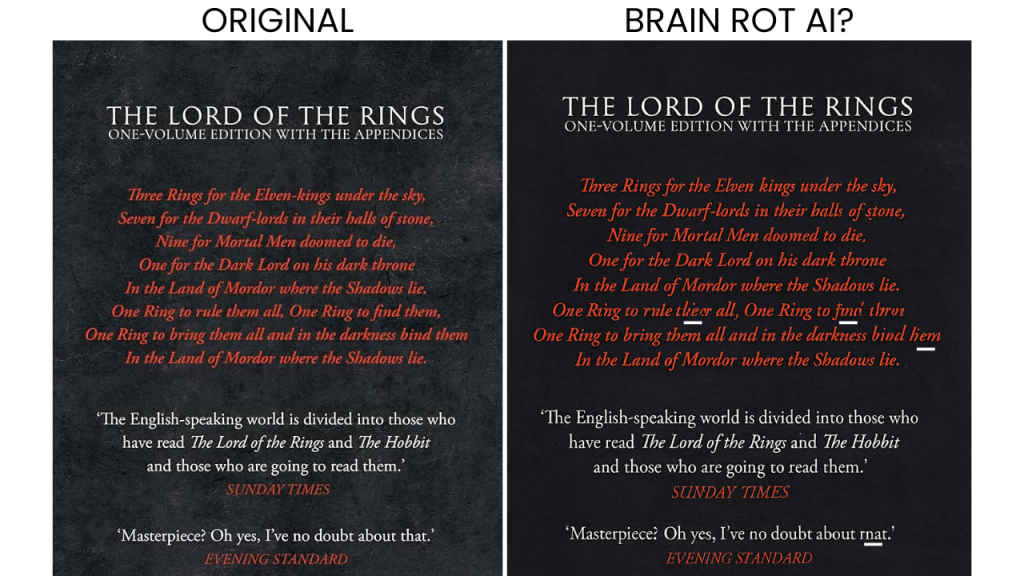

Junk internet data causes measurable cognitive decline in AI models, research claims

Study mirrors human brain rot from doomscrolling and low-quality media

Both humans and AI show lasting damage from digital junk diets, says study

There’s a strange symmetry unfolding between us humans and AI (at least the LLM kind in GenAI). One that feels almost poetic, and a bit tragic. The same way our brains corrode under the endless scroll of tweets, reels, and short videos, LLMs can literally get dumber by consuming too much junk on the internet.

I wish I was joking, but this is one of those ‘reality’s stranger than fiction’ instances. A new paper titled “LLMs Can Get Brain Rot!” by researchers Shuo Xing, Junyuan Hong, Yifan Wang and others, spells it out with scientific precision. Their hypothesis is that continual exposure to junk web text – low-quality, superficial posts optimized for engagement rather than meaning – induces lasting cognitive decline in artificial intelligence. Models trained on that kind of data lose reasoning ability, long-context comprehension, and even start exhibiting personality traits like narcissism and psychopathy.

In other words, what they’re describing, in machine learning parlance, is the digital sickness afflicting AI – the same kind that’s been quietly eroding human cognition for years.

How researchers tested for brain rot in AI (and us)

According to their scientific study, the researchers built their own controlled social media dataset, feeding four different models a diet of “junk” tweets that were short, viral, and emotionally loud. The control group got longer, information-rich content instead. The results were sobering.
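As a rough illustration of the setup described above, here is a minimal Python sketch of how posts might be sorted into "junk" and "control" buckets and then blended at a chosen junk ratio. Everything in it is an assumption made for illustration: the `Post` fields, the word-count and engagement thresholds, and the `label` and `mix` helpers are hypothetical and are not taken from the article or presented as the researchers' actual pipeline.

```python
from dataclasses import dataclass


@dataclass
class Post:
    text: str
    engagement: int  # hypothetical field, e.g. likes plus reposts


# Hypothetical thresholds, chosen only to make the idea concrete.
MAX_JUNK_WORDS = 30
MIN_CONTROL_WORDS = 100
VIRAL_ENGAGEMENT = 500


def label(post: Post) -> str:
    """Tag a post as short-and-viral junk, long-and-quiet control, or unused."""
    n_words = len(post.text.split())
    if n_words < MAX_JUNK_WORDS and post.engagement > VIRAL_ENGAGEMENT:
        return "junk"
    if n_words > MIN_CONTROL_WORDS and post.engagement <= VIRAL_ENGAGEMENT:
        return "control"
    return "unused"


def mix(junk: list, control: list, junk_ratio: float, size: int) -> list:
    """Build a training set of `size` items with the given share of junk."""
    n_junk = round(size * junk_ratio)
    return junk[:n_junk] + control[: size - n_junk]
```

Varying `junk_ratio` from 0.0 to 1.0 while holding `size` fixed is one way to picture the "as junk data increased" comparison: the amount of training text stays the same and only its quality changes.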

Accuracy on the ARC-Challenge reasoning test plummeted from 74.9% to 57.2% as junk data increased. “Thought-skipping” emerged as the primary behavioural symptom – models stopped completing reasoning chains midway, like a student giving up halfway through an exam question because they can’t think or recollect.
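To put those two ARC-Challenge figures in perspective, the size of the drop can be worked out directly. The short Python snippet below uses only the two accuracy numbers quoted above; the variable names are my own.

```python
# Accuracy figures quoted in the article (percent).
acc_before = 74.9
acc_after = 57.2

# Absolute drop in percentage points, and the drop relative to the starting score.
absolute_drop = acc_before - acc_after
relative_drop = absolute_drop / acc_before * 100

print(f"{absolute_drop:.1f} percentage points")  # 17.7 percentage points
print(f"{relative_drop:.1f}% relative decline")  # 23.6% relative decline
```

Put differently, the model lost close to a quarter of its original score on that benchmark.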

Even when the researchers tried to restore the LLMs in the study with clean, high-quality instruction data, the recovery was partial at best. The cognitive scar tissue remained. “Representational drift,” they called it – a kind of data-induced dementia. It’s quite crazy to think that an LLM can develop brain rot that never truly goes away. When you gorge on empty digital calories, even a highly capable neural network gets duller, shallower, and less useful.

Swap the neural nets for real neurons and the parallels become really uncanny. A growing body of research in human psychology has been arriving at the same conclusion: constant exposure to low-quality, sensationalist content – doomscrolling – erodes our focus, empathy, and executive function.

A 2025 review titled “Demystifying the New Dilemma of Brain Rot in the Digital Era” found that compulsive social media use leads to emotional desensitization, memory lapses, and decision-making impairment. A 2022 study, “Doomscrolling Scale: Its Association with Personality Traits and Psychological Distress”, showed strong correlations between doomscrolling and depression, along with a measurable dip in life satisfaction.

Neuroscientists have even found structural changes – reduced grey matter density in regions of the human brain tied to attention and emotional regulation. As Dr. Andreana Benitez at MUSC put it, “It’s like junk food for the brain.” The dopamine spikes from every like and retweet slowly rewire our reward circuits, leaving us less capable of sustained thought. Or as The Guardian memorably described it in their 2024 report, “It’s like being in a room where people are constantly yelling at you.”

Humans and AI seem to be more alike

Both humans and machines appear to suffer from the same cognitive malnutrition. The mechanism differs – one biochemical, the other algorithmic – but the consequence is the same: a collapse of depth.

For LLMs, junk data triggers “thought-skipping” loops. For humans, it manifests as shortened attention spans and compulsive tab-switching. In both cases, what gets lost is the ability to sustain context: holding multiple threads of reasoning in working memory long enough to derive any useful insight.

The AI study suggests that when a model consumes too much engagement-optimized content, it stops learning meaning and basically learns to imitate noise. You could replace “model” with “teenager on Instagram Reels,” and the sentence would still apply and feel surreal.

As for good news or a silver lining, there appears to be none as of now. There’s no easy detox, for us humans or for the LLMs powering GenAI. The research paper found that retraining LLMs on high-quality text only partially restored their cognitive capacity. Likewise, no meditation app can fully undo years of doomscrolling-induced dopamine depletion.

But the study does say something about intelligence. Be it human or artificial, intelligence isn’t a static value but a dynamic one that depends on the quality of its inputs. Feed it noise, and it decays. Feed it signal, and it thrives. Maybe that’s the ultimate mirror this research holds up.

Jayesh Shinde

Executive Editor at Digit. Technology journalist since Jan 2008, with stints at Indiatimes.com and PCWorld.in. Enthusiastic dad, reluctant traveler, weekend gamer, LOTR nerd, pseudo bon vivant.