Yann LeCun warns of a Robotics Bubble: Why humanoid AI isn’t ready yet

Yann LeCun urges focus on AI world models over flashy robots

Humanoid robot startups risk collapse without breakthroughs in common-sense AI

Meta’s JEPA research could redefine machine learning foundations for robotics

The dream of domestic robots, of intelligent machines seamlessly integrating into our daily lives, is a pervasive one, fueling the ambitions of countless startups and tech giants alike. From Boston Dynamics’ astonishingly agile Atlas to a steady stream of more refined prototypes hinting at future home assistants, the vision of a metallic companion assisting with chores or elder care seems perpetually just around the corner. Yet, amidst the flurry of investment, the dazzling demos, and the palpable excitement, a stark warning has been issued by one of the founding fathers of modern AI: Yann LeCun. The Chief AI Scientist at Meta and a Turing Award laureate, LeCun believes that the current fervor around humanoid robotics, particularly for general-purpose use, is profoundly premature: a bubble awaiting the necessary, fundamental breakthroughs in artificial intelligence itself.

The “big secret” of the industry

In a recent keynote at the MIT Generative AI Impact Consortium Symposium, LeCun didn’t mince words, delivering a sobering dose of reality to an often-overheated field. He characterized the unspoken truth of the humanoid robotics industry: “the big secret of the industry is that no one who’s building those robots has any idea how to make those robots smart enough to be generally useful.” It’s a blunt assessment that cuts through the hype, asserting that while a robot can be painstakingly trained for a highly specific, repetitive task – think assembly lines, intricate surgical procedures, or automated warehouse logistics – the conceptual leap to a truly adaptable, intelligent companion is still a distant one.

The distinction is crucial. While impressive feats of robotic dexterity and navigation are routinely showcased, these are often the result of highly specialized programming and extensive training for a narrow set of tasks. A robot can perfectly sort packages or weld car parts with superhuman precision, but ask it to improvise its way through clearing a cluttered kitchen counter, preparing a meal, or comforting a child, and the limitations of current AI quickly become apparent.

Beyond text: The data bottleneck and lack of common sense

The crux of LeCun’s argument lies in the fundamental limitations of contemporary artificial intelligence, even the much-lauded Large Language Models (LLMs). While systems like ChatGPT have demonstrated astonishing capabilities in processing and generating human-like text, LeCun emphatically states that “we’re never going to get to human-level intelligence by just training on text.” His critique highlights a critical data bottleneck and a profound deficit in what he terms “common sense” understanding.

Consider the sheer volume and richness of data that a human child processes. LeCun points out that by the age of four, a child has taken in roughly as much high-bandwidth sensory data through vision alone – constantly observing, interacting, and learning from their physical environment – as the largest LLMs have consumed from the entire public text corpus. The comparison underscores how little of the physical world text-trained AI has actually experienced. LLMs excel at language because they are trained on language. They can infer relationships, generate coherent sentences, and even “reason” within their textual domain, but they lack a grounded, intuitive grasp of physics, causality, and social dynamics – a common sense that even a house cat possesses. Without this foundational understanding, a robot, regardless of its physical dexterity, remains largely unintelligent in a dynamically changing, real-world environment.
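To get a feel for the scale of that comparison, here is a rough back-of-envelope calculation. Every constant in it is an assumed round figure chosen for illustration, not a number quoted in this article, so treat the result as an order-of-magnitude sketch only.

```python
# Back-of-envelope comparison of visual input vs. text training data.
# All constants are illustrative assumptions, not figures from the article.
WAKING_HOURS_BY_AGE_4 = 16_000         # assumed: roughly 11 waking hours a day for 4 years
OPTIC_NERVE_BYTES_PER_SEC = 2_000_000  # assumed: about 2 MB/s of visual input
LLM_TRAINING_TOKENS = 30e12            # assumed: about 30 trillion tokens of text
BYTES_PER_TOKEN = 3                    # assumed average size of one token


def visual_bytes(hours, bytes_per_sec):
    """Total bytes of visual input over the given number of waking hours."""
    return hours * 3600 * bytes_per_sec


def text_bytes(tokens, bytes_per_token):
    """Total bytes of text in a training corpus of the given token count."""
    return tokens * bytes_per_token


child = visual_bytes(WAKING_HOURS_BY_AGE_4, OPTIC_NERVE_BYTES_PER_SEC)
llm = text_bytes(LLM_TRAINING_TOKENS, BYTES_PER_TOKEN)

print(f"child vision: {child:.2e} bytes")
print(f"LLM text:     {llm:.2e} bytes")
print(f"ratio:        {child / llm:.2f}")
```

Under these assumptions the two totals land within the same order of magnitude, which is the point of the comparison: a few years of looking at the world rivals the text of the public internet.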

The imperative of world models

This lack of “common sense” and a robust “world model” is precisely what, in LeCun’s view, fundamentally holds humanoid AI back. Imagine a domestic robot tasked with a seemingly simple instruction: “Please tidy the living room.” To execute this, the robot needs to perform an incredibly complex chain of inferences and actions. It must identify objects, understand their function, categorize what constitutes “tidying,” anticipate the effects of its actions (e.g., placing a book on a shelf, not on the floor), adapt to unforeseen obstacles (a pet, a misplaced toy), and make nuanced judgments in an ever-changing environment. This is a far cry from a robot programmed to grasp a specific component on an assembly line.

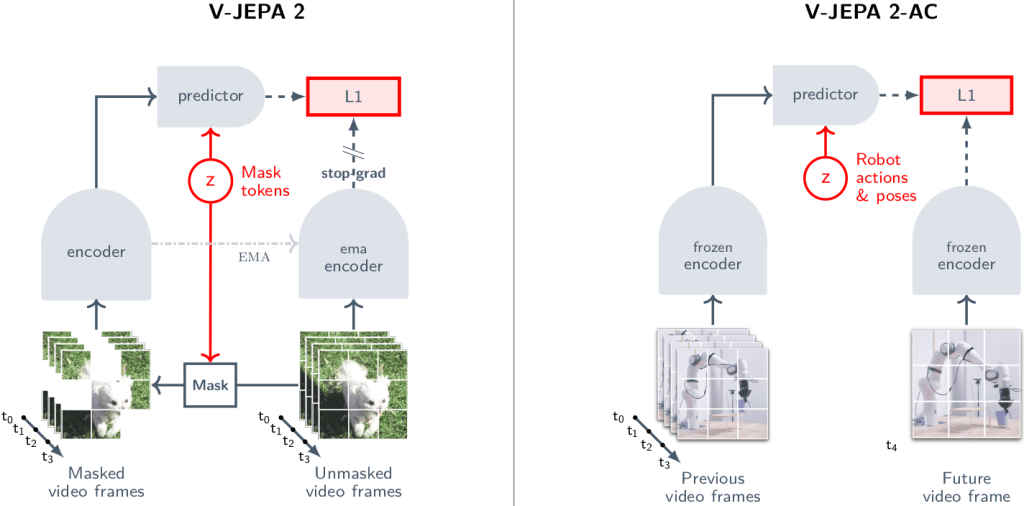

LeCun argues that a “bunch of breakthroughs that need to arrive in AI” are required before such general usefulness becomes a reality. His own research, and that of his team at Meta, offers a compelling glimpse into this necessary future. He champions Joint Embedding Predictive Architectures (JEPA), a non-generative approach to AI that aims to build sophisticated “world models.” Unlike traditional generative models that try to predict every pixel in a future scenario (a near-impossible task given the inherent uncertainty and infinite possibilities of the real world), JEPA focuses on learning abstract, compact representations that capture the core dynamics and underlying structure of the world, filtering out unpredictable, irrelevant details.
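The difference between predicting pixels and predicting abstract representations can be shown with a toy example. This is only a sketch of the idea, not Meta’s implementation: the `encode` and `predict_latent` functions are hypothetical, hand-written stand-ins for components that a real JEPA would learn from data.

```python
import random


def make_frame(position, rng, n_noise=8):
    """A toy 'frame': one predictable value (object position) plus unpredictable detail."""
    return [position] + [rng.uniform(-1, 1) for _ in range(n_noise)]


def encode(frame):
    """Hypothetical encoder: keeps the predictable state and drops the noisy detail.
    In a real JEPA the encoder is learned; here it is hand-written."""
    return [frame[0]]


def predict_latent(z, velocity=1.0):
    """Hypothetical predictor that works on the abstract representation."""
    return [z[0] + velocity]


def mse(a, b):
    """Mean squared error between two equal-length vectors."""
    return sum((x - y) ** 2 for x, y in zip(a, b)) / len(a)


rng = random.Random(0)
current = make_frame(3.0, rng)
future = make_frame(4.0, rng)

# Generative-style objective: predict every "pixel" of the future frame.
# The best guess for the unpredictable detail is its mean (0), so error remains.
pixel_prediction = [current[0] + 1.0] + [0.0] * (len(future) - 1)
pixel_loss = mse(pixel_prediction, future)

# JEPA-style objective: compare prediction and target in representation space.
latent_loss = mse(predict_latent(encode(current)), encode(future))

print(f"pixel-space loss:  {pixel_loss:.3f}")
print(f"latent-space loss: {latent_loss:.3f}")
```

The pixel-space error never reaches zero because the noise cannot be predicted, while the latent-space error does, since the representation only keeps what is predictable. A real system also has to stop its learned encoder from collapsing to a constant output, a problem this toy sidesteps by hard-coding the encoder.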

JEPA: Building a foundation for robotic intelligence

Early successes with V-JEPA (Video JEPA) demonstrate the potential of this approach. Systems trained with this architecture begin to acquire a rudimentary “common sense” about physical interactions. They exhibit predictive errors when impossible or illogical events occur in a video, indicating an implicit understanding of the rules of physics and causality. This ability to implicitly understand how the world works, how objects interact, and what actions lead to what outcomes, is foundational for truly intelligent robotics.
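The “surprise” signal described above can be illustrated with a deliberately simple stand-in. The sketch below assumes a hypothetical world model that expects objects to keep moving at constant velocity, and measures how far each observed step departs from that expectation; it is not how V-JEPA itself is built.

```python
def predict_next(prev, curr):
    """Hypothetical world model: assumes constant velocity between frames."""
    return curr + (curr - prev)


def surprise(trajectory):
    """Per-step absolute prediction error along a 1-D trajectory."""
    return [
        abs(predict_next(trajectory[i - 2], trajectory[i - 1]) - trajectory[i])
        for i in range(2, len(trajectory))
    ]


plausible = [0.0, 1.0, 2.0, 3.0, 4.0, 5.0]
impossible = [0.0, 1.0, 2.0, 9.0, 10.0, 11.0]  # the object "teleports" mid-video

print(max(surprise(plausible)))   # 0.0
print(max(surprise(impossible)))  # 6.0
```

The plausible trajectory produces no prediction error, while the teleporting object produces a spike at the moment the rules are broken. A spike of this kind is what the researchers read as evidence of implicit physical understanding.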

The ultimate goal, according to LeCun, is to equip robots with these sophisticated world models, enabling them to perform zero-shot planning. This means a robot could be given a novel, high-level task – say, “prepare me a sandwich,” or “help an elderly person get dressed” – and, without prior specific training for that exact command, it could autonomously plan and execute a sequence of actions to achieve it. This involves leveraging its learned understanding of how the world works, reasoning through possible outcomes, and selecting the most effective path. This capability would bypass the laborious and inefficient process of traditional reinforcement learning, where every new task requires extensive trial and error and millions of simulations.
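Planning with a world model boils down to imagining the outcome of candidate action sequences and picking the best one. The sketch below shows that loop on a toy grid. The names `world_model`, `cost`, and `plan` are hypothetical, the dynamics are hand-written rather than learned, and brute-force search stands in for whatever optimizer a real system would use.

```python
from itertools import product

# Assumed toy action set on a 2-D grid.
ACTIONS = {
    "left": (-1, 0),
    "right": (1, 0),
    "up": (0, 1),
    "down": (0, -1),
    "stay": (0, 0),
}


def world_model(state, action):
    """Hypothetical dynamics model: predicts the next state for an action."""
    dx, dy = ACTIONS[action]
    return (state[0] + dx, state[1] + dy)


def cost(state, goal):
    """Distance from the goal; the goal is supplied only at planning time."""
    return abs(state[0] - goal[0]) + abs(state[1] - goal[1])


def rollout(start, actions):
    """Imagine the final state reached by following a sequence of actions."""
    state = start
    for action in actions:
        state = world_model(state, action)
    return state


def plan(start, goal, horizon=4):
    """Try every action sequence in imagination and keep the cheapest one."""
    best_seq, best_cost = None, float("inf")
    for seq in product(ACTIONS, repeat=horizon):
        c = cost(rollout(start, seq), goal)
        if c < best_cost:
            best_seq, best_cost = seq, c
    return best_seq, best_cost


seq, final_cost = plan(start=(0, 0), goal=(2, -1))
print(seq, final_cost)
```

Nothing in the planner is trained for a particular goal: changing `goal` changes the plan without any new learning, which is the sense in which such planning is “zero-shot.” The hard part, and the breakthrough LeCun is waiting for, is obtaining a world model that is accurate for kitchens and living rooms rather than a toy grid.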

The future awaits breakthroughs

For LeCun, the future of many robotics companies, particularly those betting heavily on general-purpose humanoids, “basically rests on the field achieving this kind of progress toward world model planning type architecture.” He optimistically predicts that such architectures will become dominant within three to five years, signaling a potential paradigm shift in AI development. But until then, the industry risks an overextension based on present capabilities rather than truly transformative future potential.

So, while the allure of intelligent robots remains undeniably strong, and the sight of a humanoid navigating complex environments is captivating, LeCun’s warning serves as a crucial reality check. The development of truly smart, generally useful humanoids isn’t merely an engineering challenge of building better actuators or more resilient materials; it’s a profound, fundamental AI challenge. It awaits breakthroughs that will endow machines with the intuitive common sense, predictive capabilities, and deep world understanding that we, as humans, often take for granted. Until those breakthroughs arrive, the humanoid robotics bubble, however exciting, may prove to be just that – a captivating but fragile illusion.

Vyom Ramani

A journalist with a soft spot for tech, games, and things that go beep. While waiting for a delayed metro or rebooting his brain, you’ll find him solving Rubik’s Cubes, bingeing F1, or hunting for the next great snack.