Nano Banana to Sora 2: Is no one worried about AI deepfakes anymore?

AI tools like Nano Banana and Sora 2 normalize deepfake realism

Watermarks and metadata fail to prevent widespread misuse or deception

Cultural adoption outpaces concern, blurring lines between fiction and truth

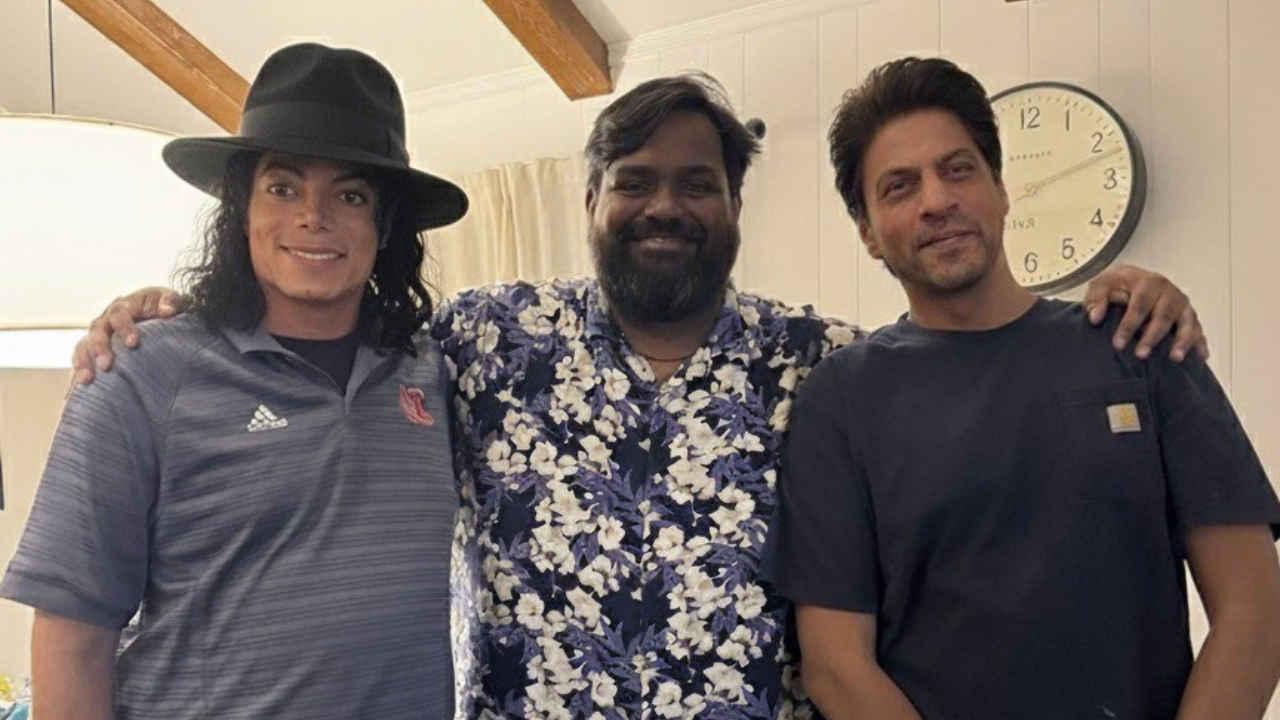

By now, you’ve probably seen it – a friend transformed into a toy figurine on Instagram, a cousin styled like a 90s Bollywood starlet, or maybe your own face morphed into a dreamlike avatar holding hands with your younger self. Or, as in the thumbnail image above, me hugging both Michael Jackson and Shah Rukh Khan a few days ago. All courtesy of Gemini’s Nano Banana, the viral AI image tool that’s swept across India’s social feeds like a monsoon cloudburst that refuses to end.

Not to be outdone, OpenAI’s Sora 2 dropped shortly after – a text-to-video platform so realistic, so fluid in its cinematography, it practically dares you to believe it’s fake. With Sora, users don’t just insert themselves into short films; they almost become the film. Actors in scenes they never shot, characters in memories that never existed.

It’s mind-boggling how easy Nano Banana and Sora 2 have made it to create realistic fakes of anyone. And yet, amid the euphoria and creativity, a question lingers, one nobody seems particularly eager to ask: Is no one worried about AI deepfakes anymore? Because if there was ever a time to worry, it’s now.

Gemini Nano Banana’s going viral in India

Let’s be clear – tools like Nano Banana and Sora 2 are not gimmicks. They represent a radical leap in the accessibility and realism of generative AI. With Nano Banana, everyday users are pumping out photorealistic edits by the hundreds of millions. In India alone, Gemini’s app downloads spiked by 667% after launch, with local users driving nearly 27% of the global Nano Banana user base.

These aren’t just quirky selfies. We’re talking Bollywood-style glamour shots, vintage monochrome portraits, and emotionally charged blends of childhood photos with adult faces – a viral trend dubbed “Hug My Younger Self.” It’s slick, expressive, and just nostalgic enough to disarm critical thinking.

Sora 2, meanwhile, brings a whole new layer: movement, voice, plot. Its “Cameos” feature lets anyone insert their own (or someone else’s) face into AI-generated videos. Suddenly, deepfakes aren’t the fringe experiment of visual effects nerds or political bad actors. They’re just… content. Made in seconds. Shared in minutes. And indistinguishable from reality for most casual viewers.

Watermarks are no defence against deepfakes

Both Google and OpenAI insist they’re aware of the risks. Nano Banana images come tagged with SynthID watermarks, and Sora videos carry metadata disclaimers. But once content leaves their platforms, those safeguards often don’t stick. Remove the watermark, strip the metadata, and what you’re left with is a hyperreal artifact with no fingerprint – a synthetic memory with no origin.

And the platforms know this, just as they also know that frictionless virality is the name of the game in the GenAI battleground. The same tools designed for fun are also – perhaps inevitably – becoming tools for fabrication.

Already, Sora 2 has spawned fabricated celebrity clips, AI-edited political scenes, Sam Altman deepfakes and pseudo-documentary videos that some users have mistaken for news. And Nano Banana has seen more than one case of users uploading altered images to scam sites or sharing hyperreal edits without the subject’s knowledge – a troubling gray zone between creativity and consent.

OpenAI's Sora 2 is a deepfake AI slop machine. It's an unholy abomination. https://t.co/6U1epLIcyE

— Vox (@voxdotcom) October 3, 2025

The normalization of deepfakery

What’s remarkable isn’t that these tools exist, but how quickly we’ve stopped being surprised by them. There was a time when face-swaps and photo retouching sparked public outrage. Now, we retweet deepfakes with laughing emojis. We’re caught somewhere between amusement and apathy.

In India, especially, the cultural uptake has been staggering. What began as an aesthetic playground has quickly merged into the everyday. Brands are using Nano Banana for campaigns. Influencers edit AI cameos into their reels. Bollywood nostalgia is algorithmically enhanced.

We’ve reached a point where AI doesn’t just copy reality – it replaces it, with something smoother, shinier, and more emotionally calibrated. The scary question in all of this: when the fake becomes more appealing than the real, what happens to trust? What happens to memory?

The heart of the matter isn’t just technological – it’s cultural. These tools aren’t being deployed in labs or tucked behind paywalls. They’re free, fun, and frictionless. Which means they’re shaping cultural norms faster than regulators can react.

The question is no longer whether AI-generated deepfakes are dangerous. It’s how comfortable we’ve become with their presence. In that sense, Nano Banana and Sora 2 aren’t just creative tools. They’re social experiments – testing just how far we’ll go to accept fiction as fact, stylization as selfhood, and entertainment as an excuse.

In the end, this article is less about drumming up panic than it is a plea to pay attention. Because we are racing into a future where every face can be faked, every voice simulated, every memory manufactured. And when the tools that do this are dressed up as toys – charming, delightful, harmless – that’s when we’re most at risk of forgetting the line between real and not.

Jayesh Shinde

Executive Editor at Digit. Technology journalist since Jan 2008, with stints at Indiatimes.com and PCWorld.in. Enthusiastic dad, reluctant traveler, weekend gamer, LOTR nerd, pseudo bon vivant.