Google I/O 2025: Google takes on Meta with stylish, AI-powered Android XR glasses

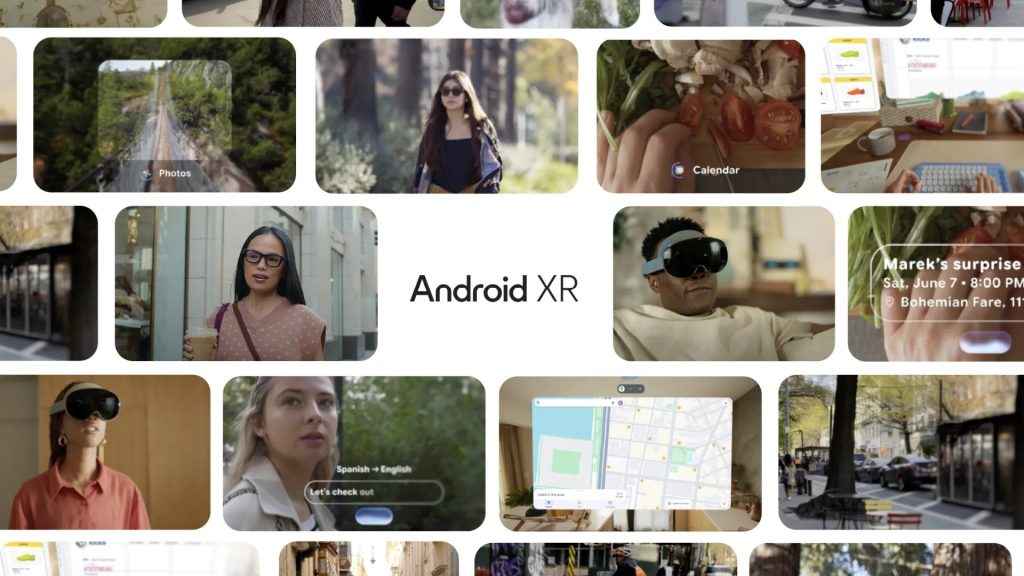

At its annual I/O developer conference, Google unveiled Android XR, a new platform for smart glasses and headsets powered by its Gemini AI. Unlike with the Google Glass experiment of a decade ago, this time the company seems serious not just about what the glasses do but also about how they look.

The vision behind Android XR, Google says, is a seamless blend of augmented reality and artificial intelligence that brings your digital assistant into the real world: it shares your view and responds in real time, hands-free and aware of its context.

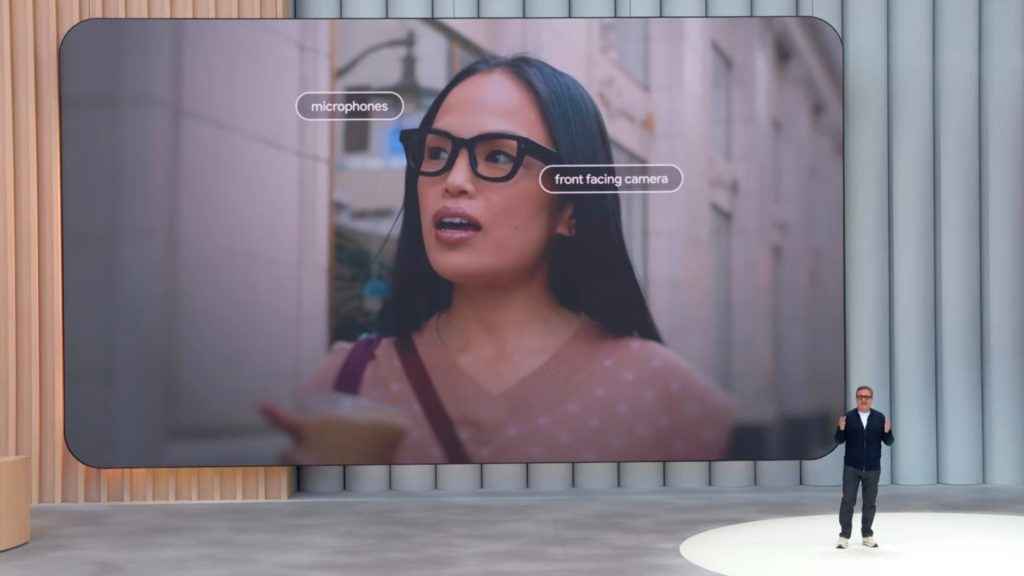

The company demonstrated glasses that can message friends, take photos, offer turn-by-turn directions, and even translate live conversations with AI-generated subtitles. With built-in cameras, microphones, and speakers, these XR glasses sync with your phone and optionally display private, in-lens visual cues, giving you answers, navigation, and reminders right when needed.

What sets this apart from earlier attempts is the emphasis on style. Google announced partnerships with eyewear brands Gentle Monster and Warby Parker, as well as a deeper collaboration with Samsung to co-develop both the platform and reference hardware. Gentle Monster, known for trendsetting designs embraced by global celebrities, adds the kind of fashion credibility that Google Glass sorely lacked.

The first pair of Android XR-enabled glasses will launch later this year under the name Project Aura, built by Xreal. Developers will be able to start building experiences for the platform by the end of 2025.

In a swipe at Meta’s Ray-Ban smart glasses, which have sold over 2 million units, Google’s VP of XR, Shahram Izadi, made it clear that Android XR is designed for both functionality and fashion. While Meta has leaned heavily into familiar shapes like the Wayfarer, Google is betting on diversity: from everyday-friendly frames to bold, Gen Z-favoured styles.

Gemini integration is the core selling point. These glasses don't just capture images and audio; they offer a context-aware AI that understands what you see and hear. Think of it as an AI assistant with eyes and ears, helping you navigate physical and virtual spaces alike.

As Google rolls out this platform, it also acknowledges the need for privacy and user trust. The company has begun testing prototypes with select users and promises to share more on safety and transparency in the coming months.

Siddharth Chauhan

Siddharth reports on gadgets and technology, and you will occasionally find him testing the latest smartphones at Digit. His love affair with tech and futurism, however, extends well beyond that, to the intersection of technology and culture.