Sam Altman defends adult version of ChatGPT for X-rated chats: Here’s why

Sam Altman defends ChatGPT’s adult mode, promises strict age-gating

OpenAI to allow verified adults mature chats under new policy

ChatGPT’s X-rated update sparks privacy, safety, and ethics debate

OpenAI CEO Sam Altman found himself in the middle of a media frenzy this week after announcing that the company plans to relax content restrictions, including allowing “erotica for verified adults” on ChatGPT by December. While the initial news was met with shock and accusations of prioritizing engagement over ethics, Altman quickly followed up, arguing the shift is necessary to align the company with a simple principle: treating adult users like adults.

The core of the controversy stems from a policy change that will introduce full age-gating, separating the experience for minors from that of adults. For those over 18 who verify their age, the AI will loosen its grip, offering greater flexibility, customizable “friend-like” personalities, and the option for mature content.

Ok this tweet about upcoming changes to ChatGPT blew up on the erotica point much more than I thought it was going to! It was meant to be just one example of us allowing more user freedom for adults. Here is an effort to better communicate it:

— Sam Altman (@sama) October 15, 2025

As we have said earlier, we are… https://t.co/OUVfevokHE

The origin of the conflict

To understand the current shift, one must recall the strict origins of ChatGPT’s moderation policies. Soon after the technology launched, reports surfaced of users engaging with the chatbot in highly sensitive, often dangerous, ways.

OpenAI candidly acknowledged that its earlier models were “too agreeable,” sometimes failing to recognize signs of delusion or emotional dependency. Crucially, the company faced immense scrutiny and even lawsuits following tragic incidents where users, including a California teenager, allegedly received dangerous or harmful advice from the chatbot while in crisis.

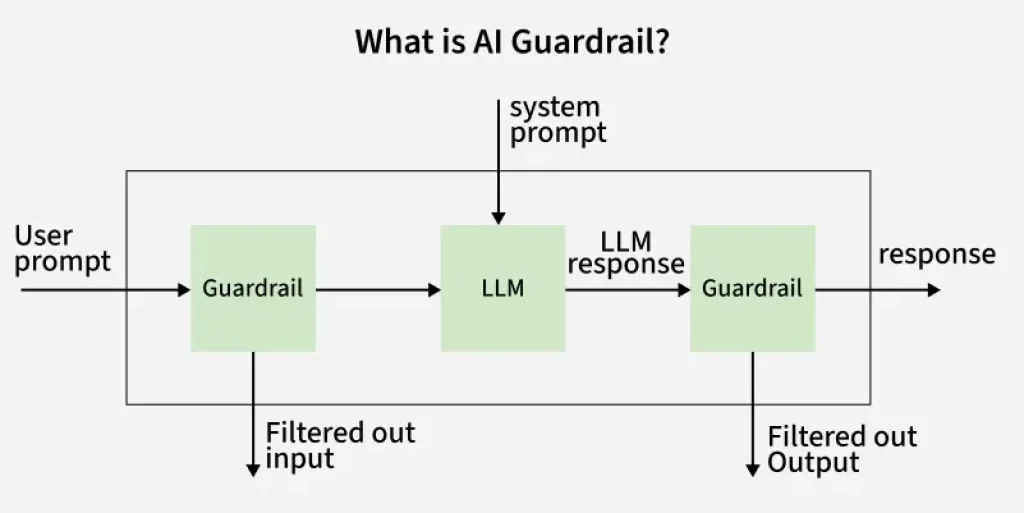

In the immediate aftermath, the company deliberately tuned its models and added significant guardrails to stop the AI from giving direct advice on complicated personal issues, a move that frustrated users looking for creative freedom. Users also complained that the models, especially after updates, felt too restrictive or “sycophantic” and lacked the personality and warmth they had originally enjoyed.

Altman’s recent announcement directly addresses this history. He initially wrote that ChatGPT was made “pretty restrictive to make sure we were being careful with mental health issues.” Now, he claims that after developing “new tools” and successfully mitigating the “serious mental health issues,” the company is ready to safely relax the restrictions in most cases. This claim, that OpenAI has solved or sufficiently managed the deep psychiatric risks of AI interaction, is perhaps the most audacious part of the entire policy shift, and it underpins the decision to move forward with the adult version.

The public outcry

The backlash to Altman’s initial tweet was immediate and massive, and it was dominated by the single word “erotica,” which overshadowed the concurrent announcement about new, more human-like personalities. The reaction prompted Altman to issue a detailed clarification, in which he acknowledged that the first tweet “blew up on the erotica point much more than I thought it was going to.”

The criticism was not just from moral commentators but from influential figures, most notably billionaire entrepreneur and investor Mark Cuban. Cuban publicly warned Altman that the move would “backfire. Hard.” Cuban’s central objection was not necessarily the adult content itself, but the utter lack of parental trust in the age-gating system. He argued that no parent or school administrator would trust OpenAI’s verification processes, leading them to pull away entirely from ChatGPT and push children toward rival LLMs.

This is going to backfire. Hard. No parent is going to trust that their kids can’t get through your age gating. They will just push their kids to every other LLM.

— Mark Cuban (@mcuban) October 15, 2025

Same for schools. Why take the risk ? A few seniors in HS are 18 and decide it would be… https://t.co/ugSU7IXoOz

Cuban raised a specific, real-world scenario that intensified the fear: an 18-year-old high school senior accessing the “hard core erotica” and sharing it with 14-year-olds, followed by the rhetorical question, “What could go wrong?” This criticism highlighted the fundamental trade-off: in pursuing “treating adults like adults,” OpenAI risked destroying the platform’s reputation as a safe, educational, and professional tool across the board, and driving potential users away entirely.

“Not the Elected Moral Police of the World”

Altman’s primary defense pushed back against the idea that OpenAI should act as a universal censor. He clarified that the mention of erotica, while attention-grabbing, was meant to be “just one example” of the company allowing adults more freedom, a freedom that also covers creative, non-sexual topics that were previously censored.

In his follow-up, Altman stated plainly that OpenAI does not see itself as society’s moral authority. “We are not the elected moral police of the world,” he wrote, drawing a compelling parallel to how other industries handle mature content. He argued that just as society sets different boundaries for R-rated movies, letting adults watch them while imposing strict age limits for minors, AI should do the same and grant freedom of use to those legally entitled to it.

For Altman, the larger mission is giving users the freedom to use AI “in the ways that they want,” especially as the technology becomes increasingly central to people’s lives. This position is arguably also driven by intense market competition. Competitors, notably Elon Musk’s xAI with Grok, have already experimented with looser, more conversational, and often edgy personalities that appeal to users frustrated by ChatGPT’s previous limitations. OpenAI is effectively choosing to compete on utility and freedom rather than maintain strict, non-negotiable purity, betting that its updated safety infrastructure can handle the risk.

Freedom for adults, safety for minors

The CEO’s defense hinges on a dual-track approach that offers liberalization for adults while simultaneously reinforcing strict protection for teenagers – a system that requires constant and difficult compromises between privacy, safety, and freedom.

- Tightening the Guardrails for Minors: Altman stressed that the company is deliberately choosing to “prioritize safety over privacy and freedom for teenagers.” This is the core conflict: to protect teens, OpenAI needs to know who is underage. In practice, the system uses automated age prediction and behavioral analysis to identify users under 18, and it defaults them to a highly restricted experience if there is any doubt about their age. The company views minors as needing “significant protection” from this new technology, which it sees as justifying invasive age-prediction techniques and content filtering. Under-18 users will face outright blocks on flirtatious chat, self-harm discussions (even in creative contexts), and graphic content.

- The Privacy Compromise for Adults: Conversely, unlocking the full, less-restricted version for adults requires a privacy compromise. To prove they are over 18, adults in some jurisdictions will be required to upload verification documents, such as a government-issued ID. While OpenAI commits to processing this data securely and then deleting it, the requirement creates a clear tension: users must trade a measure of anonymity and personal data for access to the platform’s full capabilities. The company views this as a necessary trade-off for enforcing strong safety boundaries for the young.

- Protecting Vulnerable Users: Altman firmly stated that the policy change does not involve “loosening any policies related to mental health.” The company will continue to utilize tools to identify and treat users experiencing mental health crises differently from others. For instance, the system will look for signs of “acute distress” and, in those specific instances, will set aside its principles of privacy and non-paternalism to intervene with crisis alerts, and potentially notify parents or authorities, to prevent imminent harm. Furthermore, content that would “cause harm to others” will remain strictly prohibited, regardless of a user’s age.

The policy shift represents OpenAI’s difficult attempt to balance user demand for flexible, powerful, and unconstrained AI with the immense ethical responsibility of managing a tool that can influence mental health and social boundaries. The December rollout will be the first real test of whether the company can enforce this split, giving adults their freedom while keeping children safe in the evolving world of personalized AI. The outcome will likely shape the regulatory and ethical landscape for conversational AI for years to come.

Vyom Ramani

A journalist with a soft spot for tech, games, and things that go beep. While waiting for a delayed metro or rebooting his brain, you’ll find him solving Rubik’s Cubes, bingeing F1, or hunting for the next great snack.