Inside Intel’s reboot: Sachin Katti’s blueprint for an open, heterogeneous AI future

Intel is rebuilding its culture around execution, focus, and humility

Sachin Katti’s AI strategy centers on open, heterogeneous compute systems

2026’s Panther Lake marks Intel’s push into scalable AI PCs

Having sat through his opening keynote and interviewed him afterwards, I got a sense of what the new Intel is all about through my exclusive conversation with Sachin Katti, Intel’s new Chief Technology and AI Officer. Not only is Intel doubling down on all things AI, from the PC to the cloud and edge deployments, it’s doing so without the chest-thumping bravado or mindless hyperbole of the past. The company’s tone is more measured, more pragmatic, even more humble, I daresay. That goes not just for Katti but for the entire executive and product leadership team at the new Intel, which is trying hard to turn a corner under CEO Lip-Bu Tan.

For better or worse, Intel seems to be at a historic cusp unlike any before in its life as a deep tech company. The past 12 months, right up to the last few weeks, have seen historic highs and lows, a rollercoaster not for the faint of heart. From executive changes to massive layoffs, presidential shakedowns to unlikely alliances, all eyes are on Intel. And a lot seems to ride on what Intel does, more than on what it says. I ask Sachin Katti if he feels the magnitude of this moment within Intel, because I certainly feel it as someone who has covered Intel for over a decade.

“I’d say the whole industry is at an inflection point, because of what’s happening around us. And these happen once every two, three decades, right? I think for Intel doubly so, because it needs to reinvent itself for the new computing landscape, and at the same time reinvent itself as a company, as a foundry plus a products business. That sense is, of course, there every single day. That sense of urgency, if you will,” Katti responds.

“But at the same time, you live for these moments,” he continues. “As a technologist, this is what you want to be doing, right? These moments don’t come that often. It’s a privilege to be in the position I am in to at least make an impact in some of these changes we as Intel are trying to bring about.”

New Intel: Agentic AI on heterogeneous, open standards

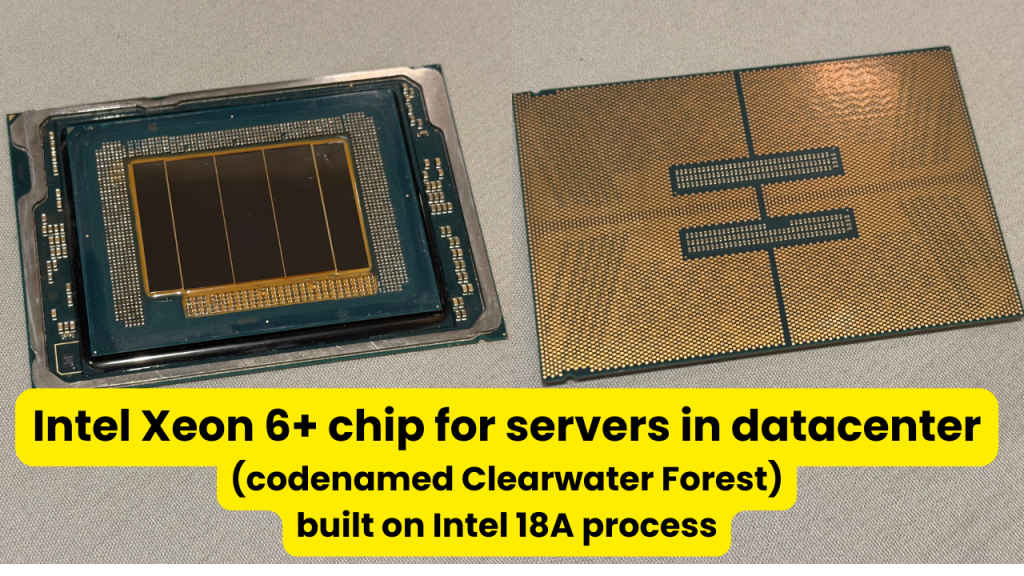

Katti then explained some of the attributes of the new Intel, and his read on the moment is both pragmatic and unashamedly ambitious. “We are building a new Intel. It’s centered on engineering, innovation and disciplined execution,” he says. “Fab 52, Intel 18A and Panther Lake aren’t just milestones. They are foundational to our future. They are foundational to the future of AI computing.” Curiously, there was no mention of quantum or neuromorphic computing, areas Intel has always talked up in the past.

That framing matters because the bet Intel is placing isn’t just “more GPU.” It’s a systems-level swing at how AI is built, deployed, and paid for, from agents on the PC to inference farms and, eventually, the training of frontier models. And it’s an admission of what hasn’t worked: the industry’s increasingly monolithic, vertically integrated AI boxes aren’t going to scale economically with the token explosion headed our way.

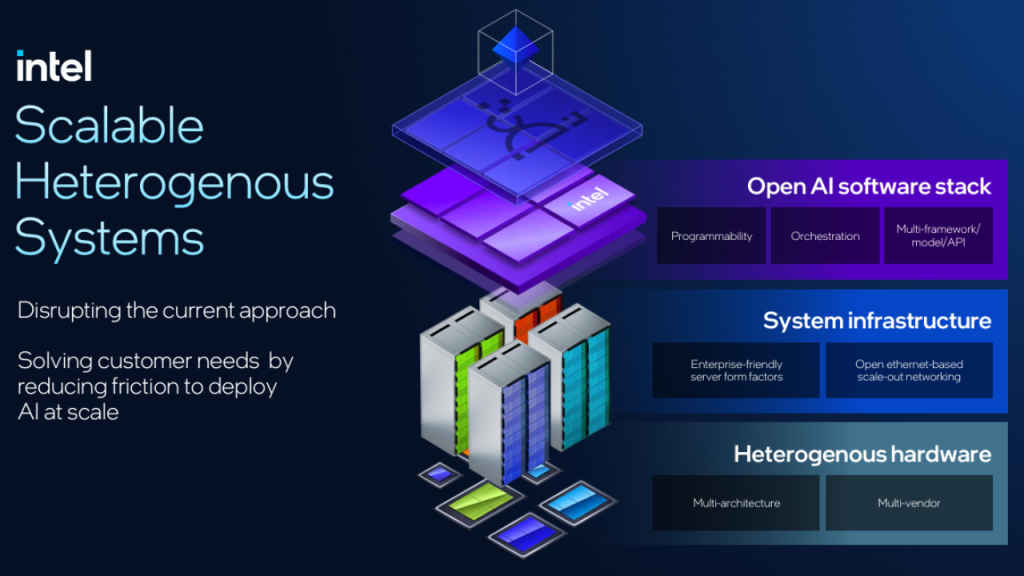

The centre of gravity in Katti’s thesis is a pivot from homogeneous, “all-GPU-everything” racks to intentionally heterogeneous systems that match the right silicon to each phase of agentic AI workflows. In this heterogeneous architecture, developers stay in PyTorch, LangChain, Hugging Face, or whatever gets them home.

Under the hood, an orchestration layer decides which engine pulls which car, and when. If decode is memory-bound, route it to the device that loves capacity and bandwidth. If prefill is compute-hungry, send it to the brawny lane. If retrieval can live at the edge, keep it close. The point is never ideological purity but perf-per-dollar: getting more done, more efficiently, without increasing cost.
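To make the routing idea concrete, here is a minimal Python sketch of phase-aware placement. It assumes a toy cost model in which prefill is bounded by compute and decode by memory bandwidth, and it scores each device by its throughput on that bottleneck divided by its hourly cost. The device names, specs, and prices are hypothetical placeholders, not Intel’s orchestration stack or real hardware figures; a production scheduler would also weigh latency, the cost of moving the KV cache between devices, and edge placement for steps like retrieval.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Device:
    """A hypothetical accelerator described by a toy cost model."""
    name: str
    tflops: float         # peak compute throughput (illustrative, not a real spec)
    mem_bw_tbs: float     # memory bandwidth in TB/s (illustrative, not a real spec)
    cost_per_hour: float  # hourly price in dollars (illustrative, not a real price)


# Which resource bounds each phase of LLM inference in this toy model.
PHASE_BOTTLENECK = {
    "prefill": "compute",  # the whole prompt is processed in parallel: compute-hungry
    "decode": "memory",    # tokens are generated one at a time: memory-bandwidth-bound
}


def perf_per_dollar(device: Device, bottleneck: str) -> float:
    """Throughput on the phase's bottleneck resource, divided by hourly cost."""
    throughput = device.tflops if bottleneck == "compute" else device.mem_bw_tbs
    return throughput / device.cost_per_hour


def route(phases: list[str], devices: list[Device]) -> dict[str, str]:
    """Assign each phase to the device with the best perf-per-dollar for its bottleneck."""
    return {
        phase: max(devices, key=lambda d: perf_per_dollar(d, PHASE_BOTTLENECK[phase])).name
        for phase in phases
    }


if __name__ == "__main__":
    # Two made-up devices: one compute-heavy, one cheaper and memory-rich.
    devices = [
        Device("compute-heavy-gpu", tflops=1000.0, mem_bw_tbs=3.0, cost_per_hour=10.0),
        Device("memory-rich-accelerator", tflops=300.0, mem_bw_tbs=4.0, cost_per_hour=4.0),
    ]
    print(route(["prefill", "decode"], devices))
```

With these made-up numbers, prefill lands on the compute-heavy GPU (a score of 100 versus 75) and decode on the memory-rich accelerator (1.0 versus 0.3), the same kind of split between prefill and decode hardware that Katti describes.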

“We strongly believe that the architecture for the future of AI is heterogeneous,” says Katti. “A zero friction way of deploying these Agentic AI applications that can automatically figure out how to deploy and orchestrate across the heterogeneous infrastructure to deliver better performance-per-dollar.”

Katti made a point of underscoring “open” alongside “heterogeneous.” The play isn’t to force-fit Intel everywhere, he tells me, but to design a software fabric that can schedule across Intel and non-Intel parts, then earn insertion points with competitive CPUs, accelerators, memory, optics, and networking.

“When I say heterogeneous, I also mean open. That means we will support multiple vendors’ infrastructure. If we have an open software approach, with choice at the systems and the hardware layer, as we bring more and more disruptive technologies to the table, we can insert it into this open, heterogeneous architecture. That’s our mission, driving this architectural change in AI.”

To prove this isn’t just slideware, Katti says Intel has already run a lab demo in which Llama prefill ran on an Nvidia GPU and decode ran on an Intel accelerator, coordinated by Intel’s orchestration stack. “Even in this very simple example, we are observing nearly a 1.7x improvement in performance per dollar compared to the homogenous systems out there today.”

And crucially, “heterogeneous” here is inseparable from “open.” It only works if the fabric is multi-vendor from day one – CPUs, accelerators, networking, optics – because that’s what customers are asking for. Intel’s job, as Katti narrates it, is to build a mesh where Intel parts are frequently the best answer, without requiring them to be the only answer. “As far as customers are concerned, we just slip in the new hardware, and the software will figure out how to integrate and extract the performance,” suggests Katti.

Intel’s partnership with Nvidia and quest for an annual GPU cadence

The implication is less “Nvidia bad, Intel good,” and more “economics demand mix-and-match.” Katti is blunt about why the world wants this: “Every country that is building sovereign AI clouds wants diversity, wants choice… the perf-per-dollar is just the cherry on top.”

Which brings us to the eyebrow-raising bit: Intel partnering with Nvidia. In the old hero narratives of Silicon Valley, this would be treated like a cease-fire. In Katti’s systems narrative, which is indicative of the new Intel, it’s closer to a first-principles approach.

“AI is a systems game. It’s a CPU. It’s a GPU. It’s networking, it’s optics, and many more things,” according to Katti. The goal, he says, is to ensure Intel’s technology is “designed into the future architecture” at multiple layers, starting with CPUs. To that end, the open software strategy, he says, “ensures that when we come out with our GPUs, it’s not like the software blocks or locks us up.” The Nvidia partnership, in other words, “is just the first step of our AI strategy rather than a diversion.”

For me, this framing reveals Intel’s forward-looking bet on the future of AI quite clearly. If the software fabric really is multi-vendor and the economics reward heterogeneity, then Intel can expand its share by being useful everywhere (supplying CPUs in Nvidia systems, interconnects and optics where they fit, GPUs where they make sense) rather than fighting for a monoculture or single-vendor solution that customers say they don’t want to begin with.

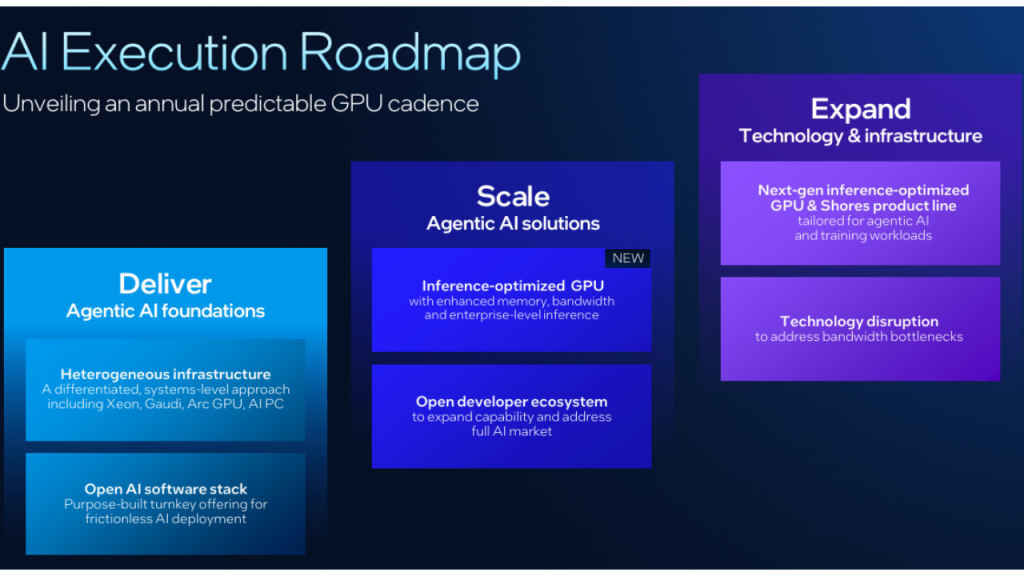

Of course, to be “insertable” at scale, you need parts on a drumbeat, and Katti was candid on that front: “We need to get onto an annual, predictable cadence of GPUs, and we are working hard on an inference-optimized GPU [with] enhanced memory bandwidth, lots of memory capacity, overall a fantastic product for token clouds and enterprise-level inference.”

That’s one half of Intel’s AI product thesis, where the aim is to own the economics of inference and agentic AI workflows. The other half is a clear nod to the rest of the hardware stack needed for AI training workloads, which, according to Katti, will arrive via packaging, photonics, and memory innovation.

“We are also making disruptive bets in photonics and memory and logic stacking to overcome the memory bandwidth wall that exists in AI today. That opens the doors for frontier model training. Stay tuned,” Katti says on this front.

Read between the lines and you can see Intel’s moves on the chessboard: a year-over-year GPU rhythm for inference, near-term wins in mixed-vendor deployments, and then manufacturing leverage (18A, PowerVia, RibbonFET, advanced packaging) to attack the bandwidth bottlenecks that define the economics of AI training.

Intel’s take on India’s semiconductor aspirations

When the conversation turns to India’s chip ambitions (factories, packaging, assembly, the whole geopolitics-meets-industrial-policy brew), Katti slips easily from product to policy. You get the sense he sees the ecosystem as a continuum, and he is encouraging without being naïve.

“India is a super important market for us, it has several decades of growth ahead,” began Katti. “Given the geopolitical situation, just like the US is realizing how important fab, foundry and process technology is for national security, I think India, given its political stance, has to also come to the same conclusion.”

For India’s semiconductor roadmap, the question isn’t so much if or when, but what, according to Katti. “What makes sense, what kind of capability, how can we help India in general. On that front, we remain very engaged with the Indian government as well as a lot of the business partners, big companies in India who are all playing in this in different ways. And obviously the Tata Group is the biggest of them all. When I talk to N Chandrasekaran (Chairperson of Tata Motors) and Randhir Thakur (CEO & MD, Tata Electronics who was also president of Intel Foundry Services), I applaud them. I think it’s a very courageous thing they’re doing, but I think it’s also important for the country,” says Katti.

For a company that wants to be both a products powerhouse and a contract foundry, that duality (helping India build capacity while selling its own chips built on 18A and beyond) feels like a feature, not a bug.

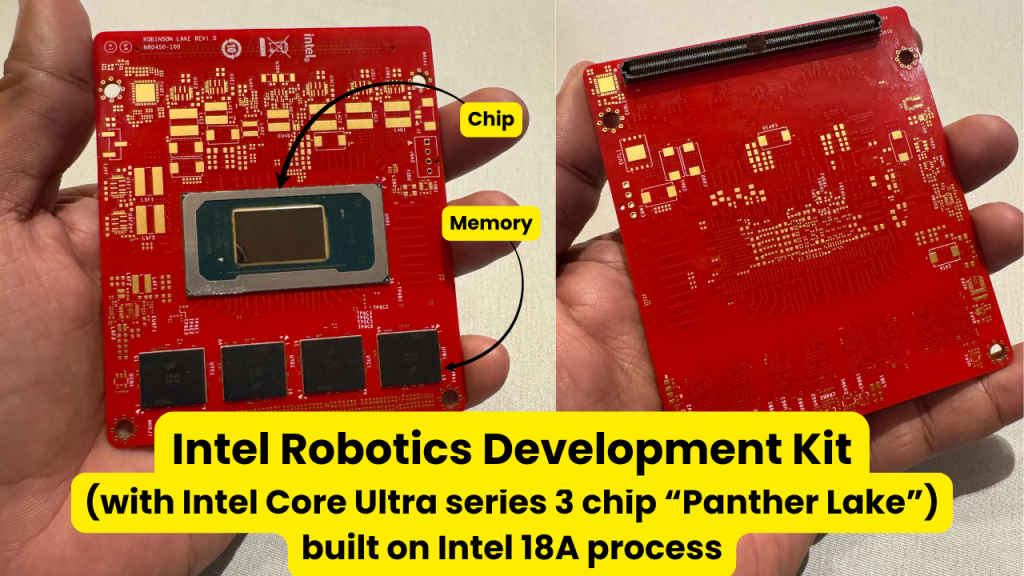

2026 and the AI PC: Panther Lake is timed for lift-off

Back to Phoenix, and you could feel Intel’s leadership trying to make “AI PC” mean something tangible. In this cycle, the argument is that agentic AI workloads don’t just live in hyperscale racks – they’ll inhabit clients and edge devices where latency, privacy, and cost dynamics favour on-device reasoning.


“Panther Lake is going to be our biggest AI PC platform, it’s the broadest PC platform out there for AI applications, now it’s in the third generation starting with Meteor Lake,” according to Katti. “Every laptop that we ship is going to be packing a lot of AI horsepower, and you will start to see AI software running on these [PCs] at scale.”

If you map that to calendar time, 2026 is the “third-time’s-the-charm” year: a maturing software layer (OpenVINO, assistant builders), a rising developer comfort with local agents, and a platform family (Panther Lake on 18A) built with AI blocks as the default, not a bolt-on. And in case anyone missed it in Katti’s keynote: “AI PC and industrial edge are natural places where agentic AI is going to drive a massive transformation. We have a very easy-to-use platform, at the right power, so customers can build these agentic AI workflows on their PC itself.”

The most interesting subtext here isn’t the TOPS number but how these Panther Lake (Intel Core Ultra Series 3) AI PCs plug into the bigger thesis Katti outlined. If the future is heterogeneous, your laptop is just one node in a larger agent mesh: sometimes it’s the first hop (capture, summarize, triage), sometimes the last (render, redact, act), and often both, shaving latency and cost by keeping the right steps local.

If Katti is right, 2026 will feel like an inflection not because your laptop writes better emails thanks to on-device AI, but because it joins a much larger conversation, one where compute is finally deployed like language: flexibly, heterogeneously, with the right words in the right places at the right time. And if Intel can help the industry pull that off – quietly, predictably, on time – that will be the loudest thing of all. The company won’t need to say much; the systems will say it for them.


Jayesh Shinde

Executive Editor at Digit. Technology journalist since Jan 2008, with stints at Indiatimes.com and PCWorld.in. Enthusiastic dad, reluctant traveler, weekend gamer, LOTR nerd, pseudo bon vivant.