Google’s AI copying my writing style feels creepy, wrong and dangerous

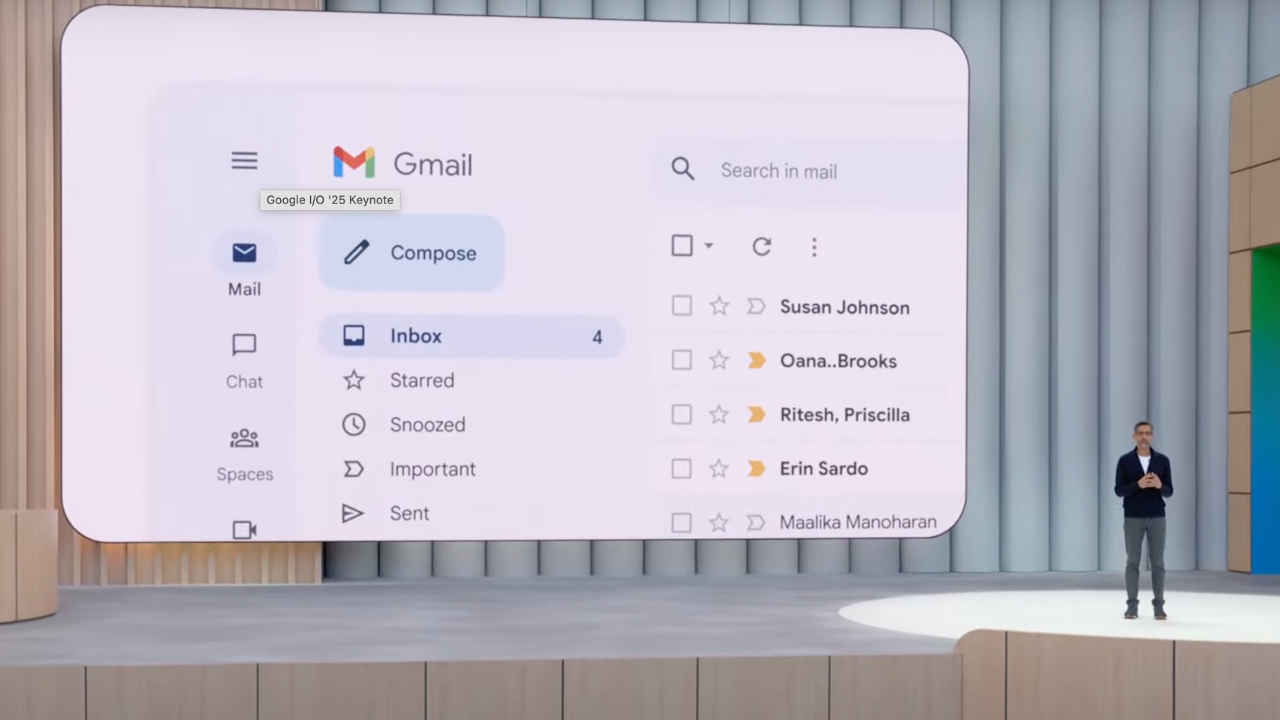

Google has come up with many tech innovations that have made our lives easier over the past few years. On Tuesday night, however, the search giant crossed a line that many users, myself included, find deeply unsettling. At its I/O developer conference, it unveiled a new feature set to roll out later this year: Personalised Smart Replies in Gmail, powered by Google’s Gemini AI models. Like most of its Gemini features, this one aims to make artificial intelligence feel more ‘personal’.

On paper, it sounds like a convenience dream. Imagine a friend emails you asking for advice on a road trip you took a year ago. Instead of you having to dig through your inbox and Drive, Google’s AI will do it for you, scanning your old emails, itineraries, and even Google Docs to craft a response that pulls in specific details and matches your typical tone, style, and favorite phrases.

But scratch the surface, and what you uncover is a chilling truth: everything from your language to your thoughts and tone is being replicated by a machine that not only reads your personal correspondence but learns from it to become you.

That isn’t just smart. It’s invasive.

And it’s not the first time Google has done this!

Is it help or a breach?

Let’s start with what this new feature really entails. Google in its official blog says, “By pulling from your past emails and Google Drive, Gemini provides response suggestions with specific details that are more relevant and on point, eliminating the need to dig through your inbox and files yourself. Crucially, it also adapts to your typical tone — whether crisp and formal or warm and conversational — so your replies sound authentically like you.”

Basically, it’s no longer just autocomplete or a generic ‘Thanks’. This is AI impersonation, powered by deeply personal data that is accessed by one of the biggest tech companies in the world.

While Google wraps it in phrases such as “with your permission,” “private,” and “under your control,” the fact remains that the system needs access to the very contents of your life to function. Every email, every diary-like travel plan in Docs, and every shared file on Drive becomes raw material for a chatbot.

Do I want help drafting replies? Sure.

Do I want an AI to study the way I write to my mother, my partner, or my boss, just so it can fake my voice in a reply to someone else? Absolutely not.

The feature has yet to roll out, but at this point it feels less like assistance and more like a breach of digital intimacy. With AI, Google is blurring the line between help and surveillance. Even worse is the precedent this sets: AI is no longer just a tool you use, but a system that uses you to become more intelligent.

Now, some may argue that AI is supposed to learn from humans. But it’s one thing for AI to learn from public data. It’s another for it to be trained on the emotional nuance, linguistic quirks, and stylistic fingerprints of individual users. Your writing style is a part of your identity. Should a machine be allowed to replicate it?

It might sound convenient today. But what happens when AI-generated content floods inboxes? The feature raises uncomfortable questions about authorship, consent, and control in the AI age. Is Google’s AI just responding on your behalf, or is it becoming a version of you that you didn’t create?

Not just a Google problem

Google’s announcement may have brought this to light, but it’s not just a Google problem. It’s part of a larger and growing concern in the world of generative AI: the erosion of human distinctiveness. Whether it’s deepfake videos, voice clones, or AI-written text that mimics specific people, we’re rapidly entering a world where your digital likeness can be copied, synthesised, and deployed without your active participation.

To be clear, the idea of personalised AI isn’t inherently evil. Technology should adapt to serve human needs. But it shouldn’t do so by compromising our private data or the uniqueness of personal expression.

I think this is the point where we need to ask what kind of relationship we want to have with technology in the future. For me, the answer is clear: if AI is going to speak for me, it needs to do more than ask for permission. It needs to know when to stop. Because being helpful doesn’t give it the right to become me.

Manas Tiwari

Manas has spent a decade in media, juggling broadcast, online, radio and print journalism. Currently, he leads the technology coverage across Times Now Tech and Digit for the Times Network. He has previously worked for India Today, where he launched Fiiber for the group, as well as Zee Business and Financial Express. He spends his week following the latest tech trends and policy changes and exploring gadgets. On other days, you can find him watching the Premier League and Formula 1.