A brief history of display panels

From the humble CRTs to the complex VR headsets – a history lesson.

Ever since John Logie Baird first transmitted the image of a ventriloquist's dummy across a dingy one-bedroom apartment in 1920s London, display technology has been obsessed with improving the way images and video are reproduced. Simple cathode ray tubes, with electrons pinging mindlessly against a phosphorescent screen, have since evolved into a multi-billion dollar industry. This field owes its humble origins to some truly exceptional engineers and scientists who were driven by a need to communicate through the most elusive of our senses: sight. This article pays homage to these men and women, and to the technology they spawned, by tracing our fancy 1080p Full HD displays back to their roots.

The first CRTs

The first proper piece of display equipment was the Cathode Ray Tube (CRT), a relatively simple device, whatever every engineering and science student who has had the misfortune of handling one in a lab might tell you. It works on a simple principle: electrons fired at a phosphor-coated screen make it glow, like a microscopic paintball gun firing pellets at a wall. By adjusting the voltages that control the electron beam, and with them the number and position of electrons striking the screen, it became possible to draw some pretty crazy patterns. For a long time, CRTs were used mainly in experimental physics and chemistry, but in 1907 the Russian scientist Boris Rosing explored using a CRT as a display for simple geometrical shapes.

Spurred by Rosing's work, early pioneers built ever more complex systems in pursuit of sharper images. The first televisions combined these advances with the now-famous Nipkow disk, a spinning mechanical contraption that could scan an image and break it into a series of dots and lines. With a Nipkow disk, a pair of needles, a pair of scissors, a hatbox and some smart tinkering, Baird built his first television apparatus in 1923. The first monochrome images shown on these primitive displays were blurry, pixelated and very laggy, but they were all the impetus needed for an entire industry to spring up and capitalise on humanity's growing fascination with images.

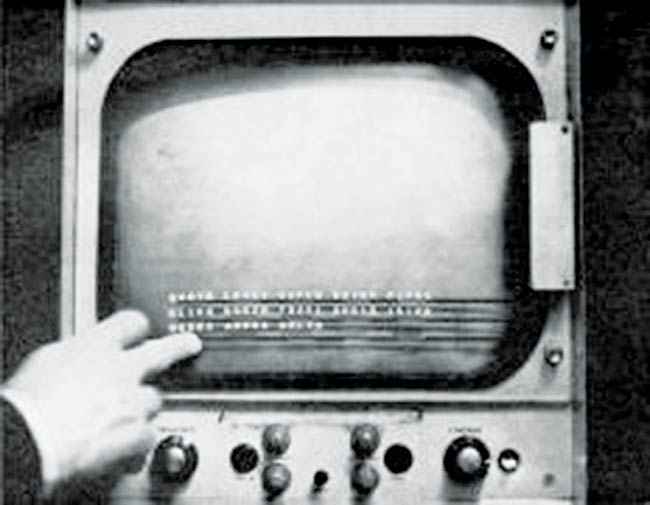

An early CRT device

Bringing the TV Home: The first couch potatoes

The growing popularity of Hollywood was a major catalyst as well. People wanted to bring all the glamour and excitement of the movie theatre home and merge it with the potato-chip-fuelled lethargy of the couch. The television industry single-handedly revolutionised display technology. Imagine, if you can, the first generation of TV viewers guffawing as Charlie Chaplin gets swallowed by the assembly line in Modern Times, all from a magical box in their living rooms.

People couldn't get enough of it! Meanwhile, the world of CRTs continued to evolve. Engineers experimented with adding colour to their screens, which eventually led to the first colour CRT displays in the 1950s; these combined red, green and blue light in varying intensities to produce colour images.

Replacing the CRT

The 1960s saw a slew of new display technologies arrive on the scene, many of which are still in use today. The first Light Emitting Diodes (LEDs) were developed at Texas Instruments at around the same time that Liquid Crystal Displays (LCDs) and plasma displays were being developed; these relied on liquid crystals that modulate light and on ionised gases that emit it, respectively. The first LCDs were used in simple appliances like watches and calculators, and a basic Casio calculator today still uses a simple monochrome LCD much like those early ones. This period also marked the beginning of the age-old 'Plasma vs LCD' debate, which still has proponents on both sides. The first of these displays were monochrome, a far cry from the lush colours of today's screens, but they provided the basis for further innovation.

At the same time, LED displays were growing in complexity and finding uses in many places, especially in sign-board advertising. The main problem LEDs faced was the size of each individual lamp, which shrank with each passing year. As computerisation and mechanisation spread, so did the demand for displays tailored to individual needs, and new kinds of displays evolved for specialised requirements. Split-flap displays, like the airport arrival and departure boards that were common until the last decade, and the vacuum fluorescent displays that the annoying counter clerks at the local supermarket still use today, were both products of this period. This period also saw the first touchscreen technologies being developed; interestingly, the first touchscreen displays were used in air traffic control. However, these touchscreens remained largely unpopular because they offered little functionality in everyday use.

Early touchscreens were bulky and offered little functionality

Putting the TV on a diet: Thinner, longer screens

The next revolution in display technology was heralded by the arrival of personal computers. Screens now had to let users interact with the images, which posed a new challenge: images were no longer relayed from an external source but controlled precisely by each individual user. These requirements were addressed, along with a drastic improvement in quality, by Thin Film Transistor (TFT) LCDs, which arrived in the 1980s and were fitted to some of the first mass-market PCs and laptops. These displays offered a wider range of colours and faster refresh rates because each pixel had its own transistor, letting the screen set the colour and state of every pixel in an 'active' manner. These are probably familiar to us. Remember the bulky monitors we used in the first half of the last decade (or the ones the State Bank of India still uses)? Those were 'thin' film transistor displays.

This period of TFT development also saw the rapid growth of the television industry. While televisions were still a novelty in the '70s, suddenly everyone and their grandmother had a TV in the '80s. Competition between television manufacturers put the display on a diet, and screens grew thinner and bigger every year. Touchscreens also became more and more common as many industries adopted the technology in their products: ATMs introduced touch technology to the common man, and the first touch phones started appearing on the market. Plasma was slowly phased out, and the arrival of the first HD TV stations in the USA in the late '90s set the stage for LCD to strike the definitive killing blow: LCD panels for HD became cheaper and, more importantly, larger than plasma.

LCD became the norm in the 2000s. Nearly every display in the world, from laptop screens at home to the in-flight entertainment systems on aircraft cruising at over 30,000 feet, used some variation of LCD technology. HD became more affordable as more broadcasters began treating it as the norm rather than a special service. Apple's Retina display debuted in 2010 with the iPhone 4, and other companies were soon forced to offer high-resolution, touch-integrated displays across their devices. These screens have grown progressively better with each generation of smartphone and TV, and are sure to continue doing so.

| Do I need a 4K TV? |
|---|
| The short answer is no. The human eye has a finite resolution, and from the ideal viewing distance it's impossible to see the benefit of 4K (Ultra HD) resolution. Unless, of course, you're using a projector on an 11 ft screen. Ultra HD on 40-inch and 50-inch screens makes no sense unless the viewer is sitting a foot or two away. Furthermore, given the lack of 4K content, your existing Blu-rays and Full HD broadcasts will have to be upscaled, and they can end up looking awful because digital flat-panel displays look best only at their native resolution. Don't fall for this gimmick until there's enough 4K content to justify the purchase. |
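
The viewing-distance argument can be made concrete with a little trigonometry. The sketch below is a rough model, not a measurement: it assumes a 16:9 screen and an eye that resolves about one arcminute (roughly 20/20 vision), and computes the distance beyond which a single pixel becomes too small to see. The function names are illustrative, not taken from any library.

```python
import math

# Assumption: normal (20/20) visual acuity resolves roughly 1 arcminute.
ACUITY_ARCMIN = 1.0


def pixel_pitch_in(diagonal_in, horizontal_px, aspect=(16, 9)):
    """Width of one pixel, in inches, for a screen of the given diagonal and aspect ratio."""
    w, h = aspect
    width_in = diagonal_in * w / math.hypot(w, h)  # screen width from the diagonal
    return width_in / horizontal_px


def max_useful_distance_ft(diagonal_in, horizontal_px, acuity_arcmin=ACUITY_ARCMIN):
    """Distance beyond which one pixel subtends less than the assumed acuity limit."""
    angle_rad = math.radians(acuity_arcmin / 60.0)
    distance_in = pixel_pitch_in(diagonal_in, horizontal_px) / math.tan(angle_rad)
    return distance_in / 12.0  # inches to feet


# 4K UHD (3840 x 2160) has four times the pixels of Full HD (1920 x 1080).
FULL_HD = (1920, 1080)
UHD_4K = (3840, 2160)
pixel_ratio = (UHD_4K[0] * UHD_4K[1]) / (FULL_HD[0] * FULL_HD[1])

if __name__ == "__main__":
    print(f"4K has {pixel_ratio:.0f}x the pixels of Full HD")
    for diagonal in (40, 50):
        for name, (px_w, _) in (("Full HD", FULL_HD), ("4K UHD", UHD_4K)):
            d = max_useful_distance_ft(diagonal, px_w)
            print(f"{diagonal}-inch {name}: pixels blend together beyond ~{d:.1f} ft")
```

On these assumptions, the pixels of a 50-inch Full HD panel already blend together beyond about six and a half feet, and 4K halves that distance; sitting farther back than the Full HD figure means the extra 4K pixels carry detail the eye cannot resolve. Real acuity varies from viewer to viewer, so treat the numbers as ballpark figures.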

The magic of 4K displays

The future: Now in ultra-realistic immersive HD!

That's where we stand, at the pinnacle of visual display technology, but this is not the end of the story by any stretch of the imagination. Flat-screen TVs are now passé; curves are in. Offering a better viewing experience, these next-generation curved displays are also adopting the new wave of 4K technology: displays with four times as many pixels as a normal 1080p Full HD device (3840 × 2160 versus 1920 × 1080). These curved televisions are also likely to include 3D as standard very soon, offering a more immersive experience for the viewer.

The most exciting development of the past couple of years, one that is set to revolutionise display technology, is Virtual Reality (VR). Ever since Facebook bought Oculus VR for $2 billion in 2014, the Oculus Rift has become the most anticipated product in the world of technology. Slated for release in March of this year, the device has captured the imagination of people around the world with its promise of a completely immersive world that users can pop onto their heads. Since VR exploded onto the scene, many other companies have sprung up, including many from India such as Absentia VR. These companies offer HD displays, 3D audio, and rotational and positional tracking in their devices, which will make entertainment such as movies and games a novel experience by transporting the viewer to a different world altogether.

Virtual reality: The future of immersive entertainment

With so much exciting innovation happening at this very moment, it is impossible to predict what the next generation of display technology will bring. The barriers between science and science fiction are slowly eroding, and the technology we use today may be obsolete in as few as 10 years. Until then, all we can do is stay tuned.

This article was first published in the March 2016 issue of Digit magazine.