Gemma 3n: Google’s open-weight AI model that brings on-device intelligence

Google’s Gemma 3n enables advanced AI tasks like speech recognition and video analysis entirely offline.

This small yet powerful AI model allows real-time, multimodal processing on edge devices without cloud reliance.

Gemma 3n’s open weights give developers unmatched freedom to build, customize, and deploy on-device AI.

The future of AI isn’t just in vast server farms powering chatbots from afar. Increasingly, it’s about models smart enough to run right on your phone, tablet, or laptop, delivering intelligence without needing an internet connection. Google’s newly launched Gemma 3n is a major leap in this direction, offering a potent blend of small size, multimodal abilities, and open access. And crucially, it arrived before similar efforts from OpenAI.

What is an open-weight model?

At the heart of Gemma 3n’s significance is its status as an open-weight model. In simple terms, an open-weight model is an AI system whose actual model data, the “weights” it learned during training, is publicly shared. This allows developers to download, inspect, modify, fine-tune, and run the model on their own hardware.
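Because the weights are ordinary files, “inspect” can be taken literally. Open-weight checkpoints on Hugging Face are commonly stored in the safetensors format (an assumption here; check the specific repository), whose header lists every tensor’s name, data type, and shape. The minimal sketch below reads that header without loading any tensor data. The function names are hypothetical, and for real work the official `safetensors` library is the better tool.

```python
import json
import struct


def parse_header(stream):
    """Parse the JSON header from a binary stream positioned at the start of a
    .safetensors file. Layout: an 8-byte little-endian unsigned header length N,
    then N bytes of UTF-8 JSON, then the raw tensor bytes."""
    (header_len,) = struct.unpack("<Q", stream.read(8))
    header = json.loads(stream.read(header_len).decode("utf-8"))
    header.pop("__metadata__", None)  # optional free-form metadata, not a tensor
    return header


def read_header(path):
    """Read only the header of a file on disk; tensor data is never loaded."""
    with open(path, "rb") as f:
        return parse_header(f)


def summarize(header):
    """Map each tensor name to (dtype, shape, element count) and return the total."""
    summary, total = {}, 0
    for name, info in header.items():
        count = 1
        for dim in info["shape"]:
            count *= dim
        summary[name] = (info["dtype"], info["shape"], count)
        total += count
    return summary, total


if __name__ == "__main__":
    import io

    # Tiny hypothetical file: one 2x3 float32 tensor named "demo.weight".
    data = struct.pack("<6f", *range(6))
    meta = {"demo.weight": {"dtype": "F32", "shape": [2, 3], "data_offsets": [0, len(data)]}}
    raw = json.dumps(meta).encode("utf-8")
    blob = struct.pack("<Q", len(raw)) + raw + data
    print(summarize(parse_header(io.BytesIO(blob))))
```

Pointed at a downloaded checkpoint shard, `read_header` would list its tensors and `summarize` would add up their parameter counts, all without touching the gigabytes of weight data that follow the header.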

This contrasts with closed-weight models such as OpenAI’s GPT-4 or Google’s Gemini, which run only on the company’s servers and are accessed through an API. Open-weight models give developers more control, encourage innovation, and let AI run independently on local devices, which matters more and more for privacy, security, and offline use.

Introducing Gemma 3n: small, powerful, and multimodal

Gemma 3n is the latest in Google’s family of open-weight AI models, specifically designed for on-device AI, that is, AI that can run directly on edge devices like smartphones, tablets, and laptops. The “n” in its name stands for “nano,” a nod to its compact size and efficiency.

What sets Gemma 3n apart is its ability to handle multimodal inputs natively. Where earlier Gemma models focused on text (with image input arriving in Gemma 3), Gemma 3n can process text, images, audio, and even video as input, generating text responses in return. This opens up possibilities for real-time transcription, translation, image understanding, and video analysis, all done directly on the device.

Gemma 3n isn’t just smaller, it’s smarter in how it uses resources.

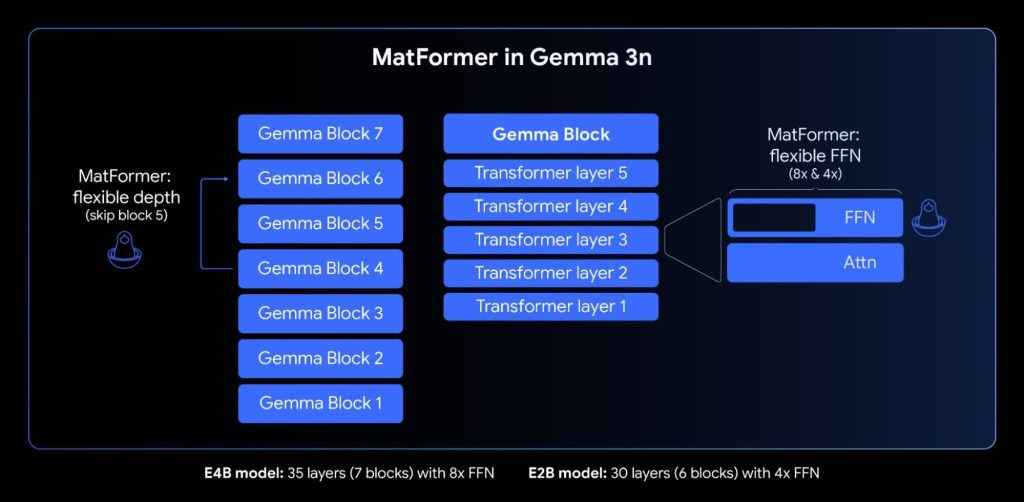

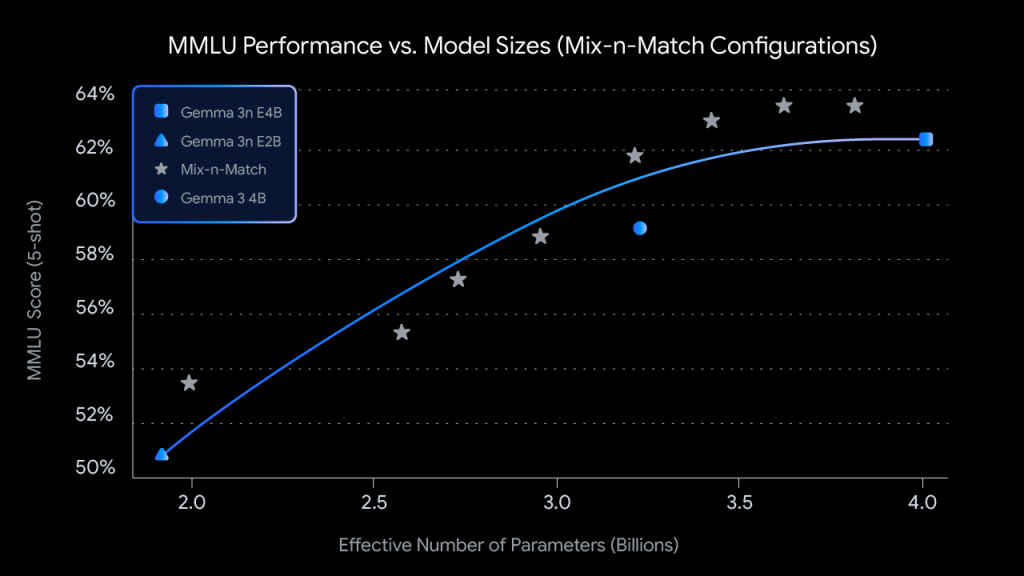

- Memory efficiency: Thanks to innovations like Per-Layer Embeddings (PLE), which let a large share of the parameters (the embeddings associated with each layer) be loaded and computed on the CPU instead of occupying accelerator memory, and MatFormer, a novel nested architecture, Gemma 3n’s 5B and 8B parameter models can run with a memory footprint comparable to older 2B and 4B models.

- Fast response: Google reports about 1.5x faster inference on mobile compared to Gemma 3 4B, helped by features like KV Cache Sharing for better handling of long audio and video streams.

- Offline operation: Once installed, Gemma 3n can work without any internet connection, critical for privacy-sensitive use cases and for regions with poor connectivity.
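The KV Cache Sharing idea can be illustrated with a toy model of cross-layer key/value reuse. The sketch below assumes a simple layout, not Gemma 3n’s real configuration: lower layers compute their own key/value cache, and every layer above a chosen “sharing” layer reuses that layer’s cache instead of projecting its own. It only counts key/value projections during prefill (the stage that ingests a long audio or video prompt); the layer counts are hypothetical, and a real model’s speedup depends on more than this single count.

```python
def kv_source_layers(num_layers, share_from):
    """For each layer, return the index of the layer whose K/V cache it attends over.

    Toy model of cross-layer KV sharing (assumed layout, not Gemma 3n's real config):
    layers 0..share_from project their own keys and values; every layer above
    share_from reuses the cache already produced by layer share_from.
    """
    if not 0 <= share_from < num_layers:
        raise ValueError("share_from must index an existing layer")
    return [min(i, share_from) for i in range(num_layers)]


def prefill_kv_projections(num_layers, share_from, prompt_tokens):
    """Count per-token K/V projections needed to fill the cache for a prompt."""
    producers = set(kv_source_layers(num_layers, share_from))  # layers that own a cache
    return {
        "without_sharing": num_layers * prompt_tokens,
        "with_sharing": len(producers) * prompt_tokens,
    }


if __name__ == "__main__":
    # Hypothetical 8-layer model sharing from layer 3, with a 1,000-token prompt.
    print(kv_source_layers(8, 3))
    print(prefill_kv_projections(8, 3, 1000))
```

In this toy setup, half the layers never build a cache of their own, so both the prefill projection work and the cache memory for a long prompt are halved.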

The model comes in two sizes, where the “E” stands for “effective” parameters:

- Gemma 3n E2B: 5B parameters, designed to run on as little as 2GB of RAM.

- Gemma 3n E4B: 8B parameters, operating efficiently with about 3GB of RAM.

Both versions bring high-quality AI performance to devices that would have struggled with earlier-generation models.
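A back-of-the-envelope calculation shows why the raw parameter counts and the RAM figures above don’t line up on their own. Weights-only memory is parameters × bits per parameter ÷ 8. The sketch below applies that formula to the quoted raw counts at a few precisions; the precisions are illustrative assumptions, not Google’s deployment settings, and activations, the KV cache, and runtime overhead are ignored.

```python
def weight_memory_gb(params, bits_per_param):
    """Weights-only memory in gigabytes (1 GB = 1e9 bytes).

    Ignores activations, the KV cache, and runtime overhead, so real usage is higher.
    """
    return params * bits_per_param / 8 / 1e9


if __name__ == "__main__":
    # Raw parameter counts quoted in the article; the bit widths are illustrative.
    for name, params in [("E2B", 5e9), ("E4B", 8e9)]:
        for bits in (16, 8, 4):
            print(f"{name} raw {params / 1e9:.0f}B @ {bits}-bit: "
                  f"{weight_memory_gb(params, bits):.1f} GB")
```

Even at 4 bits per parameter, all 5 billion raw parameters of E2B would take about 2.5 GB before anything else is counted, more than the quoted 2GB. Figures in the 2GB and 3GB range therefore rely on techniques such as PLE keeping part of the parameters out of the constrained memory pool, which is what the “effective” 2B and 4B labels reflect.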

Gemma 3n’s architecture reflects its on-device focus. MatFormer lets the model scale its compute usage to the limits of the hardware it runs on, a concept Google calls “elastic inference.” The audio encoder is based on Google’s Universal Speech Model (USM), enabling high-quality speech-to-text and translation directly on-device. The vision encoder, the lightweight MobileNet-V5, supports fast, efficient video analysis at up to 60FPS on modern smartphones.
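The nesting behind MatFormer (short for Matryoshka Transformer) can be shown on a single feed-forward block. In the MatFormer design, a smaller sub-model uses only the first part of the larger block’s hidden units, so every size shares one set of weights. The pure-Python sketch below assumes random weights, a ReLU activation, and a “budget” measured in hidden units; all of these, and the function names, are hypothetical simplifications. Picking a width ahead of time only stands in for elastic inference; how Gemma 3n actually selects sub-models is not shown here.

```python
import random


def make_ffn(d_model, d_ff, seed=0):
    """Random weights for one feed-forward block: W_in is d_ff x d_model, W_out is d_model x d_ff."""
    rng = random.Random(seed)
    w_in = [[rng.uniform(-1, 1) for _ in range(d_model)] for _ in range(d_ff)]
    w_out = [[rng.uniform(-1, 1) for _ in range(d_ff)] for _ in range(d_model)]
    return w_in, w_out


def nested_ffn(x, w_in, w_out, width):
    """Run the FFN using only the first `width` hidden units.

    Every smaller width is a prefix of every larger one, so all sub-models share
    slices of the same weight matrices: this nesting is the core MatFormer idea.
    """
    hidden = [max(0.0, sum(w * xi for w, xi in zip(row, x))) for row in w_in[:width]]  # ReLU
    return [sum(w * h for w, h in zip(row[:width], hidden)) for row in w_out]


def pick_width(widths, budget_units):
    """Elastic-inference stand-in: choose the largest nested width that fits a budget."""
    fitting = [w for w in sorted(widths) if w <= budget_units]
    if not fitting:
        raise ValueError("no sub-model fits the budget")
    return fitting[-1]


if __name__ == "__main__":
    w_in, w_out = make_ffn(d_model=4, d_ff=16)
    x = [0.5, -1.0, 0.25, 2.0]
    for width in (4, 8, 16):  # three nested sub-models sharing one set of weights
        print(width, [round(v, 3) for v in nested_ffn(x, w_in, w_out, width)])
    print("width chosen for a budget of 10 units:", pick_width([4, 8, 16], 10))
```

Running the width-8 sub-model gives exactly the same result as extracting the first 8 hidden units into separate, smaller matrices, which is why a single download can serve several model sizes.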

Google moves faster than OpenAI in the on-device AI race

OpenAI has long talked about on-device AI, and GPT-4o showed what’s possible in terms of efficiency, but its models remain cloud-bound. You can’t download or modify GPT-4o; it runs on OpenAI’s servers. Google, with Gemma 3n, has delivered what OpenAI so far hasn’t: a powerful, open-weight, multimodal AI model that can run locally, offline, and at scale on everyday hardware. It’s available now via Hugging Face, Kaggle, Google AI Studio, and other developer-friendly platforms.

Gemma 3n represents more than just another model release. It signals a new phase of AI development: one where powerful models don’t just sit in the cloud, but live on devices in your pocket. It opens the door to smarter, more private, more customizable AI, and raises the bar for what on-device AI can be.

Vyom Ramani

A journalist with a soft spot for tech, games, and things that go beep. While waiting for a delayed metro or rebooting his brain, you’ll find him solving Rubik’s Cubes, bingeing F1, or hunting for the next great snack.