YouTube rolls out likeness detection for creators: Will it reduce the deepfake menace?

The internet has always blurred the line between what’s real and what’s not, but in the age of generative AI, that blur has become something more unsettling. In a world where anyone’s face or voice can be replicated within minutes, the threat of losing control over one’s own identity is no longer hypothetical; it’s personal.

To address this growing menace, YouTube has officially launched its new likeness detection tool, an AI-driven system designed to identify and flag videos that use a creator’s likeness without their consent. The feature, now available to members of the YouTube Partner Program, gives creators a degree of protection that was previously out of reach in the open, unpredictable ecosystem of online video.

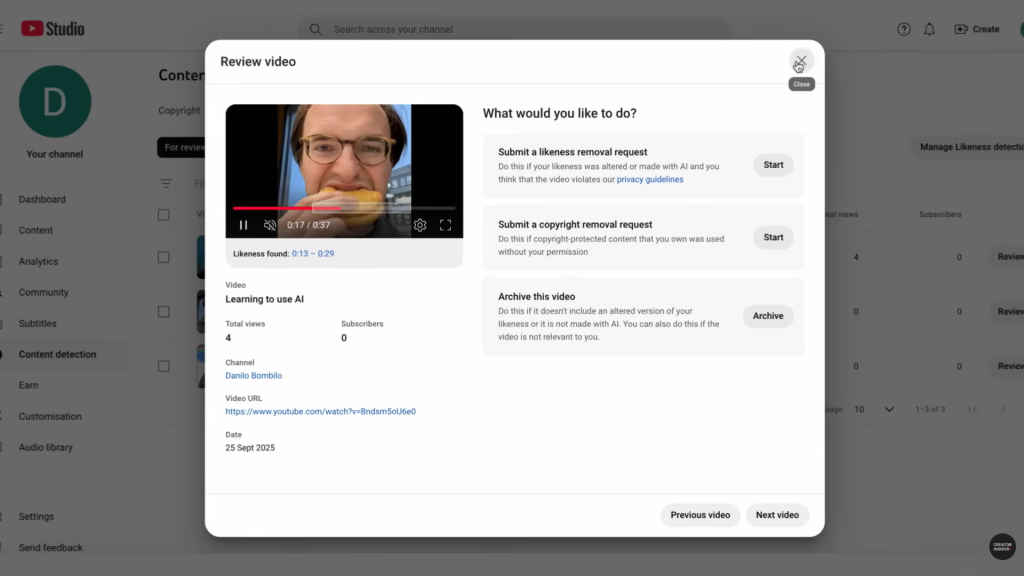

At its core, the system scans for appearances or audio resembling a creator who has enrolled in the program. If the AI detects that a creator’s face or voice has been used elsewhere on the platform, the video appears in a dashboard where the creator can decide whether to take action. They can request its removal, archive it for reference, or dismiss the alert entirely. The process is designed to be both transparent and flexible – a crucial consideration in an environment where false positives or creative reinterpretations are inevitable.
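The review step described above can be pictured as a small state machine. The sketch below is purely illustrative and is not YouTube's implementation or API: the names `FlaggedVideo`, `Action`, and `review`, along with the status strings, are hypothetical and exist only to model the three choices a creator has for each flagged video.

```python
from dataclasses import dataclass
from enum import Enum


class Action(Enum):
    """The three choices the article says a creator has (names are hypothetical)."""
    REQUEST_REMOVAL = "request_removal"
    ARCHIVE = "archive"
    DISMISS = "dismiss"


@dataclass
class FlaggedVideo:
    """A video the detector surfaced in the creator's dashboard (hypothetical model)."""
    video_id: str
    status: str = "pending"  # awaiting the creator's decision


# Assumed mapping from a creator's choice to the resulting status.
STATUS_AFTER = {
    Action.REQUEST_REMOVAL: "removal_requested",
    Action.ARCHIVE: "archived",
    Action.DISMISS: "dismissed",
}


def review(video: FlaggedVideo, action: Action) -> FlaggedVideo:
    """Apply the creator's decision to a flagged video that is still pending."""
    if video.status != "pending":
        raise ValueError(f"{video.video_id} was already reviewed")
    video.status = STATUS_AFTER[action]
    return video


flagged = FlaggedVideo("example-video-1")
review(flagged, Action.ARCHIVE)
print(flagged.status)  # archived
```

The point of the model is that nothing happens to a flagged video until the creator chooses: detection only surfaces a match, and the decision stays with the person whose likeness is involved.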

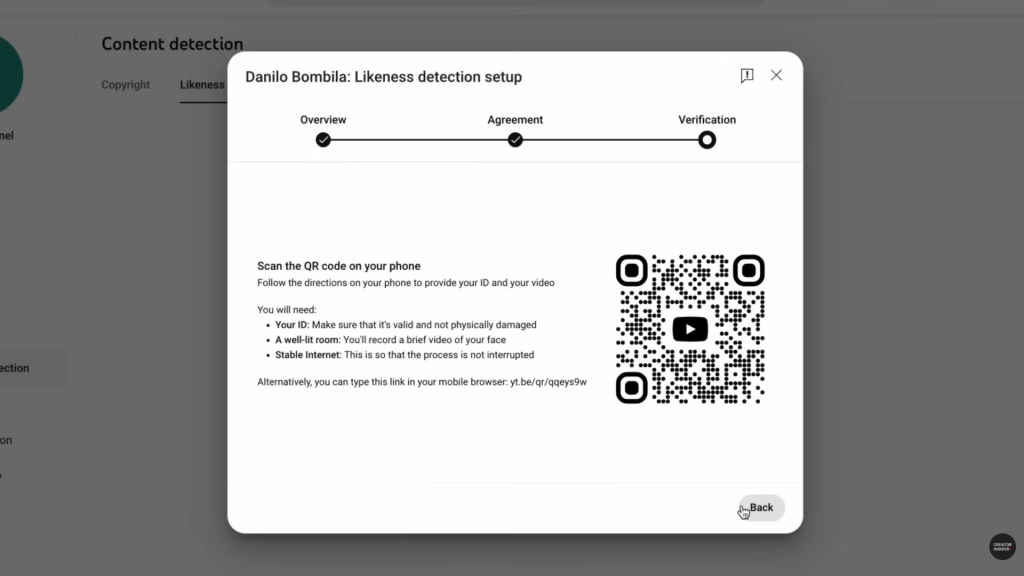

To begin, creators verify their identity by scanning a QR code, submitting an ID, and recording a brief selfie video. Once the setup is complete, YouTube’s AI begins its silent watch, continuously scanning for content that matches the creator’s verified likeness. If a creator opts out, scanning stops within 24 hours, giving users full control over participation.

Restoring control in the age of AI confusion

YouTube’s move comes at a time when creators have become increasingly vocal about the misuse of their likeness. The emotional and professional consequences of deepfakes are difficult to measure. When a face or voice becomes detached from the person it belongs to, it erodes trust – not just in the content, but in the creator’s relationship with their audience. For many, that trust is the foundation of their livelihood.

The new tool doesn’t erase those risks entirely, but it does shift the power dynamic back toward creators. Instead of reacting to damage after it’s been done, they now have a proactive mechanism to detect and address it early. In that sense, YouTube isn’t just moderating content; it’s mediating identity.

Still, the system’s limitations are clear. Access is currently restricted to those within the YouTube Partner Program, meaning smaller creators – who often lack the resources to fight impersonation – remain exposed. Extending this protection more widely could be the next logical step, but one that comes with scale and resource challenges.

The tool also raises difficult questions about interpretation. AI-generated likenesses often exist in a gray zone: satire, education, and commentary can all mimic real people without malicious intent. A creator’s right to remove such videos will need to be balanced carefully against freedom of expression, especially in cases that test the boundaries of parody or fair use.

Platform power meets personal identity

YouTube’s likeness detection system builds on a series of recent initiatives aimed at improving transparency around AI content. Earlier this year, the platform required creators to label realistic AI-generated material, and it has supported legislative efforts like the NO FAKES Act, which seeks to grant individuals legal control over their digital likeness.

These moves suggest that YouTube sees AI not only as a tool for innovation, but also as a threat to the authenticity that drives its ecosystem. Platforms built on trust and creativity can’t thrive if creators fear being cloned or misrepresented. The company’s partnerships with agencies and advocacy for legal frameworks signal that this issue is as much cultural as it is technological.

The success of this initiative will depend on its accuracy and fairness – on whether YouTube’s detection AI can distinguish imitation from inspiration without stifling creativity. But even with its imperfections, the rollout marks a turning point in how major platforms treat identity in the digital age.

A small step toward a safer digital future

As deepfakes grow more sophisticated and accessible, the burden of proof, of what’s real and who’s real, will increasingly fall on technology itself. YouTube’s new tool may not eliminate the problem, but it offers something meaningful: a sense of agency in an era where that’s been slipping away.

For creators who have built their livelihoods on being authentic, that’s a step worth taking and perhaps the beginning of a broader shift in how platforms, policymakers, and audiences define what “real” means online.

Vyom Ramani

A journalist with a soft spot for tech, games, and things that go beep. While waiting for a delayed metro or rebooting his brain, you’ll find him solving Rubik’s Cubes, bingeing F1, or hunting for the next great snack.