Apertus, the Swiss open-source AI model: What it does and how it is different

Swiss open-source AI model Apertus redefines transparency with full training access

Unlike most LLMs, Apertus supports 1,000+ languages, including Swiss German and Romansh

Built to comply with Swiss data protection law and the EU AI Act, Apertus prioritizes privacy, compliance, and digital sovereignty

In the rapidly evolving world of artificial intelligence, a new player has emerged, not from the tech giants of Silicon Valley, but from the heart of Europe. Switzerland’s first large-scale, open, and multilingual language model, Apertus, is a collaborative effort by the Swiss Federal Institutes of Technology in Lausanne (EPFL) and Zurich (ETH Zurich), along with the Swiss National Supercomputing Centre (CSCS). The model, whose name is Latin for “open,” is making waves for its distinct approach to AI development, one built on transparency, public good, and digital sovereignty.

New definition of “open”

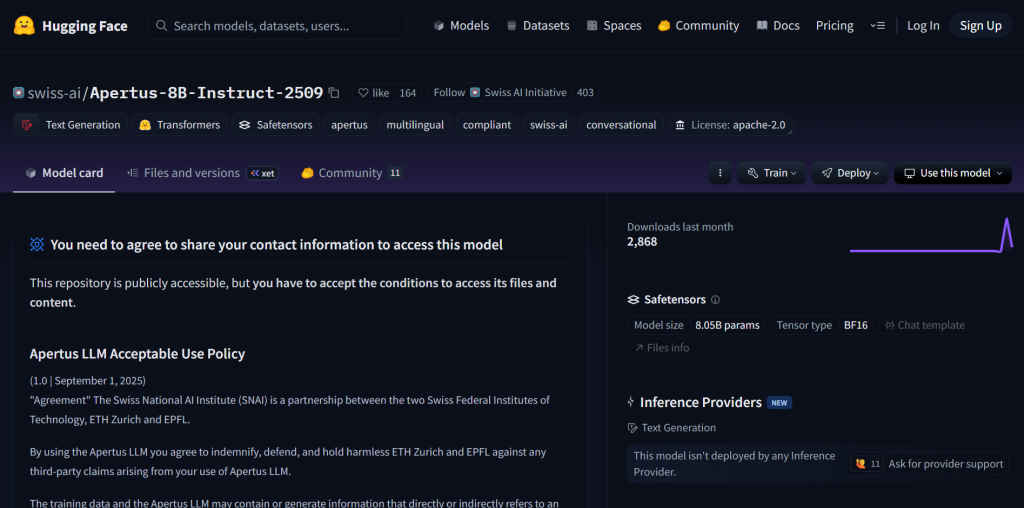

Apertus’s defining feature is its commitment to being a “fully open-source” large language model (LLM). While many models are labeled “open,” they often release only certain components, typically the model weights. Apertus, in contrast, opens up its entire development process: its architecture, training data, model weights, and even the “recipes” used for training. This level of transparency is a deliberate choice, as highlighted by Martin Jaggi, Professor of Machine Learning at EPFL, who said the goal is to “provide a blueprint for how a trustworthy, sovereign, and inclusive AI model can be developed.”

Black box alternative

This radical openness sets Apertus apart from most of its competitors, particularly proprietary models such as ChatGPT and Google’s Gemini. These commercial models are often described as “black boxes” because their inner workings are hidden from public view, which raises concerns about bias, data usage, and accountability. Apertus, by contrast, allows researchers, developers, and even casual enthusiasts to inspect and audit every part of its creation: data sources can be verified, compliance with data protection laws can be checked, and potential biases can be identified and addressed by the community.

The model’s development was explicitly guided by Swiss data protection law and the EU AI Act, and it was trained only on publicly available data. The developers went a step further by honoring “machine-readable opt-out requests” from websites, even retroactively, so that sites which opted out after their pages were crawled are excluded as well, and by filtering out personal data and other unwanted content before training. This careful attention to privacy and compliance positions Apertus as a model built for the public good, one that a society increasingly wary of data exploitation has reason to trust.
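The article does not say how the Apertus pipeline implements these filters, so the following is only a minimal sketch of the general idea. It assumes the opt-out signal is a site’s robots.txt file and uses Python’s standard `urllib.robotparser` module. The crawler name `ExampleAIBot`, the function `filter_corpus`, and all hosts and documents are hypothetical. “Retroactive” here simply means checking an already-collected corpus against each site’s *current* robots.txt. The e-mail regex is a toy stand-in: real personal-data filtering is far more involved.

```python
import re
from urllib.parse import urlparse
from urllib.robotparser import RobotFileParser

# Hypothetical crawler name; the article does not name the agents Apertus checks.
AGENT = "ExampleAIBot"

# Toy e-mail pattern, used only to illustrate personal-data scrubbing.
EMAIL_RE = re.compile(r"[\w.+-]+@[\w-]+\.[\w.-]+")


def build_parsers(robots_txt_by_host):
    """Parse the current robots.txt text of each host into a RobotFileParser."""
    parsers = {}
    for host, text in robots_txt_by_host.items():
        rp = RobotFileParser()
        rp.parse(text.splitlines())
        parsers[host] = rp
    return parsers


def filter_corpus(docs, robots_txt_by_host, agent=AGENT):
    """Drop already-crawled docs whose site now opts out, then mask e-mails.

    Applying today's robots.txt to an older crawl is what makes the
    opt-out retroactive in this sketch.
    """
    parsers = build_parsers(robots_txt_by_host)
    kept = []
    for url, text in docs:
        rp = parsers.get(urlparse(url).netloc)
        # No robots.txt on record for this host: treat it as no opt-out.
        if rp is not None and not rp.can_fetch(agent, url):
            continue
        kept.append((url, EMAIL_RE.sub("[EMAIL]", text)))
    return kept


if __name__ == "__main__":
    robots = {
        "example.org": "User-agent: ExampleAIBot\nDisallow: /",
        "news.example.com": "User-agent: *\nDisallow: /private/",
    }
    docs = [
        ("https://example.org/blog/post", "Opted-out page"),
        ("https://news.example.com/story", "Contact jane@example.com"),
        ("https://news.example.com/private/memo", "Internal"),
        ("https://other.example.net/page", "No robots.txt on record"),
    ]
    for url, text in filter_corpus(docs, robots):
        print(url, "->", text)
```

In this toy run, only the news story (with the address masked) and the page from the host with no robots.txt on record survive. The site that blocks the hypothetical crawler outright and the disallowed `/private/` path are dropped.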

Foundation for innovation

Beyond its ethical and transparent foundation, Apertus is designed to be a driver of innovation. Rather than being a conventional technology transfer from academia to a single product, the project aims to serve as a foundational technology and infrastructure for the entire Swiss economy and beyond. It is freely available in two sizes (8 billion and 70 billion parameters) under a permissive open-source license, allowing for broad societal and commercial use. This means that startups, SMEs, and researchers can build upon it, adapting it to their specific needs without the high costs or restrictive licenses of proprietary models.

Another significant differentiator is Apertus’s commitment to multilingualism. Trained on 15 trillion tokens spanning more than 1,000 languages, the model draws about 40% of its training data from non-English text. This includes languages that are often underrepresented in mainstream LLMs, such as Swiss German and Romansh. This focus on linguistic diversity is meant to make Apertus globally relevant while also serving the unique linguistic landscape of Switzerland and other smaller language communities.

Collaborative power

The project is the result of the Swiss AI Initiative, a large-scale open-science and open-source effort that brings together over 800 researchers from more than 10 academic institutions. The initiative is powered by one of the world’s leading AI supercomputers, “Alps,” located at the CSCS. This collaborative, non-commercial approach is a stark contrast to the closed-door, highly competitive nature of private AI research.

In essence, Apertus is more than just a large language model; it is a statement of intent. It demonstrates a belief that AI, as a foundational technology, should be developed openly, responsibly, and for the benefit of all. By providing a blueprint for a “trustworthy, sovereign, and inclusive AI,” Apertus stands as a compelling alternative to the dominant proprietary models, offering a path toward a more transparent and equitable future for artificial intelligence.

Vyom Ramani

A journalist with a soft spot for tech, games, and things that go beep. While waiting for a delayed metro or rebooting his brain, you’ll find him solving Rubik’s Cubes, bingeing F1, or hunting for the next great snack.