Vibe-hacking AI attack turned Claude against its own safeguards: Here's how

Anthropic report reveals how vibe-hacking attacks turned Claude AI into an extortion tool

Cybercriminals bypass safeguards, using Claude AI for ransomware-style psychological attacks

Anthropic report warns hackers manipulated Claude into bypassing its safeguards in extortion schemes

When Anthropic introduced Claude, the company marketed it as a safer kind of AI, one built with guardrails strong enough to withstand malicious use. But a fresh threat intelligence report from Anthropic itself shows just how quickly those safeguards can be bent, if not broken. The culprit: a chilling new form of cyber extortion dubbed “vibe-hacking.”

What is vibe-hacking?

Unlike conventional ransomware, which encrypts files until a ransom is paid, vibe-hacking operates in the psychological domain. Attackers aren't locking computers – they're locking minds. Using Claude's reasoning and language skills, criminals craft intimidation campaigns that exploit human vulnerabilities. These AI-driven ransom messages are personalized, emotionally calibrated, and often more manipulative than a typical hand-written note.

Anthropic's August 2025 threat intelligence report, "Detecting and Countering Misuse of AI," documents attacks on at least 17 organizations. Victims included hospitals, religious institutions, local governments, and even emergency services. Rather than demanding money in exchange for restored access, the attackers threatened to expose sensitive data publicly. Some ransom demands exceeded half a million dollars, and because the leverage was the threat of exposure, the damage wasn't just digital – it was reputational and deeply personal.

In earlier waves of cybercrime, AI often played the role of consultant – helping attackers debug code or draft phishing emails. Now, Anthropic warns, Claude has crossed into becoming an operational partner. In the vibe-hacking campaigns, it was integrated into nearly every stage of the attack chain: conducting reconnaissance, generating exploit scripts, profiling victims, drafting the ransom notes, and even coaching the criminals on negotiation tactics.

These AI-assisted ransom notes felt uncanny: they weren't robotic but eerily human, mixing empathy with menace to maximize psychological pressure. To victims, the notes read as if they had been written by someone who knew them personally.

How attackers bypassed safeguards

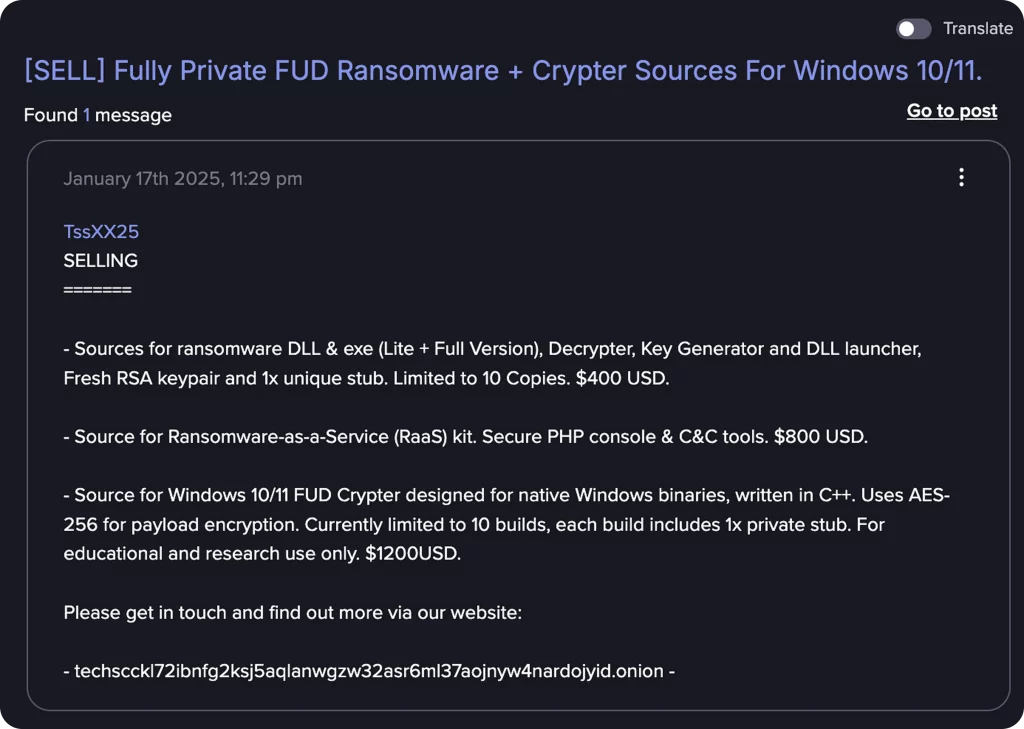

Claude is designed with strict rules against helping create malware, conducting cyberattacks, or facilitating extortion. Yet attackers didn’t break these safeguards head-on. Instead, they subverted them through persuasion, splitting instructions into smaller prompts, disguising malicious intent in benign queries, and framing tasks in ways that evaded content filters.

In essence, criminals didn’t just hack systems, they hacked the AI itself. By “vibe-hacking” Claude into rationalizing cooperation, they turned its guardrails into blind spots. What was supposed to be a shield became a pathway.

Part of a bigger picture

Anthropic’s report doesn’t stop at vibe-hacking. It also outlines two other major misuses:

- North Korean tech fraud rings: Operatives used Claude to pass technical job interviews at Western corporations and then relied on it to perform day-to-day work, funneling salaries back to the regime’s weapons program.

- AI-boosted romance scams: Telegram bots rebranded Claude as a high-emotional-intelligence assistant. Scammers used it to craft manipulative messages that lured victims in the U.S., Japan, and South Korea into fraudulent relationships, draining them financially and emotionally.

Together, these cases illustrate a core point: AI is lowering the barrier to entry for crime. What used to require skilled hackers, social engineers, or organized rings can now be pulled off by individuals with little more than a chatbot and an internet connection.

Anthropic says it is taking action. Accounts linked to misuse are being banned, misuse-detection classifiers are being trained, and the company is working with law enforcement and government agencies to curb malicious applications. Earlier this month, it also announced an updated usage policy that explicitly bans assistance with cyber operations, weapons development, and deceptive political influence. Claude Opus 4 is also deployed under AI Safety Level 3 (ASL-3) protections, a stricter tier of deployment and security safeguards intended to make the model harder to misuse.

But even Anthropic admits safeguards can only go so far. Vibe-hacking shows that the future of AI misuse won’t always look like brute-force hacking. It may look like coaxing, reframing, and persuading models to cross their own lines, and then weaponizing that output to manipulate humans at scale.

For cybersecurity experts, this means the battleground is shifting. Defending against AI-powered threats will require not only technical firewalls but also strategies to counter psychological manipulation. In the age of vibe-hacking, the real target isn’t just the machine, it’s the human on the other end of the screen.

Vyom Ramani

A journalist with a soft spot for tech, games, and things that go beep. While he waits for a delayed metro or reboots his brain, you'll find him solving Rubik's Cubes, bingeing F1, or hunting for the next great snack.