OpenAI, Meta and Tesla desperately want this Nvidia GPU, but why?

The AI industry faces a severe Nvidia H100 GPU shortage, stalling major projects and affecting tech giants' bottom line.

Nvidia H100's technical edge includes high Tensor core density, enhanced memory bandwidth, and energy efficiency.

Production challenges include raw material sourcing, manufacturing precision, and maintaining stringent quality control.

The world of artificial intelligence (AI) is currently facing an unprecedented challenge, and it's not about algorithms or data privacy. The AI industry is grappling with a severe shortage of Nvidia H100 GPUs, a crucial component for training large language models (LLMs). This shortage has far-reaching implications, from stalling research projects to affecting the bottom line of major tech corporations.


As of August 2023, demand for Nvidia H100 GPUs has reached an all-time high. Major tech companies such as OpenAI and Microsoft (including its Azure cloud business) are in a fierce race to procure these GPUs, which underscores how important they are to the AI ecosystem. Elon Musk's startling remark that "GPUs are harder to get than drugs" and Sam Altman's admission that GPU limitations have delayed some of OpenAI's projects paint a vivid picture of the crisis.


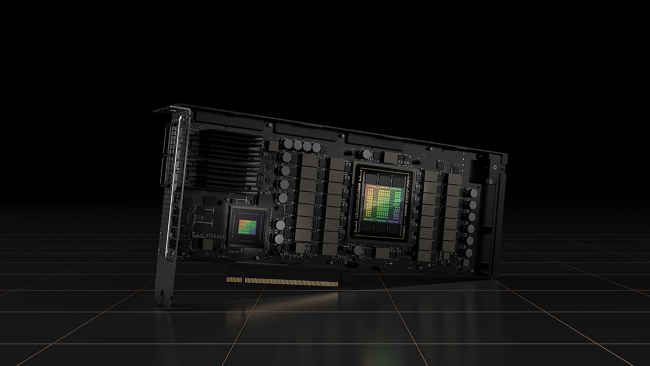

Technical Brilliance of Nvidia H100

So what makes the Nvidia H100 so sought after? The answer lies in its technical prowess. The H100 is built for high-performance computing, which makes it well suited to training LLMs. It packs a large number of fourth-generation Tensor cores (528 on the SXM5 version), specialized hardware units that accelerate deep learning workloads. These cores speed up matrix multiplications, the fundamental operation in neural network training.
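To show why matrix multiplication dominates neural network training, here is a minimal sketch in plain Python (not GPU code, and not any Nvidia API) of a single fully connected layer, plus a count of the floating-point operations such a layer needs. The function names and the tiny weight matrix are hypothetical, chosen only for illustration.

```python
def matmul(a, b):
    """Naive matrix multiply: (n x k) times (k x m) gives (n x m)."""
    n, k, m = len(a), len(b), len(b[0])
    if any(len(row) != k for row in a):
        raise ValueError("inner dimensions must match")
    # Every output element is a sum of k multiply-accumulate steps
    return [[sum(a[i][p] * b[p][j] for p in range(k)) for j in range(m)]
            for i in range(n)]


def dense_layer(x, w, bias):
    """A fully connected layer: y = ReLU(x @ w + bias)."""
    y = matmul(x, w)
    return [[max(0.0, v + bias[j]) for j, v in enumerate(row)] for row in y]


def matmul_flops(n, k, m):
    """FLOPs for an (n x k) by (k x m) product, counting each multiply and each add."""
    return 2 * n * k * m


if __name__ == "__main__":
    x = [[1.0, 2.0]]                 # one input example with two features
    w = [[1.0, -1.0], [0.5, 0.5]]    # hypothetical 2x2 weight matrix
    print(dense_layer(x, w, [0.0, 0.0]))  # [[2.0, 0.0]]
    # One 4096 x 4096 layer applied to a batch of 4096 inputs:
    print(matmul_flops(4096, 4096, 4096))  # 137438953472 (about 1.4 x 10^11)
```

A large model repeats this kind of work across many layers and many training steps, so hardware that performs multiply-accumulate operations in parallel, as Tensor cores do, directly shortens training time.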

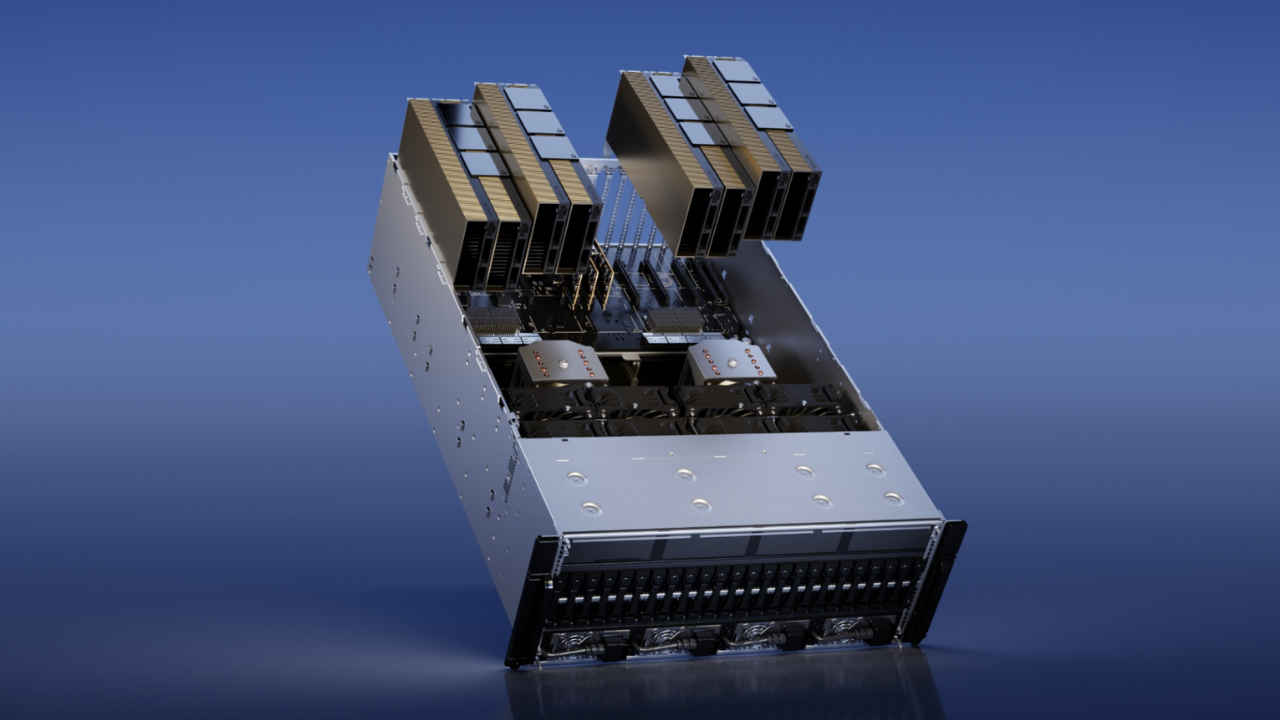

Furthermore, the H100 offers considerably higher memory bandwidth than its predecessor, the A100, so it can feed vast datasets and model weights to its compute cores efficiently, cutting the time spent waiting for data to move between the GPU's on-board memory and its processing units. The architecture also scales well: researchers can link multiple GPUs together, for example over Nvidia's NVLink interconnect, to handle larger models and more complex computations.
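A rough back-of-the-envelope sketch of why bandwidth and multi-GPU scaling matter: the minimum time to read a model's weights once is their size divided by memory bandwidth, and splitting the weights across several GPUs shrinks each GPU's share. The 7-billion-parameter model, 16-bit precision, and the 1,000 and 2,000 GB/s bandwidth figures are all hypothetical, not official H100 specifications, and the calculation ignores compute time, caching, and interconnect overhead.

```python
def weight_bytes(num_params, bytes_per_param=2):
    """Bytes needed to store the weights (2 bytes per parameter for FP16/BF16)."""
    return num_params * bytes_per_param


def read_time_ms(num_bytes, bandwidth_gb_per_s):
    """Lower bound on the time to stream num_bytes from GPU memory once, in ms."""
    return num_bytes / (bandwidth_gb_per_s * 1e9) * 1e3


def shard_bytes(total_bytes, num_gpus):
    """Bytes held by each GPU when weights are split evenly (ceiling division)."""
    return -(-total_bytes // num_gpus)


if __name__ == "__main__":
    total = weight_bytes(7_000_000_000)  # hypothetical 7B-parameter model: 14 GB
    for bw in (1000, 2000):              # illustrative bandwidths in GB/s
        print(bw, "GB/s ->", round(read_time_ms(total, bw), 2), "ms per full read")
    per_gpu = shard_bytes(total, 8)      # hypothetical split across 8 linked GPUs
    print("per GPU:", per_gpu, "bytes,", round(read_time_ms(per_gpu, 2000), 3), "ms")
```

Doubling bandwidth halves the lower bound on read time, and an even split across eight GPUs cuts each GPU's share of the weights to one eighth, which is the basic intuition behind both claims above.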

Despite its power, the H100 is designed with energy efficiency in mind: it aims to deliver top-tier performance without a proportional rise in energy costs.

Meeting the soaring demand for the H100 is no easy feat, because its production is intricate and faces several challenges. First, the GPUs require specific rare metals and semiconductor materials, and sourcing them in the required quantities is difficult given current demand. Second, manufacturing demands high precision to ensure each unit functions optimally; any compromise here can lead to faulty GPUs, which can be detrimental to AI projects.

With pressure mounting to increase production, there is also a looming risk that quality control will slip. Ensuring that each GPU meets stringent quality standards is paramount.


The Nvidia H100 GPU shortage is a testament to the rapid advancements in AI and the increasing reliance on high-performance computing. As the industry navigates this challenge, it's crucial to strike a balance between meeting demand and maintaining the quality and integrity of the GPUs. The coming months will be pivotal in determining how tech giants, startups, and Nvidia itself address this bottleneck, shaping the future trajectory of AI innovations.

Yetnesh Dubey

Yetnesh works as a reviewer with Digit and likes to write about stuff related to hardware. He is also an auto nut and in an alternate reality works as a trucker delivering large boiling equipment across Europe.