Intel Architecture Day 2020 – Intel 10nm SuperFin, Intel Xe GPUs, Tiger Lake and OneAPI

Intel held their Architecture Day 2020 as a virtual conference

Technology roadmaps for Intel 10nm SuperFin, Xe GPUs, Tiger Lake CPUs, Optane Memory and OneAPI revealed

Tiger Lake to have Intel Xe Graphics with 96 EUs and GNA 2.0

Intel opened up about their technology roadmaps across all of their hardware and software verticals at Intel Architecture Day 2020. As with the previous Architecture Day held in 2018, Intel focused on six key aspects which they call the “Six Technology Pillars”: Process and Packaging, XPU Architecture, Memory, Interconnect, Security and Software. Intel unveiled plenty of information regarding their upcoming CPU cores, process technology improvements, the different architecture variants for the Xe GPUs, memory advancements with Optane Persistent Memory and their efforts on the software front through the OneAPI program. Here’s a brief overview of everything that went down at Intel Architecture Day 2020.

Process and Packaging

It’s no secret that Intel took on a rather ambitious project with their 10nm and 7nm process nodes and, in doing so, they’ve had to experiment a lot with the transistor process, lithography and material technology that went into each of their upcoming process nodes. Intel stated that they were moving away from the silliness of tacking on additional pluses (14nm++++) each time an improvement was made to the process technology. Instead, they will now use more descriptive terms and let the world know more about the improvements made. SuperFin is the first step in this new “descriptive” direction: Intel’s upcoming 10nm process will now be referred to as Intel 10nm SuperFin.

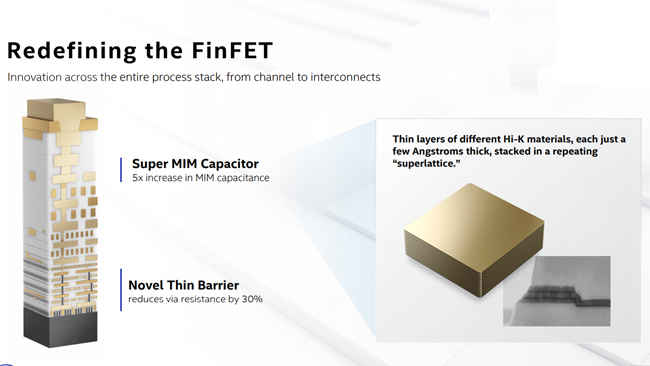

Key improvements include an improved transistor gate pitch to allow higher drive current, an enhanced epitaxial source/drain to reduce resistance and lower strain over time and an improved gate process to help with channel mobility. At a macro level, they’ve introduced the Super MIM Capacitor, which is basically a stacked lattice of thin layers of different Hi-K materials, each just a few angstroms thick. (An angstrom is one hundred-millionth of a centimeter.) Simply by layering different Hi-K materials they build a capacitor, and the stacking helps increase the capacitance of the entire structure. Another improvement is the reduced resistance of the vias within the stack.

All of the above improvements had to do with the individual chips. Zooming out to an even larger scale, there is the challenge of the interconnects that bridge chips together. Intel makes use of several types of interconnects to enable this, the most recent of which is Foveros, which helped make their latest Lakefield CPUs happen. Lakefield interconnects had a bump pitch of between 50 and 25 microns, and their future interconnects are expected to have a bump pitch of less than 10 microns. They’ve also taped out chips using hybrid interconnects which make use of multiple interconnect technologies such as Co-EMIB and ODI (Omni-Directional Interconnect). Each of these interconnects is suitable for a different use case. Some enable the stacking of a smaller package onto a larger package, whereas others handle a larger package being stacked onto a smaller one. All of these improvements will help Intel integrate more chiplets within a smaller footprint.
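To get a feel for why bump pitch matters, the connection density it implies can be estimated with some quick arithmetic. The sketch below assumes bumps laid out on a simple square grid, which is a simplification for illustration and not something Intel specified; the function name is likewise just illustrative.

```python
def bumps_per_mm2(pitch_um: float) -> float:
    """Bumps per square millimetre, assuming a square grid (an assumption)."""
    bumps_per_mm = 1000.0 / pitch_um  # how many bumps fit along 1 mm
    return bumps_per_mm ** 2          # square grid: same count in both directions


# Pitches quoted in the article: 50 and 25 microns today, under 10 in the future.
for pitch in (50, 25, 10):
    print(f"{pitch} micron pitch: about {bumps_per_mm2(pitch):,.0f} bumps per mm^2")
```

Under that assumption, density scales with the inverse square of the pitch: 50 microns works out to 400 bumps per square millimetre, 25 microns to 1,600 and 10 microns to 10,000, so the move to sub-10 micron pitch would be at least a 25x jump over the coarsest Lakefield figure.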

XPU Heterogeneous Architecture and Tiger Lake

The impact of a CPU microarchitecture is dependent on its generality, i.e. how many different applications a particular architecture can execute. Intel has a diverse portfolio of silicon which is spread across CPUs, GPUs, AI accelerators and FPGAs. Each of these adheres to a particular architecture domain: CPUs are scalar, GPUs are vector, AI accelerators are matrix and FPGAs are spatial. These are all defined on the basis of their typical workloads. For example, CPUs are generic, GPUs are highly parallelised, AI accelerators have to handle a lot of matrix math and FPGAs are used for more cutting edge algorithms that the other domains aren’t as good at handling.

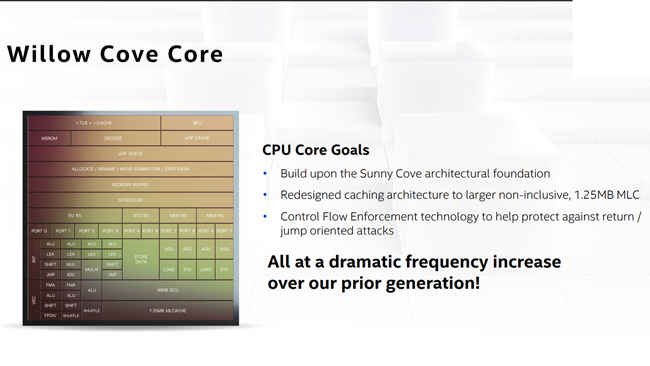

Intel then moved on to stating their goals for Tiger Lake, their upcoming processor family. To summarise, Intel wanted a generational leap in performance with disruptive graphics, scalable AI, increased memory bandwidth and stronger data security. We’ve already covered the packaging and process aspect. Coming to the performance factor, Tiger Lake will be using Willow Cove, which is built upon their Sunny Cove cores that have been redesigned to have a larger non-inclusive cache, along with security improvements to protect against return or jump oriented attacks. Intel states that they have achieved large frequency gains while maintaining power efficiency. The net result is that they have a greater dynamic V-F curve to play with.

For graphics, Intel had already demonstrated the “Horseshoe Bend” concept earlier this year at CES 2020 which had Xe Graphics. Since Xe is a broad architecture, Intel has different architecture variants for each focus area. With CPUs, they’re using Xe-LP architecture and in Tiger Lake, we can expect up to 96 Execution Units with 3.8 MB of L3 cache.

Tiger Lake will support LP4x-4267 and DDR4-3200 memory, as well as up to LP5-5400 memory once commercial kits become available. Overall, the total memory bandwidth would be about 86 GB/s and Tiger Lake will have two memory controllers on the package.
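The 86 GB/s figure lines up with a back-of-the-envelope peak bandwidth calculation for LP5-5400. The sketch below assumes a 128-bit total memory bus across the two controllers; that width is our assumption for illustration and was not stated in the presentation as reported here.

```python
def peak_bandwidth_gbs(transfers_mts: int, bus_width_bits: int = 128) -> float:
    """Theoretical peak bandwidth in GB/s.

    transfers_mts: data rate in mega-transfers per second (e.g. 5400 for LP5-5400)
    bus_width_bits: total bus width; 128 bits is an assumption, not a quoted spec
    """
    bytes_per_transfer = bus_width_bits / 8
    return transfers_mts * bytes_per_transfer / 1000  # MB/s -> GB/s


# Memory speeds mentioned in the article.
for name, rate in (("DDR4-3200", 3200), ("LP4x-4267", 4267), ("LP5-5400", 5400)):
    print(f"{name}: about {peak_bandwidth_gbs(rate):.1f} GB/s peak")
```

With that bus width, LP5-5400 comes out at 86.4 GB/s, which matches the "about 86 GB/s" quoted above. These are theoretical peaks; sustained bandwidth in real workloads will be lower.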

Since Willow Cove builds upon Sunny Cove, we have AI capabilities built in as well. Intel calls this the Gaussian and Neural Accelerator (GNA 2.0). The key improvement here is that it is optimised for low-power neural inference and also consumes fewer CPU compute resources.

Intel Xe GPU

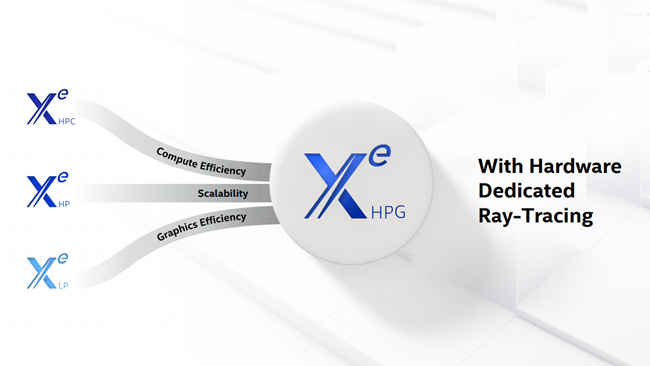

We first heard of Xe in the context of Ponte Vecchio, Intel’s enterprise-grade GPU for data centres. As leaks around a desktop variant started surfacing, we were made aware of Xe being used for consumer graphics as well. And at CES 2020, we saw the first prototypes of thin and light notebooks with Intel Xe graphics. Basically, Intel has taken Xe and branched it into variants which are suited for data centres, accelerators, enthusiast gamers and for integrated graphics. The variant for integrated graphics is XeLP whereas the one for enthusiasts is termed XeHPG. Xe is technically Intel’s Gen12 graphics engine.

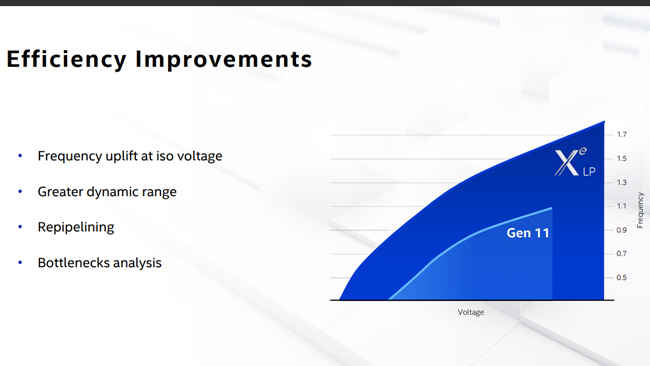

For XeLP, Intel set out with a performance target of 1.5x. Compared to Gen11 on Ice Lake, which had a maximum of 64 Execution Units (EUs), Xe will have up to 96 EUs, and the new SuperFin transistor will help extract large frequency gains while retaining power efficiency. So in terms of pure EU count, Intel has gone 1.5x over Gen11. The Gen11 IGP within the Ice Lake 1065G7 can scale up to 1.1 GHz, whereas the V-F graph showcased by Intel shows that XeLP can operate over a larger voltage range and reach a max frequency in excess of 1.7 GHz. Overall, Intel states that XeLP will feature 2x the performance of Gen11 graphics.
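The EU and frequency figures above can be combined into a rough upper bound on throughput scaling. This is a naive sketch that assumes performance scales linearly with both EU count and clock speed, which real workloads rarely do; the 1.7 GHz value is also only the lower bound Intel showed, and the function name is ours.

```python
# Figures quoted in the article.
GEN11_EUS, XELP_EUS = 64, 96
GEN11_MAX_GHZ, XELP_MAX_GHZ = 1.1, 1.7  # Xe-LP figure is "in excess of" 1.7 GHz


def naive_scaling(eu_ratio: float, freq_ratio: float) -> float:
    """Ideal throughput ratio if performance scaled linearly (an assumption)."""
    return eu_ratio * freq_ratio


eu_ratio = XELP_EUS / GEN11_EUS
freq_ratio = XELP_MAX_GHZ / GEN11_MAX_GHZ

print(f"EU count ratio:  {eu_ratio:.2f}x")
print(f"Frequency ratio: {freq_ratio:.2f}x")
print(f"Naive combined:  {naive_scaling(eu_ratio, freq_ratio):.2f}x")
```

The naive product lands a little above 2.3x, so Intel's stated 2x figure is plausible once memory bandwidth limits, power budgets and other real-world overheads are taken into account.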

Xe is a ground-up re-architected graphics system, so the EU itself features quite a lot of differences as well. In Gen11, Intel had two four-wide ALUs, one for FP/INT and one for FP/EM workloads, for a total of 8 ALUs operating on different workloads. In XeLP, Intel is throwing in two additional ALUs and the configuration has been changed so that eight ALUs are used for FP/INT and two for FP/EM. Intel is rearranging the innards of the EUs to adapt to changing workloads and to the fact that some of their extensions have become more efficient at handling certain workloads. XeLP effectively has 2x the INT math capabilities thanks to the doubling of the FP/INT ALUs from 4 to 8. Also, the FP/EM ALUs now operate independently from the FP/INT ALUs so that the FP/INT ALUs don’t have to idle while waiting for the FP/EM ALUs to finish their workload. This has increased the control logic required to handle the FP/EM ALUs, but the overall impact of this trade-off is a more efficient graphics pipeline.
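The change in ALU layout is easier to see side by side. The snippet below simply encodes the per-EU ALU counts described above; the class and field names are our own illustrative choices, not Intel terminology.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class EUConfig:
    """Per-EU ALU counts as described in the article (names are illustrative)."""
    name: str
    fp_int_alus: int  # ALUs handling floating point and integer work
    fp_em_alus: int   # ALUs handling floating point and extended math work

    @property
    def total_alus(self) -> int:
        return self.fp_int_alus + self.fp_em_alus


GEN11 = EUConfig("Gen11", fp_int_alus=4, fp_em_alus=4)
XE_LP = EUConfig("Xe-LP", fp_int_alus=8, fp_em_alus=2)

for cfg in (GEN11, XE_LP):
    print(f"{cfg.name}: {cfg.fp_int_alus} FP/INT + {cfg.fp_em_alus} FP/EM "
          f"= {cfg.total_alus} ALUs per EU")

print(f"FP/INT ratio: {XE_LP.fp_int_alus / GEN11.fp_int_alus:.1f}x")
```

The totals go from 8 to 10 ALUs per EU, but the more telling number is the FP/INT ratio of 2x, paid for by halving the FP/EM ALUs.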

Coming to the memory subsystem, Xe-LP now has a new L1 cache that’s independent of what the rest of the CPU uses and the L3 cache has been bumped up to 16 MB. Another improvement is that the compression codecs are now extended to the media and display interfaces. Intel then showcased gaming performance on Xe-LP with games such as Battlefield V, PUBG and DOOM Eternal.

Lastly, Intel unveiled Xe-HPG, a variant of Xe for High Performance Gaming. Xe-HPG will also have support for Hardware-Accelerated Ray Tracing. Intel did not mention if Xe-HPG will be used to build discrete graphics cards to compete with the likes of NVIDIA and AMD. Considering that Xe-LP is already focused on integrated graphics, we could easily assume that discrete graphics are on the way. Intel stated that Xe-LP will be coming out in 2020 and the rest of the Xe family including the HPC, HP and HPG variants will be out in 2021.

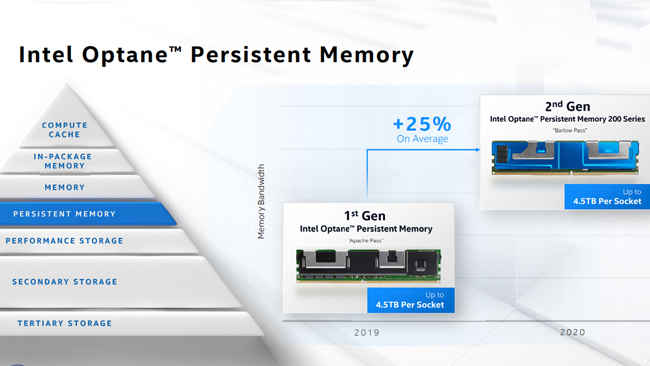

Second Gen 3D-XPoint Optane Memory and SSDs

Intel had unveiled their first gen Optane DC Persistent Memory Modules (DCPMM) in 2017 and they were a massive leap in memory capacity for data centres, enough to add a new storage tier in the memory hierarchy. At Architecture Day 2020, Intel unveiled the next gen of Optane DCPMM in the form of Barlow Pass and, for SSDs, they have Alder Stream. With the second gen Optane memory, Intel is opting for a 4-layer stack which essentially doubles density along the vertical axis. Aside from density gains, Intel is also equipping these new Optane devices with improved memory controllers that can support PCIe 4.0. Barlow Pass claims a 25% increment in performance over the first gen Apache Pass modules.

The new SSDs will be made available soon whereas the Barlow Pass DCPMM modules have already hit the market.

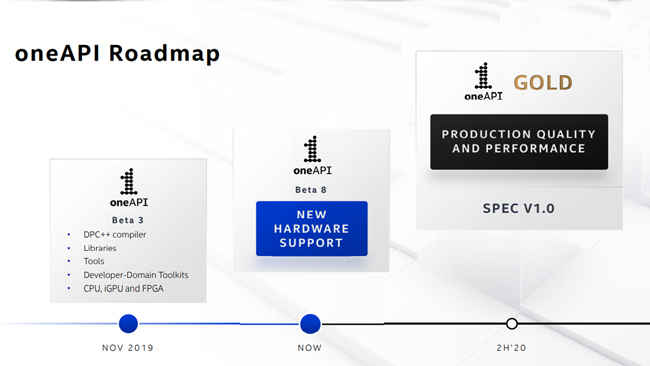

Intel OneAPI goes Gold

OneAPI, Intel’s unified programming model, which was unveiled at Architecture Day 2018, is now set to go gold in the second half of 2020. OneAPI is a comprehensive stack of compilers, libraries, migration tools and analysis software that’s designed to run across the length and breadth of Intel’s hardware portfolio, hence “Unified”.

This will be the first stable release of OneAPI (Version OneAPI v1.0) and will offer production quality and performance.

Mithun Mohandas

Mithun Mohandas is an Indian technology journalist with 14 years of experience covering consumer technology. He is currently employed at Digit in the capacity of a Managing Editor. Mithun has a background in Computer Engineering and was an active member of the IEEE during his college days. He has a penchant for digging deep into unravelling what makes a device tick. If there's a transistor in it, Mithun's probably going to rip it apart till he finds it. At Digit, he covers processors, graphics cards, storage media, displays and networking devices aside from anything developer related. As an avid PC gamer, he prefers RTS and FPS titles, and can be quite competitive in a race to the finish line. He only gets consoles for the exclusives. He can be seen playing Valorant, World of Tanks, HITMAN and the occasional Age of Empires or being the voice behind hundreds of Digit videos.