What is a Time of Flight camera sensor and what does it do?

Smartphones are starting to ship with 3D Time of Flight (ToF) sensors, and there's a lot of hype around them. Here's how they work and how they could make smartphone photography better.

Over the last few months, we’ve been hearing a lot about something called a Time of Flight (or ToF for short) sensor in smartphone cameras. There’s a lot of hype around this new technology, and with it come some misconceptions. In this story, we are going to explain what a ToF sensor is, what it does and, most importantly, what it does NOT do. It is important not to develop unrealistic expectations, especially when there’s a lot of hype being built around something. So, without further ado, let’s understand what Time of Flight sensors are all about.

The Concept

A Time of Flight sensor works on the principle of measuring the time a light signal takes to travel to a subject and bounce back. The sensor's circuitry converts this round-trip time into depth information. Since nothing travels faster than light, the system can work very quickly, provided the circuitry and algorithms can keep up.
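The underlying arithmetic is simple: the light covers the distance twice (out and back), so the distance to the subject is the speed of light multiplied by the round-trip time, divided by two. Below is a minimal Python sketch, assuming an idealised direct (pulsed) measurement of the round-trip time; the function name `distance_from_round_trip` is hypothetical and not taken from any real sensor's API.

```python
# Speed of light in a vacuum, in metres per second (exact by definition).
SPEED_OF_LIGHT = 299_792_458.0


def distance_from_round_trip(round_trip_s: float) -> float:
    """Return the subject distance in metres for a measured round-trip time.

    Assumes an idealised direct time-of-flight measurement: the light
    travels to the subject and back, so the one-way distance is half
    of the total path length.
    """
    if round_trip_s < 0:
        raise ValueError("round-trip time cannot be negative")
    return SPEED_OF_LIGHT * round_trip_s / 2.0


if __name__ == "__main__":
    # A subject roughly 1.5 m away returns the light in about 10 nanoseconds.
    print(f"{distance_from_round_trip(10e-9):.3f} m")
```

The tiny time scale is the catch: resolving 1 cm of depth means resolving about 67 picoseconds of round-trip time, which is why the circuitry matters as much as the light source.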

How Does it Work

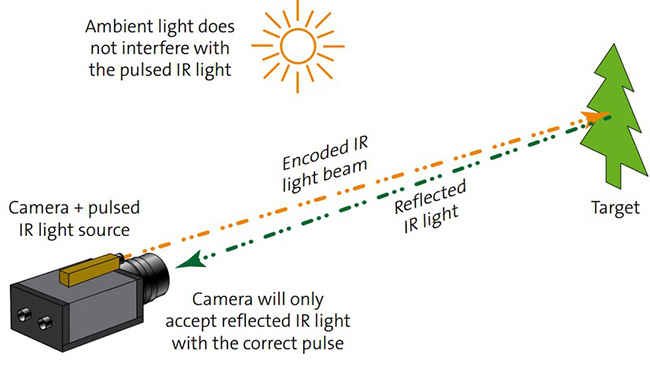

The Time of Flight sensor comprises a light source, usually a solid-state laser or an LED. The emitted light is in the near-infrared range (roughly 850 nm), so it is not visible to the naked eye. The emitted light bounces back from the subject, but the sensor also receives ambient light, and natural light contains infrared as well. To keep the two signals from getting mixed up, the emitted light is either pulsed or continuously modulated, typically with a sinusoid or square wave. Square-wave modulation is more common because it is easy to generate with digital circuitry. Each pixel on the sensor measures the returning light (its delay, or its phase shift relative to the emitted signal) to work out the distance, and voila! You get accurate distance information for every pixel.

Image Courtesy: Stemmer Imaging
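For the continuous-wave variant, the sensor measures the phase shift of the modulated light rather than timing a single pulse. A common textbook way to recover that phase is to sample the returning signal at four phase offsets (0, 90, 180 and 270 degrees). The sketch below assumes an idealised sinusoidal model with no noise or multipath reflections (real sensors often use square waves, as noted above), and the function names are hypothetical, not from any real sensor's API. It also shows why ambient light does not corrupt the result: subtracting opposite samples cancels the constant offset.

```python
import math

SPEED_OF_LIGHT = 299_792_458.0  # metres per second


def simulate_samples(distance_m, mod_freq_hz, amplitude, ambient):
    """Simulate the four correlation samples a single pixel might record.

    Hypothetical idealised model: the reflected signal is correlated with
    the reference at offsets of 0, 90, 180 and 270 degrees, and ambient
    light adds the same constant offset to every sample.
    """
    phase = 4.0 * math.pi * mod_freq_hz * distance_m / SPEED_OF_LIGHT
    return [ambient + amplitude * math.cos(phase - k * math.pi / 2) for k in range(4)]


def distance_from_samples(samples, mod_freq_hz):
    """Recover distance in metres from four phase-stepped samples."""
    c0, c1, c2, c3 = samples
    # c1 - c3 = 2A*sin(phase) and c0 - c2 = 2A*cos(phase); the ambient term cancels.
    phase = math.atan2(c1 - c3, c0 - c2) % (2.0 * math.pi)
    return SPEED_OF_LIGHT * phase / (4.0 * math.pi * mod_freq_hz)


def unambiguous_range(mod_freq_hz):
    """Distance at which the phase wraps around and readings start to alias."""
    return SPEED_OF_LIGHT / (2.0 * mod_freq_hz)


if __name__ == "__main__":
    freq = 20e6  # hypothetical 20 MHz modulation frequency
    dim = simulate_samples(2.0, freq, amplitude=50.0, ambient=100.0)
    bright = simulate_samples(2.0, freq, amplitude=50.0, ambient=5000.0)
    print(f"dim room: {distance_from_samples(dim, freq):.2f} m")
    print(f"bright room: {distance_from_samples(bright, freq):.2f} m")
    print(f"unambiguous range: {unambiguous_range(freq):.2f} m")
```

The modulation frequency is a trade-off: a higher frequency gives finer depth resolution but a shorter unambiguous range, which is one reason some sensors combine more than one modulation frequency.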

So What is it for

The Time of Flight sensor is typically used to collect depth information, which, for now, mostly means helping you take better portraits. Current dual-camera systems can also collect depth information, but they have drawbacks. The two cameras combine their data to estimate the distance to the subject, but they can run into problems matching the same objects and features across the two separate frames. In portrait mode shots, this often shows up as details at different distances blending together, for example the background bleeding into the edges of the subject. Time of Flight sensors largely sidestep this problem because they measure depth directly from how long the light takes to return, rather than by matching features between two images.
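To see why feature matching matters, consider the standard depth-from-disparity relation for a rectified two-camera pair: depth equals focal length (in pixels) times the baseline between the cameras, divided by the disparity (how far the same feature shifts between the two images). Because the two cameras on a phone sit very close together, disparities are only a few pixels, so a one-pixel matching error can move the estimated depth dramatically. The sketch below uses hypothetical, phone-like numbers chosen only to illustrate the effect.

```python
def stereo_depth_m(focal_px: float, baseline_m: float, disparity_px: float) -> float:
    """Depth from a rectified stereo pair: Z = f * B / d.

    focal_px: focal length expressed in pixels
    baseline_m: distance between the two camera centres, in metres
    disparity_px: horizontal shift of the same feature between the two images
    """
    if disparity_px <= 0:
        raise ValueError("disparity must be positive for a finite depth")
    return focal_px * baseline_m / disparity_px


if __name__ == "__main__":
    # Hypothetical values: 1000 px focal length, 1 cm between the two cameras.
    f_px, baseline = 1000.0, 0.01
    correct = stereo_depth_m(f_px, baseline, 5.0)     # feature matched correctly
    mismatched = stereo_depth_m(f_px, baseline, 4.0)  # matched one pixel off
    print(f"correct match: {correct:.2f} m, off by one pixel: {mismatched:.2f} m")
```

With these numbers, a single-pixel mismatch moves a subject at 2 m to 2.5 m, the kind of error that shows up as blur in the wrong places in portrait mode. A ToF pixel measures its own distance, so it does not depend on finding the same feature in two images.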

How it benefits you

The primary purpose of the Time of Flight sensor is to capture 3D (depth) information about a scene. While this is good news for portrait mode shots, ToF sensors could have other benefits as well. For starters, they could improve the overall focusing performance of the smartphone, especially in low light. Since the sensor uses its own infrared light to measure the distance to the subject, it could in principle help smartphones focus even in pitch darkness. This could be huge for smartphone photography, especially for those who tend to take photos in clubs, pubs or other dimly lit places.
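Because a ToF sensor reports distance directly, the camera could in principle compute where the lens should sit instead of hunting back and forth for the sharpest image. The sketch below assumes an ideal thin lens (1/f = 1/d_o + 1/d_i) and a hypothetical 4.25 mm focal length; real phone camera modules rely on calibrated actuator data, so this shows only the principle of turning a distance reading into a focus position. The function name `lens_extension_mm` is hypothetical.

```python
def lens_extension_mm(focal_length_mm: float, subject_distance_mm: float) -> float:
    """How far the lens must move from its infinity-focus position.

    Thin-lens model (an assumption): 1/f = 1/d_o + 1/d_i, so
    d_i = f * d_o / (d_o - f), and the extension beyond infinity focus
    is d_i - f = f**2 / (d_o - f).
    """
    if subject_distance_mm <= focal_length_mm:
        raise ValueError("subject must be farther away than one focal length")
    return focal_length_mm ** 2 / (subject_distance_mm - focal_length_mm)


if __name__ == "__main__":
    # Hypothetical 4.25 mm phone lens; distances as a ToF sensor might report them.
    for distance_mm in (300.0, 1000.0, 3000.0):
        shift_um = lens_extension_mm(4.25, distance_mm) * 1000.0
        print(f"subject at {distance_mm / 1000:.1f} m -> move lens {shift_um:.1f} um")
```

The required movements are tiny (tens of micrometres), and they shrink quickly as the subject moves farther away, which is why close-up subjects are the hardest to focus on accurately.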

How is it different or better than what we already have

Today’s focusing systems are very reliable for regular photos, but where they consistently falter is in low light. The implementation of ToF should go a long way toward fixing that. We have also seen laser AF systems in smartphones, but they don’t do what a full ToF sensor does: they typically measure distance at a single point or a few zones, which helps focusing but does not produce a depth map of the whole scene. Structured-light systems take another approach, projecting a fine pattern onto the scene; the camera then reads the pattern and the distortions within it to work out depth. This is fine when you’re dealing with stationary subjects, but systems that need multiple frames to extract depth information struggle to keep up with movement. Time of Flight sensors avoid this, since depth information can be extracted from a single, low-resolution frame.

Should you be excited

Short answer, yes. However, ToF sensors are only now making their way into smartphones, and we don’t really know what kind of hardware and algorithms they’re being paired with. It is still too soon to herald them as the next big thing, but the tech does hold a lot of promise. The Honor View 20, which launched tonight, does have a ToF sensor, and we can’t wait to see how the company has chosen to implement it. Between ToF sensors, pixel binning and 48-megapixel sensors, smartphone photography is finally seeing a major upgrade.

Swapnil Mathur

Swapnil was Digit's resident camera nerd, (un)official product photographer and the Reviews Editor. Swapnil has moved on to newer challenges.