Inside the OnePlus Camera Test Lab: How the company tuned the OnePlus 7 and OnePlus 7 Pro cameras

OnePlus recently reached out to us with an opportunity that was too good to refuse. The company invited us to visit its camera lab in Taipei and offered us unfettered access to the test lab, its testing methodology and some of the engineers, who walked us through everything. The insights we received during this visit shed light on what goes on behind the scenes in tweaking multiple aspects of a camera’s performance. Here’s what we learned.

Before we get into the specifics of the testing, the OnePlus Camera Lab in Taipei is one of two outfits and is responsible for tuning the camera algorithms using data generated by standardized testing targets. The team comprises 32 engineers and is headed by Simon Liu, Head of Image Development Department at OnePlus. There is a second team in Shenzhen, China, that does the same work, but using images shot in the real world. Our story revolves around the Taipei facility of OnePlus, and some insights offered by the various engineers working there, including Simon Liu.

How OnePlus Tests their Cameras

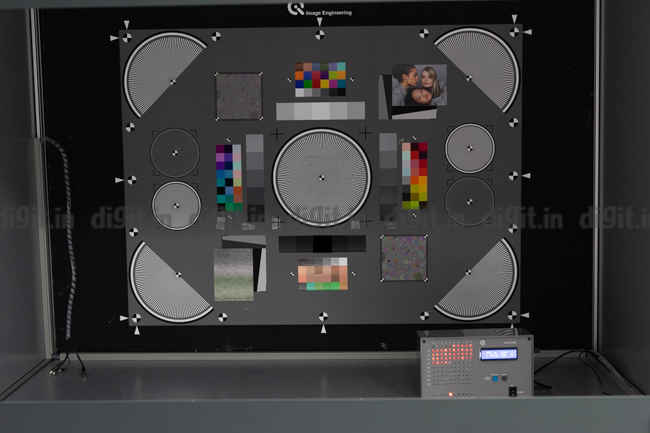

Back in 2017, OnePlus announced a partnership with DxO Mark, but at the launch of the OnePlus 5, they spoke very little of it. The visit to the OnePlus Camera Lab finally clarified what that partnership was all about. Within the walls of this test lab, we found numerous charts that are used to measure various aspects of an imaging stack’s capabilities. Since 2017, the company has grown to use resources from other test makers as well, including Image Engineering and Imatest. The company has taken tests from all the established players and created its own setup for verifying the quality of the algorithms that determine the character of the camera’s output.

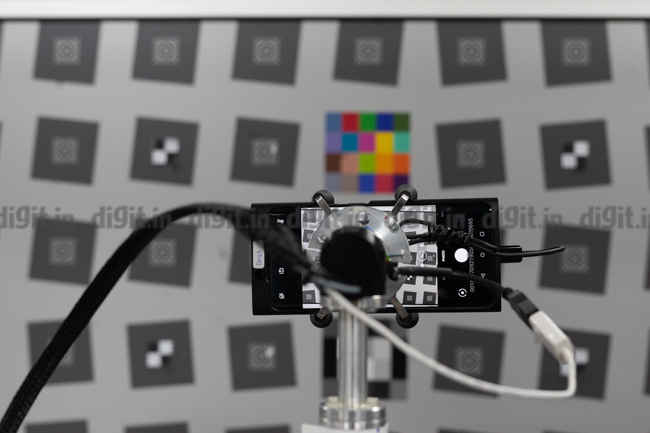

It starts with the automated objective test lab, a room where we find over 20 test charts and a robotic arm. The whole system is automated, eliminating any user error or variance between photos. The system is calibrated in such a way that OnePlus can test any focal length using the same charts and simulate over a thousand illumination levels and multiple lighting conditions.

A robotic arm will lift the camera from its cradle for testing

The software will automatically align the camera with the test target

The robotic arm can be configured to shoot at all three focal lengths with the push of a button

The automated setup eliminates any human errors or variances between test shots

On the left of the test charts are three dummy heads made from very high-quality gel to simulate skin texture. All three have distinct skin tones, in an attempt to take into account more colours than just white. All three dummies also wear wigs. What we found missing was any male head with facial hair, which is surprising since that would also make for a great way to collect data points when it comes to texture reproduction.

The gel-based heads that help OnePlus calibrate their cameras to various skin tones

Image data from the automated objective test lab is fed through one of several image analysis software packages, allowing the OnePlus engineers to pinpoint any optical deficiencies (such as varying levels of sharpness across the frame) or colour oddities (such as white balance shift under a specific lighting condition).

With the OnePlus 7 Pro, Simon Liu says that he is most proud of the HDR algorithm the company has developed. To test this algorithm, the company has a test box divided into two distinct sections. In each section is printed a test scene, with varying colour saturation, contrast, highlight information and shadow information. Each of the two sections is lit independently, allowing OnePlus engineers to create a lighting difference of up to 22 stops. This is a very wide difference and allows OnePlus engineers to use software to squeeze as much as possible out of the sensor.
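To put that 22-stop figure in perspective, here is a minimal sketch of the arithmetic. It relies only on the standard photographic convention that each stop is a doubling of light; the function name is our own and is not something OnePlus shared with us.

```python
def stops_to_contrast_ratio(stops: float) -> float:
    """Each photographic stop doubles the light, so N stops span a 2**N ratio."""
    return 2.0 ** stops


# 22 stops is the maximum lighting difference quoted for the HDR test box.
ratio = stops_to_contrast_ratio(22)
print(f"22 stops is roughly a {ratio:,.0f}:1 brightness ratio")
```

In other words, the brighter section of the box can be lit about four million times more intensely than the darker one.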

One of the setups to evaluate colour reproduction, dynamic range, and sharpness

Data from the above two test labs is fed into a computer for analysis, and once every aspect of image quality (colour accuracy, clarity or sharpness, and dynamic range) has been evaluated, the acceptable image set is sent over to another computer dedicated to machine learning. Here, the image parameters are incorporated into the imaging algorithms and subsequently pushed out as part of an OTA update.

After seeing the setup and talking to various imaging engineers, here are some things we learned.

The Secret behind DxO Scores

A lot of the testing equipment in the OnePlus imaging lab had DxO Mark branding on it, possibly from the time when OnePlus and DxO Mark struck a partnership back in 2017. DxO Mark as a company doesn’t only evaluate imaging systems and publish the results; a key part of its business is also selling the testing process to camera and lens manufacturers. What this means is that any company that ties up with DxO to set up a camera test lab will naturally score highly on DxO’s tests. It’s kind of like having the question paper before you enter an exam, yet it’s not cheating. Let me explain.

DxO, over the years, has established a fairly rigorous testing protocol for imaging components. Their tests have been quick to find faults and inconsistencies in performance. Additionally, their tests do tend to offer some level of insight into how that camera would perform in the real world. Therefore, if a smartphone company has used DxO’s setup to prototype and fine-tune their camera, we can definitely be assured of a certain grade of quality. It still, however, means that the scores should be taken with a slight pinch of salt.

HDR is the Star of the Show

We asked Simon Liu a simple question: what aspect of the OnePlus 7 (7 Pro) camera was he most proud of? His rather quick answer was “the HDR algorithm.” He said that the algorithm was a result of the company’s extensive work on creating a lab setup that not only emulates HDR conditions but also incorporates moving targets within the shot. “The reason it's special is because there are many many number of scenes that drop into HDR now. The lighting conditions are very very complex.” Simon further alluded to the point that their counterpart in Shenzhen feeds in plenty of image data from the real world to cover various scenic and lighting conditions, all of it eventually going into training the HDR algorithm. He says that it was an immense amount of work for the team to accomplish.

A OnePlus Image Engineer poring over code for tweaking the HDR algorithm

On the unimpressive Pro Mode

We asked Simon why the Pro Mode on the OnePlus 7 Pro was missing critical features. If you’re wondering what this is about, then know that when shooting in the Pro Mode, you won't get access to the ultra-wide or telephoto lenses. Additionally, the RAW file captured is a 12-megapixel file instead of being natively 48MP. Simon Liu says that “based on the numbers we see, there are very very few people who actually use Pro Mode. Allowing the usage of the other two lenses is not that difficult, it can be done, but it just needs some time on it.” He further adds that “based on the time we had, we made the choice to put the resources on scene coverage instead of the Pro Mode.” When asked what it would take to make the Pro Mode a fully loaded feature, Simon simply said: “a team 10 times bigger.”

On the 3X Zoom discrepancy

We finally asked Simon why there was a disagreement over the 3x optical zoom. He clarified that the zoom factor comes down to roughly 2.86, which is very close to the 3x number. Simon explained the discrepancy by saying that the company was aiming for a 3x field of view and, instead of resorting to a purely optical setup, which would have added thickness to the phone, they went with capturing the image from a portion of the sensor. With the lens’s native focal length combined with an 8MP capture area, the phone does deliver a true 3x field of view. He says this is not digital zoom in any way and we are inclined to agree. When asked why the EXIF data didn’t say the resulting image had a 3x field of view, he simply said “the EXIF data is what we program it to be,” alluding to the fact that it could simply be a bug. Simon did, however, agree that according to conventional understanding, the way OnePlus achieves 3x zoom is not purely through optics, and hence, “optical zoom” may not be the most accurate way to refer to it.
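The arithmetic behind a sensor crop can be sketched roughly as follows. Cropping to a smaller pixel area narrows the field of view by the square root of the pixel-count ratio, provided the aspect ratio stays the same. Note that the 13MP full-sensor resolution used below is our own illustrative assumption and was not stated by Simon; only the 8MP capture area and the roughly 2.86x figure come from our conversation. The function and constant names are hypothetical too.

```python
import math


def crop_zoom_factor(full_megapixels: float, cropped_megapixels: float) -> float:
    """Linear magnification gained by cropping a sensor (same aspect ratio assumed)."""
    if full_megapixels <= 0 or cropped_megapixels <= 0:
        raise ValueError("pixel counts must be positive")
    return math.sqrt(full_megapixels / cropped_megapixels)


ASSUMED_SENSOR_MP = 13.0  # ASSUMPTION: not stated in the article
CROP_MP = 8.0             # capture area mentioned by Simon
EFFECTIVE_ZOOM = 2.86     # approximate zoom factor quoted by Simon

crop = crop_zoom_factor(ASSUMED_SENSOR_MP, CROP_MP)
implied_optical_zoom = EFFECTIVE_ZOOM / crop

print(f"Crop contributes about {crop:.2f}x")
print(f"Implied zoom from the lens alone: about {implied_optical_zoom:.2f}x")
```

Under that assumption, the lens alone would account for a little over 2x, with the crop supplying the rest. A different sensor resolution would shift the split, but not the principle.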

Endurance testing the OnePlus 7 Pro's camera hardware

The Takeaway

Visiting the OnePlus Camera Lab, it is nice to see the company invest so heavily in fine-tuning not just their hardware, but also the software. The equipment they are using is from three companies that are known for being at the bleeding edge of image analysis tools, which should give many of us the peace of mind that the company isn’t shortchanging the public. One thing that Simon did say was that the camera team relies heavily on the feedback its community gives through the forums. So if you want the Pro Mode to actually be ‘Pro’ enough, the forum is where you ask for it. We have obviously given our feedback to the engineering team and are looking forward to seeing it implemented in the coming months.

Swapnil Mathur

Swapnil was Digit's resident camera nerd, (un)official product photographer and the Reviews Editor. Swapnil has moved on to newer challenges. For any communication related to his stories, please mail us using the email id given here.