Nvidia-Intel partnership explained: USD 5 billion investment, custom x86 with NVLink, and a shared roadmap

Nvidia will invest 5 billion dollars into Intel and the firms will co-develop custom data centre CPUs and PC SoCs.

The plan brings NVLink to Intel’s x86 platforms and pairs Intel CPUs with Nvidia RTX GPU chiplets for new classes of AI PCs.

Executives framed the move as a multi-generation roadmap with large addressable markets in data centres and notebooks.

Nvidia and Intel have announced a collaboration that, on paper, reorders parts of the semiconductor landscape. The companies will co-develop multiple generations of custom products for both data centres and personal computers, with a technical focus on tightly coupling Intel’s x86 CPUs to Nvidia’s accelerated computing stack via NVLink. Nvidia will also invest 5 billion dollars in Intel at a stated purchase price of 23.28 dollars per share, subject to customary approvals.


If that sounds like a historic about-face, the language from both chief executives matches the scale of the move. Jensen Huang called it a fusion of two world-class platforms that lays the foundation for the next era of computing, while Intel’s Lip-Bu Tan highlighted x86’s foundational role and Intel’s packaging and manufacturing strengths as complements to Nvidia’s AI leadership.
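For a sense of scale, the stated terms (5 billion dollars at 23.28 dollars per share) imply a purchase of roughly 215 million Intel shares. The short Python sketch below works through that arithmetic. It assumes the whole amount is spent at exactly the stated price, with no fees or adjustments, and the function name `implied_shares` is purely illustrative. The final share count would depend on the closing terms, and the ownership percentage cannot be derived here because Intel's total share count is not given in this article.

```python
from decimal import Decimal

# Figures stated in the announcement.
INVESTMENT_USD = Decimal("5000000000")
PRICE_PER_SHARE_USD = Decimal("23.28")


def implied_shares(investment: Decimal, price: Decimal) -> int:
    """Whole shares purchasable at a fixed price (any fractional share is dropped).

    Assumption: the full investment is spent at exactly the stated price.
    """
    return int(investment // price)


shares = implied_shares(INVESTMENT_USD, PRICE_PER_SHARE_USD)
print(f"Implied shares: about {shares / 1_000_000:.1f} million")
```

Decimal arithmetic is used instead of floats so that the division by a two-decimal price does not pick up binary rounding error.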

What is being built by NVIDIA and Intel?

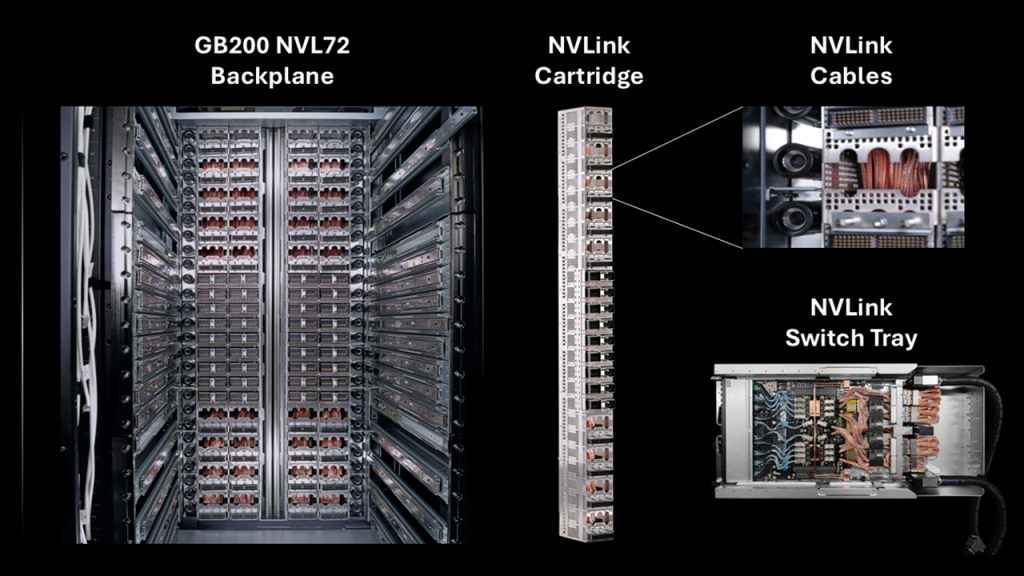

Two product tracks define the deal. First, Intel will build Nvidia-custom x86 server CPUs that Nvidia will integrate into its own AI infrastructure platforms. The intent is to place these CPUs directly on Nvidia’s NVLink fabric, creating rack-scale AI systems that behave more like single, enormous accelerators than traditional clusters.

Second, on the client side, Intel will produce x86 system-on-chips that integrate Nvidia RTX GPU chiplets, connected over NVLink to present, in effect, a virtual giant SoC inside thin-and-light form factors. This targets the large swathe of laptops where integrated graphics has dominated for reasons of size, cost, and battery life, a segment that Nvidia’s discrete GPUs have not fully addressed.

NVIDIA-Intel partnership details

The obvious question is how big the prize is. Nvidia and Intel pointed to two very large fronts. On servers, Nvidia talked about bringing x86 into its NVLink 72 rack-scale architecture, historically tied to ARM-based CPUs in its own platforms. On PCs, the companies see an addressable market of roughly 150 million notebooks per year, with a new class of integrated RTX laptops that sits between today’s integrated graphics and high-end dGPU designs.

As for the economics of the two new product lines, Nvidia described a two-sided flow. In data centres, Nvidia becomes a major purchaser of Intel server CPUs, which it will fuse into NVLink superchips and resell as part of its rack-scale nodes. In PCs, Nvidia supplies GPU chiplets that are packaged with Intel CPUs inside x86 SoCs. In both cases, the collaboration opens new revenue for Intel and expands Nvidia’s reach into segments it did not previously serve.

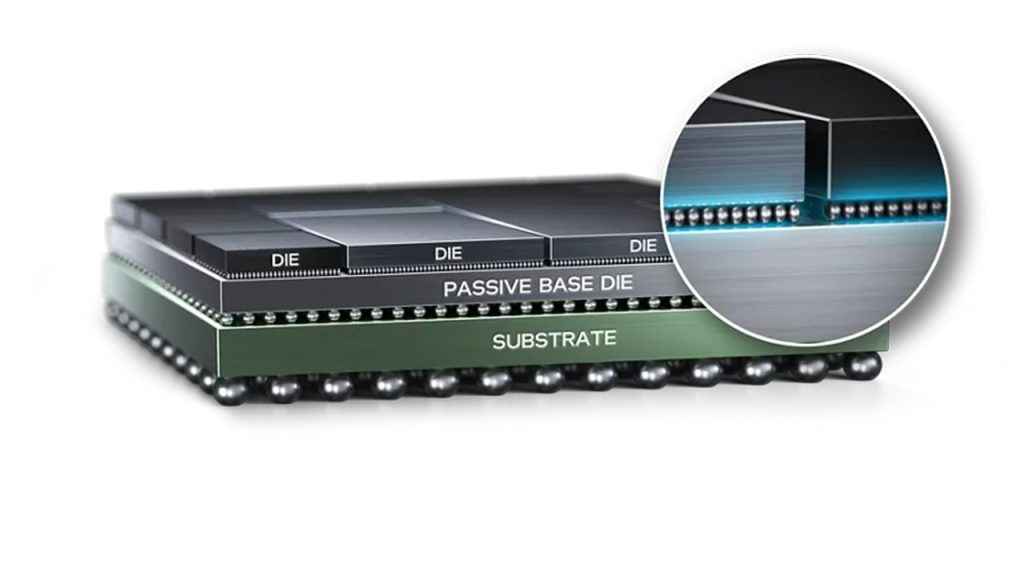

That raises the question of who will manufacture the new products. The executives kept the manufacturing discussion focused on product goals rather than immediate process nodes. They reiterated respect for TSMC and said Intel will continue to qualify its own advanced nodes, while emphasising that Intel’s multi-technology packaging capabilities, cited on the call as Foveros and EMIB, are a key enabler because they allow an Nvidia GPU chiplet fabricated at one foundry to be combined with an Intel CPU from another.

So when will the products ship? While no launch dates were given, the executives said architecture teams have already been working together for about a year across server and PC product lines. That implies substantive design maturity, though the companies framed detailed process and schedule disclosures as coming closer to product readiness.

Nvidia has used ARM designs for some time, and this announcement raises questions about the future of those product lines. The alliance will not replace Nvidia’s current ARM plans. Nvidia reiterated its continued commitment to its ARM roadmap, including next-generation Grace, robotics platforms, and control processors for AI systems. The point, according to Nvidia, is to accelerate any CPU platform with meaningful market reach, not to privilege one instruction set over another.

NVLink across architectures

The linchpin is NVLink as the computing fabric. In Nvidia’s view, the NVLink 72 rack-scale design turns an entire rack into one logical GPU, provided the host CPU is designed to participate in that topology. Until now, this has been available on its Vera ARM CPUs, not on the broader x86 ecosystem that is usually limited to PCIe. By collaborating with Intel on custom x86, Nvidia aims to remove that ceiling for scale-up systems.

On the PC side, NVLink becomes the interconnect that unifies an Intel CPU and an RTX GPU chiplet into a single, tightly coupled system. The appeal here is performance per watt and form factor: an SoC that behaves more like a co-designed CPU-GPU than a bolted-on discrete part. Nvidia and Intel explicitly positioned this for the integrated graphics tier, which has been underserved by high-end GPUs.

One practical reason this alliance is feasible is the maturity of heterogeneous packaging. The call highlighted Intel’s ability to mix and match dies from different processes and even different foundries, letting an Nvidia GPU chiplet sit alongside an Intel CPU inside the same package. That reduces latency, increases bandwidth, and gives both firms freedom to choose the best process for each die. Intel’s EMIB and Foveros packaging technologies were presented as central to rapid iteration.

The strategy in plain terms

Strip away the slogans and the strategy reads cleanly. In data centres, Nvidia gains a way to scale its NVLink supercomputer architecture into the x86 world where enterprises have entrenched investment, while Intel’s CPUs ride on top of Nvidia’s AI platform momentum. In client computing, Intel expands the value of x86 SoCs with RTX-class graphics, and Nvidia gets to participate in a vast integrated graphics segment it previously could not reach. The 5 billion dollar equity ticket aligns incentives and signals confidence that the combined roadmap can move revenue for both companies.

For hyperscalers and large enterprises, the promise is a rack that behaves like one giant accelerator, with x86 control planes that fit existing software and procurement habits. If the execution matches the pitch, this should reduce integration friction for customers that prefer x86 for orchestration but want the throughput and memory semantics of NVLink-attached GPUs.

For PC makers, the new x86 RTX SoCs could enable thin-and-light designs that deliver AI workflows, creative workloads, and gaming at levels previously reserved for machines with discrete GPUs. That does not displace premium dGPU notebooks; it fills the space below them with more capable integrated platforms.

What does this mean for AMD?

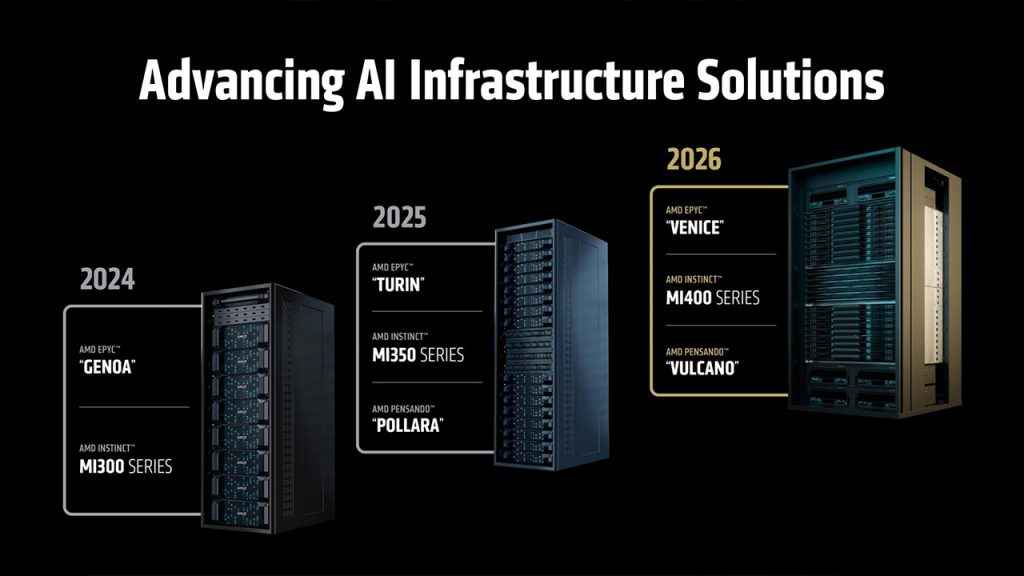

This move puts pressure on several fronts. AMD has been pushing its own accelerated platforms for years, spanning EPYC in data centres and Ryzen APUs on the client side. An Intel-Nvidia pairing that makes x86 a first-class citizen on NVLink would challenge AMD not just on GPU capability, which is Nvidia’s home turf, but on the CPU attach rate inside AI racks that speak Nvidia’s native fabric. On the PC side, an Intel SoC with RTX chiplets places a new ceiling on integrated graphics performance, and invites a response from both AMD’s APU roadmap and any ARM-based entrants at the high end.

At the same time, the companies were careful to avoid overcommitting on foundry. TSMC remains central to Nvidia’s GPU production, while Intel is progressing its own nodes. The thread that ties this together is packaging: a way to bridge foundry choices without forcing either party’s hand.

Risks and open questions

There are several. The first is sheer execution complexity. Multi-die, multi-foundry packages are powerful, but they require meticulous co-design of power, thermals, coherency, and software stacks. The second is market timing. The firms indicated that architecture work has been under way for roughly a year, but detailed product schedules will only emerge as designs near readiness. The third is that Nvidia is not abandoning ARM. That multiplies, rather than simplifies, its CPU strategy, which could be a strength if managed well and a distraction if not.

Regulatory approval is also a factor. The equity investment will pass through standard processes, and the companies signposted forward-looking risks at the top of the call. None of this is unusual, but it is worth noting given the geopolitical sensitivities around advanced computing supply chains.

Three milestones are worth watching. First, a technical briefing that details how Intel’s custom x86 CPU for NVLink differs from conventional PCIe-attached server CPUs, especially with regard to memory semantics, coherency domains, and NVLink switch disaggregation. Second, a client platform reveal that shows performance-per-watt and battery life for the first x86 RTX SoC laptops compared with today’s integrated graphics tier. Third, packaging demos that quantify latency and bandwidth gains from the mixed-foundry chiplet approach, and the range of thermals OEMs can realistically support in thin chassis. Each of these will determine whether the collaboration’s promise translates into product-market pull.

A multi-generation product plan

This is a multi-generation product plan tied to a sizeable equity stake, with a clear division of labour: Intel contributes x86 CPUs, process and packaging, and Nvidia brings NVLink, RTX chiplets, and the software stack that currently defines accelerated computing. If the companies hit their engineering marks, the result could be data centre systems that scale more cleanly and client platforms that make integrated AI performance feel far less compromised. The two firms have spent a year laying the architectural groundwork. Now the market waits for silicon.

Mithun Mohandas

Mithun Mohandas is an Indian technology journalist with 14 years of experience covering consumer technology. He is currently employed at Digit in the capacity of a Managing Editor. Mithun has a background in Computer Engineering and was an active member of the IEEE during his college days. He has a penchant for digging deep into unravelling what makes a device tick. If there's a transistor in it, Mithun's probably going to rip it apart till he finds it. At Digit, he covers processors, graphics cards, storage media, displays and networking devices aside from anything developer related. As an avid PC gamer, he prefers RTS and FPS titles, and can be quite competitive in a race to the finish line. He only gets consoles for the exclusives. He can be seen playing Valorant, World of Tanks, HITMAN and the occasional Age of Empires or being the voice behind hundreds of Digit videos.