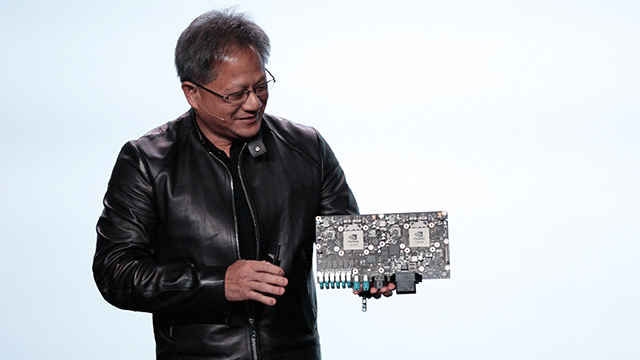

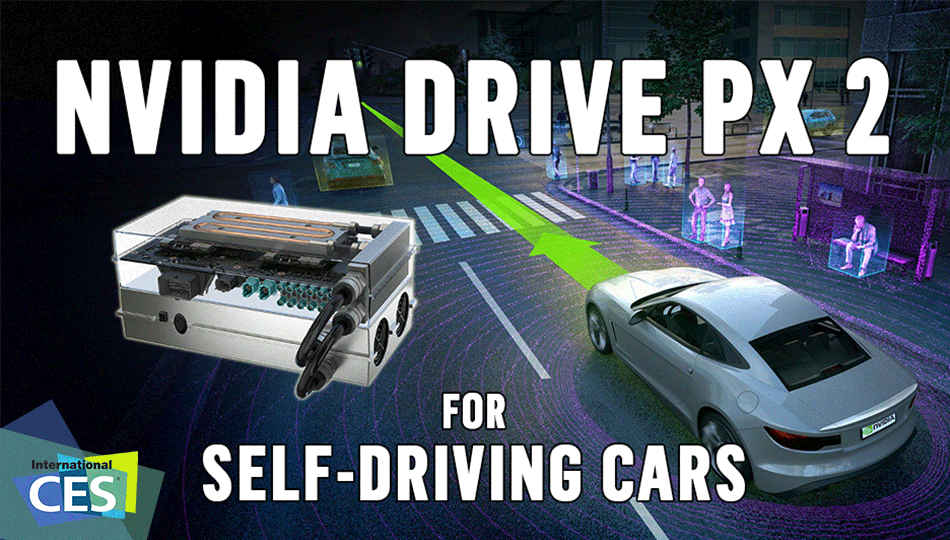

NVIDIA unveils DRIVE PX 2 at CES 2016

Autonomous vehicle technology gets a shot in the arm with the NVIDIA DRIVE PX 2 and NVIDIA DIGITS

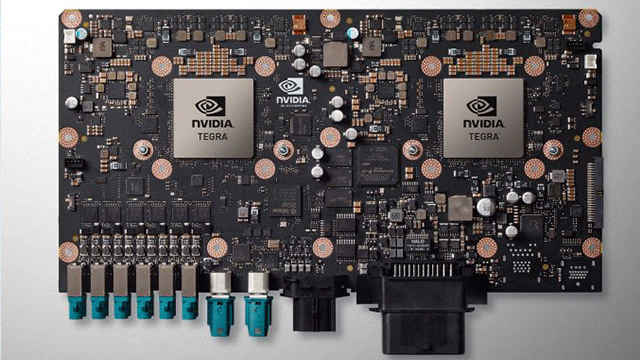

At CES today, NVIDIA unveiled the DRIVE PX 2, which it bills as the world’s most powerful engine for in-vehicle artificial intelligence and the successor to last year’s DRIVE PX. The water-cooled unit packs two Tegra processors along with two Pascal GPUs and delivers up to 24 trillion operations per second while processing input from up to 12 video cameras, plus LIDAR, RADAR and ultrasonic sensors. Along with NVIDIA DIGITS, the DRIVE PX 2 is part of NVIDIA’s end-to-end solution for building self-learning cars.


If NVIDIA’s GPU Technology Conference 2015 had an underlying theme, it was deep learning, and the PX 2 is a significant step up from last year’s PX. The PX can handle the same number of sensors as the PX 2, but thanks to the improved SoCs on the PX 2, the newer iteration boasts 24 trillion operations per second compared with the 2.3 teraflops the PX was capable of. That is a huge jump in the world of computing: roughly an order of magnitude over the previous generation.
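The “order of magnitude” claim can be checked with a quick calculation. This minimal Python sketch simply divides the two headline figures quoted above. Note that they are not strictly like-for-like (the PX figure is quoted in teraflops, the PX 2 figure in trillions of operations per second), so the result is an approximation rather than a benchmark.

```python
import math

# Headline peak figures quoted for each board. They are not like-for-like:
# DRIVE PX is quoted in TFLOPS, DRIVE PX 2 in trillions of operations per second.
DRIVE_PX_PEAK = 2.3    # teraflops
DRIVE_PX2_PEAK = 24.0  # trillion operations per second


def generational_gain(old_peak, new_peak):
    """Return the speed-up ratio and how many orders of magnitude it spans."""
    ratio = new_peak / old_peak
    return ratio, math.log10(ratio)


ratio, orders = generational_gain(DRIVE_PX_PEAK, DRIVE_PX2_PEAK)
print(f"{ratio:.1f}x, {orders:.2f} orders of magnitude")  # prints 10.4x, 1.02 orders of magnitude
```

A base-10 logarithm just above 1 is what “an order of magnitude” means in practice: the new figure is a little more than ten times the old one.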

The PX Auto-Pilot Platform

The first iteration of the PX auto-pilot platform was powered by not one but two Tegra X1 processors. Together, they can process more than 360 megapixels per second with all 12 cameras in operation, each camera capturing 1 megapixel (1280 x 800) at 30 FPS. The PX’s maximum throughput is 1.3 gigapixels per second, so it can take on more redundant cameras or raise the resolution of each camera; the latter seems to be the better option. In addition to the visual data, the PX has to incorporate data from LIDAR, RADAR and ultrasonic sensors. These sensors have traditionally not been compute-heavy, so the bulk of the work lies in converting the visual data into figures that can be used in unison with the remaining sensor data.
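The camera numbers above can be checked with simple arithmetic. The Python sketch below uses only the figures quoted in this article (12 cameras, 1280 x 800 at 30 FPS, a 1.3 gigapixel per second ceiling). It assumes every camera runs at the same resolution and frame rate, and it ignores LIDAR, RADAR and ultrasonic data entirely.

```python
# Figures quoted in the article for the original DRIVE PX.
CAMERAS = 12
WIDTH, HEIGHT = 1280, 800  # ~1 megapixel per camera
FPS = 30
PX_LIMIT = 1.3e9           # quoted ceiling, pixels per second


def pixel_rate(cameras, width, height, fps):
    """Total pixels per second from identical cameras running at the same FPS."""
    return cameras * width * height * fps


rate = pixel_rate(CAMERAS, WIDTH, HEIGHT, FPS)
# How many times the current camera load fits under the ceiling.
headroom = PX_LIMIT / rate
# Per-frame pixel budget if all 12 cameras stay at 30 FPS.
max_pixels_per_camera = PX_LIMIT / (CAMERAS * FPS)

print(f"{rate / 1e6:.2f} MP/s in use, {headroom:.2f}x headroom")
print(f"up to {max_pixels_per_camera / 1e6:.2f} MP per camera at {FPS} FPS")
```

This prints 368.64 MP/s in use with about 3.53x headroom, and a budget of roughly 3.61 MP per camera at 30 FPS, which is why raising per-camera resolution is a realistic use of the spare capacity.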

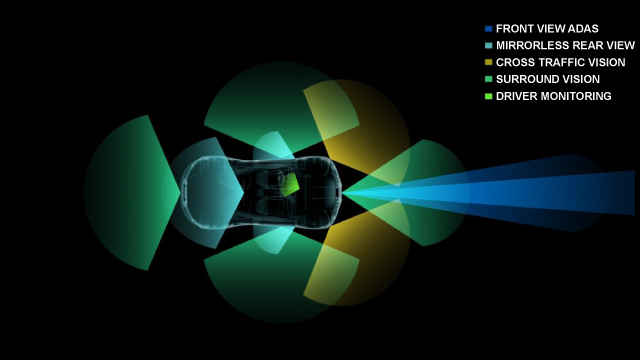

The PX was designed to process visual data from 12 different cameras. While not every car manufacturer will use 12 cameras, this setup covers a wide variety of usage scenarios. Autonomous vehicles currently in development don’t use that many cameras, so the system has been designed on the premise that future vehicles will adopt that many before the platform is retired. NVIDIA has carried the 12-camera setup over to the PX 2, so it is here to stay for a while.

From what we can gather, the platform seems to use the following camera arrangement (which accounts for 11 of the 12 camera inputs):

- 2 Cameras – Front-view Advanced Driver Assistance Systems

- 3 Cameras – Mirrorless rear-view

- 2 Cameras – Observing cross-traffic (traffic from the sides)

- 3 Cameras – Surround vision

- 1 Camera – Monitoring the driver

The PX 2 Auto-Pilot Platform

While not much information has been released about the PX 2 platform, we scoured the internet to gather as much as we could. The main reason for the secrecy seems to be that the PX 2 uses two next-gen Tegra processors codenamed Parker, plus two additional Pascal GPUs, and neither has launched yet. Both the GPUs and the SoCs appear to be built on TSMC’s 16nm FinFET manufacturing process, compared with the 20nm process used for the Maxwell-based Tegra X1.

| | DRIVE PX | DRIVE PX 2 |
|---|---|---|
| SoCs | 2x Tegra X1 | 2x Tegra "Parker" |
| Discrete GPUs | None | 2x Pascal |
| CPU cores | 8x ARM Cortex-A57 + 8x ARM Cortex-A53 | 4x NVIDIA Denver + 8x ARM Cortex-A57 |
| GPU cores | 2x Tegra X1 (Maxwell) | 2x Tegra "Parker" (Pascal) + 2x Pascal |
| FP32 TFLOPS | > 1 | 8 |
| FP16 TFLOPS | > 2 | 16 (derived as 24 - 8) |

Moreover, the upcoming Pascal architecture is touted to use stacked HBM for its video memory, which should bring a large increase in memory bandwidth. Much of Pascal’s computational gain can arguably be attributed to the shift to FinFET and HBM.

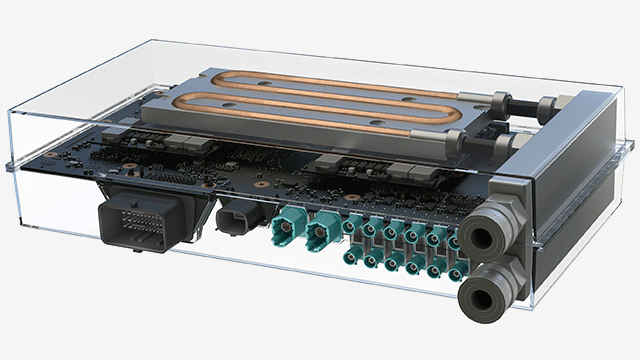

So a roughly tenfold jump in throughput is bound to need a lot of power, right? The PX 2 has a TDP of 250 watts, which puts it in the same league as existing flagship desktop graphics cards like the GeForce GTX 980 Ti. However, desktop graphics cards sit inside well-ventilated cases, while the PX 2 is an in-vehicle device, and we all know how hot car interiors can get. That makes it imperative for the cooling mechanism to bring temperatures down quickly. Lo and behold, the PX 2 features a liquid cooler.

The cooling assembly seems to consist of a metal slab with copper pipes snaking through it. Since only the two MXM modules appear to be cooled here, it seems safe to assume that the two GPUs produce most of the heat. Tegra SoCs have traditionally been designed with a lower TDP in mind, so they should work just fine with air cooling.

Say hello to NVIDIA DriveWorks and NVIDIA DIGITS

NVIDIA isn’t just working on the hardware side of self-driving vehicles. The visual data is processed by NVIDIA’s own set of software tools, libraries and modules, much as the company does in the GPU space: GameWorks is a similar set of software tools and APIs that works very well on NVIDIA cards but not so well on AMD’s. DriveWorks handles sensor calibration, captures and processes sensor data, and produces output that works seamlessly with the rest of NVIDIA’s driverless-vehicle technologies. These include Drive CX (cockpit infotainment platform), Drive Design (digital instrument cluster), the Visual Computing Module, Jetson (automotive development platform) and a few others.

NVIDIA DIGITS is a tool for developing, training and visualising deep neural networks. Neural networks are how systems like these process enormous amounts of data to learn patterns, and that is how they learn to identify different elements in an image, such as people, traffic signals, animals, vehicles, traffic signs and everything else a driver has to pay attention to. DIGITS can run on any NVIDIA GPU-based system, from an everyday desktop to a supercomputer built on GPU clusters. In fact, NVIDIA offers a development machine, the NVIDIA DIGITS DevBox, with four TITAN X GPUs under the hood.
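To make “learning patterns” a little more concrete, here is a toy sketch of the idea behind training: a single artificial neuron (a perceptron, the simplest building block of a neural network) adjusts its weights whenever it misclassifies an example. This is a generic textbook illustration in plain Python, not DIGITS code and not NVIDIA’s method. The two-feature “pedestrian vs. vehicle” data set is hypothetical and invented purely for illustration; real networks trained with tools like DIGITS have millions of weights and learn from labelled images.

```python
# Hypothetical toy data: (height_m, width_m) -> label.
# +1 = "pedestrian", -1 = "vehicle". Values are invented for illustration.
SAMPLES = [
    ((1.7, 0.5), 1),
    ((1.6, 0.6), 1),
    ((1.8, 0.4), 1),
    ((1.5, 1.8), -1),
    ((1.4, 1.9), -1),
    ((1.6, 2.0), -1),
]


def predict(weights, bias, features):
    """Return +1 or -1 depending on which side of the decision line the input falls."""
    activation = bias + sum(w * x for w, x in zip(weights, features))
    return 1 if activation >= 0 else -1


def train_perceptron(samples, epochs=100, lr=0.1):
    """Classic perceptron rule: nudge the weights only when a sample is misclassified."""
    weights = [0.0, 0.0]
    bias = 0.0
    for _ in range(epochs):
        errors = 0
        for features, label in samples:
            if predict(weights, bias, features) != label:
                weights = [w + lr * label * x for w, x in zip(weights, features)]
                bias += lr * label
                errors += 1
        if errors == 0:  # every training sample is now classified correctly
            break
    return weights, bias


weights, bias = train_perceptron(SAMPLES)
print(predict(weights, bias, (1.75, 0.45)))  # a new, pedestrian-like shape; prints 1
```

Because the toy classes are separable by width, the perceptron settles on a decision line after a few passes; deep networks apply the same “adjust weights to reduce mistakes” idea at vastly larger scale.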

Your future autonomous car – powered by NVIDIA

It was at CES 2015 that NVIDIA announced the PX, and since then quite a few car manufacturers have adopted the platform. Audi, BMW, Daimler, Ford and Volvo are some of the manufacturers NVIDIA has roped in. Volvo is launching an autonomous car pilot programme in Sweden called Drive Me, which will consist of 100 Volvo XC90 SUVs, all powered by the PX 2. Cumulatively, NVIDIA claims that over 50 car manufacturers, research institutions, developers and tier 1 suppliers have adopted the PX platform since launch.

What about the competition?

NVIDIA isn’t the only company developing hardware for autonomous vehicles. Google’s self-driving car is the first thing that comes to mind when one thinks about autonomous vehicles, and there are many others, such as QNX, Delphi, Cisco, Continental, Mobileye, Autotalks, Cohda Wireless and Covisint. The other big player is Tesla with its Model X. Tesla’s hardware was made in-house and is backed by a powerful neural network that learns from every Model X, all of which are part of one huge network. For its infotainment system, however, Tesla uses NVIDIA’s CX platform.

Mithun Mohandas

Mithun Mohandas is an Indian technology journalist with 14 years of experience covering consumer technology. He is currently employed at Digit in the capacity of a Managing Editor. Mithun has a background in Computer Engineering and was an active member of the IEEE during his college days. He has a penchant for digging deep to unravel what makes a device tick. If there's a transistor in it, Mithun's probably going to rip it apart till he finds it. At Digit, he covers processors, graphics cards, storage media, displays and networking devices aside from anything developer related. As an avid PC gamer, he prefers RTS and FPS titles, and can be quite competitive in a race to the finish line. He only gets consoles for the exclusives. He can be seen playing Valorant, World of Tanks, HITMAN and the occasional Age of Empires or being the voice behind hundreds of Digit videos.